Can blockchain solve its oracle problem?

The so-called oracle problem may not be intractable, however, despite what Song suggests. “Yes, there is progress,” says Halaburda. “In supply-chain oracles, we have for example sensors with their individual digital signatures. We are learning about how many sensors there need to be, and how to distinguish manipulation from malfunction from multiple readings.” “We are also getting better in writing contracts taking into account these different cases, so that the manipulation is less beneficial,” Halaburda continues. “In DeFi, we also have multiple sources, and techniques to cross-validate. While we are making progress, though, we haven’t gotten to the end of the road yet.” As noted, oracles are critical to the emerging DeFi sector. “In order for DeFi applications to work and provide value to people and organizations around the world, they require information from the real world, like pricing data for derivatives,” Sam Kim, partner at Umbrella Network, a decentralized layer-two oracle solution, tells Magazine, adding:

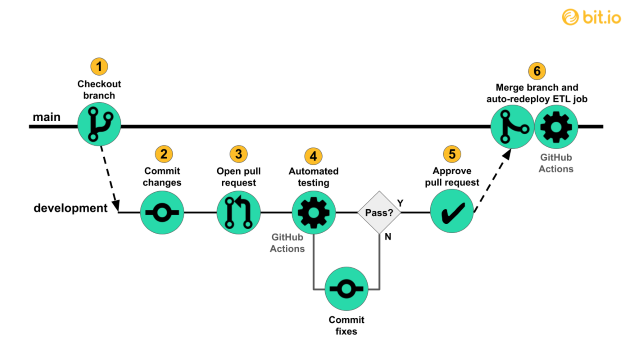
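The cross-validation of multiple sources that Halaburda mentions can be illustrated with a minimal sketch. It is not taken from the article or from any particular oracle network: the function name `aggregate_readings`, the feed names, and the 5% deviation threshold are all assumptions chosen for illustration. The idea is to take the median of the reported values as the consensus and flag any source that strays too far from it.

```python
from statistics import median


def aggregate_readings(readings, max_deviation=0.05):
    """Cross-validate readings from several sources (hypothetical helper).

    readings: dict mapping a source name to its reported value.
    max_deviation: assumed tolerance, as a fraction of the consensus value.
    Returns (consensus, outliers), where outliers maps each flagged
    source to the value it reported.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    # The median is robust to a minority of bad values.
    consensus = median(readings.values())
    outliers = {
        source: value
        for source, value in readings.items()
        if abs(value - consensus) > max_deviation * abs(consensus)
    }
    return consensus, outliers


# Illustrative price feeds; feed_d reports a value far from the rest.
prices = {"feed_a": 100.0, "feed_b": 101.0, "feed_c": 99.5, "feed_d": 140.0}
consensus, outliers = aggregate_readings(prices)
print(consensus, outliers)
```

A check like this only flags a deviating source. It cannot tell manipulation from malfunction, which is the harder distinction the quote describes.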

Putting the trust back in software testing in 2022

Millions of organisations rely on manual processes to check the quality of their software applications, despite a fully manual approach presenting a litany of problems. Firstly, with more than 70% of outages caused by human error, testing software manually still leaves companies highly prone to issues. Secondly, it is exceptionally resource-intensive and requires specialist skills. Given the world is in the midst of an acute digital talent crisis, many businesses lack the personnel to dedicate to manual testing. Compounding this challenge is the intrinsic link between software development and business success. With companies coming under more pressure than ever to release faster and more regularly, the sheer volume of software needing testing has skyrocketed, placing a further burden on resources already stretched to breaking point. Companies should be testing their software applications 24/7 but the resource-heavy nature of manual testing makes this impossible. It is also demotivating to perform repeat tasks, which generally leads to critical errors in the first place.

December 2021 Global Tech Policy Briefing

CISA and the National Security Agency (NSA), in the meantime, offered a second revision to their 5G cybersecurity guidance on December 2. According to CISA’s statement, “Devices and services connected through 5G networks transmit, use, and store an exponentially increasing amount of data. This third installment of the Security Guidance for 5G Cloud Infrastructures four-part series explains how to protect sensitive data from unauthorized access.” The new guidelines run on zero-trust principles and reflect the White House’s ongoing concern with national cybersecurity. ... On December 9, the European Commission proposed a new set of measures to ensure labor rights for people working on digital platforms. The proposal will focus on transparency, enforcement, traceability, and the algorithmic management of what it calls, in splendid Eurocratese, “digital labour platforms.” The number of EU citizens working for digital platforms has grown 500 percent since 2016, reaching 28 million, and will likely hit 43 million by 2025. Of the current 28 million, 59 percent work with clients or colleagues in another country.

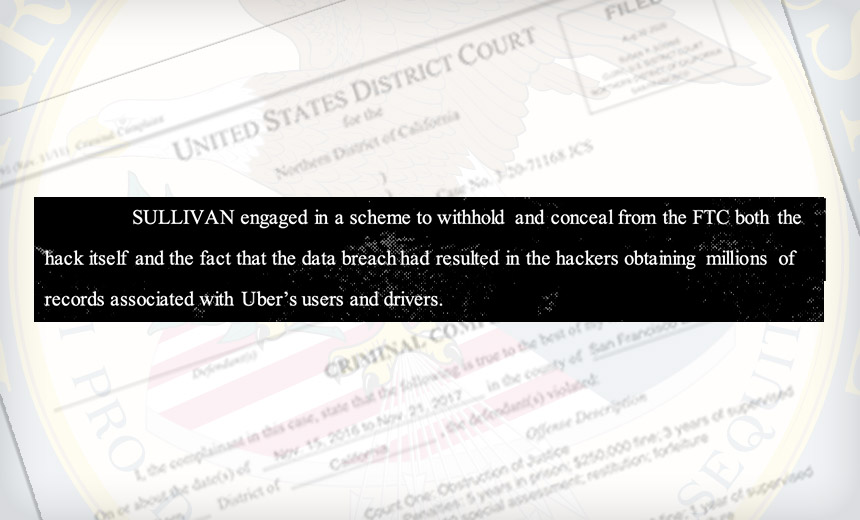

10 Predictions for Web3 and the Cryptoeconomy for 2022

Institutions will play a much bigger role in DeFi participation: Institutions are increasingly interested in participating in DeFi. For starters, institutions are attracted to higher-than-average interest-based returns compared to traditional financial products. Also, cost reduction in providing financial services using DeFi opens up interesting opportunities for institutions. However, they are still hesitant to participate in DeFi. Institutions want to confirm that they are only transacting with known counterparties that have completed a KYC process. Growth of regulated DeFi and on-chain KYC attestation will help institutions gain confidence in DeFi. ... DeFi insurance will emerge: As DeFi proliferates, it also becomes the target of security hacks. According to London-based firm Elliptic, the value lost to DeFi exploits in 2021 totaled over $10B. To protect users from hacks, viable insurance protocols guaranteeing users’ funds against security breaches will emerge in 2022. ... NFT-based communities will give material competition to Web 2.0 social networks: NFTs will continue to expand in how they are perceived.

Firmware attack can drop persistent malware in hidden SSD area

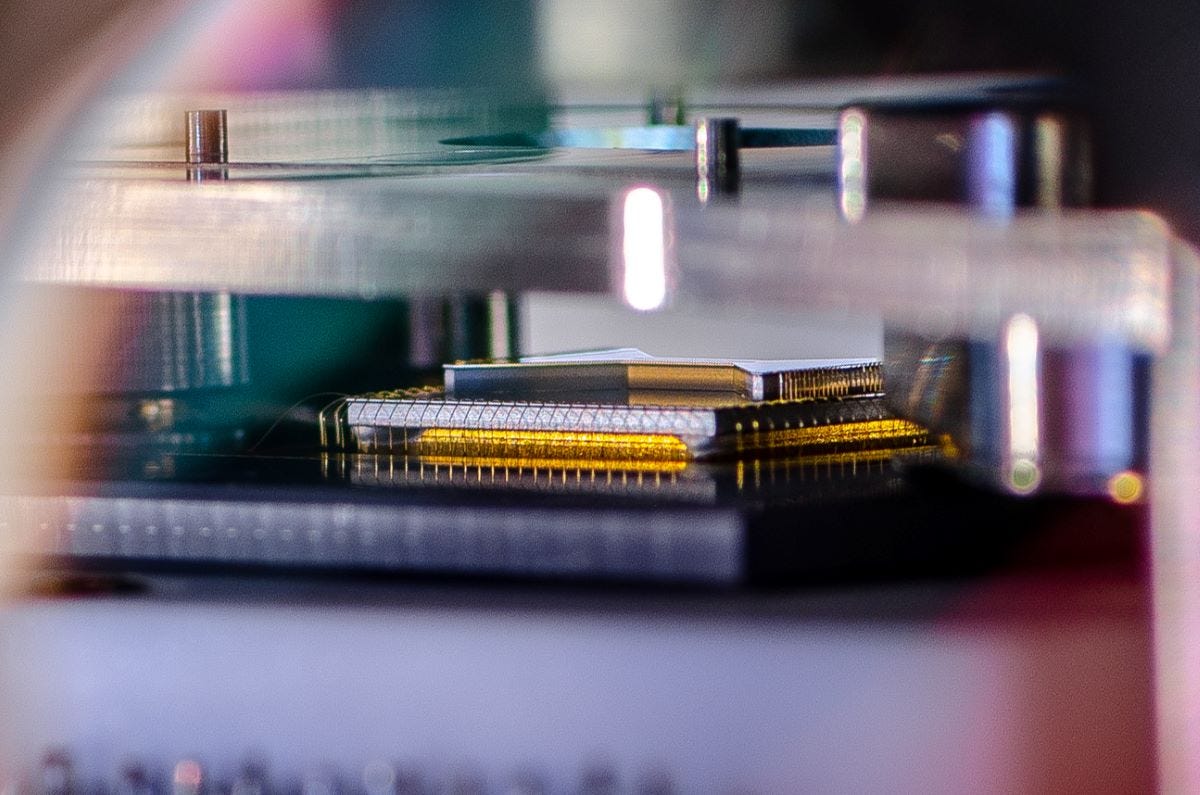

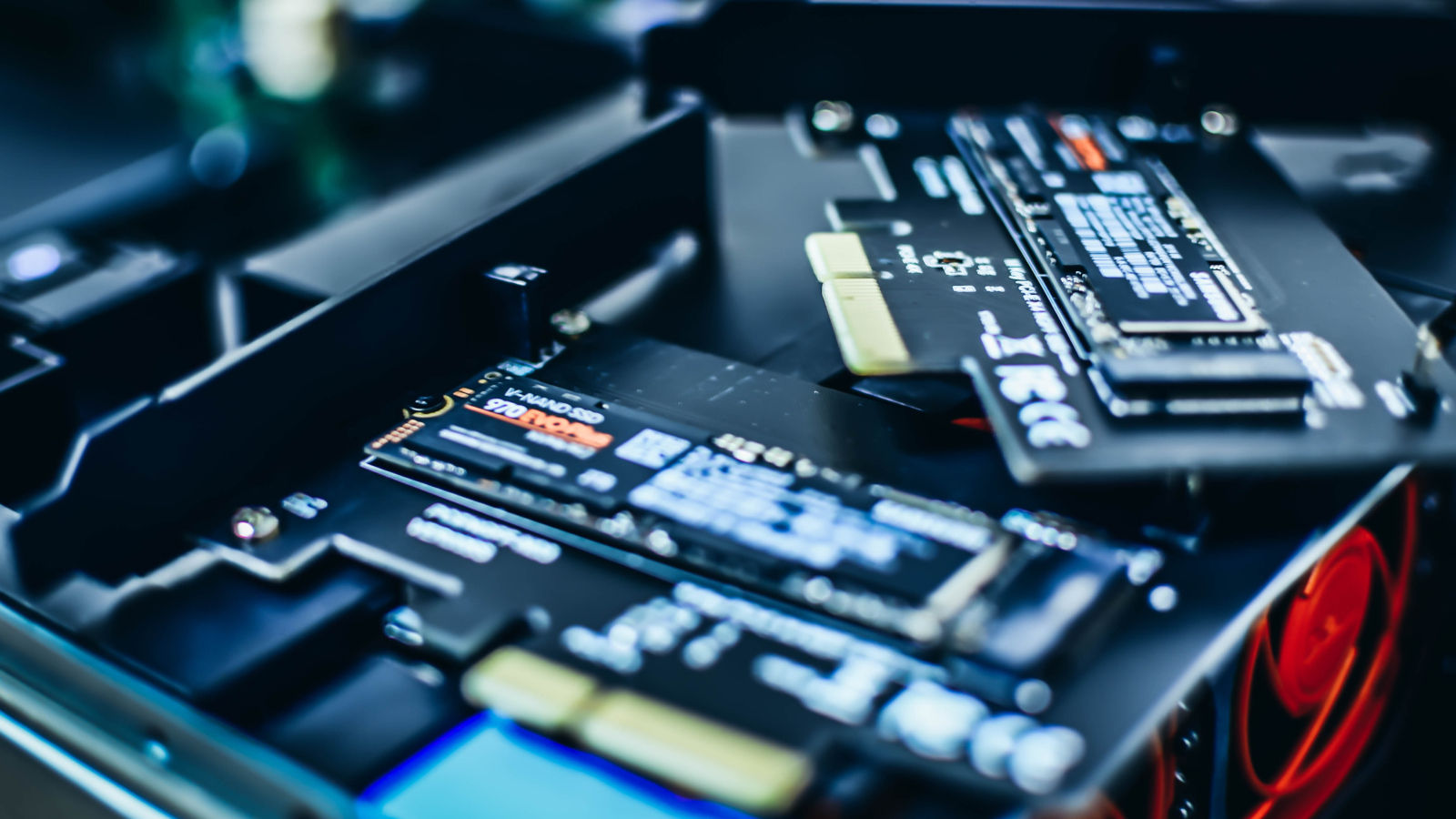

Flex capacity is a feature in SSDs from Micron Technology that enables storage devices to automatically adjust the sizes of raw and user-allocated space to achieve better performance by absorbing write workload volumes. It is a dynamic system that creates and adjusts a buffer of space called over-provisioning, typically taking between 7% and 25% of the total disk capacity. The over-provisioning area is invisible to the operating system and any applications running on it, including security solutions and anti-virus tools. As the user launches different applications, the SSD manager adjusts this space automatically against the workloads, depending on how write- or read-intensive they are. ... One attack modeled by researchers at Korea University in Seoul targets an invalid data area with non-erased information that sits between the usable SSD space and the over-provisioning (OP) area, and whose size depends on the two. The research paper explains that a hacker can change the size of the OP area by using the firmware manager, thus generating exploitable invalid data space.

'Businesses need to build threat intelligence for cybersecurity': Dipesh Kaura, Kaspersky

Organizations across industries are faced with the challenge of cybersecurity, and the need to build threat intelligence holds equal importance for every business that thrives in a digital economy. While building threat intelligence is crucial, it is also necessary to have a solution that understands the threat vectors for every business, across every industry. A holistic threat intelligence solution looks at every detail of an enterprise's security framework and gets the best actionable insights. A threat intelligence platform must capture and monitor real-time feeds from across an enterprise's digital footprint and turn them into insights to build a preventive posture, instead of a reactive one. It must diagnose and analyze security incidents on hosts and the network, and signals from internal systems, against unknown threats, thereby minimizing incident response time and disrupting the kill chain before critical systems and data are compromised.

IT leadership: 3 ways to show gratitude to teams

If someone on your team takes initiative on a project, let them know that you appreciate them. Pull them aside, look them in the eye and speak truthfully about how much their extra effort means to you, the team, and the company. Make your thank-yous genuine, direct, and personal. Most individuals value physical tokens of appreciation in addition to expressed gratitude. If you choose to offer a gift, make it as personalized as you can. For example, an Amazon gift card is nice – but a cake from their favorite bakery is even nicer. Personalization means that you’ve thought about them as a person, taken the time to consider what they like, and recognized their contributions as an individual. Contrary to the common belief that we should be lavish with our praises, I would argue that it’s better to be selective. Recognize behavior that lives up to your company’s values and reserve the recognition for situations where it is genuinely deserved. If a leader showers praise when it’s not really warranted, they devalue the praise that is given when team members actually go above and beyond.

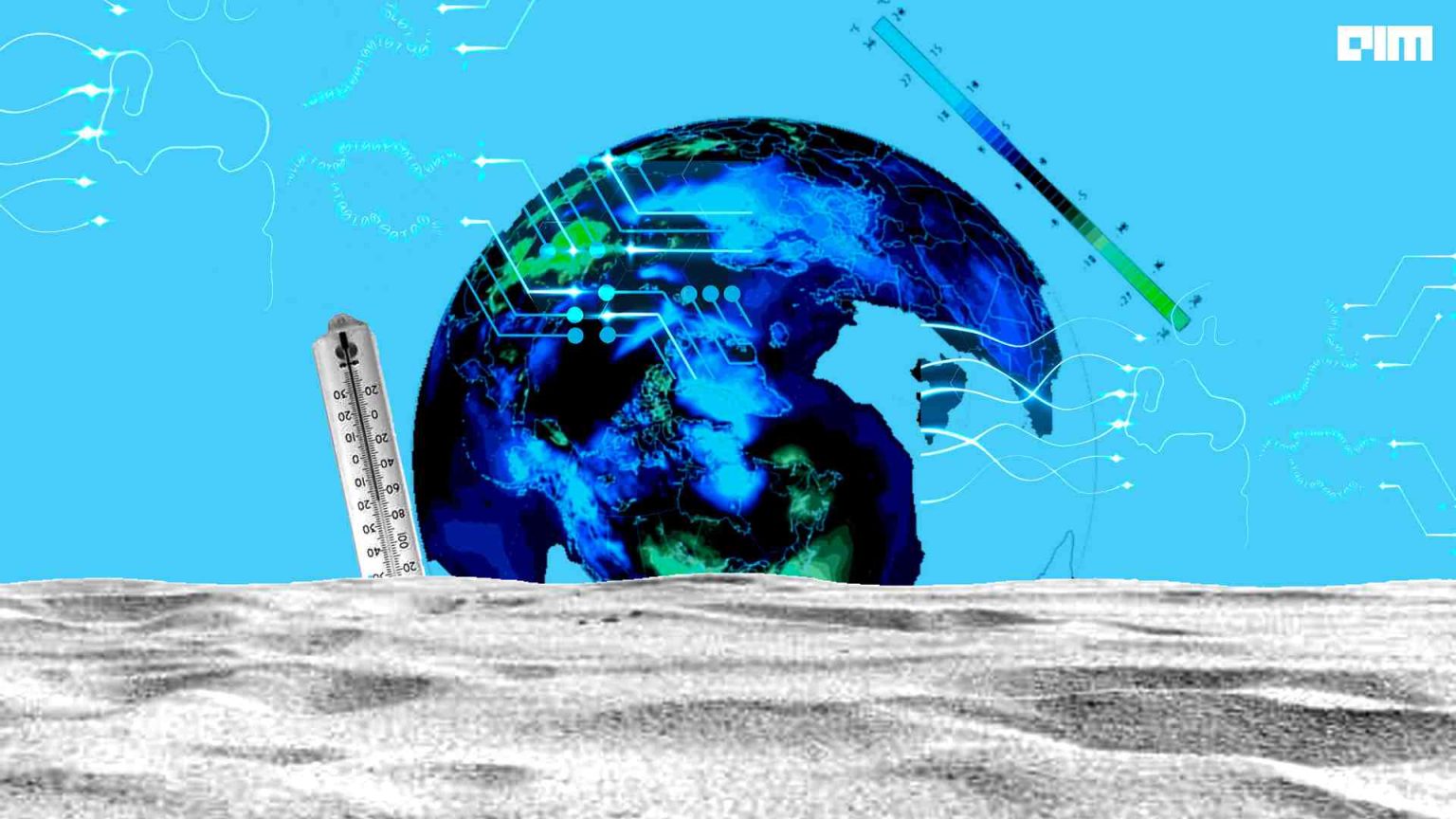

Top 5 AI Trends That Will Shape 2022 and Beyond

Under the umbrella of technology, there are several terms with which you must already be familiar, such as artificial intelligence, machine learning, deep learning, blockchain technology, cognitive technology, data processing, data science, big data, and the list is endless. Just imagine: what would it have been like to survive the pandemic outbreak if there were no technology? What if there were no laptops, PCs, tablets, smartphones, or any sort of gadgets during COVID-19? How would human beings earn a living? What if there were no Netflix to binge-watch and no social media applications during coronavirus? Undoubtedly, that’s extremely intimidating and intriguing at the same time. Isn’t it giving you goosebumps wondering how fast technology is advancing? Let’s flick through some jaw-dropping statistics first. Did you know that there are more than 4.88 billion mobile phone users all across the world now? According to the technology growth statistics, almost 62% of the world’s population own a smartphone device.

Introducing the Trivergence: Transformation driven by blockchain, AI and the IoT

Blockchain is the distributed ledger technology underpinning the cryptocurrency revolution. We call it the internet of value because people can use blockchain for much more than recording crypto transactions. Distributed ledgers can store, manage and exchange anything of value (money, securities, intellectual property, deeds and contracts, music, votes and our personal data) in a secure, private and peer-to-peer manner. We achieve trust not necessarily through intermediaries like banks, stock exchanges or credit card companies but through cryptography, mass collaboration and some clever code. In short, blockchain software aggregates transaction records into batches or “blocks” of data, links and time stamps the blocks into chains that provide an immutable record of transactions with infinite levels of privacy or transparency, as desired. Each of these foundational technologies is uniquely and individually powerful. However, when viewed together, each is transformed. This is a classic case of the whole being greater than the sum of its parts.
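The batching, linking and time-stamping described above can be shown with a toy hash chain. This is only a sketch under stated assumptions, not how any production blockchain works: it has no consensus, signatures or networking, and the helper names (`block_hash`, `make_block`, `build_chain`, `is_valid`) and the sample transactions are hypothetical. Each block stores the SHA-256 hash of the previous block, so editing an earlier block makes the stored hashes stop matching.

```python
import hashlib
import json
import time

GENESIS_PREV_HASH = "0" * 64  # assumed placeholder for the first block


def block_hash(block):
    """Hash the block's contents, excluding its own stored hash."""
    fields = {k: block[k] for k in ("index", "timestamp", "transactions", "prev_hash")}
    payload = json.dumps(fields, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def make_block(index, transactions, prev_hash):
    """Batch transactions into a time-stamped block linked to prev_hash."""
    block = {
        "index": index,
        "timestamp": time.time(),
        "transactions": transactions,
        "prev_hash": prev_hash,
    }
    block["hash"] = block_hash(block)
    return block


def build_chain(batches):
    """Turn a list of transaction batches into a linked list of blocks."""
    chain = []
    prev_hash = GENESIS_PREV_HASH
    for index, batch in enumerate(batches):
        block = make_block(index, batch, prev_hash)
        chain.append(block)
        prev_hash = block["hash"]
    return chain


def is_valid(chain):
    """Check every link and every stored hash in the chain."""
    prev_hash = GENESIS_PREV_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash or block["hash"] != block_hash(block):
            return False
        prev_hash = block["hash"]
    return True


chain = build_chain([["alice->bob:5"], ["bob->carol:2", "carol->dave:1"]])
print(is_valid(chain))
```

In this sketch, "immutable" really means tamper-evident: changing a recorded transaction does not fail by itself, but `is_valid` then reports the chain as broken.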

Sustainability will be a key focus as the transport sector transitions in 2022

Delivery is also an area where we expect to see the movement towards e-fleets grow. We’ve already seen this being trialled, with parcel-delivery company DPD making the switch to a fully electric fleet in Oxford. It’s estimated that by replicating this in more cities, DPD could reduce CO2 by 42,000 tonnes by 2025. While third-party delivery companies offer retailers an efficient service, carrying as many as 320 parcels a day, this model is challenged when it comes to customers’ growing expectations that they can receive deliveries within hours. Sparked by lockdowns, which led to a 48% increase in online shopping, the “rapid grocery delivery” trend looks set to grow in 2022. Grocery delivery company Getir, for example, built a fleet of almost 1,000 vehicles in 2021 to service this need – and is planning to spend £100m more to expand its offering. Given the current driver recruitment crisis, which is affecting delivery and taxi firms, we are not expecting many other operators to invest that kind of money into building new fleets though. Instead, you are more likely to see retailers working with existing fleets.

Quote for the day:

"Cream always rises to the top...so do good leaders." -- John Paul Warren