Singapore releases guidelines for deployment of autonomous vehicles

Permanent Secretary for Transport Loh Ngai Seng, who chairs the Committee on Autonomous Road Transport for Singapore, said plans were under way to launch a pilot deployment of autonomous vehicles in Punggol, Tengah, and the Jurong Innovation District in the early 2020s, and that TR 68 (Technical Reference 68) would help guide industry players in "the safe and effective deployment" of such vehicles in the city-state. Choy Sauw Kook, director-general of the quality and excellence group at Enterprise Singapore, said: "In addition to safety, TR 68 provides a strong foundation that will ensure interoperability of data and cybersecurity that are necessary for the deployment of autonomous vehicles in an urban environment. The TR 68 will also help to build up the autonomous vehicle ecosystem, including startups and SMEs (small and midsize enterprises) as well as testing, inspection, and certification service providers."

Network programmability in 5G: an invisible goldmine for service providers and industry


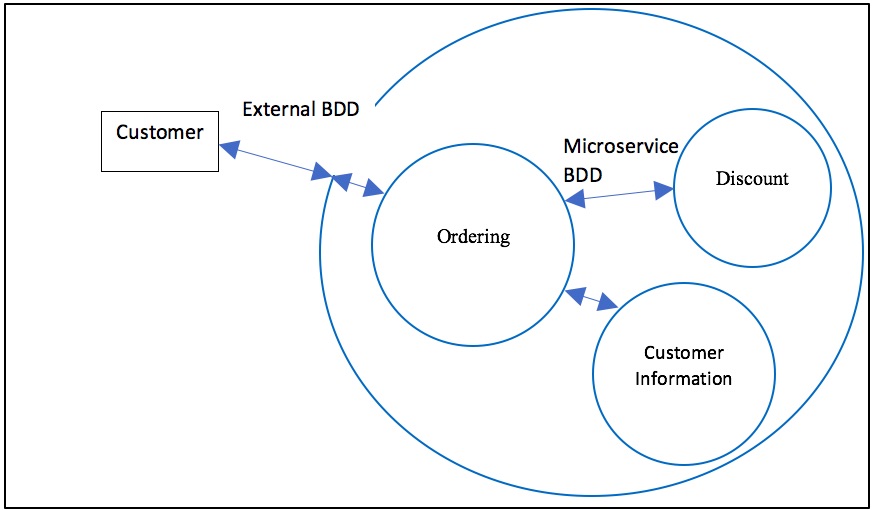

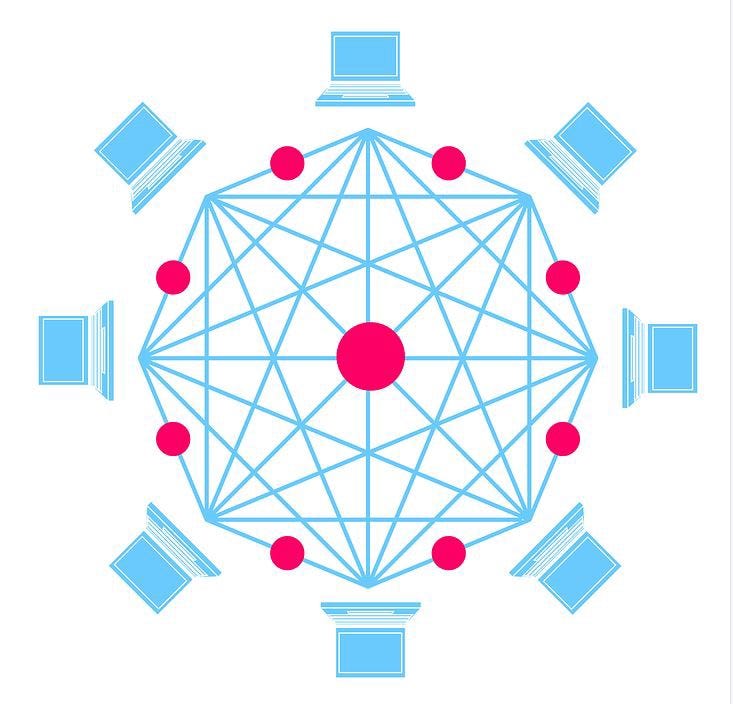

5G promises many disruptive functionalities, such as ultra-low-latency communication, high bandwidth and throughput, stronger security, and network slicing, all of which could open business opportunities that service providers do not address today. Another functionality, less often mentioned but with equal business potential, is network exposure, which can enable new levels of programmability in telecom core networks. Programmability in 5G Core networks allows providers to open telecom network capabilities and services to third-party developers, who can then create use cases that don’t exist today. This is possible thanks to the standardized APIs in the new 5G network architecture. These APIs could open a new frontier for business innovation in telecom: application developer partners will focus on new service applications, while telco service providers will focus on a new dimension of experience called “developer experience” and strengthen their position in the OTT value chain.
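To make “network exposure” more concrete: a third-party app might ask the operator's network to give one device a low-latency connection for a few minutes. In a 5G Core, requests like this are exposed through the Network Exposure Function (NEF), but no operator's actual API contract is described here, so what follows is only a minimal Python sketch under stated assumptions. The `QosSessionRequest` fields, the profile names and the 24-hour limit are all hypothetical, chosen to show the shape of a validated request a developer might send.

```python
import json
from dataclasses import dataclass, asdict

# HYPOTHETICAL request shape for a QoS-on-demand exposure API.
# Field names, profile names and limits are illustrative assumptions,
# not taken from a 3GPP or operator specification.
@dataclass
class QosSessionRequest:
    device_ip: str           # IP address of the subscriber's device
    application_server: str  # IP address of the third-party app server
    qos_profile: str         # requested treatment, e.g. "LOW_LATENCY" (assumed name)
    duration_s: int          # how long the boosted QoS should last, in seconds
    callback_url: str        # where the network would report session events

# Assumed set of profiles the operator is willing to expose.
ALLOWED_PROFILES = {"LOW_LATENCY", "HIGH_THROUGHPUT"}

def build_request(req: QosSessionRequest) -> str:
    """Validate a request locally and serialise it to a JSON body."""
    if req.qos_profile not in ALLOWED_PROFILES:
        raise ValueError(f"unsupported QoS profile: {req.qos_profile}")
    if not 0 < req.duration_s <= 86_400:
        raise ValueError("duration must be between 1 s and 24 h")
    # sort_keys gives a stable body, which helps with logging and signing
    return json.dumps(asdict(req), sort_keys=True)
```

A real client would then send this body over HTTPS to the operator's exposure endpoint using credentials the operator issues; validating locally first simply catches malformed requests before they cost a network round trip.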

Internet of Things (IoT): 5 Essential Ways Every Company Should Use It

Strategic decision-making is where the senior leadership team identifies the critical questions it needs answered. Operational decision-making is where data and analytics are made available to everyone in the organization, often via a self-service tool, to inform data-driven decisions at all levels. More and more companies make IoT-enabled products that connect them directly to their customers’ behaviours and preferences. For example, Fitbit knows how much we exercise and what our normal sleeping patterns are. Samsung can collect usage data from its smart TVs. Elevator manufacturer Kone learns how its customers use its elevators, and Rolls-Royce knows how airlines use the jet engines it makes. Even companies that don’t make IoT devices can often gain access to data from other people’s devices: just think of app makers that collect user data through the data collection and connectivity capabilities of the smartphones or tablets their apps run on. Used correctly, these insights can help companies make quicker and better business decisions.

No-deal Brexit could lead to data issues, MPs told

The no-deal Brexit planning notice warns that the legal framework for transferring personal data from organisations in the EU to organisations in the UK would have to change when the country leaves the EU. So although businesses will be able to continue sending personal data from the UK to the EU, because the UK would “at the point of exit continue to allow the free flow of personal data from the UK to the EU”, the same may not hold in the other direction. “We’ve been saying for a while that we would like the adequacy discussions to start as soon as possible. But the EU, as with everything else, is saying they won’t start the discussions until we are a third country. So, I’d be surprised if a decision could be made in under a year,” Derrington told the committee. There are also issues relating to legacy data, meaning data that was transferred from the EU to the UK before Brexit.

DARPA explores new computer architectures to fix security between systems

A better solution in today's environment, then, is to accept that users need or want to share data, and to figure out how to keep the important bits more private, particularly as the data crosses networks and systems that differ in ownership and in the level and type of security they implement. The GAPS thrust will be to isolate sensitive “high-risk” transactions and provide what the group calls “physically provable guarantees” or assurances. New cross-network architecture, tracking, and data security will be developed to create “protections that can be physically enforced at system runtime.” How they intend to do that is still to be decided. Radical forms of VPN may be one avenue; an encrypted pipe through the internet would be today’s attempted solution. Whichever method they choose will be part of a $1.5 billion, five-year investment in government and defense electronics systems, and enterprises and consumers may benefit too. “As cloud systems proliferate, most people still have some information that they want to physically track, not just entrust to the ether,” says Walter Weiss, DARPA program manager, in the release.
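The release does not say how GAPS will enforce its guarantees, and the whole point is that they be physical rather than software-based. Still, the underlying idea of a guard that lets only sufficiently low-risk data cross from one domain to another can be sketched in software. The following minimal Python example is a hypothetical illustration, not DARPA's design: the `Level` labels, the `Field` record and the `release` function are assumptions, and a software filter like this offers none of the physically provable assurance the program is aiming for.

```python
from dataclasses import dataclass
from enum import IntEnum

class Level(IntEnum):
    """HYPOTHETICAL sensitivity labels, ordered lowest to highest."""
    PUBLIC = 0
    INTERNAL = 1
    HIGH_RISK = 2

@dataclass(frozen=True)
class Field:
    name: str
    value: str
    level: Level  # label attached where the data originates

def release(record: list[Field], destination_clearance: Level) -> dict[str, str]:
    """Return only the fields the destination domain is cleared to receive.

    Anything labelled above the destination's clearance is dropped, so
    high-risk fields never leave their originating domain. This is a
    software policy check only; it cannot provide physical guarantees.
    """
    return {f.name: f.value for f in record if f.level <= destination_clearance}
```

For example, releasing a record that holds a PUBLIC order ID, an INTERNAL route and a HIGH_RISK key to a destination cleared for INTERNAL returns only the order ID and the route; the key stays behind.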

There's more to WSL than Ubuntu

Because WSL integrates with the updated Windows command-line environment, it can work directly with any application that offers a terminal. You can write code in Visual Studio Code, save it directly to a Linux filesystem, and test it from the built-in terminal, all without leaving your PC. And when it's time to deploy to a build system, you don't need to worry about line-ending formats or about testing code on separate systems. Support for SSH also gives you secure remote access to any Linux servers, whether in your data center or in the cloud. If you're using WSL to develop and test server applications, you'll probably want to install SUSE Linux Enterprise Server. It's a popular Linux server distribution and can be configured to handle most server tasks. With WSL now supported on Windows Server, you can use it to build test environments for cloud applications before deploying them on Azure or another public cloud. SUSE bundles a one-year developer subscription, which gives you more support resources than its standard community-based support forums.
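The line-ending problem mentioned in the paragraph above comes from Windows editors traditionally saving text with CRLF (`\r\n`) line endings while Linux tools expect LF (`\n`); a stray carriage return in a shell script's shebang line, for example, can stop the script from running on Linux. Keeping files on a Linux filesystem inside WSL avoids this, but files created on the Windows side may still need cleaning. A minimal Python sketch of such a check-and-fix step, with illustrative function names:

```python
from pathlib import Path

def has_crlf(data: bytes) -> bool:
    """True if the content contains Windows-style CRLF line endings."""
    return b"\r\n" in data

def to_lf(data: bytes) -> bytes:
    """Convert CRLF line endings to Unix LF; existing lone LFs are untouched."""
    return data.replace(b"\r\n", b"\n")

def normalise_file(path: Path) -> bool:
    """Rewrite a file with LF endings; return True if it was changed."""
    data = path.read_bytes()  # read as bytes so no newline translation happens
    if not has_crlf(data):
        return False  # leave clean files alone
    path.write_bytes(to_lf(data))
    return True
```

For instance, `to_lf(b"#!/bin/sh\r\necho hi\r\n")` returns `b"#!/bin/sh\necho hi\n"`. Working on bytes rather than text keeps Python's own newline handling out of the way, and `normalise_file` only rewrites files that actually contain CRLF.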

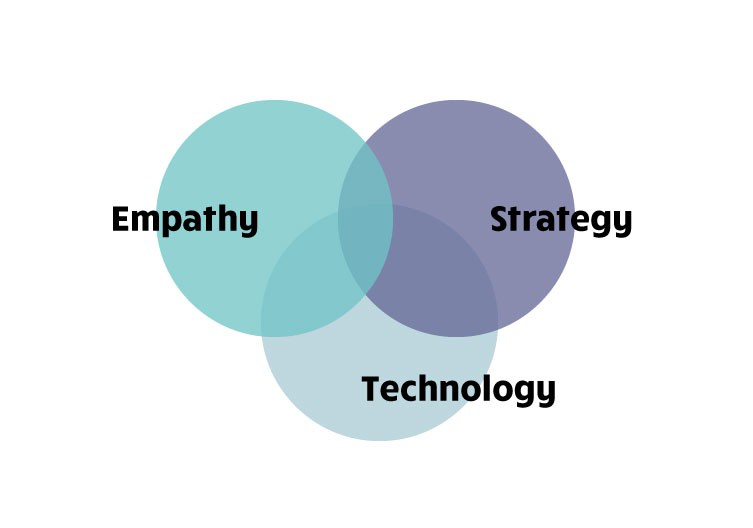

Why we need fewer people, more skills for digital transformation

The fundamental argument comes down to value. In business, a corporate mentality often exists in which executives boast about the number of people working for their company or on a project, because they believe that provides the best value for their clients. This attitude has persisted for more than two decades, yet companies still fail to understand that it might not provide the best value for their business or their clients. Companies need to do more research to understand what works for them as an individual business, and often this means they don’t need to hire lots of people; rather, they need the right people. While it may seem reassuring to have a large team working on an expensive project, the work is often easier, smoother and quicker when led by a small, highly skilled, experienced team that works together on the ground rather than spread out or working remotely. This may be more expensive at first, but it is worth it in the long term.

The FTC's cyberinsurance tips: A must-read for small business owners

Dan Smith, president, co-founder, and COO of Zeguro, a cybersecurity company that has grabbed the attention of investors, admits in a PYMNTS article that the company recently came under a spear-phishing attack. The attack was unsuccessful, but it pointed to a very real need. Most small businesses either do not think they need cyberinsurance (only 4% in the US currently have it) or do not know it is available. Smith adds that another problem is that brokers selling the insurance do not spend enough time explaining it, or may not understand it themselves. To address this, Smith announced in the PYMNTS article that Zeguro will partner with QBE Insurance Group to offer tailored cyberinsurance solutions. According to Smith, the idea is to use the company's expertise and accumulated cybersecurity intelligence to craft the appropriate cyberinsurance solution for each client. Insurance at any level is a complicated subject; add the complexity of securing a digital infrastructure against cybercriminals, and a partnership like the one between Zeguro and QBE Insurance Group seems like good business.

What programming languages rule the Internet of Things?

Clearly, there’s a consensus set of top-tier IoT programming languages, but each of the top contenders has its own benefits and use cases. Java, the most popular IoT programming language overall, works in a wide variety of environments, from the backend to mobile apps, and dominates in gateways and in the cloud. C is generally considered the key programming language for embedded IoT devices, while C++ is the most common choice for more complex Linux implementations. Python, meanwhile, is well suited to data-intensive applications. Given the complexities, maybe IoT for All put it best. The site noted that, “While Java is the most used language for IoT development, JavaScript and Python are close on Java's heels for different subdomains of IoT development.” Perhaps the most salient prediction, though, turns up all over the web: IoT development is multilingual, and it's likely to remain so.

How to accelerate digital identity in the UK

To encourage the reuse of a digital identity, the critical first step is striking the right balance when the identity is first created, based on the appropriate level of trust and friction for a first-time interaction. Digital services must be designed with appropriate initial levels of trust, increasing them only when required. It is a mistake to start with the maximum level of trust, which may be too high for the service; instead, enhance trust as and when required. Digital identity standards allow services to map their increasing identity trust requirements effectively. Digital identity should be used at the point of need, with controls applied only where absolutely necessary to complete the task. There is evidence that motivated users achieve high levels of success in verifying their identity in the right circumstances. The UK identity standards, built in response to real-world threats and risks, are world-leading, support equivalence with the European Union’s eIDAS regulation, and are closely aligned with the US NIST SP 800-63A standard.
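The “start low, step up when required” approach described above can be sketched as a small state machine. In this minimal Python example the four `Trust` levels and the action-to-level table are hypothetical; real schemes such as the UK identity standards or NIST SP 800-63A define their own levels and evidence requirements. The session starts every user at a low level and only asks for further verification when an action needs more trust than has already been established.

```python
from enum import IntEnum

class Trust(IntEnum):
    """HYPOTHETICAL identity-assurance levels, ordered lowest to highest."""
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3

# HYPOTHETICAL mapping from service actions to the trust each one requires.
REQUIRED = {
    "browse_account": Trust.LOW,
    "change_address": Trust.MEDIUM,
    "apply_for_loan": Trust.HIGH,
}

class IdentitySession:
    def __init__(self) -> None:
        # First-time interaction: start low to keep friction down.
        self.level = Trust.LOW

    def steps_needed(self, action: str) -> list[Trust]:
        """Levels the user must still pass through before `action` is allowed."""
        target = REQUIRED[action]
        return [Trust(lvl) for lvl in range(self.level + 1, target + 1)]

    def step_up(self, new_level: Trust) -> None:
        """Record a successful extra verification; trust is never lowered."""
        self.level = max(self.level, new_level)
```

A new session needs no extra checks to browse an account, but `steps_needed("apply_for_loan")` returns `[Trust.MEDIUM, Trust.HIGH]`; after `step_up(Trust.MEDIUM)` only `Trust.HIGH` remains. Because `step_up` never lowers the level, trust that has already been established is reused rather than re-proved.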

Quote for the day:

"Leading people is like cooking. Don't stir too much; it annoys the ingredients and spoils the food." -- Rick Julian