5 Signs That You’re a Great Developer

Programming changes the way your brain works: you start to think more
algorithmically and solve problems faster, and that carries over into other
aspects of your life. Good programmers not only learn other things much faster,
especially in tech-related fields, but also often make great entrepreneurs and
CEOs. Look at Elon Musk, for instance: he was a programmer and built his own
game when he was 12. ... As we briefly discussed previously, programming
encourages creative thinking and teaches you how to approach problems in the
most effective way. But to get there, you must first solve a lot of these
problems and have a passion for doing so; only then are you likely to succeed
as a developer. If you’ve just started and think it is easy, you’re mistaken.
You just haven’t run into genuinely challenging problems yet, and the more you
learn, the more difficult and complex the problems get, because you need not
only to solve them but to solve them in the most effective way possible: speed
up your algorithm and optimize everything.

Experimental WebTransport over HTTP/3 support in Kestrel

WebTransport is a new draft specification for a transport protocol similar to
WebSockets that allows the usage of multiple streams per connection. WebSockets
allowed upgrading a whole HTTP TCP/TLS connection to a bidirectional data
stream. If you needed to open more streams, you’d spend additional time and
resources establishing new TCP and TLS sessions. WebSockets over HTTP/2
streamlined this by allowing multiple WebSocket streams to be established over
one HTTP/2 TCP/TLS session. The downside is that, because this was still based
on TCP, any packets lost from one stream would cause delays for every stream on
the connection. With the introduction of HTTP/3 and QUIC, which uses UDP rather
than TCP, WebTransport can be used to establish multiple streams on one
connection without them blocking each other. For example, consider an online
game where the game state is transmitted on one bidirectional stream, the
players’ voices for the game’s voice chat feature on another bidirectional
stream, and the players’ controls on a unidirectional stream.
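
The head-of-line blocking argument above can be sketched with a toy model. This
is not Kestrel or WebTransport API code; the `Packet` type, the
`delivery_times` function, and the timings are all made up for illustration. It
only compares "one ordering for the whole connection" (TCP-like) with "one
ordering per stream" (QUIC-like) when a single voice packet is lost and
retransmitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    stream: str      # logical stream the packet belongs to
    arrival_ms: int  # when it finally reaches the receiver (after any retransmit)


def delivery_times(packets, shared_ordering):
    """Return (stream, time) pairs: when each packet can be handed to the app.

    shared_ordering=True  models one ordered byte stream for the whole
                          connection (TCP-like).
    shared_ordering=False models independent ordering per stream (QUIC-like).
    """
    ready_at = {}  # latest arrival seen so far, per ordering domain
    delivered = []
    for p in packets:  # packets are listed in send order
        key = "connection" if shared_ordering else p.stream
        # A packet cannot be delivered before everything ahead of it
        # in the same ordering domain has arrived.
        ready_at[key] = max(ready_at.get(key, 0), p.arrival_ms)
        delivered.append((p.stream, ready_at[key]))
    return delivered


# Hypothetical timings: the first voice packet is lost once and only
# arrives at 200 ms after retransmission.
packets = [
    Packet("state", 10),
    Packet("voice", 200),
    Packet("controls", 12),
    Packet("state", 20),
    Packet("voice", 25),
]

tcp_like = delivery_times(packets, shared_ordering=True)
quic_like = delivery_times(packets, shared_ordering=False)

print("shared ordering:    ", tcp_like)
print("per-stream ordering:", quic_like)
```

In this model the lost voice packet holds back every later packet under shared
ordering, while under per-stream ordering only the voice stream waits and the
game state and controls are delivered as they arrive.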

Software builders across Amazon require consistent, interoperable, and
extensible tools to construct and operate applications at our peculiar scale;
organizations will extend our solutions for their specialized business needs.
Amazon’s customers benefit when software builders spend time on novel
innovation. Undifferentiated work elimination, automation, and integrated
opinionated tooling reserve human interaction for high judgment situations. Our
tools must be available for use even in the worst of times, which happens to be
when software builders may most need to use them: we must be available even
when others are not. Software builder experience is the summation of tools,
processes, and technology owned throughout the company, relentlessly improved
through the use of well-understood metrics, actionable insights, and knowledge
sharing. Amazon’s industry-leading technology and access to top experts in many
fields provide opportunities for builders to learn and grow at a rate
unparalleled in the industry. As builders we are in a unique position to codify
Amazon’s values into the technical foundations; we foster a culture of
belonging by ensuring our tools, training, and events are inclusive and
accessible by design.

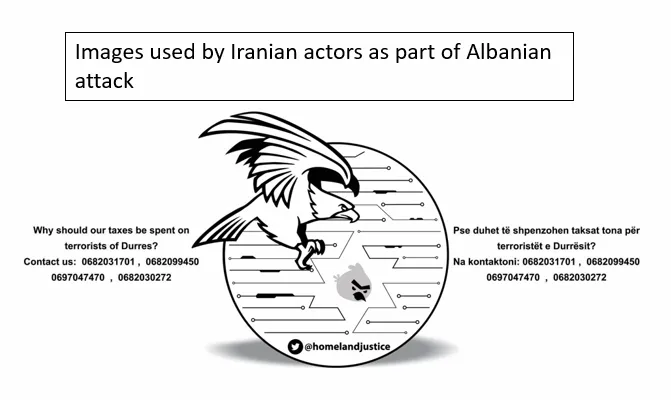

The Troublemaker CISO: How Much Profit Equals One Life?

We take for granted that those who are charged with protecting us are doing so
with our best interest at heart. There is no shaving off another few cents just
to increase value to shareholders over the life of a person. Lucky for me, there
is a shift in the boardrooms and governing bodies toward looking at how socially
responsible you are and whether you are acting in the best interest of the
people and not just the investors. If the members of the board and governing
body are considering these topics when steering a business, isn't it time to
re-examine how and why we do things? Are we as CISOs not accountable to
leadership for impressing on them the risk that IoT/internet connectivity poses
to critical networks, and especially to healthcare? It is time to be firm in
expressing the risk and saying we would rather spend a bit more money and time
and do it the safe way. And this should be listed as the top risk in the
company. The other big issue I have with this type of network being connected
is one of transparency.

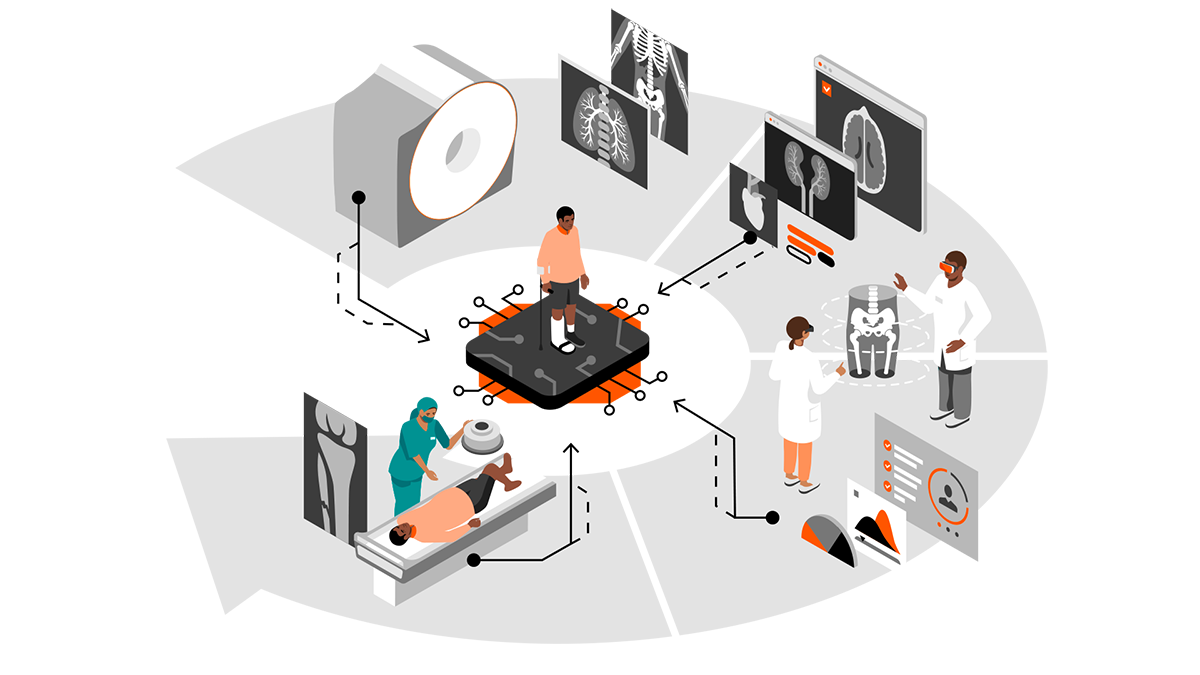

Digital Twins Offer Cybersecurity Benefits

A key difficulty, from a cybersecurity perspective, is the fact that drug
production lines are made up of multiple different technologies, running
different operating systems that are often provided by different suppliers.
“Integrating multiple systems from different suppliers can provide expanded
attack surface that can be exploited by cyber adversary,” continues Mylrea. To
address this, Mylrea and Grimes developed what they refer to as “biosecure
digital twins”: replicas of manufacturing lines they use to identify potential
points of attack for hackers. “The digital twin is essentially a high-fidelity
virtual representation of critical manufacturing processes. From a security
perspective, this improves monitoring, detection, and mitigation of stealthy
attacks that can go undetected by most conventional cybersecurity defenses,”
explains Mylrea. “Beyond security, the biosecure digital twin can optimize
performance and productivity by detecting when critical systems deviate from
their ideal state and correct in real time to enable predictive maintenance
that prevent costly faults and safety failures.”
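
As a rough illustration of "detecting when critical systems deviate from their
ideal state", the sketch below compares observed readings against the values a
twin would expect. It is not Mylrea and Grimes' implementation; the sensor
names, values, tolerances, and the `find_deviations` helper are all
hypothetical.

```python
def find_deviations(expected, observed, tolerance):
    """Return sensors whose observed value differs from the twin's expected
    value by more than the allowed tolerance, or that are missing entirely."""
    deviations = {}
    for sensor, expected_value in expected.items():
        actual = observed.get(sensor)
        if actual is None:
            deviations[sensor] = "missing reading"
        elif abs(actual - expected_value) > tolerance[sensor]:
            deviations[sensor] = f"expected {expected_value}, observed {actual}"
    return deviations


# Hypothetical state predicted by the twin vs. readings from the real line.
expected = {"bioreactor_temp_c": 37.0, "ph": 7.2, "agitator_rpm": 120}
observed = {"bioreactor_temp_c": 37.1, "ph": 6.4, "agitator_rpm": 120}
tolerance = {"bioreactor_temp_c": 0.5, "ph": 0.2, "agitator_rpm": 5}

alerts = find_deviations(expected, observed, tolerance)
for sensor, detail in alerts.items():
    print(f"deviation on {sensor}: {detail}")
```

A real twin would model process dynamics over time instead of using fixed
thresholds, but the comparison of expected against observed state is the core
idea the quote describes.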

Unlocking cyber skills: This year’s essential back-to-school lesson plan

Technology is continually advancing, which will only create more avenues for
cybersecurity roles in the future. While it’s essential to inform students
about the types of careers in cybersecurity, teachers and career advisors
should be aware of the skills and qualities the sector needs beyond technical
computer and software knowledge. Once this is achieved, it can shed light on
the roles students can go on to. Technical skills are critical in
cybersecurity, yet they can be learned, fostered, and evolved throughout a
student’s career. Schools need to tap into individual students’ strengths in
hopes of encouraging them to pursue cyber positions. Broadly, cybersecurity
enlists leaders, communicators, researchers, critical thinkers… the list goes
on. Having the qualities needed to fulfil various roles in the industry can
position a student remarkably well when they first start in the industry. Yet,
this comes down to their mentors in high school being able to communicate that
a student’s inquisitive nature or presenting skills can be applied to various
sectors.

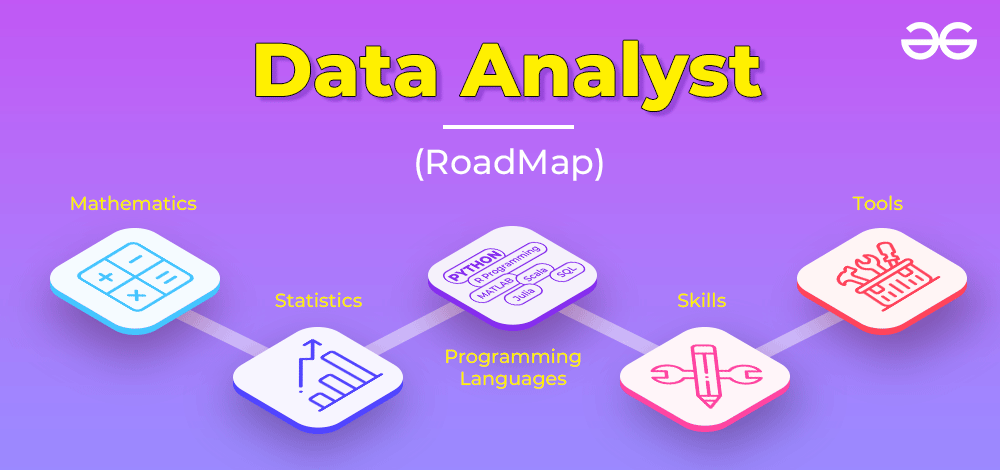

Data literacy: Time to cure data phobia

Data literacy is an incredibly important asset and skill set that should be
demonstrated at all levels of the workplace. In simple terms, data literacy is
the fundamental understanding of what data means, how to interpret it, how to
create it and how to use it both effectively and ethically across business use
cases. Employees who have been trained in and applied their knowledge of how
to use company data demonstrate a high level of data literacy. Although many
people have traditionally associated data literacy skills with data
professionals and experts, it’s becoming necessary for employees from all
departments and job levels to develop certain levels of data literacy. The
Harvard Business Review stated: “Companies need more people with the ability to
interpret data, to draw insights and to ask the right questions in the first
place. These are skills that anyone can develop, and there are now many ways
for individuals to upskill themselves and for companies to support them, lift
capabilities, and drive change. Indeed, the data itself is clear on this:
Data-driven decision-making markedly improves business performance.”

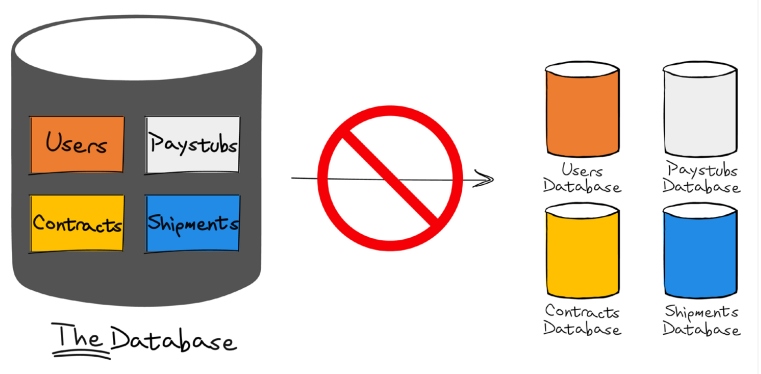

To BYOT & Back Again: How IT Models are Evolving

The growing complexity of IT frameworks is startling. A typical enterprise has
upwards of 1,200 cloud services and hundreds of applications running at any
given moment. On top of that, employees have their own smartphones, and many
use their own routers and laptops. Meanwhile, various departments and groups
-- marketing, finance, HR and others -- subscribe to specialized cloud
services. The difficulties continue to pile up -- particularly as CIOs look to
build out more advanced data and AI frameworks. McKinsey & Company found that
between 10% and 20% of IT budgets are devoted to adding more technology in an
attempt to modernize the enterprise and pay down technical debt. Yet, part of
the problem, it noted, is “undue complexity” and a lack of standards,
particularly at large companies that stretch across regions and countries. In
many cases, orphaned and balkanized systems, data sprawl, data silos, and
complex device management requirements follow. For CIOs seeking simplification
and tighter security, the knee-jerk reaction is often to clamp down on choices
and options.

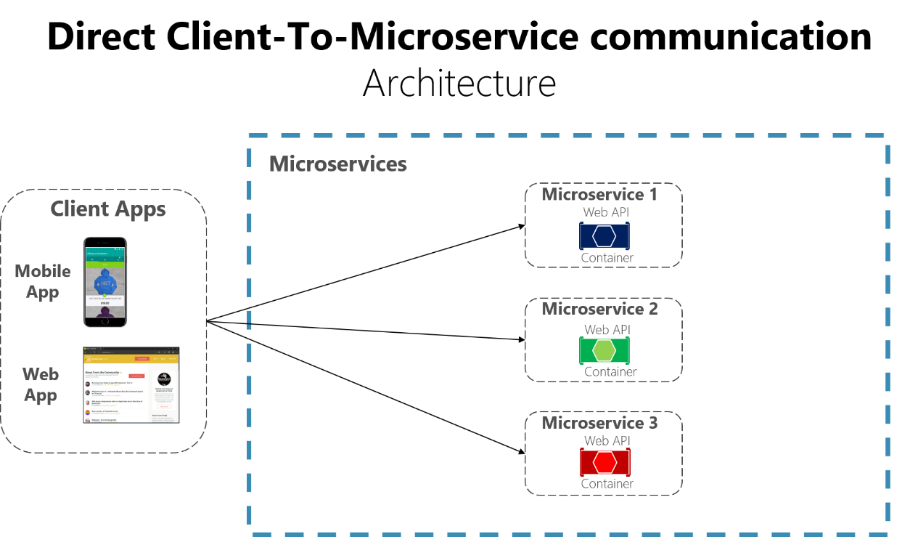

IT leadership: What to prioritize for the remainder of 2022

To deliver product-centric value, it’s best to have autonomous,
cross-functional teams running an Agile framework. Those teams can include
technical practitioners, design thinkers, and business executives. Together,
they can increase business growth by as much as 63%, Infosys’ Radar report
found. Cross-pollination efforts can spread Agile across the entire
enterprise, building credibility and trust among high-level stakeholders
toward an iterative process that can deliver meaningful, if incremental,
business results. Big-bang rollouts, with a raft of modernizations released in
one fell swoop, may seem attractive to management or other stakeholders. But
they carry untold risk: developers scrambling to fix bugs after the fact,
account teams working to retain disgruntled customers. Approach cautiously,
and consider an Agile roadmap of smaller, iterative developments instead of
the momentous release. This approach also breaks down the considerable task of
application modernization into smaller, bite-sized chunks.

How Policy-as-Code Helps Prevent Cloud Misconfigurations

Policy-as-code (PaC) is a strong approach to cloud configuration because it
reduces the potential for human error and makes it more difficult for hackers
to interfere. Policy compliance is crucial for cloud security, ensuring that
every app and piece of code follows the necessary rules and conditions. The
easiest way to ensure nothing slips through the cracks is to automate the
compliance management process. Policy-as-code is also a good choice in a
federated risk management model, in which a set of common standards is applied
across a whole organization while departments or units retain their own methods
and workflows. PaC fits seamlessly into this high-security system by scaling
and automating IT policies throughout a company. Preventing cloud
misconfiguration relies on ensuring that every app and line of code adheres to
an organization’s IT policies. PaC offers some key benefits that make this
possible without being a hassle. Policy-as-code improves the visibility of IT
policies since everything is clearly defined in code format.
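
To show what "policies defined in code" can look like, here is a minimal
sketch in plain Python. It does not use any particular policy engine; the
resource fields (`type`, `public`, `encrypted`, `port`, `source`), the rule
names, and the `evaluate` helper are hypothetical and chosen only for
illustration.

```python
# Hypothetical resource descriptions; field names are illustrative only.
resources = [
    {"name": "logs-bucket", "type": "storage_bucket", "public": False, "encrypted": True},
    {"name": "assets-bucket", "type": "storage_bucket", "public": True, "encrypted": False},
    {"name": "admin-sg", "type": "firewall_rule", "port": 22, "source": "0.0.0.0/0"},
]


# Each policy is a function that returns a violation message, or None if the
# resource complies or the policy does not apply to it.
def no_public_buckets(resource):
    if resource["type"] == "storage_bucket" and resource.get("public"):
        return "storage bucket must not be public"


def buckets_encrypted(resource):
    if resource["type"] == "storage_bucket" and not resource.get("encrypted"):
        return "storage bucket must be encrypted at rest"


def no_open_ssh(resource):
    if (resource["type"] == "firewall_rule"
            and resource.get("port") == 22
            and resource.get("source") == "0.0.0.0/0"):
        return "SSH must not be open to the whole internet"


POLICIES = [no_public_buckets, buckets_encrypted, no_open_ssh]


def evaluate(resources, policies=POLICIES):
    """Run every policy against every resource and collect violations."""
    violations = []
    for resource in resources:
        for policy in policies:
            message = policy(resource)
            if message:
                violations.append((resource["name"], message))
    return violations


violations = evaluate(resources)
for name, message in violations:
    print(f"{name}: {message}")
```

Because the rules live in version-controlled code, a check like this could run
automatically before each deployment, which is the kind of automated
compliance management described above.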

Quote for the day:

"Without courage, it doesn't matter how good the leader's intentions are." -- Orrin Woodward
