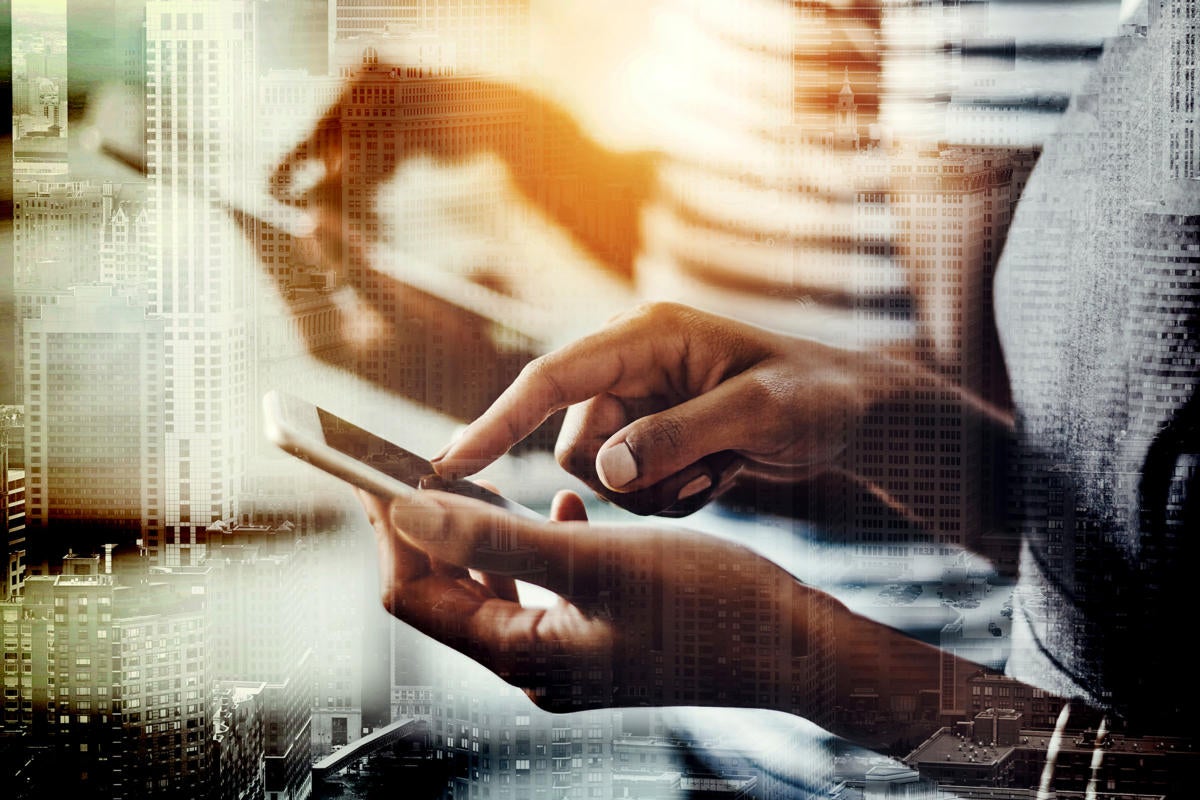

Super apps: the next big thing for enterprise IT?

Enterprise super apps will allow employers to bundle the apps employees use under one umbrella, he said. This will create efficiency and convenience: different departments can select only the apps they want, much like a marketplace, to customize their working experiences. Other advantages of super apps for enterprises include a more consistent user experience, less app fatigue and app sprawl, and stronger security from consolidating functions into one company-managed app. Gartner analyst Jason Wong said the analyst firm is seeing interest in super apps from organizations, including big-box stores and other retailers, that have a lot of frontline workers who rely on their mobile devices to do their jobs. One company that has adopted a super app to enhance the experience of its frontline workers and other employees is TeamHealth, a leading physician practice in the US. TeamHealth is using an employee super app from MangoApps, which unifies all the tools and resources employees use daily within one central app.
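The "marketplace" model described above can be pictured as one company-managed shell holding a catalog of mini-apps, with each department opting in to the ones it needs. The sketch below is a hypothetical illustration of that idea only. `MiniApp`, `SuperApp`, and the department and app names are invented for this example and do not describe MangoApps or any other vendor's product.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MiniApp:
    """One tool bundled inside the (hypothetical) enterprise super app."""
    app_id: str
    name: str


@dataclass
class SuperApp:
    """Hypothetical container: one company-managed shell, many mini-apps."""
    catalog: dict[str, MiniApp] = field(default_factory=dict)
    # department name -> set of app_ids that department chose from the catalog
    selections: dict[str, set[str]] = field(default_factory=dict)

    def publish(self, app: MiniApp) -> None:
        """Add a mini-app to the shared marketplace catalog."""
        self.catalog[app.app_id] = app

    def select(self, department: str, app_id: str) -> None:
        """Let a department opt in to one catalog app; unknown apps are rejected."""
        if app_id not in self.catalog:
            raise KeyError(f"unknown app: {app_id}")
        self.selections.setdefault(department, set()).add(app_id)

    def home_screen(self, department: str) -> list[str]:
        """Sorted names of the apps a member of this department sees."""
        ids = self.selections.get(department, set())
        return sorted(self.catalog[i].name for i in ids)


if __name__ == "__main__":
    hub = SuperApp()
    for app_id, name in [("sched", "Shift Scheduler"), ("chat", "Team Chat"), ("pay", "Payslips")]:
        hub.publish(MiniApp(app_id, name))
    hub.select("nursing", "sched")
    hub.select("nursing", "chat")
    hub.select("finance", "pay")
    print(hub.home_screen("nursing"))  # ['Shift Scheduler', 'Team Chat']
```

Because every mini-app sits in a single catalog the company controls, access rules and security updates can be applied in one place. This is the consolidation benefit the excerpt mentions.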

Meta faces GDPR complaint over processing personal data without 'free consent'

The case centres on whether Meta can legitimately claim to have obtained free consent from its customers to process their data, as required under GDPR, when the only alternative is for customers to pay a substantial fee to opt out of ad-tracking. The complaint will be watched closely by social media companies such as TikTok, which are reported to be considering offering ad-free services to customers outside the US to meet the requirements of European data protection law. Meta denied that it was in breach of European data protection law, citing a European Court of Justice ruling from July 2023, which it said expressly recognised that a subscription model was a valid form of consent for an ad-funded service. Spokesman Matt Pollard referred to a blog post announcing Meta’s subscription model, which stated: “The option for people to purchase a subscription for no ads balances the requirements of European regulators while giving users choice and allowing Meta to continue serving all people in the EU, EEA and Switzerland”.

India’s Path to Cyber Resilience Through DevSecOps

DevSecOps, a collaborative methodology spanning development, security, and operations, places a strong emphasis on integrating security practices into the software development and deployment processes. In India, the approach has gained substantial traction for several reasons, including a security-first mindset, adherence to compliance requirements, and escalating cybersecurity threats. According to one survey, the primary business driver for DevSecOps adoption, cited by 59 per cent of respondents, is business agility achieved through the rapid and frequent delivery of application capabilities. From a technological perspective, the most significant factor, highlighted by 57 per cent of participants, is better management of cybersecurity threats and challenges. Businesses now understand the importance of proactive security measures. DevSecOps encourages a security-first mentality, ensuring that security is an integral part of the development process from the outset.
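One common way to make security "an integral part of the development process from the outset" is a pipeline gate that fails a build when a scanner reports serious findings. The sketch below is a hypothetical illustration of that gate logic. The `Finding` shape, the severity levels, and the `fail_at` threshold are assumptions for this example. In a real pipeline the findings would come from a static-analysis or dependency scanner.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Ordered severity levels so they can be compared with >=."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Finding:
    """One issue reported by a security scanner (hypothetical shape)."""
    rule: str
    severity: Severity


def security_gate(findings: list[Finding], fail_at: Severity = Severity.HIGH) -> tuple[bool, list[Finding]]:
    """Return (passed, blocking_findings).

    The build passes only if no finding is at or above `fail_at`, so
    security feedback arrives in the same pipeline run as the code change.
    """
    blocking = [f for f in findings if f.severity >= fail_at]
    return (not blocking, blocking)


if __name__ == "__main__":
    report = [
        Finding("hardcoded-secret", Severity.CRITICAL),
        Finding("missing-csp-header", Severity.MEDIUM),
    ]
    passed, blocking = security_gate(report)
    print(passed, [f.rule for f in blocking])  # False ['hardcoded-secret']
```

Setting the threshold is itself a policy decision. A team might start at `HIGH` to avoid blocking delivery and tighten it as the backlog of lower-severity findings shrinks.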

Cybersecurity and Burnout: The Cybersecurity Professional's Silent Enemy

In the world of cybersecurity, where digital threats are constant, the mental health of professionals is an invaluable asset. Mindfulness not only serves as a shield against the stress and burnout that pose security risks to organizations, but also becomes a key strategy for reducing the costs of lost productivity and staff turnover. By practising mindfulness and preventing burnout, cybersecurity professionals preserve their own well-being and also contribute to a healthier work environment, improve the responsiveness and effectiveness of their teams, and help ensure the continued success of companies in this critical technology field. Cybersecurity challenges are multidimensional and cannot be managed along a single dimension. Mindfulness is an essential tool for staying one step ahead. By recognizing the value of emotional well-being in the fight against cyberattacks, we can build a stronger and more sustainable defense. Cybersecurity is not only a technical issue but also a human one, and mindfulness is a key piece of this intricate security puzzle.

Will AI replace Software Engineers?

While AI is automating some tasks previously done by developers, it's not likely to lead to widespread job losses. In fact, AI is creating new job opportunities for software engineers with the skills and expertise to work with it. According to a 2022 report by the McKinsey Global Institute, AI is expected to create 9 million new jobs in the United States by 2030. The jobs most likely to be lost to AI are routine and repetitive ones, such as data entry and simple coding tasks. However, software engineers with the skills to work with AI will be in high demand. ... Embrace AI as a tool to enhance your skills and productivity as a software engineer. Despite concern about AI replacing software engineers, it's unlikely to replace high-value developers who work on complex and innovative software. To avoid being replaced by AI, focus on building sophisticated and creative solutions. Stay up to date with the latest developments in AI and software engineering, as the field is constantly evolving, and adapt to the changing landscape by acquiring new skills and techniques. Remember that AI and software engineers can work together effectively, because AI complements human skills.

Bridging the risk exposure gap with strategies for internal auditors

Without a strategic view of the future (including a clear-eyed assessment of strengths, weaknesses, opportunities, threats, priorities, and areas of leakage), internal audit is unlikely to recognize the actions needed to enable success. There is no bigger threat to organizational success than a mismatch between exponentially increasing risks and a failure to respond to them because of a lack of vision, resources, or initiative. Create and maintain a good, well-documented strategic plan for your internal audit function. It can help you organize your thinking, impose discipline on definitions, facilitate implementation, and keep asking the right questions. Nobody knows for certain what lies ahead, and a well-developed strategic plan is a key tool for preparing for chaos and ambiguity. ... Companies may have less time than they think to prepare for compliance, and internal auditors should be helping their organizations put the right enabling processes and technologies in place as soon as possible. This will require a continuing focus on breaking down silos and improving how internal audit collaborates with its risk and compliance colleagues.

Generative AI in the Age of Zero-Trust

Enter generative AI. Generative AI models generate content, predictions, and solutions based on vast amounts of available data. They're making waves not just for their 'wow' factor but for their practical applications. It's only natural that employees would gravitate to the latest technology that promises to make them more efficient. For cybersecurity, this means potential tools that offer predictive threat analysis based on patterns, provide automatic code fixes, dynamically adjust policies in response to evolving threat landscapes, and even automatically respond to active attacks. If used correctly, generative AI can shoulder some of the complexity that has built up over the course of the zero-trust era. But how can you trust generative AI if you are not in control of the data that trains it? You can't, really. ... This is forcing organizations to start setting generative AI policies. Those that take the zero-trust path and ban its use will only repeat the mistakes of the past, because employees will find ways around bans if it means getting their jobs done more efficiently. Those that harness it will make a calculated tradeoff between control and productivity that keeps them competitive in their respective markets.
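A generative AI policy that trades some control for productivity, instead of imposing an outright ban, can be expressed as a small allow-list. Unknown tools are denied by default, while approved tools may receive only certain classes of data. The sketch below is a hypothetical illustration. The tool names, the `DataClass` levels, and the `APPROVED_TOOLS` table are invented for this example and are not drawn from the article or any product.

```python
from enum import Enum


class DataClass(Enum):
    """Hypothetical data-classification levels."""
    PUBLIC = "public"
    INTERNAL = "internal"
    CONFIDENTIAL = "confidential"


# Hypothetical policy table: which data classes each approved tool may receive.
APPROVED_TOOLS: dict[str, set[DataClass]] = {
    "enterprise-assistant": {DataClass.PUBLIC, DataClass.INTERNAL},
    "public-chatbot": {DataClass.PUBLIC},
}


def allow_prompt(tool: str, data_class: DataClass) -> bool:
    """Allow a generative AI request only for approved tools and permitted data.

    Unknown tools get an empty permission set and are therefore denied by
    default, but approved tools stay usable for lower-risk data.
    """
    return data_class in APPROVED_TOOLS.get(tool, set())


if __name__ == "__main__":
    print(allow_prompt("enterprise-assistant", DataClass.INTERNAL))  # True
    print(allow_prompt("public-chatbot", DataClass.CONFIDENTIAL))    # False
```

Keeping the policy as data rather than code means it can be adjusted as tools are vetted. That fits the excerpt's point that a workable policy is a tradeoff rather than a fixed ban.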

Organizations Must Embrace Dynamic Honeypots to Outpace Attackers

AI-powered honeypots are superior to their static counterparts in several ways. First, because they can evolve independently, they can become far more convincing on their own. This sidesteps the problem of constantly making manual adjustments to keep the honeypot a realistic facsimile. Second, as the AI learns and develops, it will become far more adept at planting traps for unwary attackers. Hackers will have to move more slowly than usual to avoid those traps, and once a trap is triggered, it will likely give defense teams far richer data about what attackers are clicking on, what information they're after, and how they're moving across the site. Finally, using AI tools to design honeypots means that, under the right circumstances, even tangible assets can be turned into honeypots. ... Therefore, using tangible assets as honeypots allows defense teams to target their energy more efficiently and enables the AI to learn faster, as more attackers are likely to go after a real asset than a fake one.
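Honeypots work because legitimate users have no reason to touch a decoy, so any access to one is a strong attacker signal. The access can be logged to show what the intruder went after and in what order. The sketch below models only that basic bookkeeping, not the AI-driven adaptation the article describes. `DecoyMonitor` and the decoy paths are hypothetical, and the IP address comes from the 203.0.113.0/24 documentation range.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecoyMonitor:
    """Minimal honeypot bookkeeping: legitimate users never touch decoys,
    so any access to one is treated as a likely attacker signal."""
    decoys: set[str]
    events: list[dict] = field(default_factory=list)

    def record_access(self, source_ip: str, path: str, action: str) -> bool:
        """Log an access to a decoy; return True if it hit one (i.e. should alert)."""
        hit = path in self.decoys
        if hit:
            self.events.append({
                "time": datetime.now(timezone.utc).isoformat(),
                "source_ip": source_ip,
                "path": path,
                "action": action,
            })
        return hit

    def trail(self, source_ip: str) -> list[str]:
        """Decoy paths one source touched, in order: how it moved across the site."""
        return [e["path"] for e in self.events if e["source_ip"] == source_ip]


if __name__ == "__main__":
    monitor = DecoyMonitor(decoys={"/admin/backup.zip", "/hr/salaries.xlsx"})
    monitor.record_access("203.0.113.7", "/index.html", "GET")
    monitor.record_access("203.0.113.7", "/admin/backup.zip", "GET")
    monitor.record_access("203.0.113.7", "/hr/salaries.xlsx", "GET")
    print(monitor.trail("203.0.113.7"))  # ['/admin/backup.zip', '/hr/salaries.xlsx']
```

The per-source trail is the kind of "richer data" the excerpt refers to. An adaptive system would feed such trails back into where and how it places the next decoys.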

Almost all developers are using AI despite security concerns, survey suggests

Many developers place far too much trust in the security of code suggestions from generative AI, the report noted, despite clear evidence that these systems consistently make insecure suggestions. “The way that code is generated by generative AI coding systems like Copilot and others feels like magic,” Maple said. “When code just appears and functionally works, people believe too much in the smoke and mirrors and magic because it appears so good.” Developers can also value machine output over their own talents, he continued. “There’s almost an imposter syndrome,” he said. ... Because AI coding systems use reinforcement learning algorithms to improve and tune results, when users accept insecure open-source components embedded in suggestions, the AI systems are more likely to label those components as secure even if they are not, the report continued. This risks creating a feedback loop in which developers accept insecure open-source suggestions from AI tools, those suggestions go unscanned, and the result poisons not only their organization’s application code base but also the recommendation systems of the AI tools themselves, it explained.
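The feedback loop described above depends on suggestions being accepted without a scan. A simple countermeasure is to check every dependency an AI suggestion introduces before accepting it. The sketch below is a hypothetical illustration. The hardcoded `KNOWN_VULNERABLE` table and the package name `examplelib` are invented stand-ins for a real software-composition-analysis tool querying a vulnerability database.

```python
# Hypothetical advisory data: package name -> versions known to be vulnerable.
# A real setup would query a vulnerability database instead of this dict.
KNOWN_VULNERABLE: dict[str, set[str]] = {
    "examplelib": {"1.0.0", "1.0.1"},
}


def review_suggestion(deps: dict[str, str]) -> tuple[bool, list[str]]:
    """Scan the dependencies an AI suggestion introduces before accepting it.

    `deps` maps package name -> pinned version. Returns (accept, problems).
    Only suggestions that pass should be accepted, so an insecure component
    is never reinforced as a "good" suggestion.
    """
    problems = [
        f"{name}=={version}"
        for name, version in sorted(deps.items())
        if version in KNOWN_VULNERABLE.get(name, set())
    ]
    return (not problems, problems)


if __name__ == "__main__":
    print(review_suggestion({"examplelib": "1.0.0"}))  # (False, ['examplelib==1.0.0'])
    print(review_suggestion({"examplelib": "2.0.0"}))  # (True, [])
```

Rejecting a flagged suggestion breaks both halves of the loop. The insecure component stays out of the code base, and the rejection is recorded as negative rather than positive feedback.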

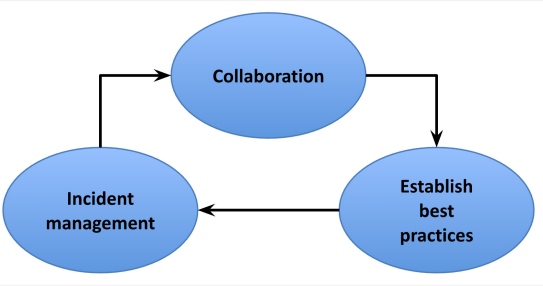

Former Uber CISO Speaks Out, After 6 Years, on Data Breach, SolarWinds

Sullivan says the key mistake he made was not bringing in third-party investigators and counsel to review how his team handled the breach. "The thing we didn't do was insist that we bring in a third party to validate all of the decisions that were made," he says. "I hate to say it, but it's more CYA." Now Sullivan advises other CISOs and companies on navigating their responsibilities in disclosing breaches, especially as the new Securities and Exchange Commission (SEC) incident reporting requirements are set to take effect. Sullivan says he welcomes the new regulations. "I think anything that pushes towards more transparency is a good thing," he says. He recalls that when he served on former President Barack Obama's Commission on Enhancing National Cybersecurity, he pushed to give companies immunity if they were transparent early on during security incidents. That hasn't happened until now, according to Sullivan, who says the jury is still out on the new regulations, which will require action starting in December.

Quote for the day:

"The distance between insanity and genius is measured only by success." -- Bruce Feirstein