When designing an AI product, always keep in mind that machine learning will sometimes produce its worst possible results. The “go back” (fallback) solution for the worst results is therefore usually as important as, and often more important than, the design for the best results. Once users feel disappointed or frustrated, they will readily give up on the feature or even the entire product, and that is difficult to recover from. A more important principle, then, is that if you lack confidence in the machine's intelligence, design the product so that the user always has a “go back” option. How to clearly communicate the benefits of artificial intelligence to users, and how to provide elegant solutions for errors that may arise at any time, is a challenge for designers. ... All of the “intelligent” products on the market have a long way to go before reaching true intelligence. At this stage, the most important thing for artificial intelligence products is to build user trust, perhaps starting with small tasks such as accurately forecasting the weather, playing the correct music, and setting the alarm the user wants.
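The “go back” principle can be sketched as a confidence gate: act automatically only when the model is confident, and otherwise hand the decision back to the user with a short list of candidates. This is a minimal illustration, not a prescribed design; the names `Prediction`, `Suggestion`, and `suggest`, the 0.8 threshold, and the assumption that the model's confidence scores are reasonably calibrated are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Prediction:
    label: str
    confidence: float  # assumed calibrated probability (0..1) that `label` is right


@dataclass
class Suggestion:
    action: str              # what the product will do, or "ask_user"
    automatic: bool          # True if the system acts without asking
    alternatives: list[str]  # the "go back" path: options the user can pick instead


def suggest(predictions: Sequence[Prediction],
            threshold: float = 0.8,
            max_alternatives: int = 3) -> Suggestion:
    """Hypothetical confidence gate: automate only above `threshold`."""
    ranked = sorted(predictions, key=lambda p: p.confidence, reverse=True)
    if not ranked:
        # Nothing to offer: fall back to asking the user directly.
        return Suggestion(action="ask_user", automatic=False, alternatives=[])
    best = ranked[0]
    if best.confidence >= threshold:
        # Confident: act, but still expose other options so the user can undo.
        others = [p.label for p in ranked[1:1 + max_alternatives]]
        return Suggestion(action=best.label, automatic=True, alternatives=others)
    # Not confident: do not act; present the top candidates and let the user choose.
    candidates = [p.label for p in ranked[:1 + max_alternatives]]
    return Suggestion(action="ask_user", automatic=False, alternatives=candidates)
```

For a music request, `suggest([Prediction("jazz", 0.92), Prediction("rock", 0.05)])` plays jazz while keeping "rock" one tap away, whereas two low-confidence guesses produce a choice for the user instead of a wrong song.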

Passion For Banking Innovation Fueled By Fintech, Big Tech Disruptors

To be competitive in the changing financial marketplace, banks and credit unions must provide mobile and online banking solutions that exceed people’s expectations. While consumers are increasingly satisfied with basic digital services provided by most traditional institutions, there are higher expectations around how institutions must help people reach their financial goals. Meeting higher digital banking expectations could provide a way for banks and credit unions to monetize financial solutions, much as Amazon provides a higher, monetized option with Amazon Prime. The key will be to actually provide an enhanced level of value that digital consumers crave. Unfortunately, while financial institutions hold a massive amount of consumer data, very few draw insights from that raw material, certainly not in a way that significantly improves the customer experience. Without a differentiated experience, the door is open for those organizations that can combine advanced technologies with real-time insights and contextual messaging and engagement.

Welcome to the City 4.0

Applied to cities, digitalization can not only improve efficiency by minimizing the waste of time and resources but also improve a city’s productivity, secure growth, and drive economic activity. The Finnish capital of Helsinki is currently in the process of proving this. An early adopter of smart city technology and modeling, it launched the Helsinki 3D+ project to create a three-dimensional representation of the city using reality capture technology provided by the software company Bentley Systems for geocoordination, evaluation of options, modeling, and visualization. ... The three-dimensional mesh created by Bentley’s reality modeling software is linked to the IoT-enabled infrastructure components via Siemens’ cloud-based IoT operating system, MindSphere. The city’s underlying infrastructure layer (energy, water, transportation, security, buildings, and healthcare) thus provides data that is fed into a common data layer to enable analytics as well as preventive and prescriptive measures. MindSphere is capable of managing huge quantities of data.
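To make the idea of a common data layer concrete, here is a toy sketch in which readings from several infrastructure domains land in one shared store, and a simple rule flags assets for preventive maintenance. It does not use or imitate MindSphere's actual API; the `Reading` and `CommonDataLayer` classes, the asset identifiers, and the moving-average rule are hypothetical illustrations of the general pattern.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Reading:
    domain: str    # e.g. "energy", "water", "transportation"
    asset_id: str  # hypothetical identifier of a physical asset
    metric: str    # e.g. "temperature_c"
    value: float


class CommonDataLayer:
    """Toy stand-in for a shared data layer fed by every infrastructure domain."""

    def __init__(self) -> None:
        # One time series per (domain, asset, metric), in arrival order.
        self._series: dict[tuple[str, str, str], list[float]] = defaultdict(list)

    def ingest(self, reading: Reading) -> None:
        self._series[(reading.domain, reading.asset_id, reading.metric)].append(reading.value)

    def flag_for_maintenance(self, metric: str, limit: float,
                             window: int = 3) -> list[tuple[str, str]]:
        """Return (domain, asset_id) pairs whose recent average of `metric` exceeds `limit`."""
        flagged = []
        for (domain, asset_id, m), values in self._series.items():
            # Require a full window so a single spike does not trigger maintenance.
            if m == metric and len(values) >= window and mean(values[-window:]) > limit:
                flagged.append((domain, asset_id))
        return sorted(flagged)
```

Because every domain writes to the same store, one query can look across water pumps, transformers, and traffic signals at once, which is the point of a shared layer rather than per-department silos.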

The Bitcoin White Paper's Birth Date Should Give Us All a Scare

The bitcoin paper was initially greeted with skepticism by the handful of people who actually read it, and even after Bitcoin was operationalized on January 3, 2009, it was largely ignored for the first year of its existence. Bitcoin hardly got off to an auspicious start. However, Bitcoin steadily attracted more use and interest, and a growing group of people began to see that Satoshi's solution to the long-bedeviling "double-spending problem" in computer science could also serve as a cornerstone for a new and better financial system. As I suggested in 2014, regulatory reform would fail to fundamentally address the root causes of the financial crisis and other problems embedded in traditional finance. Regulations enacted in the wake of a crisis are too often easily rolled back once the waters have calmed, and it can be difficult to sustain the momentum of social movements focused on abstruse subjects like financial system reform.
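The double-spending problem is that digital money, being just data, can be copied and spent twice. Bitcoin tracks unspent transaction outputs (UTXOs) and treats each one as spendable exactly once. The sketch below shows only that bookkeeping rule on a single ledger; it deliberately leaves out signatures and, more importantly, the proof-of-work consensus that lets many untrusting nodes agree on which of two conflicting transactions came first, which is the actual heart of Satoshi's solution. The class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutPoint:
    txid: str   # hypothetical transaction identifier
    index: int  # which output of that transaction


class UtxoLedger:
    """Single-node ledger: each unspent output may be consumed exactly once."""

    def __init__(self) -> None:
        self.unspent: dict[OutPoint, int] = {}  # outpoint -> amount in satoshis

    def add_output(self, outpoint: OutPoint, amount: int) -> None:
        self.unspent[outpoint] = amount

    def apply(self, txid: str, inputs: list[OutPoint], outputs: list[int]) -> bool:
        # Reject duplicate inputs and inputs that are unknown or already spent.
        if len(set(inputs)) != len(inputs) or any(i not in self.unspent for i in inputs):
            return False
        # Reject transactions that create more value than they consume.
        if sum(outputs) > sum(self.unspent[i] for i in inputs):
            return False
        # Spend the inputs, then record the new outputs as unspent.
        for i in inputs:
            del self.unspent[i]
        for n, amount in enumerate(outputs):
            self.unspent[OutPoint(txid, n)] = amount
        return True
```

Once a transaction consumes an output, a second transaction pointing at the same output is rejected because it is no longer in the unspent set.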

Crash Course: SAML 101 and Identity Federation (With Ping Identity)

Single sign-on allows users to enter their credentials once and have them apply to all relevant applications. More specifically, federated identity uses single sign-on to establish employee and user identity and then, as the user attempts to access applications, transparently and securely conveys that identity to each application. This allows users and employees to skip the usual log-in step and enjoy a seamless digital workplace experience. SAML is part of this standards-based identity federation: it alleviates log-in issues by enabling single sign-on and the secure exchange of authentication and authorization information between security domains. At its most basic, when a user attempts to access a service provider that uses an identity federation solution, the federation software creates a SAML authentication request and delivers it to the appropriate identity provider. The identity provider authenticates the user and creates its own SAML assertion representing the user's identity and attributes.
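The first step described above, the service provider creating an authentication request and delivering it to the identity provider, is commonly done with the SAML 2.0 HTTP-Redirect binding: the `AuthnRequest` XML is compressed with raw DEFLATE, base64-encoded, and sent as the URL-encoded `SAMLRequest` query parameter. The sketch below shows only that encoding, plus the reverse step an identity provider performs. The entity ID and URLs are hypothetical placeholders, and the request is unsigned; a production deployment would normally rely on a maintained SAML library and handle signing, metadata, and assertion validation.

```python
import base64
import datetime
import uuid
import zlib
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical endpoints, for illustration only.
SP_ENTITY_ID = "https://sp.example.com/metadata"
ACS_URL = "https://sp.example.com/saml/acs"
IDP_SSO_URL = "https://idp.example.com/sso"


def build_authn_request(sp_entity_id: str, acs_url: str, idp_sso_url: str) -> str:
    """Return a minimal, unsigned SAML 2.0 AuthnRequest as an XML string."""
    request_id = "_" + uuid.uuid4().hex  # xs:ID values must not start with a digit
    issue_instant = datetime.datetime.now(datetime.timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return (
        '<samlp:AuthnRequest xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol" '
        'xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion" '
        f'ID="{request_id}" Version="2.0" IssueInstant="{issue_instant}" '
        f'Destination="{idp_sso_url}" AssertionConsumerServiceURL="{acs_url}" '
        'ProtocolBinding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST">'
        f"<saml:Issuer>{sp_entity_id}</saml:Issuer>"
        "</samlp:AuthnRequest>"
    )


def redirect_url(authn_request_xml: str, idp_sso_url: str, relay_state: str = "") -> str:
    """HTTP-Redirect encoding: raw DEFLATE, then base64, then URL-encode."""
    compressor = zlib.compressobj(wbits=-15)  # negative wbits = raw DEFLATE, no zlib header
    deflated = compressor.compress(authn_request_xml.encode("utf-8")) + compressor.flush()
    params = {"SAMLRequest": base64.b64encode(deflated).decode("ascii")}
    if relay_state:
        params["RelayState"] = relay_state  # opaque value the SP gets back after login
    return f"{idp_sso_url}?{urlencode(params)}"


def decode_saml_request(url: str) -> str:
    """Reverse the encoding, as the identity provider would on receipt."""
    query = parse_qs(urlparse(url).query)
    deflated = base64.b64decode(query["SAMLRequest"][0])
    return zlib.decompress(deflated, -15).decode("utf-8")
```

The browser is simply redirected to the URL from `redirect_url`; after authenticating the user, the identity provider answers with its own SAML assertion (typically via HTTP-POST to the `AssertionConsumerServiceURL`), which this sketch does not cover.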

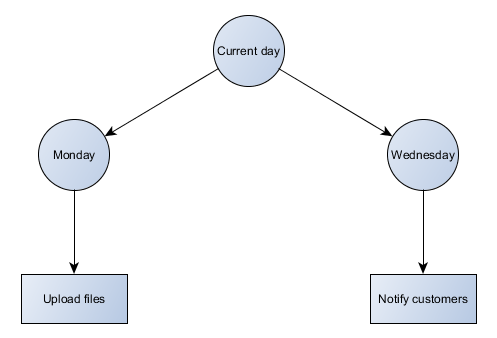

Why businesses must take a strategic view of automation

To drive automation initiatives, Capgemini said business leaders need a bold vision and a clear roadmap to build momentum and bring the organisation behind them. The report stated: “Automation is a technology solution to business transformation, and hence both business and technology leadership should be engaged actively from day one. Automation needs to be tackled as an end-to-end strategic transformation programme as opposed to a series of tactical deployments. Also, to maximise the benefits and ROI [return on investment] of automation investments, it is essential that processes and business models are standardised and optimised before they are enabled by automation, robotics, and artificial intelligence.” Capgemini also urged businesses to consider establishing a centre of excellence for automation to help drive change across the business.

Emotet malware gang is mass-harvesting millions of emails in mysterious campaign

Ever since last summer, Emotet has been growing, and growing, and growing, both in capabilities and in the number of victims it has infected. The malware has become so ubiquitous that the US Department of Homeland Security issued a security advisory over the summer, warning companies about the threat that Emotet poses to their networks. The danger comes from the multitude of smaller modules that Emotet downloads once it gains an initial foothold. Some of these modules, such as its SMB-based spreader that moves laterally through networks, can wreak havoc inside large organizations. Furthermore, Emotet never comes alone, often dropping even more potent threats such as the TrickBot infostealer, remote access trojans, or, in the worst-case scenarios, ransomware. A notorious case is the city of Allentown, where an Emotet infection spread to every corner of the city's network and downloaded even more malware; in the end, the municipality decided to pay nearly $1 million to rebuild its infrastructure from scratch.

Right-to-repair smartphone ruling loosens restrictions on industrial, farm IoT

The new ruling may not give farmers ownership of their farming data, but at least they now have the right to bypass DRM and fix their own machines, or to hire independent repair services to do the job, instead of paying “dealer prices” to the vendors’ own repair crews. Per Motherboard, the new ruling “allows breaking digital rights management (DRM) and embedded software locks for ‘the maintenance of a device or system … in order to make it work in accordance with its original specifications’ or for ‘the repair of a device or system … to a state of working in accordance with its original specifications.’” From my perspective, this is indeed a win, but far from a complete victory. Farmers still aren’t allowed to hack into their own tractors to turn them into drag racers (that might be fun to watch!), but at least they can do whatever they need to do to make sure the machines aren’t falling down on the job.

Medical Device Security Best Practices From Mayo Clinic

"Because of the way that some of these devices are built so well, from a physical standpoint, you can use some of these machines for 10 or 20 years," he says in an interview with Information Security Media Group. "We're going to have to figure out how we can manage the software over that lifespan as well and make sure that that stays secure." If that cannot be done, he says, "we're going to have to figure out some way to be able to just box things off into a separate area where we've got them isolated, we've increased the monitoring of them and are able to use a lot of other compensating controls." Everyone is looking for a silver bullet, an easy solution to device security, he acknowledges. "We have companies all the time calling us trying to sell us a whole box of silver bullets. But it's going to take a combination of user education (so that people who use these devices on patients have a better cybersecurity awareness) and healthcare delivery organizations implementing compensating controls and having good security practices, as well as the vendors having security by design."

Cybersecurity culture: Arrow in CIOs' quiver to fight cyberthreats

"The companies that we've seen successfully change their culture have someone who owns [cybersecurity] culture," Pearlson said after a talk at the SIM Boston Technology Leadership Summit held at Gillette Stadium in Foxborough, Mass., on Tuesday. "Their job is to make sure that the word and the behaviors and the values and the attitudes and the beliefs are adjusted and informed." An important piece of advice: the executive tasked with fostering a cybersecurity culture should be separate from the chief information security officer, because the CISO has a much bigger portfolio, Pearlson said. Pearlson, along with MIT Sloan colleagues Matt Maloney and Keman Huang, gave CIOs at the SIM event a glimpse into their recent research on cybersecurity, which includes learning as much as they can about how attackers interact on the dark web and how to defend against attacks that target weaknesses in people and software.

Quote for the day:

"Challenges in life always seek leaders and leaders seek challenges." -- Wayde Goodall