The Future Of Fintech: The New Normal After The Covid-19 Crisis

For banks, the new normal marks the end of fintech experimentation. Over the past few years, banks have been obsessed with fintech partnerships. It’s been a way of convincing themselves (and their boards) that they’re innovating and not getting left behind as the industry undergoes a digital transformation. Too many of these efforts, however, have had little impact on the strategic direction, organizational culture, and bottom-line results of the institution. According to Louise Beaumont: “For banks, partnerships won’t generate the quantum leap they need to move beyond a product-centric mentality to deliver next-generation services. At best, they may gain a workable solution that squats awkwardly in the existing infrastructure. At worst, they’ll fail to deliver any noticeable difference.” Many so-called partnerships (a good number of which aren’t partnerships at all, just vendor arrangements) are examples of what Jason Henrichs of Fintech Forge likes to call the “fintech petting zoo.” The luxury of experimenting with fintech is gone. Banks will need to accelerate their investments in fintech to achieve both the top-line increases and the expense reductions needed to maintain margins and profitability.

ACLU sues Clearview AI claiming the company's tech crosses ethical bounds

The ACLU alleges that, by using face recognition technology, Clearview has captured more than 3 billion faceprints from images available online, all without the knowledge or consent of those pictured. "Clearview claims that, through this enormous database, it can instantaneously identify the subject of a photograph with unprecedented accuracy, enabling covert and remote surveillance of Americans on a massive scale," the ACLU said. "This technology is so dangerous, in fact, that this little-known startup 'might end privacy as we know it'." The organization also said that Clearview has "created the nightmare scenario that we've long feared, and has crossed the ethical bounds that many companies have refused to even attempt" and accused the company of building a mass database of billions of faceprints without knowledge or consent. "Neither the United States government nor any American company is known to have ever compiled such a massive trove of biometrics," it wrote. "Adding fuel to the fire, Clearview sells access to a smartphone app that allows its customers (and even those using the app on a trial basis) to upload a photo of an unknown person and instantaneously receive a set of matching photos."

GoodData and Visa: A common data-driven future?

One of the initiatives GoodData is taking to help organizations go from dashboards to data-driven applications is the Accelerator Toolkit, which combines a UI library for faster, customized data analytics with educational resources. Stanek mentioned that GoodData plans to launch a GoodData University initiative soon, to offer more resources to empower organizations. Another noteworthy development for GoodData is the evolution of its Semantic Layer data model. A new modeling tool by GoodData aims to improve collaboration between engineers and analysts and to streamline the start-up process for enterprise data products. Stanek initially referred to this as an attempt to establish a single version of the truth. This, however, has always been an elusive goal. While improving collaboration between engineers and analysts is commendable, organizations can more pragmatically aim to establish shared data models among user groups rather than global ones. Stanek did not sound short of ambition, and our conversation touched upon a number of topics. If you want to listen to it in its entirety, make sure to subscribe to the Orchestrate all the Things podcast, where it will be released soon.

Building the foundation for a strong fintech ecosystem in Saudi Arabia

Prior to COVID-19 and the sudden need for global digitalisation it brought, Saudi Arabia already had the potential to build a strong fintech network. It is the largest economy in the region: its stock market is worth around US$549 billion, it accounted for over half of the region’s total gross domestic product (GDP) in 2018, and it is a member of the Group of Twenty (G20); this year, in fact, Saudi Arabia holds the G20 presidency. Saudi Arabia also has a very young population: 70 percent of the population in 2017 was under 30 years old. It is also a very tech-savvy nation; according to a report by EY, it has the third-highest smartphone usage globally and ranks seventh globally in household internet access. This, coupled with the ongoing economic initiatives and investments under Saudi Vision 2030, has put Saudi Arabia’s fintech prospects and future growth at the forefront. ... Saudi Arabia has an opportunity to further solidify its position and one day become a leader in fintech. As part of Vision 2030, it has already laid the foundation for an environment that not only attracts foreign investment but also provides the tools and guidance to develop its own talent and innovation.

Why Blockchain Needs Kubernetes

Kubernetes and Docker can abstract away, and already have abstracted away, much of the knowledge required to get started. IBM and Corda have containerized their blockchain protocols, and various Ethereum images exist; for added granularity, images for individual network components exist as well, including the Solidity compiler, network stats dashboard, testnets, miner nodes, and block explorers. In time, I expect to see more and more network components containerized and made available. Deploying a blockchain will then be a matter of picking a protocol image and any additional component images, building YAML manifests, and deploying with helm install. While modularity is necessary for designing complex networks and is available for those who need it, the choice overload can and will deter adoption among those who lack the expertise, time, patience, or resources to explore blockchain technology. By packaging the elements of blockchain networks into images that can be deployed and managed, the knowledge required to get started will be democratized for anyone familiar with Docker and Kubernetes.
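
To make the "pick an image, write a manifest, deploy" workflow concrete, here is a minimal Python sketch that generates Kubernetes Deployment manifests for a protocol node and one extra network component. The image names, replica counts, and ports are illustrative assumptions (8545 and 30303 are the conventional Ethereum JSON-RPC and peer-to-peer ports). It emits plain JSON, which kubectl accepts, rather than a Helm chart; a chart would template the same kind of objects.

```python
import json


def node_deployment(name: str, image: str, replicas: int, ports: list[int]) -> dict:
    """Build a Kubernetes Deployment manifest (apps/v1) for one containerized component."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": p} for p in ports],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical protocol image plus a hypothetical block-explorer component image.
components = [
    node_deployment("chain-node", "example.registry/chain-node:1.0", 3, [8545, 30303]),
    node_deployment("block-explorer", "example.registry/block-explorer:1.0", 1, [8080]),
]

# kubectl understands a "List" object, so all components can live in one file.
manifest = {"apiVersion": "v1", "kind": "List", "items": components}
print(json.dumps(manifest, indent=2))
```

Saving the output to a file (for example `blockchain.json`, a hypothetical name) and running `kubectl apply -f blockchain.json` would create both Deployments. A real network would also need Services, persistent storage, and node configuration that this sketch leaves out.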

COVID-19 is teaching investors a thing or two about how important an opportunity “edtech” is

In spite of the billions invested across the world in the latest and greatest innovations, technology hasn’t been able to stop, or meaningfully affect, the spread of COVID-19, something embarrassing to us all. As a result, investors broadly have decided to back industries and technologies that historically received significantly less funding. As an example, we at Perlego have received five times more approaches from new venture capitalists (VCs) and angels since the lockdown. I believe this is for one of two reasons: either they want to help shape the society of the future, or they’ve seen failures in the likes of medicine, education and ecotech during this time and see these as the new fintechs of the years to come. Regardless of the reason, what is essential is to place more focus on the sectors that were previously seen as poor relations to their shiny counterparts. Investment, growth and the opportunity to succeed must be further developed; such is the necessity for innovation on a global scale. It’s sad that it has taken a global crisis to trigger this thinking.

Optimizing MDM With Agile Data Governance

The embarrassing truth is that most organizations cannot answer these seemingly simple questions, at least not without serious effort. In addition, many organizations have been reporting erroneous customer figures because different silos and lines of business fail to work cohesively to manage their master data assets. The annual cost and impact of data quality issues rooted in ungoverned data, with little or no formal accountability for critical enterprise data, have pushed many organizations to fix their MDM problem. It’s evident that the need for ‘trusted data’ continues to appear in nearly all data initiatives. However, most organizations are still struggling with their MDM rollout simply because it’s approached through a single lens. It’s one thing to fix the problem by mastering the formerly bad data; it’s another to make the solution sustainable by treating the root problem of disparate common data. The value of a ‘stewardship culture’ around data assets cannot be overemphasized. For MDM to be sustainable and properly implemented, it must be positioned in a governed environment where stewardship of the mastered data and the associated culture of data governance are both in place.
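
The difference between mastering bad data once and keeping it mastered can be illustrated with a toy golden-record merge. In this Python sketch, the silo names, the email-based matching rule, and the survivorship rules are all assumptions chosen for brevity; real MDM tools use far richer matching. Records whose values conflict are flagged for a data steward rather than silently overwritten, which is where a stewardship culture comes in.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CustomerRecord:
    source: str             # line-of-business silo (hypothetical names below)
    email: str
    name: str
    phone: Optional[str]
    updated: date


def match_key(rec: CustomerRecord) -> str:
    # Simplistic matching rule (assumption): same normalized email means same customer.
    return rec.email.strip().lower()


def master(records: list[CustomerRecord]) -> dict[str, dict]:
    """Merge silo records into one golden record per matched customer."""
    groups: dict[str, list[CustomerRecord]] = {}
    for rec in records:
        groups.setdefault(match_key(rec), []).append(rec)

    golden = {}
    for key, recs in groups.items():
        recs = sorted(recs, key=lambda r: r.updated, reverse=True)  # newest first
        golden[key] = {
            "email": key,
            # Survivorship rule (assumption): the most recently updated name wins...
            "name": recs[0].name,
            # ...and the newest non-empty phone number fills any gaps.
            "phone": next((r.phone for r in recs if r.phone), None),
            "sources": sorted({r.source for r in recs}),
            # Conflicting names are flagged for a data steward, not silently resolved.
            "needs_review": len({r.name for r in recs}) > 1,
        }
    return golden


records = [
    CustomerRecord("billing", "Ana@Example.com", "Ana Diaz", None, date(2020, 1, 5)),
    CustomerRecord("crm", "ana@example.com ", "Ana M. Diaz", "+1-555-0100", date(2020, 3, 2)),
    CustomerRecord("support", "li@example.com", "Li Wei", "+1-555-0199", date(2019, 11, 20)),
]
golden = master(records)
print(len(records), "silo records ->", len(golden), "customers")
```

Running it prints `3 silo records -> 2 customers`: counting raw silo rows would overstate the customer base, which is the kind of erroneous customer figure the paragraph above describes.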

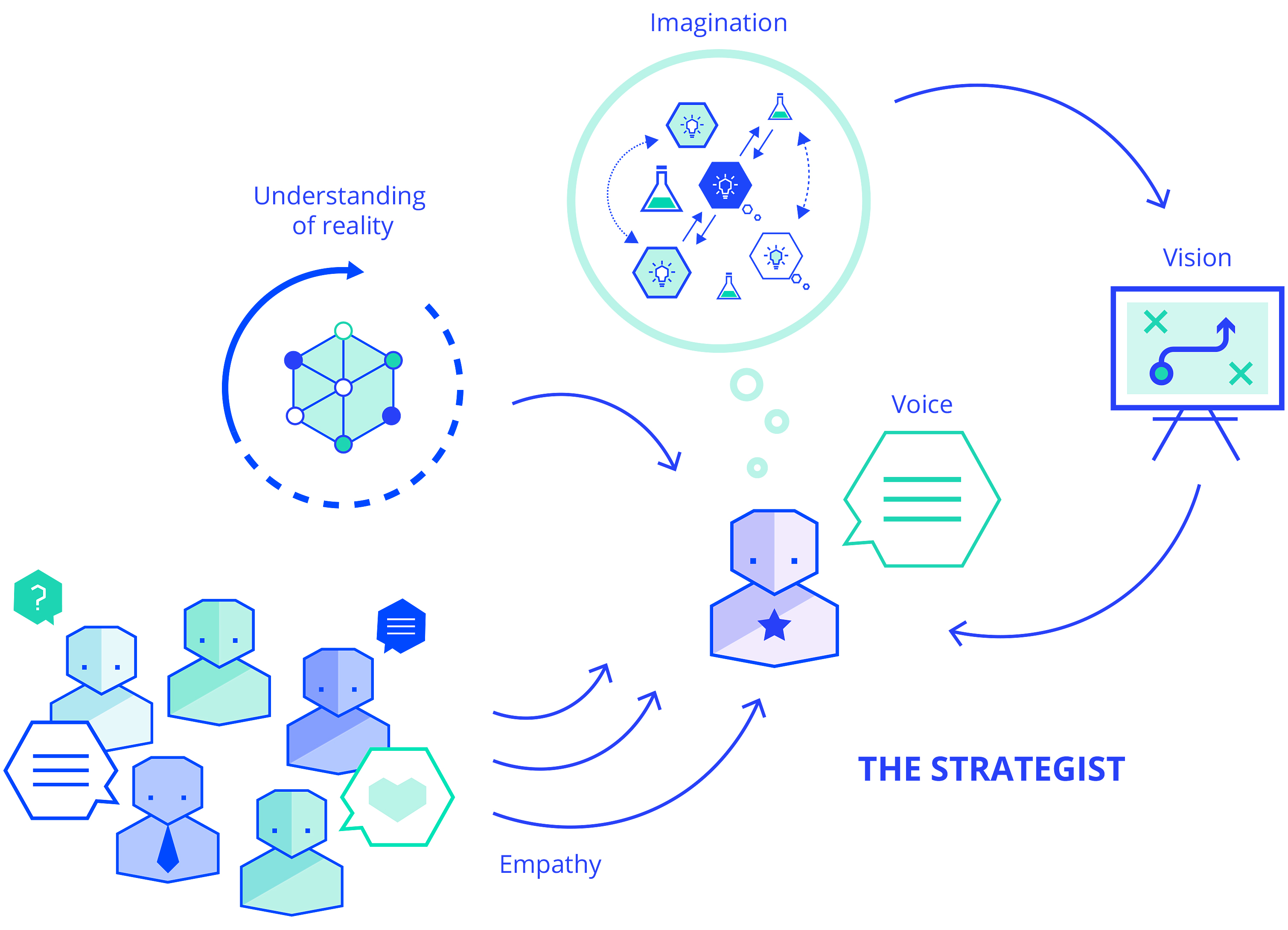

Unify: Architecting the Missing Link in Data Management

No matter what label or acronym the industry uses, it comes down to a simple truth: you need a dose of reality before tackling data management. “All recognize the fact that it is impossible for organizations to physically centralize all their data. Instead, data virtualization lets organizations provide one ‘virtual’ place to go for data consumers to access data and IT to provide it,” says Eve. Next, companies need a strategy to tool up for “next-generation data management.” “Gartner’s advice [is] to consolidate their data management tooling in vendor suites such as TIBCO Unify that combine metadata management, master data management, reference data management, data catalog, data governance, and data virtualization within one integrated solution,” says Eve. Data management should not be an IT problem alone. Businesses can chip in by growing their pool of citizen data engineers and offering business domain advice. “Work together to assess your needs and skills. Then be smart about maximizing the value each side can contribute, for example, IT using TIBCO Data Virtualization to provision hundreds of reusable data services that the business can quickly mix and match to address their changing needs,” says Eve.
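
Data virtualization, as Eve describes it, gives data consumers one place to query while the data stays in its source systems. The following Python sketch shows the idea in miniature. The two sources (an in-memory SQLite table standing in for a CRM and a CSV string standing in for a billing export), the `VirtualLayer` class, and the `billed_by_customer` service are hypothetical illustrations and are not TIBCO's API.

```python
import csv
import io
import sqlite3
from typing import Callable, Iterable

Row = dict


class VirtualLayer:
    """Toy data-virtualization layer: one place to query, data stays in its sources."""

    def __init__(self) -> None:
        self._views: dict[str, Callable[[], Iterable[Row]]] = {}

    def register(self, name: str, fetch: Callable[[], Iterable[Row]]) -> None:
        self._views[name] = fetch

    def query(self, name: str, where: Callable[[Row], bool] = lambda row: True) -> list[Row]:
        # Rows are pulled from the underlying source on every call; nothing is copied
        # into a central store.
        return [row for row in self._views[name]() if where(row)]


# Source 1 (hypothetical): a CRM database, simulated with in-memory SQLite.
crm = sqlite3.connect(":memory:")
crm.row_factory = sqlite3.Row
crm.execute("CREATE TABLE customers (id INTEGER, name TEXT, region TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?, ?)", [(1, "Ana", "EU"), (2, "Li", "APAC")])

# Source 2 (hypothetical): a billing export in CSV format.
BILLING_CSV = "customer_id,amount\n1,120.50\n2,80.00\n1,30.00\n"

layer = VirtualLayer()
layer.register("crm.customers",
               lambda: [dict(r) for r in crm.execute("SELECT id, name, region FROM customers")])
layer.register("billing.invoices", lambda: csv.DictReader(io.StringIO(BILLING_CSV)))


def billed_by_customer(layer: VirtualLayer) -> dict[str, float]:
    """A reusable 'data service' that mixes two virtual views with a client-side join."""
    names = {int(c["id"]): c["name"] for c in layer.query("crm.customers")}
    totals: dict[str, float] = {}
    for inv in layer.query("billing.invoices"):
        name = names[int(inv["customer_id"])]
        totals[name] = totals.get(name, 0.0) + float(inv["amount"])
    return totals


print(billed_by_customer(layer))
```

It prints `{'Ana': 150.5, 'Li': 80.0}`. Because each call re-reads the sources, this design favours freshness and avoids copying data at the cost of query speed; production virtualization engines add query push-down, caching, and access control that this toy omits.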

ZLoader Banking Malware Resurfaces

ZLoader has an element that downloads and runs the banking malware component from its command-and-control server, researchers at Proofpoint say. ZLoader spread in the wild from June 2016 to February 2018, with a group called TA511 (aka MAN1 or Moskalvzapoe) being one of the top threat actors spreading the malware, the report adds. The ZLoader malware uses webinjects to steal credentials, passwords, cookies stored in web browsers, and other sensitive information from customers of banks and financial institutions, according to Proofpoint. The malware then lets hackers connect to the infected system through a virtual network computing (VNC) client, so they can make fraudulent transactions from the user’s device. The researchers note that the latest variant seemed to be missing some of the advanced features of the original ZLoader malware, such as code obfuscation and string encryption. "Hence, the new malware does not appear to be a continuation of the 2018 strain, but likely a fork of an earlier version," the researchers state.

Opening the doors to greater data value with data catalogue

If data isn’t consistent, comprehensive, and accurate, digital transformation efforts may fall short of objectives in a wide range of areas, such as:

Laying the foundations for advanced analytics. Data scientists often spend 80% of their time searching for data and just 20% on actual AI/ML and modeling. A data catalogue reverses the equation by providing quick data discoverability and access to relevant information. That lets data scientists and business analysts use trusted data to deliver the insights needed for data-driven decision-making.

Developing a 360-degree customer experience. Because customer data exists in so many corners of the enterprise, it’s essential for organisations to have a holistic 360-degree view across all sources if they are to truly understand customers as individuals. By identifying all key sources of customer data, a data catalogue provides the foundation for more personalised engagement and improved customer experience.

Supporting and accelerating smooth cloud data migration.
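
A data catalogue stores metadata about datasets (where they live, who owns them, what they contain) rather than the data itself, which is what makes discovery fast. This minimal Python sketch assumes a hypothetical `DataCatalogue` with keyword search over names, tags, and descriptions, plus a `certified` flag standing in for steward-approved trusted data; the dataset names and owners are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    name: str
    system: str              # where the data physically lives
    owner: str               # accountable data owner or steward
    description: str
    tags: set[str] = field(default_factory=set)  # lowercase keywords
    certified: bool = False  # steward-approved "trusted data" flag (assumed convention)


class DataCatalogue:
    """Minimal metadata catalogue: it describes datasets but never stores their rows."""

    def __init__(self) -> None:
        self._entries: list[DatasetEntry] = []

    def register(self, entry: DatasetEntry) -> None:
        self._entries.append(entry)

    def search(self, term: str, certified_only: bool = False) -> list[DatasetEntry]:
        term = term.lower()
        hits = [
            e for e in self._entries
            if term in e.tags or term in e.name.lower() or term in e.description.lower()
        ]
        if certified_only:
            hits = [e for e in hits if e.certified]
        return sorted(hits, key=lambda e: e.name)


catalogue = DataCatalogue()
catalogue.register(DatasetEntry("crm.contacts", "CRM", "sales-ops",
                                "Customer contact details", {"customer", "pii"}, certified=True))
catalogue.register(DatasetEntry("web.sessions", "Clickstream store", "marketing",
                                "Website sessions keyed by visitor ID", {"customer", "behaviour"}))
catalogue.register(DatasetEntry("erp.gl_ledger", "ERP", "finance",
                                "General ledger postings", {"finance"}))

print([e.name for e in catalogue.search("customer")])
print([e.name for e in catalogue.search("customer", certified_only=True)])
```

The first search returns `['crm.contacts', 'web.sessions']`, every registered source of customer data, and the second narrows that to the certified source only.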
