Do programming certifications still matter?

Hiring is one area where programming certifications definitely play a

role. “One of the key benefits of having programming certifications is that

they provide validation of a candidate's skills and knowledge in a particular

programming language, framework, or technology,” says Aleksa Krstic, CTO at

Localizely, a provider of a cloud-based translation platform. “Certifications

can demonstrate that the individual has met certain standards and has the

expertise required to perform a specific job.” For employers, programming

certifications offer several advantages, Krstic says. “They can help streamline

the hiring process, by providing a benchmark for assessing candidates' skills

and knowledge,” he says. “Certifications can also serve as a way to filter out

applicants who do not meet the minimum requirements.” In cases where

multiple candidates are equally qualified, having a relevant certification can

give one candidate an edge over others, Krstic says. “When it comes to

certifications in general, when we see a junior to mid-level developer armed

with programming certifications, it's a big green light for our hiring team,”

says Michał Kierul, CEO of software company SoftBlue.

Overseeing generative AI: New software leadership roles emerge

In addition to line-of-business expertise, the rise of AI will mean there is

also a growing focus on prompt engineering and in-context learning capabilities.

Databricks' Zutshi says, "This is a newer ability for developers to optimize

prompts for large language models and build new capabilities for customers,

further expanding the reach and capability of AI tools." Yet another area where

software leaders will need to take the lead is AI ethics. Software engineering

leaders "must work with, or form, an AI ethics committee to create policy

guidelines that help teams responsibly use generative AI tools for design and

development," Gartner's Khandabattu reports in her analysis. Software leaders

will need to identify and help "to mitigate the ethical risks of any generative

AI products that are developed in-house or purchased from third-party vendors."

Finally, recruiting, developing, and managing talent will also get a boost from

generative AI, Khandabattu adds. Generative AI applications can speed up hiring

tasks, such as performing a job analysis and transcribing interview summaries.

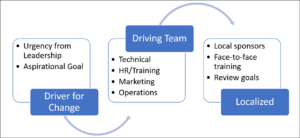

Generative Agile Leadership: What The Fourth Industrial Revolution Needs

Expanding the metaphor of the head, heart and hands, I've developed eight

generative agile leadership (GAL) principles; they are the structure needed to

create resilient teams of happy, contributing people who amplify satisfied

customers and deliver outcomes for a thriving business. ... The GAL principles

come from Peter Senge's learning organization and Ron Westrum’s organizational

cultures. The learning organization is an adaptive entity that expands the

capabilities of people and the whole system. The generative model is a

performance-oriented organizational culture to ensure that people have high

trust and low blame to increase the ability to express new ideas. ... The

great-person leadership style, which holds that leaders are born and not made,

will not age well in the 4IR. The human-centered generative leadership

model is the best approach to leading the four generations. The GAL principles

are rooted in the idea that leaders should help their employees grow and

develop as individuals. Generative leaders focus on creating a learning

environment and providing their employees with opportunities to reach their

full potential.

IT Must Clean Up Its Own Supply Chain

At the end of our supply chain “clean up” exercise, we were pleased that we

had gained a good handle on our vendor services and products. This would

enable us to operate more efficiently. We were also determined to never fall

into this supply chain quagmire again! To avoid that, we created a set of

ongoing supply chain management practices designed to maintain our supply

chain on a regular basis. We met regularly with vendors, designed a “no

exceptions” contract review as part of every RFP process, and no longer

settled for boilerplate vendor contracts that didn’t have expressly stated

SLAs. We also made it a point to attend key vendor conferences and to actively

participate in vendor client forums, because we believed it would give us an

opportunity to influence vendor product and service directions so they could

better align with our own. End to end, this exercise consumed time and

resources, but it succeeded in capturing our attention. Attention to IT supply

chains is even more relevant today as IT increasingly gets outsourced to the

cloud.

‘Data poisoning’ anti-AI theft tools emerge — but are they ethical?

Hancock said genAI development companies are waiting to see how aggressive “or

not” government regulators will be with IP protections. “I suspect, as is

often the case, we’ll look to Europe to lead here. They’re often a little more

comfortable protecting data privacy than the US is, and then we end up

following suit,” Hancock said. To date, government efforts to address IP

protection against genAI models are at best uneven, according to Litan. “The

EU AI Act proposes a rule that AI model producers and developers must disclose

copyright materials used to train their models. Japan says AI generated art

does not violate copyright laws,” Litan said. “US federal laws on copyright

are still non-existent, but there are discussions between government officials

and industry leaders around using or mandating content provenance standards.”

Companies that develop genAI are more often turning away from indiscriminate

scraping of online content and instead purchasing content to ensure they don’t

run afoul of IP statutes. That way, they can offer customers purchasing their

AI services reassurance they won’t be sued by content creators.

SEC sues SolarWinds and its CISO for fraudulent cybersecurity disclosures

The SolarWinds case could act as a pivotal point for the role of a CISO,

transforming it into one that requires a lot more scrutiny and responsibility.

"SolarWinds incident highlights the responsibility of CISOs of publicly listed

companies in not only managing the cyberattacks but also proactively informing

customers and investors about their cybersecurity readiness and controls,"

said Pareekh Jain, chief analyst at Pareekh Consulting. "This lawsuit

highlights that there were red flags earlier that the CISO failed to disclose.

This will make corporations and CISOs take notice and take proactive security

disclosure more seriously similar to how CFOs take financial information

disclosure seriously." "There are many unknowns here; we don’t know if the

CISO 'succumbed' to pressure from other leaders or if he was complicit in the

hack," said Agnidipta Sarkar, vice president for CISO Advisory at ColorTokens

Inc. "In either case, he is the target. But the reality is that the CISO is a

very complex role. We are constantly required to navigate internal politics

and pushbacks, and unless you are on your toes, you will be at the mercy of

external forces at a scale no other CXO is exposed to."

Why adaptability is the new digital transformation

Sustainability and resilience are mature management disciplines because a lot

of attention has been paid to developing strategies and implementing solutions

to address them. When it comes to adaptability, however, apart from agile

methodologies and adaptation as it relates to climate change, there’s very

little to learn from in terms of the body of work, which is why I addressed

this issue in “A Guide to Adaptive Government: Preparing for Disruption.”

Adaptive systems and resilient systems are often confused and thought of as

interchangeable, but there’s a vast difference between the two concepts.

Whereas an adaptive system restructures or reconfigures itself to best operate

in and optimize for the ambient conditions, a resilient system often simply

has to restore or maintain an existing steady state. In addition, whereas

resilience is a risk management strategy, adaptability is both a risk

management and an innovation strategy. The philosophy behind adaptive systems

is more about innovation than risk management. It assumes from the start that

there are no steady state conditions to operate within, but that the external

environment is constantly changing.

Bringing Harmony to Chaos: A Dive into Standardization

Companies with different engineering teams working on various products often

emphasize the importance of standardization. This process helps align large

teams, promoting effective collaboration despite their diverse focuses. By

ensuring consistency in non-functional aspects, such as security, cost,

compliance and observability, teams can interact smoothly and operate in

harmony, even with differing priorities. Standardizing these non-functional

elements is key for maintaining system strength and resilience. It helps in

setting consistent guidelines and practices across the company,

minimizing conflicts. The aim is to seamlessly integrate standardization

within these elements to improve adaptability and consistency. However,

achieving this standardization isn’t easy. Differences in operational methods

can lead to inconsistencies. ... The aim of standardization is to create

smooth and uniform processes. However, achieving this isn’t always easy.

Challenges arise from different team goals, changing technology and the

tendency to unnecessarily create something new.

When tightly managing costs, smart founders will be rigorous, not ruthless

Instead of ruthless, indiscriminate cost-cutting, it is wise to be very frugal

about what doesn’t matter while you continue maintaining or even moderately

investing in the things that do matter. When making cuts, never lose sight of

your people. They’re anxious about the future, and you can’t expect to add

more stress and excessive demands to already-stressed workers. ... The

outright elimination of things like team lunches, in-person meetings and

little daily perks creates instant animosity. Thoughtful cuts instead create

visible and tangible reminders of the current environment, especially when

considering how important in-person gatherings are to sustaining a robust

culture in a remote work environment. Instead of quarterly in-person employee

meetups, move to annual and replace the others with a DoorDash gift card and a

video meeting. Curtailing all travel — both sales calls and team meetups — not

only hurts morale, it allows justifiable excuses for missed targets, lost

deals and churned customers.

A Beginner's Guide to Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation is a method that combines the powers of large

pre-trained language models (like the one you're interacting with) with

external retrieval or search mechanisms. The idea is to enhance the capability

of a generative model by allowing it to pull information from a vast corpus of

documents during the generation process. ... RAG has a range of potential

applications, and one real-life use case is in the domain of chat

applications. RAG enhances chatbot capabilities by integrating real-time data.

Consider a sports league chatbot. Traditional LLMs can answer historical

questions but struggle with recent events, like last night's game details. RAG

allows the chatbot to access up-to-date databases, news feeds and player bios.

This means users receive timely, accurate responses about recent games or

player injuries. For instance, Cohere's chatbot provides real-time details

about Canary Islands vacation rentals — from beach accessibility to nearby

volleyball courts. Essentially, RAG bridges the gap between static LLM

knowledge and dynamic, current information.
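
The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the documents, team names and player name are made up, retrieval uses a simple bag-of-words cosine similarity rather than the embedding search a real system would likely use, and the final call to a language model is left out, so the sketch stops at building the augmented prompt.

```python
import math
import re
from collections import Counter

# Hypothetical, made-up snippets standing in for a league's up-to-date data feed.
DOCUMENTS = [
    "Last night the Hawks beat the Comets 3-1 in the league final.",
    "Striker Ana Ruiz injured her ankle in training and will miss two weeks.",
    "The league was founded in 1998 with eight teams.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=2):
    """Prepend the retrieved context to the question (the 'augmented' part)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # The resulting prompt would be sent to a language model of your choice.
    print(build_prompt("Who won the game last night?", DOCUMENTS, k=1))
```

The point of the design is that the model's static knowledge is never updated; only the prompt changes, so refreshing the document store is enough to keep answers current.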

Quote for the day:

“Vulnerability is the birthplace of

innovation, creativity, and change.” -- Brené Brown