5 Cybersecurity Tactics To Protect The Cloud

Best practices to protect companies’ operations in the cloud are guided by three

fundamental questions. First, who is managing the cloud? Many companies are

moving towards a Managed Service Provider (MSP) model that includes the

monitoring and management of security devices and systems, known as the Managed

Security Service Provider (MSSP) model. At a basic level, security services offered

include managed firewall, intrusion detection, virtual private network,

vulnerability scanning and anti-malware services, among others. Second, what is

the responsibility shift in this model? There is always a shared responsibility

between companies and their cloud infrastructure providers for managing the

cloud. This applies to private, public, and hybrid cloud models. Typically,

cloud providers are responsible for the infrastructure as a service (IaaS) and

platform as a service (PaaS) layers while companies take charge of the

application layer. Companies are ultimately responsible for deciding the user

management concept for business applications, such as the user identity

governance for human resources and finance applications.

Yugabyte CTO outlines a PostgreSQL path to distributed cloud

30 years ago, open source [databases were not] the norm. If you told people,

“Hey, here’s an open source database,” they’re going to say, “Okay? What does

that mean? What is it? What does it really mean? And why should I be excited?”

And so on. I remember because at Facebook I was a part of the team that built an

open source database called Cassandra, and we had no idea what would happen. We

thought “Okay, here’s this thing that we’re putting out in the open source, and

let’s see what happens.” And this is in 2007. Back in that day, it was important

to use a restrictive license — like GPL — to encourage people to contribute and

not just take stuff from the open source and never give back. So that’s the

reason why a lot of projects ended up with GPL-like licenses. Now, MySQL did a

really good job in adhering to these workloads that came in the web back then.

They were tier two workloads initially. These were not super critical, but over

time they became very critical, and the MySQL community aligned really well and

that gave them their speed. But over time, as you know, open source has become a

staple. And most infrastructure pieces are starting to become open source.

Introducing MVISION Cloud Firewall – Delivering Protection Across All Ports and Protocols

McAfee MVISION Cloud Firewall is a cutting-edge Firewall-as-a-Service solution

that enforces centralized security policies for protecting the distributed

workforce across all locations, for all ports and protocols. MVISION Cloud

Firewall allows organizations to extend comprehensive firewall capabilities to

remote sites and remote workers through a cloud-delivered service model,

securing data and users across headquarters, branch offices, home networks and

mobile networks, with real-time visibility and control over the entire network

traffic. The core value proposition of MVISION Cloud Firewall is characterized

by a next-generation intrusion detection and prevention system that utilizes

advanced detection and emulation techniques to defend against stealthy threats

and malware attacks with industry best efficacy. A sophisticated next-generation

firewall application control system enables organizations to make informed

decisions about allowing or blocking applications by correlating threat

activities with application awareness, including Layer 7 visibility of more than

2000 applications and protocols.

How Data Governance Improves Customer Experience

Customer journey orchestration allows an organization to meaningfully modify and

personalize a customer’s experience in real-time by pulling in data from many

sources to make intelligent decisions about what options and offers to provide.

While this sounds like a best-case scenario for customers and company alike, it

requires data sources to be unified and integrated across channels and

environments. This is where good data governance comes into play. Even though

many automation tasks may fall in a specific department like marketing or

customer service, the data needed to personalize and optimize any of those

experiences is often coming from platforms and teams that span the entire

organization. Good data governance helps to unify all of these sources,

processes and systems and ensures customers receive accurate and impactful

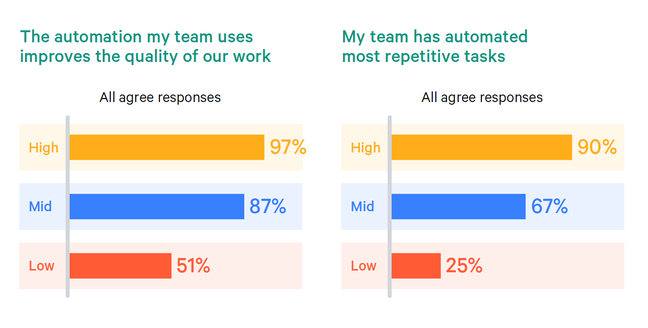

personalization within a wide range of experiences. As you can see, data

governance can have a major influence over how the customer experience is

delivered, measured and enhanced. It can help teams work better together and

help customers get more personalized service.

The Life Cycle of a Breached Database

Our continued reliance on passwords for authentication has contributed to one

toxic data spill or hack after another. One might even say passwords are the

fossil fuels powering most IT modernization: They’re ubiquitous because they are

cheap and easy to use, but that means they also come with significant trade-offs

— such as polluting the Internet with weaponized data when they’re leaked or

stolen en masse. When a website’s user database gets compromised, that

information invariably turns up on hacker forums. There, denizens with computer

rigs that are built primarily for mining virtual currencies can set to work

using those systems to crack passwords. How successful this password cracking is

depends a great deal on the length of one’s password and the type of password

hashing algorithm the victim website uses to obfuscate user passwords. But a

decent crypto-mining rig can quickly crack a majority of password hashes

generated with MD5 (one of the weaker and more commonly-used password hashing

algorithms).
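
To make the point about hashing algorithms concrete, here is a minimal Python sketch that contrasts an unsalted MD5 digest with a salted, deliberately slow key-derivation function from the standard library. The function names and the iteration count are illustrative assumptions, not something taken from the article, and PBKDF2 is used only as one example of a slower scheme.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Illustrative work factor (an assumption); tune it for your own hardware.
PBKDF2_ITERATIONS = 200_000


def md5_hash(password: str) -> str:
    """Unsalted MD5: cheap to compute, so cheap to brute-force. Shown for contrast only."""
    return hashlib.md5(password.encode("utf-8")).hexdigest()


def slow_hash(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Salted PBKDF2-HMAC-SHA256: every guess costs PBKDF2_ITERATIONS rounds."""
    if salt is None:
        salt = os.urandom(16)  # a fresh random salt per password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS
    )
    return salt, digest


def verify(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = slow_hash(password, salt)
    return hmac.compare_digest(digest, expected)
```

Because the MD5 digest has no salt, identical passwords always produce identical hashes, which lets an attacker test one guess against every leaked account at once. The salted variant forces a separate, much more expensive computation per account.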

Zero Trust Adoption Report: How does your organization compare?

From the wide adoption of cloud-based services to the proliferation of mobile

devices. From the emergence of advanced new cyberthreats to the recent sudden

shift to remote work. The last decade has been full of disruptions that have

required organizations to adapt and accelerate their security transformation.

And as we look forward to the next major disruption—the move to hybrid work—one

thing is clear: the pace of change isn’t slowing down. In the face of this rapid

change, Zero Trust has risen as a guiding cybersecurity strategy for

organizations around the globe. A Zero Trust security model assumes breach and

explicitly verifies the security status of identity, endpoint, network, and

other resources based on all available signals and data. It relies on contextual

real-time policy enforcement to achieve least privileged access and minimize

risks. Automation and machine learning are used to enable rapid detection,

prevention, and remediation of attacks using behavior analytics and large

datasets.
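
The "explicitly verify, then grant least privilege" idea can be sketched as a small deny-by-default policy check. This is only an illustration under stated assumptions: the `AccessRequest` fields, the `ALLOWED_SCOPES` table, and the `MAX_NETWORK_RISK` threshold are all hypothetical and do not come from the report or from any particular product.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple


@dataclass(frozen=True)
class AccessRequest:
    user_id: str
    mfa_verified: bool      # identity signal
    device_compliant: bool  # endpoint signal
    network_risk: float     # hypothetical score from 0.0 (low) to 1.0 (high)
    requested_scope: str    # e.g. "read" or "write"


# Hypothetical least-privilege grants: each user gets only the scopes listed.
ALLOWED_SCOPES: Dict[str, Set[str]] = {
    "alice": {"read", "write"},
    "bob": {"read"},
}
MAX_NETWORK_RISK = 0.5  # assumed threshold


def evaluate(request: AccessRequest) -> Tuple[bool, str]:
    """Deny by default: every signal is checked on every request."""
    if not request.mfa_verified:
        return False, "identity not verified"
    if not request.device_compliant:
        return False, "endpoint not compliant"
    if request.network_risk > MAX_NETWORK_RISK:
        return False, "network risk too high"
    if request.requested_scope not in ALLOWED_SCOPES.get(request.user_id, set()):
        return False, "scope exceeds least-privilege grant"
    return True, "allowed"
```

For example, `evaluate(AccessRequest("bob", True, True, 0.1, "write"))` is denied even though every signal is healthy, because `bob` was never granted the `write` scope.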

Container Technology Complexity Drives Kubernetes as a Service

The reason why managed Kubernetes is now gaining traction is obvious, according

to Brian Gracely, senior director product strategy for Red Hat OpenShift. He

pointed out that containers and Kubernetes are relatively new technologies, and

that managed Kubernetes services are even newer. This means that until recently,

companies that wanted or needed to deploy containers and use Kubernetes had no

choice but to invest their own resources in developing in-house expertise. "Any

time we go through these new technologies, it's early adopters that live through

the shortfalls of it, or the lack of features or complexity of it, because they

have an immediate problem they're trying to solve," he said. Like Galabov,

Gracely thinks that part of the move towards Kubernetes as a Service is

motivated by the fact that many enterprises are already leveraging managed

services elsewhere in their infrastructure, so doing the same with their

container deployments only makes sense. "If my compute is managed, my network is

managed and my storage is managed, and we're going to use Kubernetes, my natural

inclination is to say, 'Is there a managed version of Kubernetes?' as opposed to

saying, 'I'll just run software on top of the cloud,'" he said. "That's sort of

a normal trend."

NIST calls for help in developing framework managing risks of AI

"While it may be impossible to eliminate the risks inherent in AI, we are

developing this guidance framework through a consensus-driven, collaborative

process that we hope will encourage its wide adoption, thereby minimizing these

risks," Tabassi said. NIST noted that the development and use of new AI-based

technologies, products and services bring "technical and societal challenges and

risks." "NIST is soliciting input to understand how organizations and

individuals involved with developing and using AI systems might be able to

address the full scope of AI risk and how a framework for managing these risks

might be constructed," NIST said in a statement. NIST is specifically looking

for information about the greatest challenges developers face in improving the

management of AI-related risks. NIST is also interested in understanding how

organizations currently define and manage characteristics of AI trustworthiness.

The organization is similarly looking for input about the extent to which AI

risks are incorporated into organizations' overarching risk management,

particularly around cybersecurity, privacy and safety.

Studies show cybersecurity skills gap is widening as the cost of breaches rises

The worsening skills shortage comes as companies are adopting breach-prone

remote work arrangements in light of the pandemic. In its report today, IBM

found that the shift to remote work led to more expensive data breaches, with

breaches costing over $1 million more on average when remote work was indicated

as a factor in the event. By industry, data breaches in health care were most

expensive at $9.23 million, followed by the financial sector ($5.72 million) and

pharmaceuticals ($5.04 million). While lower in overall costs, retail, media,

hospitality, and the public sector experienced a large increase in costs versus

the prior year. “Compromised user credentials were the most common root cause of

data breaches,” IBM reported. “At the same time, customer personal data like

names, emails, and passwords was the most common type of information leaked — a

dangerous combination that could provide attackers with leverage for future

breaches.” IBM says that it found that “modern” security approaches reduced

expenses, with AI, security analytics, and encryption being the top three

mitigating factors.

Exploring BERT Language Framework for NLP Tasks

BERT (Bidirectional Encoder Representations from Transformers) is an open-source

machine learning framework used to train a baseline NLP model that streamlines

downstream NLP tasks. This framework is used for language

modeling tasks and is pre-trained on unlabelled data. BERT is particularly

useful for neural network-based NLP models, which make use of left and right

layers to form relations to move to the next step. BERT is based on the

Transformer, a path-breaking model introduced in 2017 that identifies the important

words in a sentence in order to predict the next word. Toppling the earlier NLP

frameworks which were limited to smaller data sets, the Transformer could

establish larger contexts and handle issues related to the ambiguity of the

text. Following this, the BERT framework performs exceptionally on deep

learning-based NLP tasks. BERT enables the NLP model to understand the semantic

meaning of a sentence such as “The market valuation of XX firm stands at XX%” by

reading it bi-directionally (right to left and left to right), and it helps in

predicting the next sentence.
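
To show what reading bi-directionally means in practice, the toy Python sketch below contrasts the context a left-to-right model can see when predicting a hidden word with the context available to a bidirectional model. It does not run BERT or any real model; the helper names are hypothetical, and whitespace splitting stands in for a real tokenizer.

```python
from typing import List, Tuple

MASK = "[MASK]"  # placeholder token for the hidden word


def mask_token(tokens: List[str], index: int) -> List[str]:
    """Return a copy of the sentence with the token at `index` hidden."""
    masked = list(tokens)
    masked[index] = MASK
    return masked


def left_to_right_context(tokens: List[str], index: int) -> List[str]:
    """A left-to-right model only sees the words before the hidden one."""
    return list(tokens[:index])


def bidirectional_context(tokens: List[str], index: int) -> Tuple[List[str], List[str]]:
    """A bidirectional model sees the words on both sides of the hidden one."""
    return list(tokens[:index]), list(tokens[index + 1:])


# The example sentence from the text, split on whitespace.
tokens = "The market valuation of XX firm stands at XX%".split()
hidden = 2  # position of "valuation"

masked_sentence = mask_token(tokens, hidden)
left_only = left_to_right_context(tokens, hidden)
left, right = bidirectional_context(tokens, hidden)
```

With "valuation" hidden, the left-to-right view contains only "The market", while the bidirectional view also includes "of XX firm stands at XX%", which is the extra context that helps disambiguate the missing word.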

Quote for the day:

“Whenever you find yourself on the side of the majority, it is time to pause and reflect.” -- Mark Twain