Under the new proposal, individuals applying for a visa to enter the United States would be required to disclose their social media usernames (though not passwords), a change that would affect nearly 15 million people per year, according to the AP report. The proposal would require applicants to disclose five years’ worth of usernames on platforms listed in the application form (Facebook, Twitter, etc.), while providing a separate field for applicants to volunteer usernames on platforms not specifically listed. Previous implementations of this rule applied only to people individually identified for additional background checks, which the AP report puts at about 65,000 people annually. The idea of collecting social media information from visa applicants dates to the Obama administration, following the 2015 San Bernardino attack.

Everything You Were Afraid to Ask About Crypto Taxes

Many cryptocurrency investors have made a fortune over the past several years selling high-flying bitcoin and other cryptocurrencies for cash. Unfortunately, far too many of them in the U.S. did not report this taxable income to the IRS. The agency figures that hundreds of thousands of U.S. residents did not report income from sales or exchanges of cryptocurrency, and that it might be able to collect several billion dollars in back taxes, penalties, and interest. ... The character of the gain or loss generally depends on whether the virtual currency is a capital asset in the hands of the taxpayer. If it is, the taxpayer generally realizes a capital gain or loss on the sale; if not, the taxpayer realizes an ordinary gain or loss. The distinction is more than academic. ... When a taxpayer successfully mines virtual currency, the fair market value of the virtual currency as of the date of receipt is includable in gross income.
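To make the capital-versus-ordinary distinction and the mining rule concrete, here is a minimal Python sketch. It is a simplified illustration only: it assumes gain or loss is simply proceeds minus basis and ignores holding periods, fees and lot selection. The `Disposal` type, the function names and all dollar figures are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Disposal:
    proceeds: Decimal       # USD received when the coin is sold or exchanged
    basis: Decimal          # USD cost of the coin (hypothetical, simplified)
    is_capital_asset: bool  # is the coin a capital asset in the taxpayer's hands?


def gain_or_loss(d: Disposal) -> tuple[Decimal, str]:
    """Return (amount, character); a negative amount is a loss."""
    amount = d.proceeds - d.basis
    character = "capital" if d.is_capital_asset else "ordinary"
    return amount, character


def mining_income(units: Decimal, fmv_per_unit_at_receipt: Decimal) -> Decimal:
    """Gross income from mined coins: fair market value as of the date of receipt."""
    return units * fmv_per_unit_at_receipt


if __name__ == "__main__":
    # Investor sells coins bought for $4,000 for $15,000 in cash.
    print(gain_or_loss(Disposal(Decimal("15000"), Decimal("4000"), True)))
    # -> (Decimal('11000'), 'capital')
    # Miner receives 0.5 coin on a day it trades at $8,000 per coin.
    print(mining_income(Decimal("0.5"), Decimal("8000")))  # -> 4000.0
```

The same $11,000 would be ordinary rather than capital if `is_capital_asset` were `False`, which is why the article calls the distinction more than academic.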

Don’t get surprised by the cloud’s data-egress fees

Keep this in mind: Most companies that use public clouds pay these fees for day-to-day transactions, such as moving data from cloud-based storage to on-premises storage. Those just starting out with cloud won’t feel the sting of these fees, but advanced users could end up pushing and pulling terabytes of data to and from their cloud provider and receive a significant egress bill. The amounts are rarely large enough to break the budget, but egress fees are often overlooked in business planning and when calculating the ROI of cloud hosting. Indeed, for at least the next few years, IT organizations will be working to make their cloud-based applications and data work and play well with on-premises data. That means a lot of data will move back and forth, and that means higher egress fees. My best advice is to put automated cost-usage and cost-governance tools in place so you understand what’s being charged, and for which services.
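A rough way to see how egress charges scale with volume is to price outbound traffic against a tiered rate card. The minimal Python sketch below assumes such a card; the tier sizes and per-GB rates in `HYPOTHETICAL_EGRESS_TIERS` are placeholders, not any provider's actual prices, and real bills also depend on destination, region and service.

```python
# HYPOTHETICAL rate card: (size of tier in GB, USD per GB).
# Placeholder numbers for illustration only; use your provider's published pricing.
HYPOTHETICAL_EGRESS_TIERS = [
    (10_240, 0.09),        # first 10 TB
    (40_960, 0.085),       # next 40 TB
    (float("inf"), 0.07),  # everything beyond 50 TB
]


def monthly_egress_cost(gb_out: float, tiers=HYPOTHETICAL_EGRESS_TIERS) -> float:
    """Estimate a month's egress bill, charging each slice of traffic at its tier's rate."""
    remaining = gb_out
    cost = 0.0
    for tier_size, rate in tiers:
        billed = min(remaining, tier_size)  # traffic that falls into this tier
        cost += billed * rate
        remaining -= billed
        if remaining <= 0:
            break
    return round(cost, 2)


if __name__ == "__main__":
    # A light user moving 500 GB vs. an advanced user moving 20 TB back on premises.
    print(monthly_egress_cost(500))     # 45.0
    print(monthly_egress_cost(20_480))  # 1792.0
```

Feeding in real rates and the transfer volumes reported by monitoring turns this into a quick sanity check on what the cost-governance tools are showing.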

Agile’s dark secret? IT has little need for the usual methodologies

Buy-when-you-can/build-when-you-have-to is profoundly different. When IT implements multiple COTS/SaaS packages, existing databases make the job harder, not easier. Each package comes with its own database, and when software vendors design their databases they don’t take the ones you already have into account, because they can’t. They also don’t take into account the ones other vendors have already designed, because why would they? So when IT implements a COTS package, it has to track down every existing database that stores the same data the new application manages: not to take advantage of them, but to figure out how to keep the overlapping data synchronized. When it comes to managing data, internal development and package implementations are completely different.
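Keeping overlapping data synchronized usually means reconciling the same records across two package databases. The minimal Python sketch below assumes a simple last-writer-wins rule based on a per-record `updated_at` timestamp; the `Record` type, the `reconcile` function and the "CRM"/"ERP" stores are hypothetical, and real integrations often need field-level merge rules and conflict review instead.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Record:
    email: str            # a field both packages happen to store
    updated_at: datetime  # hypothetical: each system stamps its last change


def reconcile(a: dict[str, Record], b: dict[str, Record]) -> tuple[dict[str, Record], dict[str, Record]]:
    """Return (updates_for_a, updates_for_b) so both stores converge on the newest copy of each key."""
    updates_for_a: dict[str, Record] = {}
    updates_for_b: dict[str, Record] = {}
    for key in a.keys() | b.keys():
        ra, rb = a.get(key), b.get(key)
        if ra is None:
            updates_for_a[key] = rb  # only b has the record: copy it to a
        elif rb is None:
            updates_for_b[key] = ra  # only a has the record: copy it to b
        elif ra.updated_at > rb.updated_at:
            updates_for_b[key] = ra  # a changed more recently: push to b
        elif rb.updated_at > ra.updated_at:
            updates_for_a[key] = rb  # b changed more recently: push to a
        # Equal timestamps: nothing is changed; differing data would need manual review.
    return updates_for_a, updates_for_b


if __name__ == "__main__":
    crm = {  # hypothetical package database 1
        "c1": Record("old@example.com", datetime(2019, 1, 1)),
        "c2": Record("x@example.com", datetime(2019, 2, 1)),
    }
    erp = {  # hypothetical package database 2
        "c1": Record("new@example.com", datetime(2019, 3, 1)),
        "c3": Record("y@example.com", datetime(2019, 1, 5)),
    }
    to_crm, to_erp = reconcile(crm, erp)
    print(sorted(to_crm))  # ['c1', 'c3']
    print(sorted(to_erp))  # ['c2']
```

Last-writer-wins is used here only because it is easy to show; it silently discards the older edit, which is why equal-timestamp cases are left for a person or a richer rule to resolve.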

Cyber threat to energy infrastructure, Kaspersky Lab research finds

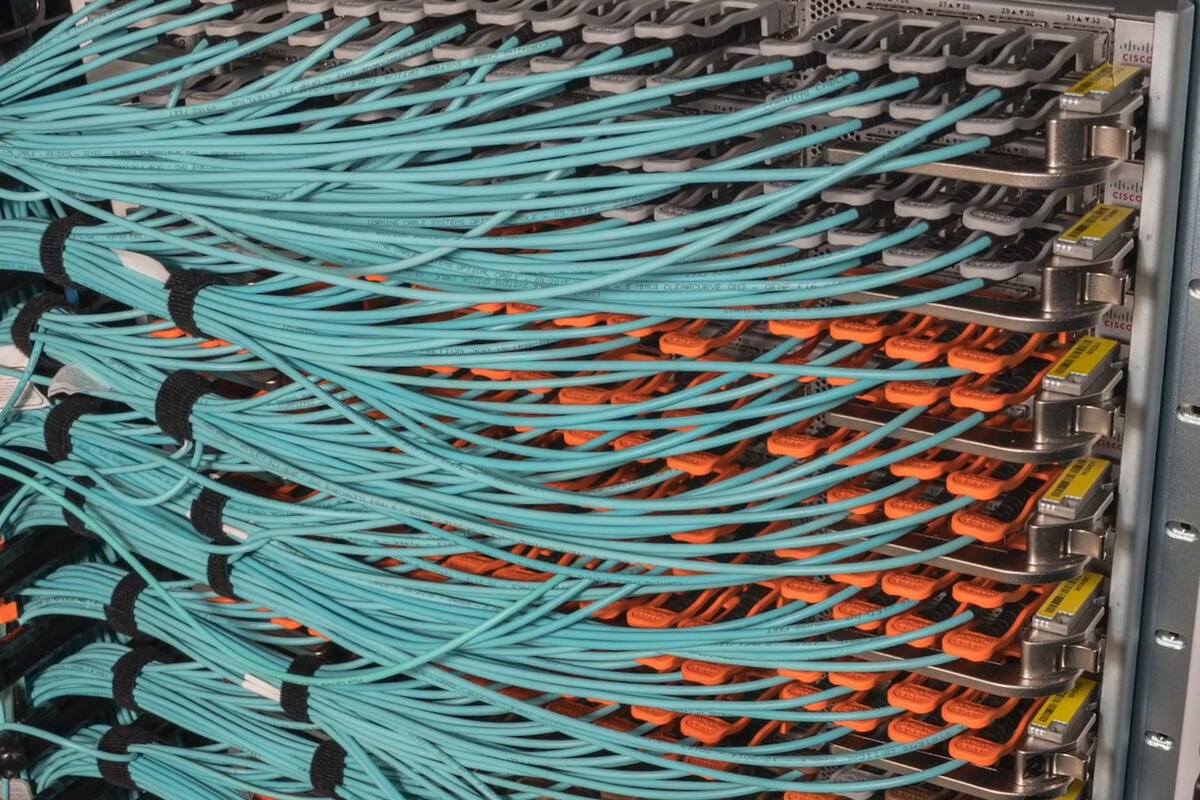

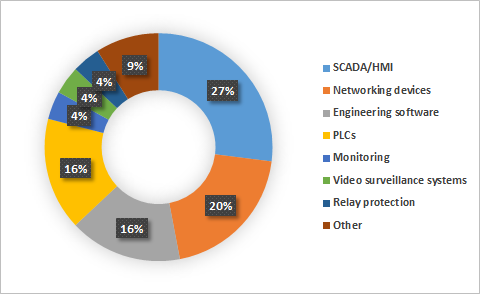

Cyber security incidents and targeted attacks over the past couple of years, along with regulatory initiatives, make a strong case for power and energy companies to start adopting cyber security products and measures for their operational technology (OT) systems. Moreover, the modern power grid is one of the most extensive systems of interconnected industrial objects, with a large number of computers connected to the network and a relatively high degree of exposure to cyber threats, as Kaspersky Lab ICS CERT statistics demonstrate. The high percentage of attacked ICS computers in engineering and ICS integration businesses is another serious problem, given that the supply chain attack vector has been used in some devastating attacks in recent years.

ICS cybersecurity: The missing ingredient in the IoT growth equation

There’s a lot to be gained by adopting connected IoT or IIoT technologies within OT networks and industrial control system (ICS) environments. By combining common internet protocols with the cost savings of connected terminals, industrial operations can use real-time analytics and multisite connectivity to improve efficiency across numerous industrial verticals. So why have ICS practitioners and stakeholders not adopted these new technologies? One word: security. As OT networks integrate more intelligence, such as intelligent human-machine interfaces and cloud SCADA, ICS practitioners are unable to reconcile the new security risks this creates. Because OT networks control critical infrastructure and processes, a network failure inherently carries greater consequences than it does in a typical IT network. Substantial financial loss, environmental damage and even loss of human life are real possibilities when a security breach occurs in the industrial realm.

Why Blockchain adoption has stalled in financial services and banking

To be sure, there's no shortage of hype and hope about what blockchain could do for banks and other financial services firms. As The Financial Times detailed, banks could use blockchain technology for everything from recording and updating customer identities to the clearing and settlement of loans and securities. ... The reality of blockchain, however, is that they aren't. While there are patches of blockchain activity (Northern Trust using a distributed ledger to manage private equity deals in Guernsey, and ING attempting to build a blockchain for agricultural commodities), Penny Crosman, reporting for American Banker, has declared that adoption has "stalled" for a variety of reasons. The first, ironically, is that most banks still don't have a clear business case for using it. Beyond confusion over why banks should be using blockchain at all, there are also concerns about security, legal issues, and the immaturity of the technology itself.

Data theft is the foremost threat for an insurance company

For an insurance company, data theft is the major threat. “For example, whenever a policy comes up for renewal, the policyholders start receiving calls from multiple companies, mostly your competitors. Here, despite all the security measures in place, the human factor remains the weakest link,” Dhanodkar explains. “It’s imperative to equip people, including employees, customers and third-party vendors, with adequate awareness of the latest threats associated with the digitized economy. Mere basic security awareness will not be effective unless that knowledge is kept up to date. The Aadhaar Act also has many implications for the security framework, and we are planning a series of workshops on Aadhaar Act compliance requirements for executives,” he said. The CISO’s role demands a sound understanding of technology and business. “Being a CISO, I cannot keep blocking everything but must act as an enabler,” he added.

Should software developers have a code of ethics?

Teaching people to ask the right questions involves understanding what the questions are and recognizing that everyone’s values are different, says Burton; some individuals have no problem working on software that runs nuclear reactors, or developing targeting systems for drones, or smart bombs, or military craft. “The truth is, we’ve been here before, and we’re already making strides toward mitigating risks and unintended consequences. We know we have to be really careful about how we’re using some of these technologies. It’s not even a question of can we build it anymore, because we know the technology and capability is out there to build whatever we can think of. The questions should be around should it be built, what are the fail-safes, and what can we do to make sure we’re having the least harmful impact we can?” he says. Burton believes, despite the naysayers, that AI, machine learning and automation can actually help solve these ethical problems by freeing up humans to contemplate more fully the impacts of the technology they’re building.

How utility industries can leverage location data, AI and IoT

Organizations are looking at how AI and IoT can reduce costs, drive efficiencies, enhance competitive advantage and support emerging business models. Some technical innovations from the mainstream IT world, or from other industries, are also creating opportunities to leverage technology that did not previously exist in the industry. In the past, the industry pursued a siloed approach to applications and technologies, characterized by the separation of engineering and operations groups from IT and by the use of stand-alone, best-of-breed applications within the overall scope of IT. As ubiquitous connectivity continues to permeate technology sectors, the industry increasingly needs to unite energy technologies, operational technologies (such as sensors and smart devices) and IT (such as big data, advanced analytics and asset performance management [APM]) with consumer technologies.

Quote for the Day:

"If you're not failing once in a while, it probably means you're not stretching yourself." -- Lewis Pugh