All You Need to Know About Unsupervised Reinforcement Learning

Unsupervised learning can be considered an approach to learning from huge
amounts of unannotated data, while reinforcement learning can be considered an
approach to learning from very small amounts of data. Combining these two
learning methods gives unsupervised reinforcement learning, which is essentially
an improvement on standard reinforcement learning. In this article, we are going
to discuss unsupervised reinforcement learning in detail, along with its special
features and application areas. ... When we talk about the basic process
followed by unsupervised learning, we define objective functions on the data so
that the process can categorize unannotated, or unlabeled, data. Unsupervised
learning can help with various problems, including the following: label
creation, annotation, and maintenance is a changing discipline that also
requires a lot of time and effort; many domains, such as law, medicine, and
ethics, require expertise for annotation; and in reinforcement learning,
annotating rewards is also difficult and often ambiguous.
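To make "define objective functions so the process can categorize unlabeled data" concrete, here is a minimal sketch using k-means clustering. This is plain unsupervised learning, not unsupervised reinforcement learning. The objective is the within-cluster sum of squared distances (often called inertia), and the algorithm alternates between assigning points and moving centroids to reduce it. The function names, the 2-D point format, and the sample data are all illustrative assumptions.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two 2-D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def kmeans(points, k, iters=100, seed=0):
    """Group unlabeled 2-D points into k clusters by reducing inertia."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise from k data points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: every point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append((sum(x for x, _ in members) / len(members),
                                      sum(y for _, y in members) / len(members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster in place
        if new_centroids == centroids:  # converged: assignments are stable
            break
        centroids = new_centroids
    return labels, centroids


def inertia(points, labels, centroids):
    """The objective k-means minimises: total squared distance to own centroid."""
    return sum(squared_distance(p, centroids[lab]) for p, lab in zip(points, labels))


if __name__ == "__main__":
    # Hypothetical unlabeled data: two obvious groups, no labels given.
    data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    labels, centroids = kmeans(data, k=2)
    print(labels, inertia(data, labels, centroids))
```

No labels are supplied. The "categories" emerge only from minimising the objective, which is the sense in which the objective function drives the categorization.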

Explainable AI (XAI) Methods Part 1 — Partial Dependence Plot (PDP)

Partial Dependence (PD) is a global and model-agnostic XAI method. Global
methods give a comprehensive explanation of the entire data set, describing the
impact of feature(s) on the target variable in the context of the overall data.
Local methods, on the other hand, describe the impact of feature(s) at the level
of individual observations. Model-agnostic means that the method can be applied
to any algorithm or model. Simply put, a PDP shows the marginal effect or
contribution of individual feature(s) to the predictions of your black-box
model ...

Unfortunately, PDP is not some magic wand that you can wave on any occasion. It
rests on a major assumption, the so-called assumption of independence, which is
the biggest issue with PD plots. ... “If the feature for which you computed the
PDP is not correlated with the other features, then the PDPs perfectly represent
how the feature influences the prediction on average. In the uncorrelated case,
the interpretation is clear: The partial dependence plot shows how the average
prediction in your dataset changes when the j-th feature is changed.”
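The quoted definition ("how the average prediction in your dataset changes when the j-th feature is changed") can be computed directly. The minimal sketch below assumes rows are plain dicts and the model is any callable, which fits the model-agnostic framing. The function name and the toy model are hypothetical. The comment in the loop marks where the independence assumption enters.

```python
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]


def partial_dependence(predict: Callable[[Row], float], rows: Sequence[Row],
                       feature: str, grid: Sequence[float]) -> List[float]:
    """Average prediction over the dataset for each grid value of `feature`."""
    curve = []
    for value in grid:
        # Force the chosen feature to `value` in every row while all other
        # features keep their observed values. If features are correlated,
        # some of these synthetic rows are unrealistic, which is why the
        # independence assumption matters.
        modified = [{**row, feature: value} for row in rows]
        curve.append(sum(predict(r) for r in modified) / len(modified))
    return curve


def toy_model(r: Row) -> float:
    """Hypothetical black box: linear in x1, quadratic in x2."""
    return 3 * r["x1"] + r["x2"] ** 2


if __name__ == "__main__":
    data = [{"x1": 0, "x2": 1}, {"x1": 1, "x2": 2}, {"x1": 2, "x2": 3}]
    print(partial_dependence(toy_model, data, "x1", [0, 1, 2]))
```

Because the toy model is additive, the curve for `x1` rises by exactly 3 per unit step. The contribution of `x2` only shifts the whole curve by its dataset average.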

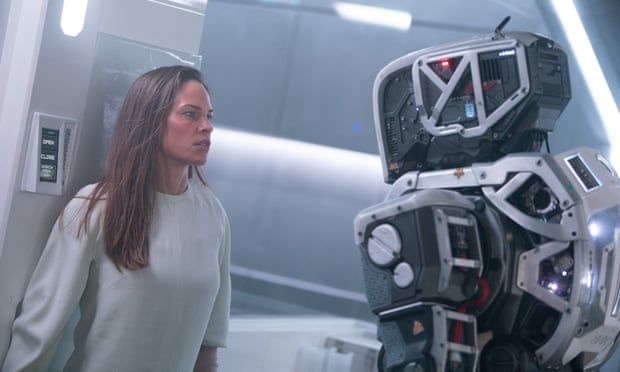

Worried about super-intelligent machines? They are already here

For anyone who thinks that living in a world dominated by super-intelligent
machines is a “not in my lifetime” prospect, here’s a salutary thought: we
already live in such a world! The AIs in question are called corporations. They
are definitely super-intelligent, in that the collective IQ of the humans they
employ dwarfs that of ordinary people and, indeed, often that of governments.
They have immense wealth and resources. Their lifespans greatly exceed those of
mere humans. And they exist to achieve one overriding objective: to increase and
thereby maximise shareholder value. To achieve that, they will relentlessly do
whatever it takes, regardless of ethical considerations or collateral damage to
society, democracy or the planet. One such super-intelligent machine is called
Facebook. ... “We connect people. Period. That’s why all the work we do in
growth is justified. All the questionable contact importing practices. All the
subtle language that helps people stay searchable by friends. All of the work we
have to do to bring more communication in. The work we will likely have to do in
China some day. All of it.”

Supervised vs. Unsupervised vs. Reinforcement Learning: What’s the Difference?

Reinforcement learning is a technique that provides training feedback using a
reward mechanism. Learning occurs as a machine, or Agent, interacts with an
environment and tries a variety of methods to reach an outcome. The Agent is
rewarded or punished when it reaches a desirable or undesirable State. The Agent
learns which states lead to good outcomes and which are disastrous and must be
avoided. Success is measured by the reward the Agent accumulates, so that the
Agent can iteratively learn to achieve a higher score. One widely used
algorithm, Q-learning, is named after Q, the Agent's estimate of the future
reward of taking a given action in a given State; it is one reinforcement
learning method among many, not another name for the field. Reinforcement
learning can be applied to the control of a simple machine like a car driving
down a winding road. The Agent would observe its current State by taking
measurements such as current speed, direction relative to the road, and
distances to the sides of the road. The Agent can take actions that change its
State, like turning the wheel or applying the gas or brakes.
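The Agent/State/reward loop described above can be sketched with tabular Q-learning, which is one specific algorithm and not reinforcement learning as a whole. A full car simulator is out of scope here, so the sketch assumes a hypothetical stand-in: a five-cell corridor where reaching the last cell gives reward 1. All names and hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative choices.

```python
import random

# Hypothetical toy environment standing in for the car example: a corridor of
# cells 0..4. The Agent starts in cell 0; reaching cell 4 ends the episode
# with reward 1. Every other step gives reward 0.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Apply an action; the left wall keeps the Agent inside the corridor."""
    nxt = min(max(state + action, 0), GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy: usually exploit the best-known action, sometimes explore."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    # Break ties randomly so the untrained Agent does not always move left.
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: q[(s, a)] estimates discounted future reward."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q, state, epsilon, rng)
            nxt, reward, done = step(state, action)
            # The terminal State has no future reward to bootstrap from.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q


if __name__ == "__main__":
    q = q_learning()
    policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
    print(policy)
```

The Agent is never told that "right" is good. It discovers this because reward flows backwards through the Q estimates, so states closer to the goal become more valuable.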

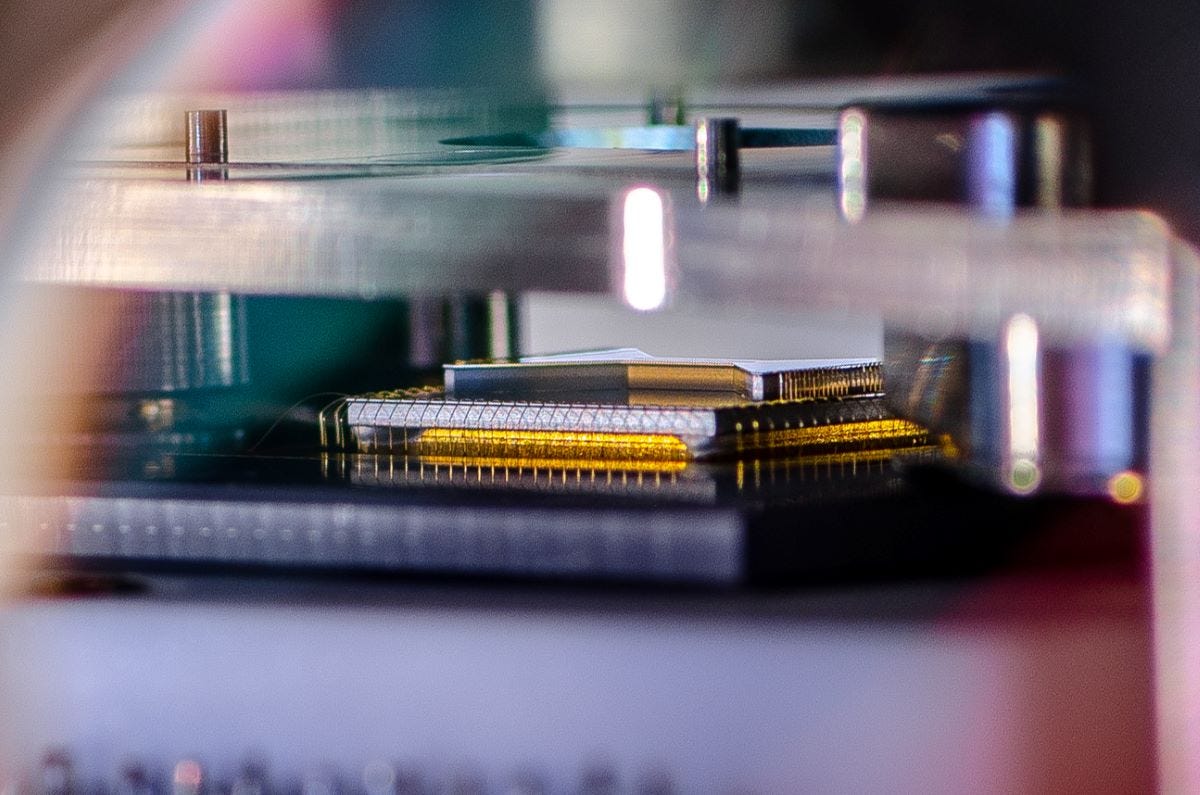

Quantum computers: Eight ways quantum computing is going to change the world

Discovering new drugs takes a long time: scientists mostly adopt a
trial-and-error approach, in which they test thousands of molecules against a
target disease in the hope that a successful match will eventually be found.
Quantum computers, however, have the potential to one day solve the molecular
simulation problem in minutes. The systems are designed to carry out many
calculations at the same time, meaning that they could efficiently simulate even
the most complex interactions between the particles that make up molecules,
enabling scientists to rapidly identify candidates for successful drugs. This
would mean that life-saving drugs, which currently take an average of 10 years
to reach the market, could be designed faster, and much more cost-efficiently.
Pharmaceutical companies are paying attention: earlier this year, healthcare
giant Roche announced a partnership with Cambridge Quantum Computing (CQC) to
support research efforts tackling Alzheimer's disease.

What is a honeypot crypto scam and how to spot it?

Even though a honeypot looks like part of the network, it is isolated and
monitored. Because legitimate users have no motive to access a honeypot, all
attempts to communicate with it are regarded as hostile. Honeypots are
frequently deployed in a network's demilitarized zone (DMZ). This strategy
separates the honeypot from the main production network while keeping it
connected. A honeypot in the DMZ can be monitored from afar while attackers
access it, reducing the danger of a compromised main network. To detect attempts
to infiltrate the internal network, honeypots can also be placed outside the
external firewall, facing the internet. The actual location of a honeypot
depends on how intricate it is, the type of traffic it is meant to attract, and
how close it is to critical business resources. Regardless of where it is
placed, it will always be isolated from the production environment. Logging and
reviewing honeypot activity provides insight into the scale and types of threats
that a network infrastructure confronts, while diverting attackers' attention
away from real assets.
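The core rule ("legitimate users have no motive to access a honeypot, so every connection is hostile") plus the logging step can be sketched as a tiny low-interaction TCP listener. This is an illustrative sketch under stated assumptions, not a deployable honeypot. `run_honeypot` and its log format are hypothetical, and the demo binds to loopback only. A real deployment would sit in the DMZ or outside the external firewall, isolated from production as described above.

```python
import socket
import threading
from datetime import datetime, timezone


def run_honeypot(listener: socket.socket, max_connections: int, log: list) -> None:
    """Hypothetical minimal honeypot loop on an already-listening socket.

    Nothing legitimate is served here, so every connection is recorded as
    hostile, together with the first bytes the client sent.
    """
    for _ in range(max_connections):
        conn, addr = listener.accept()
        with conn:
            conn.settimeout(2.0)  # don't let a silent client stall the loop
            try:
                payload = conn.recv(1024)
            except socket.timeout:
                payload = b""
        log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "source": f"{addr[0]}:{addr[1]}",
            "payload": payload,
            "verdict": "hostile",  # no legitimate user has a reason to connect
        })


if __name__ == "__main__":
    events: list = []
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as srv:
        srv.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        srv.listen()
        port = srv.getsockname()[1]
        worker = threading.Thread(target=run_honeypot, args=(srv, 1, events), daemon=True)
        worker.start()
        # Simulate an attacker probing the decoy service.
        with socket.create_connection(("127.0.0.1", port)) as probe:
            probe.sendall(b"USER admin\r\n")
        worker.join(timeout=5)
    for event in events:
        print(event["source"], event["verdict"], event["payload"])
```

The log is what provides the "insight into the scale and types of threats". In practice it would be shipped to a monitoring system rather than kept in a Python list.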

From DeFi to NFTs to metaverse, digital assets revolution is remaking the world

This decentralised concept offers both opportunities and challenges. How could a
system work among a group of participants (some of whom could be bad apples) if
they were given the option of pseudonymity? Who will update the ledger? How will
we reach a uniform version of the truth? Bitcoin solved many of these
long-standing issues with cryptographic consensus methods, combining private and
public keys with carefully aligned economic incentives. Suppose User A wants to
transfer 1 bitcoin to User B. The transaction data would be authenticated,
verified, and moved to the ‘mempool’ (the memory pool, a holding room for all
unconfirmed transactions), where transactions are collected into groups, or
‘blocks’. One block becomes one entry in the Bitcoin ledger, and around 3,000
transactions will appear in one block. The ledger is updated roughly every 10
minutes on average, and the system converges on the latest single version of the
truth. The next big question is: who in the system gets to write the next entry
in the ledger? That is where the consensus protocol comes into play.
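The mempool-to-block flow described above can be sketched as a toy hash-linked ledger. Each sealed block records the SHA-256 hash of the previous block, so editing an old entry breaks every later link. This is a deliberately simplified, hypothetical model. It has no signatures, no proof of work, and no network. The open question of who is allowed to call `seal_block` is exactly what the consensus protocol answers. `ToyLedger`, `Block`, and their methods are illustrative names.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    prev_hash: str
    transactions: list

    def digest(self) -> str:
        """SHA-256 over a canonical JSON encoding of the block's contents."""
        payload = json.dumps(
            {"index": self.index, "prev_hash": self.prev_hash,
             "transactions": self.transactions},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()


class ToyLedger:
    """Hypothetical ledger: a mempool of pending transactions plus a hash chain."""

    def __init__(self, block_size: int):
        self.block_size = block_size
        self.mempool = []  # unconfirmed transactions wait here
        self.chain = [Block(0, "0" * 64, [])]  # genesis block

    def submit(self, sender: str, receiver: str, amount: float) -> None:
        # Real systems verify signatures and balances first; omitted here.
        self.mempool.append({"from": sender, "to": receiver, "amount": amount})

    def seal_block(self) -> Block:
        """Move up to block_size pending transactions into a new linked block."""
        batch = self.mempool[: self.block_size]
        self.mempool = self.mempool[self.block_size :]
        block = Block(len(self.chain), self.chain[-1].digest(), batch)
        self.chain.append(block)
        return block

    def is_consistent(self) -> bool:
        """Every block must reference the current hash of its predecessor."""
        return all(cur.prev_hash == prev.digest()
                   for prev, cur in zip(self.chain, self.chain[1:]))


if __name__ == "__main__":
    ledger = ToyLedger(block_size=3000)
    ledger.submit("User A", "User B", 1)
    block = ledger.seal_block()
    print(block.index, len(block.transactions), ledger.is_consistent())
```

Changing a transaction in an already-sealed block changes that block's hash, so the next block's `prev_hash` no longer matches and `is_consistent()` reports the tampering.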

The privacy dangers of web3 and DeFi – and the projects trying to fix them

Less discussed is the impact of web3 and DeFi on user privacy. Proponents argue
that web3 will improve user privacy by putting individuals in control of their
data, via distributed personal data stores. But critics say that the transparent
nature of public distributed ledgers, which make transactions visible to all
participants, is antithetical to privacy. “Right now, web3 requires you to give
up privacy entirely,” Tor Bair, co-founder of private blockchain The Secrecy
Network, tweeted earlier this year. “NFTs and blockchains are all
public-by-default and terrible for ownership and security.” Participants in
public blockchains don’t typically need to make their identities known, but
researchers have demonstrated how transactions recorded on a blockchain could be
linked to individuals. A recent paper by researchers at browser maker Brave and
Imperial College London found that many DeFi apps incorporate third-party web
services that can access the users’ Ethereum addresses. “We find that several
DeFi sites rely on third parties and occasionally even leak your Ethereum
address to those third parties – mostly to API and analytics providers,” the
researchers wrote.

The Importance of People in Enterprise Architecture

All the employees in the organization should have a shared understanding of the
overarching future state and be empowered to update the future state for their
part of the whole. The communication and democratization discussed in the AS-IS
section are also necessary for the TO-BE state. People need regular,
self-service access to a continuously evolving future-state architecture
description. Each person should have access that provides views specific to
them and their role, along with links to the other people who will collaborate
to promote that understanding and evolve the design. Progressive companies are
moving away from the plan-build-run mentality, and this is changing the role of
the architecture review board (ARB) operated by a central EA team. ARBs have
traditionally acted as a bureaucratic toll-gate, performing their role after the
design is finished to ensure that all system qualities are accounted for and
that the design is aligned with the future-state approach. However,
democratizing the enterprise architecture role and sharing design autonomy now
requires collaboration during the initial phase of design, at the start of an
increment. This collaboration ensures that the reasoning behind the
enterprise-wide future state is understood and that the desired system qualities
are carefully evaluated.

The Best Way to Manage Unstructured Data Efficiently

Many people focus heavily on data analysis techniques and machine learning
models when building a high-quality ML production pipeline. However, what many
miss is that storage is one of the most important aspects of the pipeline. This
is because the pipeline has three main components (collecting data, storing it,
and consuming it), and storage sits between the other two. Effective storage
methods not only boost storage capabilities but also make collection and
consumption more efficient. Object storage offers easy searching with
customizable metadata, which helps with both. Not only do you want to choose the
correct storage technology, but you also want to choose the correct provider.
AWS comes to mind as one of the best object storage providers, mainly because
its infrastructure provides smooth service and easy scaling. Furthermore, for
effective consumption of data, there must be a software layer that runs on top
of this storage for data aggregation and collection. This is also an important
choice and deserves an article of its own.
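The idea that customizable metadata makes stored objects easier to find, and so helps both collection and consumption, can be illustrated with a toy in-memory object store. This is a hypothetical stand-in written for illustration. It is not the API of AWS or any other provider. The class, method names, and metadata keys (`source`, `label_status`) are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    key: str
    body: bytes
    metadata: dict = field(default_factory=dict)  # user-defined tags


class ToyObjectStore:
    """Hypothetical in-memory object store: key -> bytes plus metadata."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, body: bytes, **metadata) -> None:
        """Collection side: store raw bytes and tag them at write time."""
        self._objects[key] = StoredObject(key, body, dict(metadata))

    def get(self, key: str) -> bytes:
        return self._objects[key].body

    def find(self, **criteria) -> list:
        """Consumption side: keys whose metadata matches every criterion."""
        return sorted(
            obj.key for obj in self._objects.values()
            if all(obj.metadata.get(k) == v for k, v in criteria.items())
        )


if __name__ == "__main__":
    store = ToyObjectStore()
    store.put("raw/img_001.jpg", b"...", source="camera-7", label_status="unlabeled")
    store.put("raw/img_002.jpg", b"...", source="camera-7", label_status="labeled")
    store.put("raw/call_001.wav", b"...", source="call-center", label_status="unlabeled")
    print(store.find(label_status="unlabeled"))
```

Tagging at write time is cheap, and it lets a downstream consumer, such as a labeling job, select exactly the unstructured files it needs without opening them.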

Quote for the day:

"Effective team leaders adjust their style to provide what the group can't
provide for itself." -- Kenneth Blanchard
