Quote for the day:

"Leadership is absolutely about inspiring action, but it is also about guarding against mis-action." -- Simon Sinek

Attackers Target the Foundations of Crypto: Smart Contracts

Central to the attack is a malicious smart contract, written in the Solidity programming language, with obfuscated functionality that transfers stolen funds to a hidden externally owned account (EOA), says Alex Delamotte, the senior threat researcher with SentinelOne who wrote the analysis. ... The decentralized finance (DeFi) ecosystem relies on smart contracts (as well as other technologies such as blockchains, oracles, and key management) to execute transactions, manage data on a blockchain, and enable agreements between different parties and intermediaries. Yet their linchpin status also makes smart contracts a focus of attacks and a key component of fraud. "A single vulnerability in a smart contract can result in the irreversible loss of funds or assets," says CredShields' Shashank. "In the DeFi space, even minor mistakes can have catastrophic financial consequences. However, the danger doesn’t stop at monetary losses — reputational damage can be equally, if not more, damaging." ... Companies should take stock of their smart contracts by maintaining a detailed, up-to-date record of every deployed contract, verifying each one, and conducting periodic audits. Real-time monitoring of smart contracts and transactions can detect anomalies and enable a fast response to potential attacks, Shashank says.
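The inventory-and-monitoring advice above can be sketched in a few lines. This is a minimal illustration under stated assumptions. It is not CredShields' method or any vendor's tooling. The record fields, the 180-day audit interval, the allowlist approach to spotting transfers to an unexpected address (such as a hidden EOA), and the placeholder addresses are all hypothetical. The transfers are plain dicts rather than data read from a real node or indexer.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class ContractRecord:
    """One entry in a hypothetical inventory of deployed smart contracts."""
    address: str                 # placeholder address, not a real 40-hex-digit one
    name: str
    source_verified: bool        # source published and matched to on-chain bytecode
    last_audit: Optional[date]   # None if the contract has never been audited


def inventory_findings(records, today, max_audit_age_days=180):
    """Flag contracts that are unverified, never audited, or overdue for audit.

    The 180-day interval is an illustrative assumption, not an industry standard.
    """
    findings = []
    for r in records:
        if not r.source_verified:
            findings.append((r.address, "unverified source"))
        if r.last_audit is None:
            findings.append((r.address, "never audited"))
        elif today - r.last_audit > timedelta(days=max_audit_age_days):
            findings.append((r.address, "audit overdue"))
    return findings


def flag_unknown_recipients(transfers, known_recipients):
    """Return transfers whose recipient is not on an allowlist.

    A transfer to an unexpected address is treated as an anomaly worth a human
    look. Addresses are compared case-insensitively.
    """
    allow = {a.lower() for a in known_recipients}
    return [t for t in transfers if t["to"].lower() not in allow]


if __name__ == "__main__":
    records = [
        ContractRecord("0xA1", "Vault", True, date(2025, 1, 1)),
        ContractRecord("0xB2", "Router", False, None),
    ]
    print(inventory_findings(records, today=date(2025, 3, 1)))
    transfers = [
        {"from": "0xA1", "to": "0xTreasury", "value": 10},
        {"from": "0xA1", "to": "0xDeadBeef", "value": 99},
    ]
    print(flag_unknown_recipients(transfers, {"0xTREASURY"}))
```

A production monitor would stream transactions continuously and use richer signals than a static allowlist. The two checks show how a maintained inventory turns "take stock" into something that can run automatically.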

Is AI the end of IT as we know it?

CIOs have always been challenged by the time, skills, and complexities involved in running IT operations. Cloud computing, low-code development platforms, and many DevOps practices helped IT teams move “up stack,” away from the ones and zeros and toward higher-level tasks. Now the question is whether AI will free CIOs and IT to focus more on where AI can deliver business value, instead of developing and supporting the underlying technologies. ... Joe Puglisi, growth strategist and fractional CIO at 10xnewco, offered this pragmatic perspective: “I think back to the days when you wrote an assembly and it took a lot of time. We introduced compilers, higher-level languages, and now we have AI that can write code. This is a natural progression of capabilities and not the end of programming.” This paradigm shift suggests CIOs will have to revisit their software development lifecycles to account for significant changes in skills, practices, and tools. “AI won’t replace agile or DevOps — it’ll supercharge them with standups becoming data-driven, CI/CD pipelines self-optimizing, and QA leaning on AI for test creation and coverage,” says Dominik Angerer, CEO of Storyblok. “Developers shift from coding to curating, business users will describe ideas in natural language, and AI will build functional prototypes instantly. This democratization of development brings more voices into the software process while pushing IT to focus on oversight, scalability, and compliance.”

From Indicators to Insights: Automating Risk Amplification to Strengthen Security Posture

Security analysts don’t want more alerts. They want more relevant ones. Traditional SIEMs generate events in their own internal language, involving things like MITRE ATT&CK tags, rule names, and severity scores. But what frontline responders really want to know is which users, systems, or cloud resources are most at risk right now. That’s why contextual risk modeling matters. Instead of alerting on abstract events, modern detection should aggregate risk around assets such as users, endpoints, APIs, or services. This shifts the SOC conversation from “What alert fired?” to “Which assets should I care about today?” ... The burden of alert fatigue isn’t just operational but also emotional. Analysts spend hours chasing shadows, pivoting across tools and following one-off indicators that lead nowhere. When everything is an anomaly, nothing is actionable. Risk amplification offers a way to lighten this unseen but heavy load, and the emotional toll it takes, by aligning high-risk signals with high-value assets and surfacing insights only when multiple forms of evidence converge. Rather than relying on a single failed login or endpoint alert, analysts can correlate chains of activity such as login anomalies, suspicious API queries, lateral movement, or outbound data flows, which together paint a much stronger picture of risk.
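The aggregation idea above can be illustrated with a minimal sketch of risk amplification. It assumes detections have already been normalized into (asset, signal_type, score) tuples and that each asset has a hand-assigned criticality weight. Three design choices are illustrative assumptions, not the method of any particular SIEM or vendor:

- scores are summed per asset;
- the sum is multiplied by the asset's weight;
- an asset surfaces only when at least two distinct kinds of evidence point at it.

```python
from collections import defaultdict


def amplify_risk(signals, asset_criticality, min_distinct_types=2):
    """Aggregate detection signals per asset and surface only corroborated risk.

    signals: iterable of (asset, signal_type, score) tuples.
    asset_criticality: dict mapping asset -> weight; unknown assets default to 1.0.
    Returns (asset, amplified_score, sorted_evidence_types), highest score first.
    """
    total = defaultdict(float)
    kinds = defaultdict(set)
    for asset, signal_type, score in signals:
        total[asset] += score
        kinds[asset].add(signal_type)

    ranked = []
    for asset, base_score in total.items():
        if len(kinds[asset]) < min_distinct_types:
            continue  # a single kind of evidence (e.g. repeated failed logins) stays quiet
        weight = asset_criticality.get(asset, 1.0)
        ranked.append((asset, base_score * weight, sorted(kinds[asset])))

    # Highest amplified risk first: "Which assets should I care about today?"
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked


if __name__ == "__main__":
    signals = [
        ("alice", "login_anomaly", 3),
        ("alice", "suspicious_api_query", 4),
        ("alice", "outbound_data_flow", 5),
        ("build-server", "failed_login", 2),
        ("build-server", "failed_login", 2),
        ("db-prod", "lateral_movement", 6),
        ("db-prod", "login_anomaly", 2),
    ]
    criticality = {"alice": 1.5, "db-prod": 3.0}  # hypothetical weights
    for asset, score, evidence in amplify_risk(signals, criticality):
        print(f"{asset}: {score:.1f} from {', '.join(evidence)}")
```

In this example, `build-server` never surfaces because it only produced one kind of signal. `db-prod` outranks `alice` despite a lower raw total, because it is weighted as the more valuable asset.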

The Immune System of Software: Can Biology Illuminate Testing?

CSO hiring on the rise: How to land a top security exec role

“Boards want leaders who can manage risk and reputation, which has made soft skills — such as media handling, crisis communication, and board or financial fluency — nearly as critical as technical depth,” Breckenridge explains. ... “Organizations are seeking cybersecurity leaders who combine technical depth, AI fluency, and strong interpersonal skills,” Fuller says. “AI literacy is now a baseline expectation, as CISOs must understand how to defend against AI-driven threats and manage governance frameworks.” ... Offers of top pay and authority to CSO candidates obviously come with high expectations. Organizations are looking for CSOs with a strong blend of technical expertise, business acumen, and interpersonal strength, Fuller says. Key skills include cloud security, identity and access management (IAM), AI governance, and incident response planning. Beyond technical skills, “power skills” such as communication, creativity, and problem-solving are increasingly valued, Fuller explains. “The ability to translate complex risks into business language and influence board-level decisions is a major differentiator. Traits such as resilience, adaptability, and ethical leadership are essential — not only for managing crises but also for building trust and fostering a culture of security across the enterprise,” he says.

From legacy to SaaS: Why complexity is the enemy of enterprise security

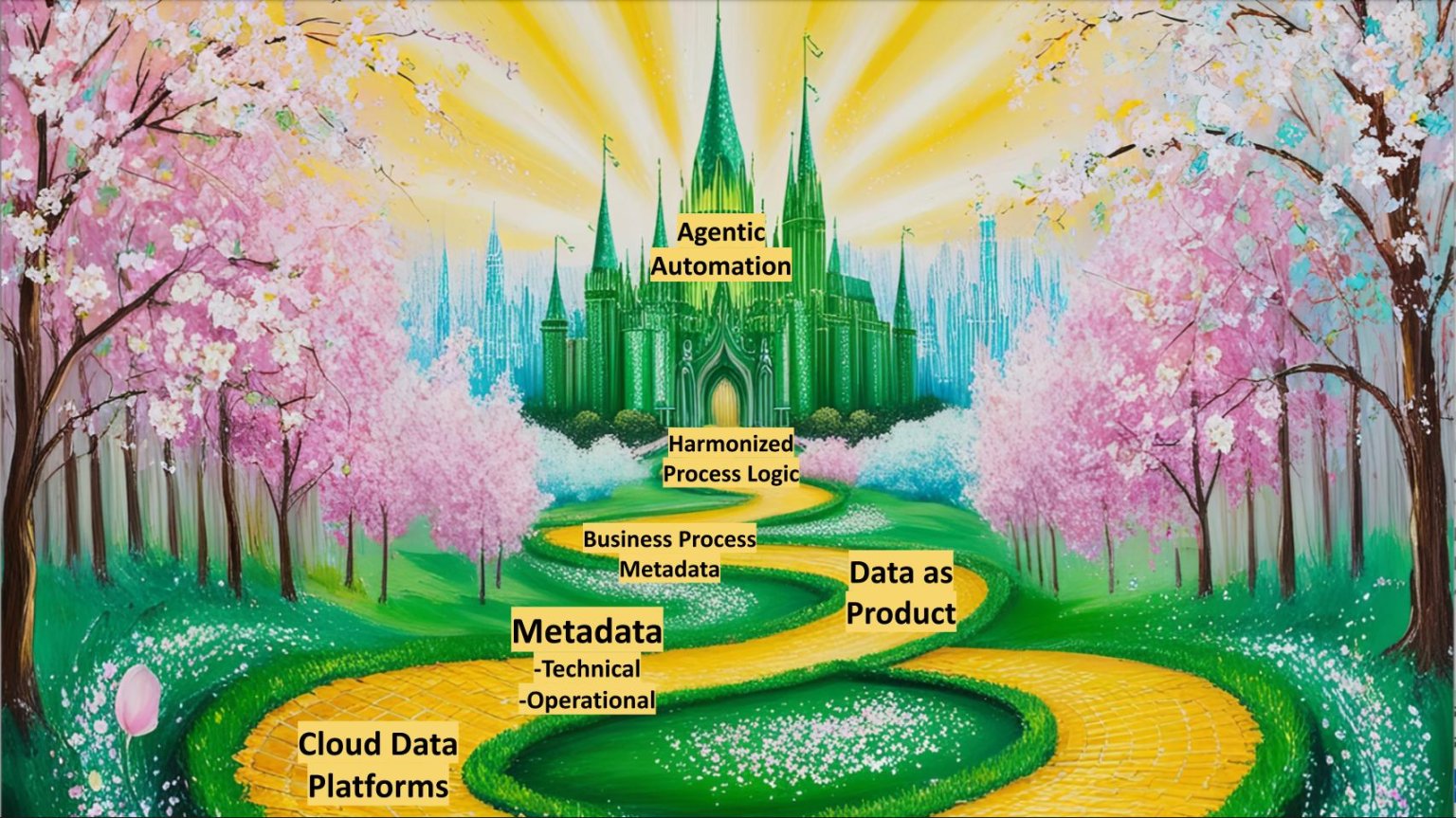

By modernizing, i.e., moving applications to a more SaaS-like consumption model, organizations see the network perimeter and the associated on-prem complexity tend to dissipate. That is actually a good thing, as it makes zero trust network access (ZTNA) easier to implement. As the main entry point into an organization’s IT systems becomes the web application URL (and the browser), attackers have fewer opportunities and are forced to focus on the identity layer: subverting authentication, phishing, and so on. Of course, organizations have to place (and tolerate) a higher degree of trust in SaaS providers. But at least there is now clear guidance on what to look for when transitioning to SaaS and cloud: identity protection, MFA, and phishing-resistant authentication mechanisms become critical, and these are often enforced by default or at least much easier to implement than in traditional systems. ... The unwillingness to simplify the technology stack by moving to SaaS is then combined with a reluctant, forced move to the cloud for some applications, usually dictated by business priorities or even ransomware attacks (as in the BL case above). This toxic mix increases complexity and reduces a resource-constrained organization's ability to keep security risks at bay.

Why Metadata Is the New Interface Between IT and AI

A looming risk in enterprise AI today is feeding the wrong data, or proprietary data, into AI data pipelines. This may include feeding internal drafts to a public chatbot, training models on outdated or duplicate data, or using sensitive files containing employee, customer, financial, or IP data. The implications range from wasted resources to data breaches and reputational damage. A comprehensive metadata management strategy for unstructured data can mitigate these risks by acting as a gatekeeper for AI workflows. For example, if a company wants to train a model to answer customer questions in a chatbot, metadata can be used to exclude internal files, non-final versions, or documents marked as confidential. Only vetted, tagged, and appropriate content is passed through for embedding and inference. This is a more intelligent, nuanced approach than simply dumping all available files into an AI pipeline. With rich metadata in place, organizations can filter, sort, and segment data based on business requirements, project scope, or risk level. Metadata also augments vector labeling for AI inferencing. A metadata management system helps users discover which files to feed the AI tool, such as health benefits documents for an HR chatbot, while vector labeling gives deeper insight into what each document contains.
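The gatekeeping step described above can be sketched as a metadata filter that runs before anything reaches embedding or inference. This is a minimal sketch, not any particular product's API. The metadata keys (`status`, `scope`, `classification`, `topics`) and their values are hypothetical conventions. The filter fails closed, so a document missing a classification or status is excluded rather than passed through.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    path: str
    # Hypothetical metadata keys: "status", "scope", "classification", "topics"
    metadata: dict = field(default_factory=dict)


def select_for_pipeline(docs, audience, required_topic=None):
    """Return only the documents that metadata marks as safe and relevant.

    audience: "external" (e.g. a customer chatbot) or "internal".
    required_topic: optional topic tag the document must carry.
    """
    selected = []
    for doc in docs:
        meta = doc.metadata
        if meta.get("classification", "confidential") == "confidential":
            continue  # confidential, or unclassified and treated as confidential
        if meta.get("status") != "final":
            continue  # drafts and non-final versions never enter the pipeline
        if audience == "external" and meta.get("scope") != "public":
            continue  # customer-facing use only sees content scoped as public
        if required_topic is not None and required_topic not in meta.get("topics", []):
            continue  # e.g. only health-benefits documents for an HR chatbot
        selected.append(doc)
    return selected


if __name__ == "__main__":
    docs = [
        Document("faq/returns.md", {"status": "final", "scope": "public",
                                    "classification": "public", "topics": ["returns"]}),
        Document("drafts/pricing_v2.docx", {"status": "draft", "scope": "internal"}),
        Document("hr/benefits.pdf", {"status": "final", "scope": "internal",
                                     "classification": "internal", "topics": ["health_benefits"]}),
        Document("legal/merger.pdf", {"status": "final", "classification": "confidential"}),
    ]
    print([d.path for d in select_for_pipeline(docs, "external")])
    print([d.path for d in select_for_pipeline(docs, "internal", "health_benefits")])
```

Only the filtered list would be handed to the embedding step. Vector labeling, which describes what is inside each selected document, is a separate stage and is not shown here.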

Ask a Data Ethicist: What Should You Know About De-Identifying Data?

Simply put, data de-identification is the process of removing or obscuring details from a dataset in order to preserve privacy. We can think about de-identification as existing on a continuum... Pseudonymization applies techniques that obscure the information but still allow it to be accessed when another piece of information (a key) is applied. In the above example, the identity number might unlock the full details: Joe Blogs of 123 Meadow Drive, Moab UT. Pseudonymization retains the utility of the data while affording a certain level of privacy. It should be noted that while the terms anonymize and anonymization are widely used, including in regulations, some feel it is not really possible to fully anonymize data, as there is always a non-zero chance of reidentification. Yet taking reasonable steps along the de-identification continuum is an important part of complying with requirements that call for the protection of personal data. Many articles and resources discuss the wide variety of de-identification techniques and the merits of various approaches, ranging from simple masking techniques to more sophisticated types of encryption. The objective is to strike a balance: the technique should be complex enough to ensure sufficient protection, without being burdensome to implement and maintain.

5 ways business leaders can transform workplace culture - and it starts by listening

Antony Hausdoerfer, group CIO at auto breakdown specialist The AA, said effective leaders recognize that other people will challenge established ways of working. Hearing those challenges requires an open management approach. "You need to ensure that you're humble in listening, but then able to make decisions, commit, and act," he said. "Effective listening is about managing with humility with commitment, and that's something we've been very focused on recently." Hausdoerfer told ZDNET how that process works in his IT organization. "I don't know the answer to everything," he said. "In fact, I don't know the answer to many things, but my team does, and by listening to them, we'll probably get the best outcome. Then we commit to act." ... Bev White, CEO at technology and talent solutions provider Nash Squared, said open ears are a key attribute of successful executives. "There are times to speak and times to listen -- good leaders recognize which is which," she said. "The more you listen, the more you will understand how people are really thinking and feeling -- and with so many great people in any business, you're also sure to pick up new information, deepen your understanding of certain issues, and gain key insights you need."

Beyond Efficiency: AI's role in reshaping work and reimagining impact

The workplace of the future is not about humans versus machines; it's about humans working alongside machines. AI's real value lies in augmentation: enabling people to do more, do better, and do what truly matters. Take recruitment, for example. Traditionally time-intensive and often vulnerable to unconscious bias, hiring is being reimagined through AI. Today, organisations can deploy AI to analyse vast talent pools, match skills to roles with precision, and screen candidates based on objective data. This not only reduces time-to-hire but also supports inclusive hiring practices by mitigating bias in decision-making. Across the employee lifecycle, AI also personalises experiences at scale. From career development tools that recommend roles and learning paths aligned with individual aspirations, to chatbots that provide real-time HR support, AI makes the employee journey more intuitive, proactive, and empowering. ... AI is not without its challenges. As with any transformative technology, its success hinges on responsible deployment. This includes robust governance, transparency, and a commitment to fairness and inclusion. Diversity must be built into the AI lifecycle, from the data it's trained on to the algorithms that guide its decisions.
