Quote for the day:

"You may only succeed if you desire succeeding; you may only fail if you do not mind failing." --Philippos

Neural Networks – Intuitively and Exhaustively Explained

The process of thinking within the human brain is the result of communication

between neurons. You might receive a stimulus in the form of something you saw;

that information is then propagated to neurons in the brain via

electrochemical signals. The first neurons in the brain receive that stimulus,

and each neuron may then choose whether or not to "fire" based on how much

stimulus it received. "Firing", in this case, is a neuron’s decision to send

signals to the neurons it’s connected to. ... Neural networks are,

essentially, a mathematically convenient and simplified version of neurons

within the brain. A neural network is made up of elements called

"perceptrons", which are directly inspired by neurons. ... In AI there are

many activation functions, but the industry has largely converged on three

popular ones: ReLU, Sigmoid, and Softmax, each used in different kinds of

applications. Of these, ReLU is the most common, thanks to its simplicity and

the fact that networks built from it can approximate almost any other function. ...
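The three activation functions named above can be sketched in a few lines of Python, together with a single perceptron that feeds a weighted sum of its inputs (plus a bias) through one of them. This is a minimal illustration, not any particular library's implementation; the weights and bias in the usage lines are arbitrary values chosen for the example.

```python
import math


def relu(x: float) -> float:
    # Passes positive values through unchanged and clamps negatives to zero.
    return max(0.0, x)


def sigmoid(x: float) -> float:
    # Squashes any real number into the range (0, 1).
    # The two branches keep math.exp from overflowing for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softmax(scores: list[float]) -> list[float]:
    # Turns a list of scores into probabilities that sum to 1.
    # Subtracting the max first keeps math.exp from overflowing on large scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def perceptron(inputs: list[float], weights: list[float], bias: float, activation=relu) -> float:
    # Weighted sum of the inputs plus a bias; the activation decides how strongly to "fire".
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


if __name__ == "__main__":
    print(perceptron([1.0, 2.0], [0.5, -0.25], 0.1))  # z = 0.1, so ReLU passes it through
    print(softmax([1.0, 2.0, 3.0]))                     # the largest score gets the largest share
```

Swapping `activation=sigmoid` into `perceptron` turns the same unit into one whose output can be read as a probability.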

One of the fundamental ideas of AI is that you can "train" a model. This is

done by asking a neural network (which starts its life as a big pile of random

data) to do some task. Then, you somehow update the model based on how the

model’s output compares to a known good answer.
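The classic perceptron learning rule is about the simplest concrete version of this "compare with a known good answer, then update" loop. Modern networks use gradient descent with backpropagation instead, so treat this only as an illustration of the idea. The AND dataset, learning rate, and epoch cap below are illustrative choices, not values from the article.

```python
import random


def predict(weights: list[float], bias: float, x: list[float]) -> int:
    # Step activation: "fire" (1) if the weighted sum is positive, otherwise 0.
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


def train_perceptron(samples, lr=0.1, max_epochs=1000, seed=0):
    # The model starts life as random numbers.
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            # Compare the model's output with the known good answer...
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                # ...and nudge each weight in the direction that reduces the error.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:
            break  # every training sample is now classified correctly
    return weights, bias


# Logical AND: output 1 only when both inputs are 1.
AND_SAMPLES = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND_SAMPLES)
    print([predict(w, b, x) for x, _ in AND_SAMPLES])
```

Because AND is linearly separable, the perceptron rule is guaranteed to stop making mistakes after a finite number of updates; on data that is not separable it would keep adjusting until `max_epochs` runs out.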

Why honeypots deserve a spot in your cybersecurity arsenal

In addition to providing critical threat intelligence for defenders, honeypots

can serve as an effective deception technique, steering attackers toward

decoys and away from valuable and critical organizational data and systems. Once

malicious activity is identified, defenders can use the findings from the

honeypots to look for indicators of compromise (IoC) in other areas of their

systems and environments, potentially catching further malicious activity and

minimizing the dwell time of attackers. Beyond threat intelligence and attack

detection, honeytokens have the added benefit of producing very few false

positives, since they are highly customized decoy resources that nothing

legitimate should ever access. This contrasts with broader security tooling,

which often suffers from high rates of false positives from low-fidelity alerts

and findings that burden security teams and developers. ... Enterprises need to

put some thought into where honeypots are placed. It is common to deploy them

both in systems that are easier for attackers to reach, such as publicly exposed,

internet-accessible endpoints, and in internal network environments. The former,

of course, is likely to see more interaction and provide broader, more generic

insights.
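The reason honeytokens produce so few false positives, and how their findings feed IoC hunting elsewhere, can be sketched as two small filters. This is a hypothetical illustration: the `AccessEvent` record, the decoy resource name, and the use of the source IP as the only indicator of compromise are all assumptions made for the example, not features of a specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    source_ip: str
    principal: str  # user or service identity that made the request
    resource: str   # file, key, table, etc. that was touched


def honeytoken_alerts(events, honeytokens):
    # No legitimate workflow should touch a honeytoken, so every hit is a high-fidelity alert.
    return [e for e in events if e.resource in honeytokens]


def hunt_related_activity(alerts, other_events):
    # Reuse indicators of compromise from the alerts (here, only source IPs)
    # to look for the same attacker in other systems and environments.
    bad_ips = {a.source_ip for a in alerts}
    return [e for e in other_events if e.source_ip in bad_ips]


if __name__ == "__main__":
    decoys = {"decoy-db-backup.sql"}  # hypothetical honeytoken
    app_log = [
        AccessEvent("10.0.0.5", "alice", "report.pdf"),
        AccessEvent("203.0.113.7", "svc-build", "decoy-db-backup.sql"),
    ]
    vpn_log = [AccessEvent("203.0.113.7", "bob", "vpn-login")]
    alerts = honeytoken_alerts(app_log, decoys)
    print(alerts)
    print(hunt_related_activity(alerts, vpn_log))
```

In practice the indicator set would be richer (credentials, user agents, hashes), but the shape is the same: a near-zero-noise trigger followed by a wider search.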

IoT Technology: Emerging Trends Impacting Industry And Consumers

An emerging IoT trend is the rise of emotion-aware devices that use sensors and

artificial intelligence to detect human emotions through voice, facial

expressions or physiological data. For businesses, this opens doors to

hyper-personalized customer experiences in industries like retail and

healthcare. For consumers, it means more empathetic tech—think stress-relieving

smart homes or wearables that detect and respond to anxiety. ... As IoT tech

becomes more prevalent, it is increasingly deployed in “less connected”

environments. As a result, the user experience needs to be adapted so that it

is not wholly dependent on good connectivity. Instead, priorities must include

handling data gaps gracefully and providing robust fallbacks when control

instructions are missing. ... IoT systems can now learn user preferences, optimizing

everything from home automation to healthcare. For businesses, this means deeper

customer engagement and loyalty; for consumers, it translates to more intuitive,

seamless interactions that enhance daily life. ... While not a newly emerging

trend, the Industrial Internet of Things is an area of focus for manufacturers

seeking greater efficiency, productivity and safety. Connecting machines and

systems with a centralized work management platform gives manufacturers access

to real-time data.
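One way to read "gracefully handle data gaps and robust fallbacks with missing control instructions" in code: trust a control command only for a limited time, fall back to a safe default once it goes stale, and buffer sensor readings locally until a link is available (store-and-forward). The `EdgeDevice` class, its parameters, and the chosen defaults are hypothetical, not taken from any specific IoT platform.

```python
from collections import deque


class EdgeDevice:
    """Hypothetical IoT device that keeps working through connectivity gaps."""

    def __init__(self, safe_setpoint: float, command_ttl_s: float, buffer_size: int = 100):
        self.safe_setpoint = safe_setpoint  # conservative default when no fresh command exists
        self.command_ttl_s = command_ttl_s  # how long a command is trusted without a refresh
        self._command = None                # (setpoint, received_at) of the last command
        # Readings taken while offline; when the buffer is full, the oldest are dropped first.
        self._outbox = deque(maxlen=buffer_size)

    def receive_command(self, setpoint: float, now: float) -> None:
        self._command = (setpoint, now)

    def active_setpoint(self, now: float) -> float:
        # Use the last command while it is fresh; otherwise fall back to the safe default.
        if self._command is not None:
            setpoint, received_at = self._command
            if now - received_at <= self.command_ttl_s:
                return setpoint
        return self.safe_setpoint

    def record_reading(self, value: float, now: float) -> None:
        self._outbox.append((now, value))

    def flush(self, connected: bool) -> list:
        # Store-and-forward: only hand over (and clear) the buffer when a link is available.
        if not connected:
            return []
        sent = list(self._outbox)
        self._outbox.clear()
        return sent
```

The bounded `deque` is a deliberate trade-off: during a long outage the device keeps the most recent data rather than running out of memory.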

When digital literacy fails, IT gets the blame

By insisting that requisite digital skills and system education are mastered

before a system cutover occurs, the CIO assumes a leadership role in the

educational portion of each digital project, even though IT itself may not be

doing the training. Where IT should be inserting itself is in the area of system

skills training and testing before the system goes live. A successful digital

project should have two goals: a system that’s complete and ready to use, and a

workforce that’s skilled and ready to use it. ... IT

business analysts, help desk personnel, IT trainers, and technical support

personnel all have people-helping and support skills that can contribute to

digital education efforts throughout the company. The more support that users

have, the more confidence they will gain in new digital systems and business

processes — and the more successful the company’s digital initiatives will be.

... Eventually, most of the technical glitches were resolved, and doctors,

patients, and medical support personnel learned how to integrate virtual visits

with regular physical visits and with the medical record system. By the time the

COVID-19 pandemic hit in 2020, telehealth visits were already well under way.

These visits worked because the IT infrastructure was in place, the pandemic created an emergency

scenario, and, most importantly, doctors, patients, and medical support

personnel were already trained on using these systems to best advantage.

What you need to know about developing AI agents

“The success of AI agents requires a foundational platform to handle data

integration, effective process automation, and unstructured data management,”

says Rich Waldron, co-founder and CEO of Tray.ai. “AI agents can be architected

to align with strict data policies and security protocols, which makes them

effective for IT teams to drive productivity gains while ensuring compliance.”

... One option for AI agent development comes directly as a service from

platform vendors that use your data to enable agent analysis, then provide the

APIs to perform transactions. A second option is from low-code or no-code,

automation, and data fabric platforms that can offer general-purpose tools for

agent development. “A mix of low-code and pro-code tools will be used to build

agents, but low-code will dominate since business analysts will be empowered to

build their own solutions,” says David Brooks, SVP of Evangelism at Copado.

“This will benefit the business through rapid iteration of agents that address

critical business needs. Pro coders will use AI agents to build services and

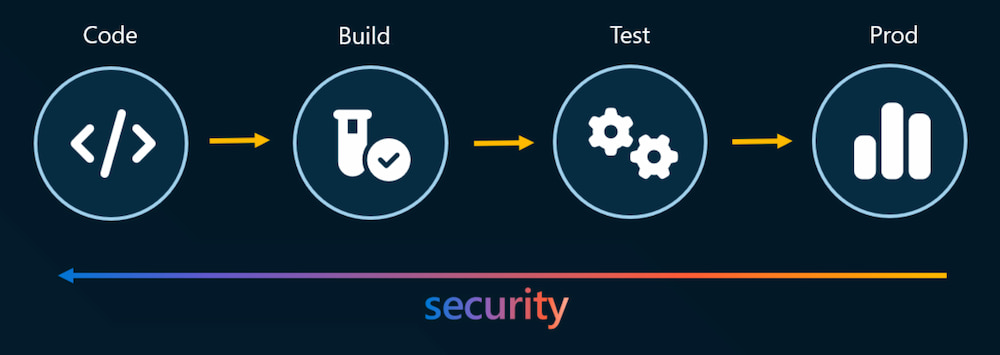

integrations that provide agency.” ... Organizations looking to be early

adopters of AI agents will likely need to review their data management

platforms and development tools, and adopt smarter devops processes, to develop

and deploy agents at scale.

The Path of Least Resistance to Privileged Access Management

While PAM allows organizations to segment accounts, providing a barrier between

the user’s standard access and needed privileged access and restricting access

to information that is not needed, it also adds a layer of internal and

organizational complexity. This is because users get the impression that it

removes their access to files and accounts they have typically had the right to

use, and they do not always understand why. It can also bring changes to their

established processes. Users often don’t see the security benefit and resist the

approach, seeing it as an obstacle to doing their jobs, which causes frustration

among teams. Because of this friction, PAM is perceived as difficult to

introduce. ... A significant gap in the PAM implementation process lies in the

lack of comprehensive awareness among administrators. They often do not have a

complete inventory of all accounts, the associated access levels, their

purposes, ownership, or the extent of the security issues they face. ...

Consider a scenario where a company has a privileged Windows account with access

to 100 servers. If PAM is instructed to discover the scope of this Windows

account, it might only identify the servers that have been accessed previously

by the account, without revealing the full extent of its access or the actions

performed.
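The gap in that scenario is essentially a set difference between what an account is entitled to reach and what it has been observed using. A minimal sketch, assuming entitlements and login history are available as plain Python collections; the account and server names are hypothetical.

```python
def history_based_scope(login_log: list[tuple[str, str]], account: str) -> set[str]:
    # What discovery driven only by past activity would report: hosts the account has logged into.
    return {host for acct, host in login_log if acct == account}


def unseen_access(entitlements: dict[str, set[str]], login_log: list[tuple[str, str]], account: str) -> set[str]:
    # Hosts the account *can* reach but has never used: invisible to history-based discovery.
    return entitlements[account] - history_based_scope(login_log, account)


if __name__ == "__main__":
    servers = {f"srv-{i:03d}" for i in range(100)}
    entitlements = {"svc-backup": servers}  # hypothetical privileged account
    login_log = [("svc-backup", "srv-001"), ("svc-backup", "srv-042"), ("svc-backup", "srv-007")]
    print(len(history_based_scope(login_log, "svc-backup")))          # 3
    print(len(unseen_access(entitlements, login_log, "svc-backup")))  # 97
```

The 97 unseen servers are exactly the exposure a history-only inventory would miss.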

Quantum networking advances on Earth and in space

“The most established use case of quantum networking to date is quantum key

distribution — QKD — a technology first commercialized around 2003,” says Monga.

“Since then, substantial advancements have been achieved globally in the

development and production deployment of QKD, which leverages secure quantum

channels to exchange encryption keys, ensuring data transfer security over

conventional networks.” Quantum key distribution networks are already up and

running, and are being used by companies, he says, in the U.S., in Europe, and

in China. “Many commercial companies and startups now offer QKD products,

providing secure quantum channels for the exchange of encryption keys, which

ensures the safe transfer of data over traditional networks,” he says. Companies

offering QKD include Toshiba, ID Quantique, LuxQuanta, HEQA Security, Think

Quantum, and others. One enterprise already using a quantum network to secure

communications is JPMorgan Chase, which is connecting two data centers with a

high-speed quantum network over fiber. It also has a third quantum node set up

to test next-generation quantum technologies. Meanwhile, the need for secure

quantum networks is higher than ever: as quantum computers get closer to prime

time, they threaten the public-key encryption that most key exchange relies on today.
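The article does not say which QKD protocol these products use. BB84 is the best-known QKD protocol, and its core "sifting" step can be simulated classically to show how two parties end up with a shared key. This is a toy model under strong assumptions: an ideal channel with no noise and no eavesdropper, and photons represented as plain random bits. Real systems also sacrifice part of the key to estimate the error rate (which reveals eavesdropping), then run error correction and privacy amplification, all omitted here.

```python
import random


def bb84_sift(n_qubits: int, seed=None) -> tuple[list[int], list[int]]:
    """Classical toy simulation of BB84 key sifting over an ideal channel."""
    rng = random.Random(seed)
    # Alice picks random bits and, for each, a random basis: "+" (rectilinear) or "x" (diagonal).
    alice_bits = [rng.randint(0, 1) for _ in range(n_qubits)]
    alice_bases = [rng.choice("+x") for _ in range(n_qubits)]
    # Bob measures each incoming photon in a basis he picks at random.
    bob_bases = [rng.choice("+x") for _ in range(n_qubits)]
    bob_results = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        if a_basis == b_basis:
            bob_results.append(bit)                # matching basis: Bob reads Alice's bit
        else:
            bob_results.append(rng.randint(0, 1))  # wrong basis: the outcome is a coin flip
    # Over a public classical channel they compare bases (never bits) and keep only matches.
    keep = [i for i in range(n_qubits) if alice_bases[i] == bob_bases[i]]
    return [alice_bits[i] for i in keep], [bob_results[i] for i in keep]


if __name__ == "__main__":
    alice_key, bob_key = bb84_sift(1000, seed=1)
    print(len(alice_key), alice_key == bob_key)
```

On average about half the positions survive sifting, since the bases match with probability 1/2.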

What are the Key Challenges in Mobile App Testing?

One of the major issues in mobile app testing is the sheer variety of devices in

the market. With numerous models, each having different screen sizes, pixel

densities, operating system (OS) versions and hardware specifications, ensuring

the app is responsive across all devices becomes a significant challenge. Testing for

compatibility on every device and OS can be tiresome and expensive. While tools

like emulators and cloud-based testing platforms can help, it remains essential

to conduct tests on real devices to ensure accurate results. ... In addition to

device fragmentation, another key challenge is the wide range of OS versions. A

device may run one version of an OS while another runs on a different version,

leading to inconsistencies in app performance. Just like any other software,

mobile apps need to function seamlessly across multiple platforms, such as

Android and iOS, and across the many versions of each. Furthermore, operating

systems are updated frequently, and each update can cause apps to break or stop

working correctly. ... Mobile

app users interact with apps under various network conditions, including Wi-Fi,

4G, 5G or limited connectivity. Testing how an app performs in different network

conditions is crucial to ensure it does not hang or load slowly when the

connection is weak.
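Device fragmentation, OS versions, and network conditions multiply rather than add, which is why exhaustive testing gets expensive so quickly. The sketch below enumerates a test matrix; the device pool, OS versions, and network profiles are hypothetical placeholders, not recommendations.

```python
from itertools import product

# Hypothetical device pool, mapped to the platform each device runs.
DEVICES = {
    "small-android-phone": "android",
    "android-tablet": "android",
    "small-iphone": "ios",
    "large-iphone": "ios",
}
OS_VERSIONS = {"android": ["13", "14", "15"], "ios": ["17", "18"]}
NETWORKS = ["wifi", "5g", "4g", "weak-3g"]


def build_test_matrix() -> list[tuple[str, str, str]]:
    # Every device x compatible OS version x network condition combination.
    return [
        (device, os_version, network)
        for device, platform in DEVICES.items()
        for os_version, network in product(OS_VERSIONS[platform], NETWORKS)
    ]


if __name__ == "__main__":
    matrix = build_test_matrix()
    print(len(matrix))  # 2*3*4 + 2*2*4 = 40 configurations from just 4 devices
```

Adding a single Android version or network profile grows every Android or every device row at once, which is the cost the paragraph above describes.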

Reimagining KYC to Meet Regulatory Scrutiny

Implementing AI and ML allows KYC checks to run in the background rather than

relying on staff to manually review information when they can, said Jennifer Pitt, senior

analyst for fraud and cybersecurity with Javelin Strategy & Research. “This

allows the KYC team to shift to other business areas that require more human

interaction like investigations,” Pitt said. Yet use of AI and ML remains low at

many banks. Currently, fraudsters and cybercriminals are using generative

adversarial networks (GANs), machine learning models that create new data

mirroring a training set, to make fraud less detectable. Fraud professionals should

leverage generative adversarial networks to create large datasets that closely

mirror actual fraudulent behavior. This process involves using a generator to

create synthetic transaction data and a discriminator to distinguish between

real and synthetic data. By training these models iteratively, the generator

improves its ability to produce realistic fraudulent transactions, allowing

fraud professionals to simulate emerging fraud types and account takeovers, and

enhance detection models’ sensitivity to these evolving threats. Instead of

waiting to gather sufficient historical data from known fraudulent behaviors,

GANs enable a more proactive approach, helping fraud teams quickly understand

new fraud trends and patterns, Pitt said.
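The generator/discriminator loop described above can be shown with a deliberately tiny, one-dimensional GAN written in plain Python. Everything here is an illustrative assumption: the "real" data is synthetic (a normal distribution standing in for, say, scaled transaction amounts), the generator only learns a shift `b`, and the discriminator is a single logistic unit. A linear discriminator can only tell two distributions apart by their means, so this toy cannot learn spread or shape; real fraud-simulation GANs use neural networks for both parts and many transaction features.

```python
import math
import random


def sigmoid(u: float) -> float:
    # Numerically safe logistic function.
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(real_mean=1.5, steps=3000, batch=64, lr=0.05, seed=0):
    """Toy 1-D GAN.

    real data:      x ~ Normal(real_mean, 1)        (synthetic stand-in data)
    generator:      G(z) = b + z, z ~ Normal(0, 1)  -> one learnable parameter, b
    discriminator:  D(x) = sigmoid(w * x + c)       -> probability that x is real
    """
    rng = random.Random(seed)
    b = 0.0
    w, c = 0.0, 0.0
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [b + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: gradient ascent on mean log D(real) + mean log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            r = 1.0 - sigmoid(w * x + c)
            gw += r * x
            gc += r
        for x in fake:
            s = sigmoid(w * x + c)
            gw -= s * x
            gc -= s
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator step: gradient descent on -mean log D(G(z)) (the "non-saturating" loss).
        gb = 0.0
        for _ in range(batch):
            x = b + rng.gauss(0.0, 1.0)
            gb -= (1.0 - sigmoid(w * x + c)) * w
        b -= lr * gb / batch
    return b, w, c


if __name__ == "__main__":
    b, w, c = train_toy_gan()
    print(f"generator shift b = {b:.3f} (real mean is 1.5)")
```

As training alternates, the discriminator learns which direction looks "real" and the generator follows that signal until its samples sit where the real data does, at which point D can no longer separate them.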

How Agentic AI Will Transform Banking (and Banks)

Agentic AI has two intertwined vectors. For banks, one path is internal, and

focused on operational efficiency for tasks including the automation of routine

data entry and compliance and regulatory checks, summaries of email and reports,

and the construction of predictive models for trading and risk management to

bolster insights into market dynamics, fraud and credit and liquidity risk. The

other path is consumer facing, and revolves around managing customer

relationships, from automated help desks staffed by chatbots to personalized

investment portfolio recommendations. Both trajectories aim to improve

efficiency and reduce costs. Agentic AI "could have a bigger impact on the

economy and finance than the internet era," Citigroup wrote in a January 2025

report that calls the technology the "Do It For Me" Economy. ... Meanwhile, automated

AI decisions could inadvertently violate laws and regulations on consumer

protection, anti-money laundering, or fair lending. Agentic AI that can

instruct an agent to make a trade based on bad data or assumptions could lead to

financial losses and create systemic risk within the banking system. "Human

oversight is still needed to oversee inputs and review the decisioning process,"

Davis says.
