5 Must-Have Skills For Remote Work

When teams work remotely, at least half of all communication is done via

writing rather than speaking. This means communicating through emails, Slack,

or texting. It even applies to using the chat function while you’re on a video

call. You need to be able to communicate clearly no matter what platform

you’re using. ... Working remotely doesn’t mean working alone. You’re still

going to be part of a team, which means working with colleagues on projects

and tasks. Without a physical space to gather, collaboration can be a bit more

challenging. Communication skills and collaboration skills go hand in hand, as

communication plays a huge role in successful collaboration. Find the right

balance of video meetings, phone calls, and messages to ensure ample but not

overwhelming communication. ... You might be working with colleagues who are

in a different time zone, which affects deadlines, when meetings can be

scheduled, and even when you can get in touch with those colleagues. If you’re

assigned to work with a new team, you might have to adapt to the way that team

works.

How to secure your project with one of the world’s top open source tools

Dynamic application security testing (DAST) is a highly effective way to find

certain types of vulnerabilities, like cross-site scripting (XSS) and SQL

injection (SQLi). However, many of the commercial DAST tools are expensive to

use and are often used only when a project is getting ready to ship, if they are

used at all. ZAP can be integrated into a project’s CI/CD pipeline from the

start, ensuring that many common vulnerabilities are detected and can be fixed

very early on in the project lifecycle. Testing in development also means that

you can avoid the need to handle tools and features designed to make

automation difficult, like single sign-on (SSO) and web application firewalls

(WAFs). ... For web applications, or any projects that provide a web-based

interface, you can use ZAP or another DAST tool. But don’t forget to use

static application security testing (SAST) tools as well. These are

particularly useful if they are introduced when starting a project. If SAST

tools are used against more mature projects then they often flag a large

number of potential issues, which makes it difficult to focus on the most

critical ones.
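
One way to wire a DAST scan into a pipeline from the start is a small gate script that reads the scanner's report and fails the build when serious alerts appear. The sketch below is only an illustration, not the article's method: it assumes a JSON report shaped like ZAP's traditional JSON output (a `site` list whose entries carry an `alerts` list with `alert` and `riskcode` fields), and the function names and the default threshold are hypothetical. Check the field names against the report your ZAP version actually produces.

```python
# Assumed mapping of numeric risk codes to names (verify against your ZAP report).
RISK_NAMES = {0: "Informational", 1: "Low", 2: "Medium", 3: "High"}


def alerts_at_or_above(report, threshold=2):
    """Return (site, alert name, risk name) for every alert with riskcode >= threshold."""
    findings = []
    for site in report.get("site", []):
        for alert in site.get("alerts", []):
            risk = int(alert.get("riskcode", 0))
            if risk >= threshold:
                findings.append(
                    (
                        site.get("@name", "?"),
                        alert.get("alert", "?"),
                        RISK_NAMES.get(risk, str(risk)),
                    )
                )
    return findings


def gate_exit_code(report, threshold=2):
    """Return 1 (fail the CI job) if any alert meets the threshold, else 0."""
    findings = alerts_at_or_above(report, threshold)
    for site, name, risk in findings:
        print(f"[{risk}] {name} ({site})")
    return 1 if findings else 0
```

In a CI job, the report would be loaded with `json.load` and the return value of `gate_exit_code` passed to `sys.exit`, so that a Medium or High alert stops the pipeline early in the project lifecycle rather than just before shipping.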

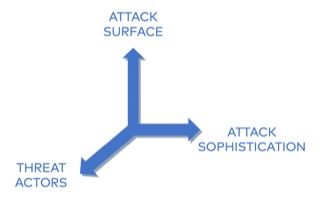

Using the Attack Cycle to Up Your Security Game

Attack sophistication is directly proportional to the goals of the attackers

and the defensive posture of the target. A ransomware ring will target the

least-well-defended and the most likely to pay (ironically, cyber insurance

can create a perverse incentive in some situations) because there is an

opportunity cost and return on investment calculation for every attack. A

nation-state actor seeking breakthrough biotech intellectual property will be

patient and well-capitalized, developing new zero-day exploits as they launch

a concerted effort to penetrate a network's secrets. One of the most

famous of these attacks, Stuxnet, exploited vulnerabilities in SCADA systems

to cripple Iran's nuclear program. The attack was thought to have penetrated

the air gap network via infected USB thumb drives. As awareness of these

complex, multi-stage attacks has risen, startups have increased innovation,

such as in the behavior analytics space, where complex machine-learning algorithms

determine "normal" behaviors and look for that one bad actor. Threat actors

are the individuals and organizations engaged in the actual attack. In the

broadest sense of the term, they are not always malicious.

The FI and fintech opportunity with open banking

What’s different now is that over the last two or three years the industry has

come together to collaborate on evolving the ecosystem. One example is the

formation of an industry group called the Financial Data Exchange. As a

result, financial institutions, financial data aggregators, and related

parties are developing standards for access, authentication, and transparency

that will provide end-to-end governance to keep the ecosystem safe and fair,

and consumer data secure. ... “Banks are looking for technology innovation to

address both back office challenges, get faster and leaner, reduce costs, but

also to increase engagement with their customers,” Costello says. “Certainly

at times like this we see how important digital engagement is.” As some FIs

are closing branches to reduce costs, digital engagement becomes essential.

And if it’s done right, it works. And the opportunity for innovation abounds.

The better multi-factor authentication and authorization that comes with open

banking means that the bank has a higher degree of confidence that the person

with whom they’re engaging is the account holder. Now that they have a higher

degree of trust, they can offer a higher degree of engagement.

Reduced cost, responsive apps from micro front-end architecture

Early micro front-end projects have focused on how to provide better

separation of logic and UI elements into smaller, more dynamic components. But

modern micro front ends have moved far beyond the idea of loosely coupling code

to full scale Kubernetes-based deployment. There's even been a recent trend of

micro front ends containerized as microservices and delivered directly to the

client. For example, the H2 app by Glofox recently adopted this approach to

implement a PaaS for health and fitness apps, which gyms and health clubs then

customize and provide to clients. The app uses the edgeSDK from Mimik

Technology Inc. to manage the containerized micro front-end microservices

deployment to run natively across iOS, Android and Windows devices. In

addition, a micro front-end deployment reduces the server load. It only

consumes client-side resources, which improves response times in apps

vulnerable to latency issues. Users once had to connect to databases or remote

servers for most functions, but a micro front end greatly reduces that

dependency.

8 Tips for Crafting Ransomware Defenses and Responses

For any attack that involves ransomware, the fallout can be much more

extensive than simply dealing with the malware. And organizations that don't

quickly see the big picture will struggle to recover as quickly and

cost-effectively as they might otherwise be able to do (see: Ransomware +

Exfiltration + Leaks = Data Breach). That's why understanding not just what

ransomware attackers did inside a network, but what they might still be

capable of doing - inside the network, as well as by leaking - is an essential

part of any incident response plan, security experts say. So too is

identifying how intruders got in - or might still get in - and ensuring those

weaknesses cannot be exploited again, says Alan Brill, senior managing

director in Kroll's cyber risk practice. "If you don't lock it down, it's very

simple: You're still vulnerable," he tells Information Security Media Group.

"If you lock down what you thought was the issue but you were wrong - it

wasn't the issue - that they weren't just putting ransomware in your system

but they've been in there for a month examining your system, exfiltrating data

and lining up how to do the most damage when they launched the ransomware, you

may not even know what happened."

We've forgotten the most important thing about AI. It's time to remember it again

Leufer has just put the final touches to a new project to debunk common AI

myths, which he has been working on since he received his Mozilla fellowship –

an award designed for web activists and technology policy experts. And one of

the most pervasive of those myths is that AI systems can act of their own

accord, without supervision from humans. It certainly doesn't help that

artificial intelligence is often associated with humanoid robots, suggesting

that the technology can match human brains. An AI system deployed, say, to

automate insurance claims, is very unlikely to come in the form of a

human-looking robot, and yet that is often the portrayal that is made of the

technology, regardless of its application. Leufer calls those

"inappropriate robots", often shown carrying out human tasks that would never

be necessary for an automaton. Among the most common offenders are robots

typing on keyboards and robots wearing headphones or using laptops. The powers

we ascribe to AI as a result even have legal ramifications: there is an

ongoing debate about whether an AI system should own intellectual property, or

whether automatons should be granted citizenship.

Scaling Distributed Teams by Drawing Parallels from Distributed Systems

The biggest bottleneck for any distributed team is decision-making. Similar to distributed systems, if we apply “deliver accountability and receive autonomy,” the bottleneck is removed eventually. For this to happen, there should be a lot of transparency and information sharing, so that teams and individuals are enabled to make decisions independently. Clarity is harder with a distributed team. Distributed systems send heartbeats very frequently and detailed reports at a lesser frequency. Communication is the key. Distributed standups are a better way of determining progress. Apart from that, move one-to-one conversations and decision-making to a common channel. We tried a concept called the end of the day update. Everyone posts their progress at the end of their day (considering different time zones). We believe it gives a better view of what each person is working on and the overall progress, even before they come to standups. At EverestEngineering, the coaches are responsible for improving the health of the channel. A healthy distributed team has a lot of discussions on Slack channels and quick calls. You can see a lot of decisions made in the channel. There are enough reactions and threads for a question.

How to build a quantum workforce

The growth means companies are looking to hire applicants for quantum

computing jobs and that the country needs to build a quantum workforce.

Efforts are underway; earlier this month, more than 5,000 students around the

world applied to IBM's Qiskit Global Summer School for future quantum software

developers. And the National Science Foundation and White House Office of

Science and Technology Policy held a workshop in March designed to identify

essential concepts to help students engage with quantum information science

(QIS). But industry experts speaking on the topic during an IBM virtual

roundtable Wednesday said K-12 students are not being prepared to go to

schools with the requisite curriculum to work in this industry. Academia and

industry must work in tandem to engage the broadest number of students to get

them prepared to do these kinds of jobs that will be needed in the future,

said Jeffrey Hammond, vice president and principal analyst at Forrester

Research, who moderated the discussion. It was only four years ago that

quantum computing became available in the cloud, giving more people access,

noted panelist Abe Asfaw, global lead of quantum education at IBM Quantum.

A Developer-Centric Approach to Modern Edge Data Management

A substantial majority of embedded developers in the IoT and complex

instrumentation space use C, C++, or C# to handle data processing and local

analytics. That’s in part because of how easy it is to handle direct I/O for

devices and internal systems components as well as more complex

digitally-enhanced machinery through some variations of inp() and outp()

statements. It’s also easy to manipulate collected data using familiar file

system statements such as fopen(), fclose(), fread(), and fwrite(). This is the

path of least resistance. Almost anyone who takes a programming class (or just

takes the time to learn how) can use these statements to interact with data at

the file system level. The problem is that file systems are very simple. They

don’t do much by themselves. When it comes down to document and record

management, indexing, sorting, creating and managing tables, and so on, there’s

only one operative statement: DoItYourself(). And we’re not even talking about

rare or rocket science-level activities, here. These are everyday activities

that you’d find in any database system. Wait! It’s the D-word! May as well

be the increment of the ASCII character pointer by two to the … you know what

word.
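
To make the DoItYourself() point concrete, here is a minimal sketch of what record management looks like when only file-system calls are available. It is written in Python rather than C for brevity, and everything in it is an illustrative assumption: the record layout (sensor id, timestamp, reading), the function names, and the in-memory index are hypothetical, not taken from the article. The point is that appending bytes is easy, while indexing and sorting have to be written and maintained by hand.

```python
import struct

# Hypothetical fixed-size record: sensor id (uint32), timestamp (uint32), reading (float64).
RECORD = struct.Struct("<IId")


def append_reading(path, sensor_id, timestamp, value):
    """Append one packed record; this is all the file system gives us for free."""
    with open(path, "ab") as fh:
        fh.write(RECORD.pack(sensor_id, timestamp, value))


def build_index(path):
    """Hand-built index mapping sensor id to the byte offsets of its records."""
    index = {}
    with open(path, "rb") as fh:
        offset = 0
        while True:
            chunk = fh.read(RECORD.size)
            if len(chunk) < RECORD.size:
                break  # end of file (or a truncated trailing record)
            sensor_id, _, _ = RECORD.unpack(chunk)
            index.setdefault(sensor_id, []).append(offset)
            offset += RECORD.size
    return index


def readings_for(path, index, sensor_id):
    """Fetch one sensor's (timestamp, value) pairs via the index, sorted by timestamp."""
    rows = []
    with open(path, "rb") as fh:
        for offset in index.get(sensor_id, []):
            fh.seek(offset)
            _, timestamp, value = RECORD.unpack(fh.read(RECORD.size))
            rows.append((timestamp, value))
    return sorted(rows)
```

Even this small sketch leaves out updates, deletes, concurrent writers, and crash recovery, which is the kind of work a database system would otherwise take on.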

Quote for the day: