Why root causes matter in cybersecurity

In the cybersecurity industry, unfortunately, there is no official directory of root causes. Many vendors categorize certain attacks as root causes when, in reality, these are often outcomes or symptoms. For example, ransomware, remote access, and stolen credentials are all symptoms, not root causes. The root cause behind remote access or stolen credentials is most likely human error or some vulnerability. ... The true root cause is human error. People are prone to mistakes, ignorance, and biases. We open malicious attachments, click on the wrong links, surf the wrong websites, use weak credentials, and reuse passwords across multiple sites. We use unauthorized software and make our private details public by posting them on social media, where bad actors can scrape and harvest them. We take security far too much for granted. Human error in cybersecurity is a much larger problem than previously anticipated or documented. To clamp down on human error, organizations must train employees well enough that they develop a security instinct and better security habits. Clear policies and procedures must be in place so that everyone understands their responsibilities and accountability to the business.

Running Automation Tests at Scale Using Java

As customer decision-making now depends heavily on digital experience, organisations are increasingly investing in the quality of that experience. That means establishing high internal QA standards and, most importantly, investing in automation testing for faster release cycles. So, how does this concern you as a developer or tester? Automation skills on your resume are highly desirable in the current employment market, and getting started is quick. Selenium is a natural starting point for automation testing: it is one of the most popular automated testing frameworks and supports many programming languages, with official bindings for Java, Python, C#, Ruby, and JavaScript. This post will discuss Selenium, how to set it up, and how to use Java to create an automated test script. Next, we will see how to use a Java-based testing framework like TestNG with Selenium and perform parallel test execution at scale on the cloud using LambdaTest.

How Generative AI Can Support DevOps and SRE Workflows

Manually querying many different tools for logs, observability data, and other outputs takes a lot of time and knowledge, which isn’t necessarily efficient. Where is that metric? Which dashboard is it in? What’s the machine name? How do other people typically refer to it? What kind of time window do people typically look at here? And so forth. “All that context has been done before by other people,” Nag said. Generative AI can enable engineers to use natural language prompts to find exactly what they need, and often to kick off the next steps in subsequent actions or workflows automatically, frequently without ever leaving Slack. ... The cloud native ecosystem is vast (and continually growing), so keeping up with the intricacies of everything is almost impossible. With generative AI, Nag said, no one actually needs to know the ins and outs of dozens of different systems and tools. A user can simply say “scale up this pod by two replicas or configure this Lambda [function] this way.”

Diverse threat intelligence key to cyberdefense against nation-state attacks

Most threat intelligence houses currently originate from the West or are Western-oriented, and this can result in bias or skewed representations of the threat landscape, noted Minhan Lim, head of research and development at Ensign Labs. The Singapore-based cybersecurity vendor was formed through a joint venture between local telco StarHub and state-owned investment firm Temasek Holdings. "We need to maintain neutrality, so we're careful about where we draw our data feeds," Lim said in an interview with ZDNET. "We have data feeds from all reputable [threat intel] data sources, which is important so we can understand what's happening on a global level." Ensign also operates its own telemetry and SOCs (security operations centers), including ones in Malaysia and Hong Kong, collecting data from sensors deployed worldwide. Lim added that the vendor's clientele comprises multinational corporations (MNCs), including regional and China-based companies, that have offices in the U.S., Europe, and South Africa.

Where Does Zero Trust Fall Short? Experts Weigh In

“The strategy of ZT can be applied to all of those areas and, if done correctly and intelligently, then a solid strategic approach can be beneficial. There is no ZT product that can simply make those areas secure, however. I would also suggest that the largest area of threat is privileged access, as that is the most common avenue of lateral movement and increased compromise historically.”

... “It’s a multifaceted issue when determining the greatest threat among the areas where zero trust falls short. At the core, privileged access stands out as the most alarming vulnerability. These users, often likened to having ‘keys to the kingdom,’ possess the capabilities to access confidential data, modify configurations and undertake actions that could severely jeopardize an organization.

“However, an underlying concern that might be overlooked is the reason behind the extensive distribution of privileged access. In many situations, this excessive access stems from challenges tied to legacy systems, IoT devices, third-party services, and emerging technologies and applications.”

Data Management Challenges In Heterogeneous Systems

When you look at the whole chiplet ecosystem, there are certain blocks we feel can be generalized and made into chiplets, or known good die, that can be brought into the market. The secret sauce is a custom piece of silicon, and they can design and own the recipe around that. But there are generic components in any SoC: memory, interconnects, processors. You can always partition it so that there are some general components, which you can leverage from the general market and which will help everyone. That brings the cost of building your system down so you can focus on problems around your secret sauce. ... We need something like a three-tier data management system, where in tier one everyone can access and share data, and tier three is only for people within a company. But I don’t know when we’ll get there, because data management is a really tough problem. ... We may need new approaches. Just looking at this from the hyperscale cloud perspective, which is huge, with complex hardware/software systems and things coming in from many vendors, how do we protect it?

Companies are already feeling the pressure from upcoming US SEC cyber rules

Calculating the financial ramifications of a cybersecurity incident under the upcoming rules has placed pressure on corporate leaders to collaborate more closely with CISOs and other cybersecurity professionals within their organizations. Right now, a "gulf exists between boards and CFOs and their cybersecurity defense teams, their chief information security officers," Gerber says. "The two aren’t speaking the same language yet." Gerber thinks that "what companies and CFOs are realizing is that they need to get their teams into these exercises so that they can practice making their determinations as accurately and clearly as they can and [as] early as they can."

"I think that the general counsels and the CISOs have been at arm’s length of each [other], and I’m going to tell you one extreme," Sanna says. "One CISO told us that their legal or general counsel did not want them to assess cyber risk in financial terms so they could claim ignorance and not have to report it."

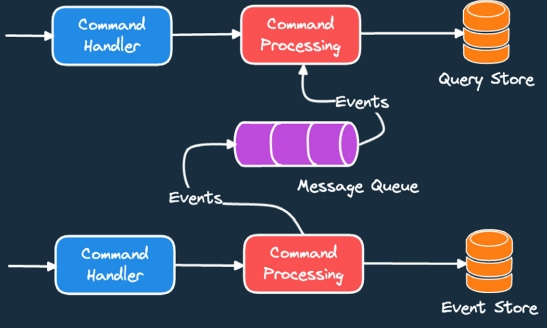

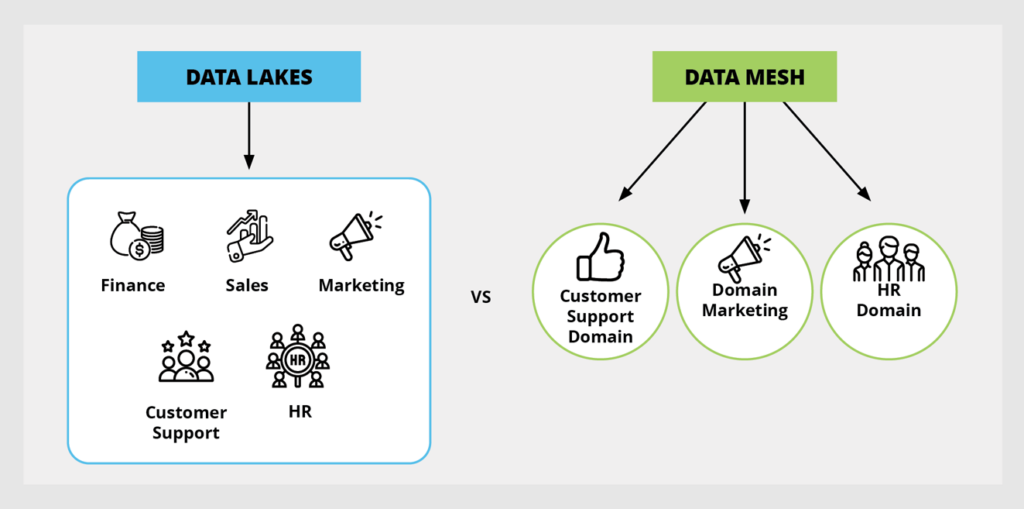

A Guide to Data-Driven Design and Architecture

Data-driven architecture involves designing and organizing systems, applications, and infrastructure with a central focus on data as a core element. Within this architectural framework, decisions concerning system design, scalability, processes, and interactions are guided by insights and requirements derived from data. Fundamental principles of data-driven architecture include:

- Data-centric design – Data is at the core of design decisions, influencing how components interact, how data is processed, and how insights are extracted.
- Real-time processing – Data-driven architectures often involve real-time or near real-time data processing to enable quick insights and actions.
- Integration of AI and ML – The architecture may incorporate AI and ML components to extract deeper insights from data.
- Event-driven approach – Event-driven architecture, where components communicate through events, is often used to manage data flows and interactions.
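The event-driven principle can be illustrated with a minimal, in-process publish/subscribe sketch in Python. It assumes a hypothetical `order_placed` event and two hypothetical consumers (a running revenue total and an audit log); the `Event` and `EventBus` classes are illustrative only, not any particular product's API. What it shows is that the publisher only emits an event on a topic and never calls the consumers directly, so new consumers can be added without changing the producer.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Event:
    """A data event: a topic name plus an arbitrary payload."""
    topic: str
    payload: dict[str, Any] = field(default_factory=dict)


Handler = Callable[[Event], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative, not a real library)."""

    def __init__(self) -> None:
        # topic name -> handlers, kept in subscription order
        self._subscribers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: Event) -> None:
        # Publishers never reference consumers directly; the bus routes by topic.
        # Delivery here is synchronous and in-memory; topics with no subscribers are ignored.
        for handler in self._subscribers.get(event.topic, []):
            handler(event)


# Hypothetical consumers reacting to the same "order_placed" event.
revenue_total = {"value": 0.0}
audit_log: list[str] = []


def update_revenue(event: Event) -> None:
    revenue_total["value"] += event.payload["amount"]


def record_audit(event: Event) -> None:
    audit_log.append(f"{event.topic}:{event.payload['order_id']}")


bus = EventBus()
bus.subscribe("order_placed", update_revenue)
bus.subscribe("order_placed", record_audit)

bus.publish(Event("order_placed", {"order_id": "A-1", "amount": 25.0}))
bus.publish(Event("order_placed", {"order_id": "A-2", "amount": 10.5}))
bus.publish(Event("user_signed_up", {"user_id": "u-9"}))  # no subscribers: ignored

print(revenue_total["value"])  # 35.5
print(audit_log)               # ['order_placed:A-1', 'order_placed:A-2']
```

In a production data-driven system this routing role is usually played by an external message broker or streaming platform with durable, asynchronous delivery; the synchronous in-memory dispatch here only keeps the sketch self-contained.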

The Search for Certainty When Spotting Cyberattacks

Exacerbating the problem, Taylor said, is the availability of malware and ransomware services for sale on the Dark Web, which can arm bad actors with the means to do digital harm even if they lack coding skills of their own. That makes it harder to profile and identify specific attackers, he said, because thousands of bad actors might buy the same tools to attack systems. “We can’t identify where it’s coming from very easily,” Taylor said, because almost anybody could be a hacker. “You don’t have to be the expert anymore. You don’t have to be the cyber gang that’s very technically adept at developing all these tools.” That means cyberattacks may be launched from unexpected angles. For example, he said, gangs could outsource their hacking needs via such resources, or individuals who are simply bored at home might pick up such tools from the Dark Web to create phishing campaigns. “It becomes harder and harder to profile the threat.”

How Listening to the Customer Can Boost Innovation

Product development should not rely solely on customer input. Development teams should also take product metrics into account. Most, if not all, SaaS products today track a wealth of product metrics that show how customers use and engage with products, and these insights can drive product development and strategy. For example, with insight into how individual customers interact with products, development teams can see which features customers are and aren’t using, or are struggling with. This can validate whether customer requests to improve certain features are justified. Metrics can also show whether new products or services are performing well and having a positive impact on business outcomes. From a business perspective, you want new services to improve engagement, retention, and sentiment, and metrics can show the benefits of listening to the customer by demonstrating how new services are contributing to revenue growth.

Quote for the day:

"Become the kind of leader that people would follow voluntarily, even if you had no title or position." --Brian Tracy