AI for security is here. Now we need security for AI

As the mass adoption and application of AI are still fairly new, the security of AI is not yet well understood. In March 2023, the European Union Agency for Cybersecurity (ENISA) published a document titled Cybersecurity of AI and Standardisation with the intent to “provide an overview of standards (existing, being drafted, under consideration and planned) related to the cybersecurity of AI, assess their coverage and identify gaps” in standardization. Because the EU likes compliance, the focus of this document is on standards and regulations, not on practical recommendations for security leaders and practitioners. A lot has been written online about the problem of AI security, although noticeably less than about using AI for cyber defense and offense. Many might argue that AI security can be tackled by getting people and tools from several disciplines, including data, software and cloud security, to work together, but there is a strong case to be made for a distinct specialization. When it comes to the vendor landscape, I would categorize AI/ML security as an emerging field. The summary that follows provides a brief overview of vendors in this space.

Enterprises Die for Domain Expertise Over New Technologies

Domain expertise is important to build a complete ecosystem that can scale. This can help businesses leverage relevant knowledge and datasets to develop custom solutions. This is why enterprises look for enablers that can bring in the domain expertise for particular use cases. ... One of the challenges that companies encounter today is how to utilise data effectively as per their business needs. According to a global survey conducted by Oracle and Seth Stephens-Davidowitz, 91% of respondents in India reported a ten-fold increase in the number of decisions they make every day over the past three years. As individuals attempt to navigate this increased decision-making, 90% reported being inundated with more data from various sources than ever before. “Some interesting findings we came across was that respondents who wanted technological assistance also said that the technology should know its workflow and what it is trying to accomplish,” Joey Fitts, vice president, Analytics Product Strategy, Oracle told ET.

Amazon’s quiet open source revolution

Let’s remember that the open source spadework is not done. For example, AWS makes a lot of money from its Kubernetes service but still barely scrapes into the top 10 contributors for the past year. The same is true for other banner open source projects that AWS has managed services for, such as OpenTelemetry, or projects its customers depend on, such as Knative (AWS comes in at #12). What about Apache Hadoop, the foundation for AWS Elastic MapReduce? AWS has just one committer. For Apache Airflow, the numbers are better. This is glass-half-empty thinking, anyway. The fact that AWS has any committers to these projects is an important indicator that the company is changing. A few years back, there would have been zero committers to these projects. Now there are one or many. All of this signals a different destination for AWS. The company has always been great at running open source projects as services for its customers. As I found while working there, most customers just want something that works. But getting it to “just work” in the way customers want requires that AWS get its hands dirty in the development of the project.

Response and resilience in operational-risk events

The findings have several urgent implications for leaders as they think about the overall resilience of their institutions, how to minimize the risk of such events occurring, and how to respond when crises do hit. The findings strongly suggest that broad market forces and industry dynamics can magnify adverse effects. Effective crisis and mitigation planning has to take account of these factors. Experience supports this view. In the not-so-distant past, especially before the financial crisis of 2008–09, many companies approached operational-risk measures from a regulatory perspective, with an economy of effort, if not formalistically. Incurring costs and paying fines for unforeseen breaches and events were accordingly counted as the cost of doing business. Amid crises, furthermore, communications were sometimes aimed at minimizing true losses—an approach that risked a damaging cycle of upward revisions. The present environment, however, is unforgiving of such approaches. An accelerated pace of change, especially in digitization and social media, magnifies the negative effects of missteps in the aftermath of crisis events.

Developers Need a Community of Practice — and Wikis Still Work

This subject has flattened out a bit since the pandemic, after which fewer developers work next to each other and keeping remote members connected is more the norm. A good Community of Practice (CoP) should just look like a private Stack Overflow, with discussions on topics of concern to devs across the organization. This applies to most organizations that have siloed teams. If you are part of a one-team company, then a CoP should not be something you need right now; just be ready to be proactive when you are part of a bigger setup. The first seeds are usually sown when “best practice” is discussed, and managers realize that there is no point in having just one team getting things right. This is the time to establish a developer CoP, before something awkward gets imposed from above. The topics are often the complications that an organization stubbornly brings to existing tech, like understanding arcane branching policies, or working with an old version of software because it is the only sanctioned version.

Five Leadership Mindsets For Navigating Organizational Complexity: Rethinking Chaos And Opportunity

The world is unlikely to suddenly settle down. With that in mind, the context around chaotic moments changes. It’s no longer about just dealing with what’s in front of you; it’s about writing the script for the team to respond to future disruptions. So don’t just deal with it as a leader. Start viewing disruptions as valuable learning experiences that build resilience and adaptability within your organization. And once you have navigated through, take a moment to create a playbook for the future. Use retrospection with your team to find out the specific things that worked and the things that didn’t. ... “I don’t deal well with change” is a bad personal strategy, and I recommend that you drop any ideas that adaptability is an innate trait possessed only by a select few. With that said, I've found that learning requires experience. Social and business safety nets are key, so employees can learn with less fear. Encourage your employees to challenge their comfort zones, experiment with new approaches and learn from setbacks to develop the skills and strategies necessary for navigating change effectively.

Why Don’t We Have 128-Bit Computers Yet?

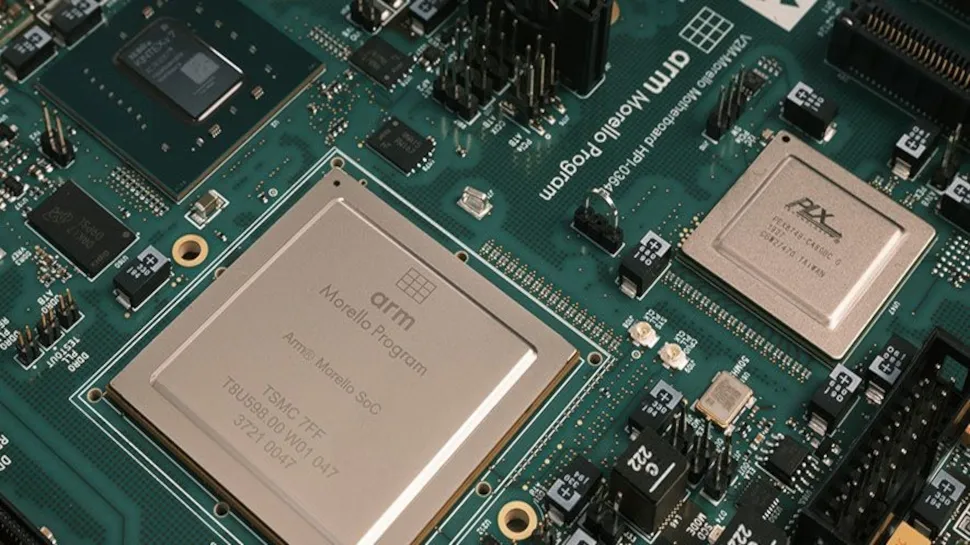

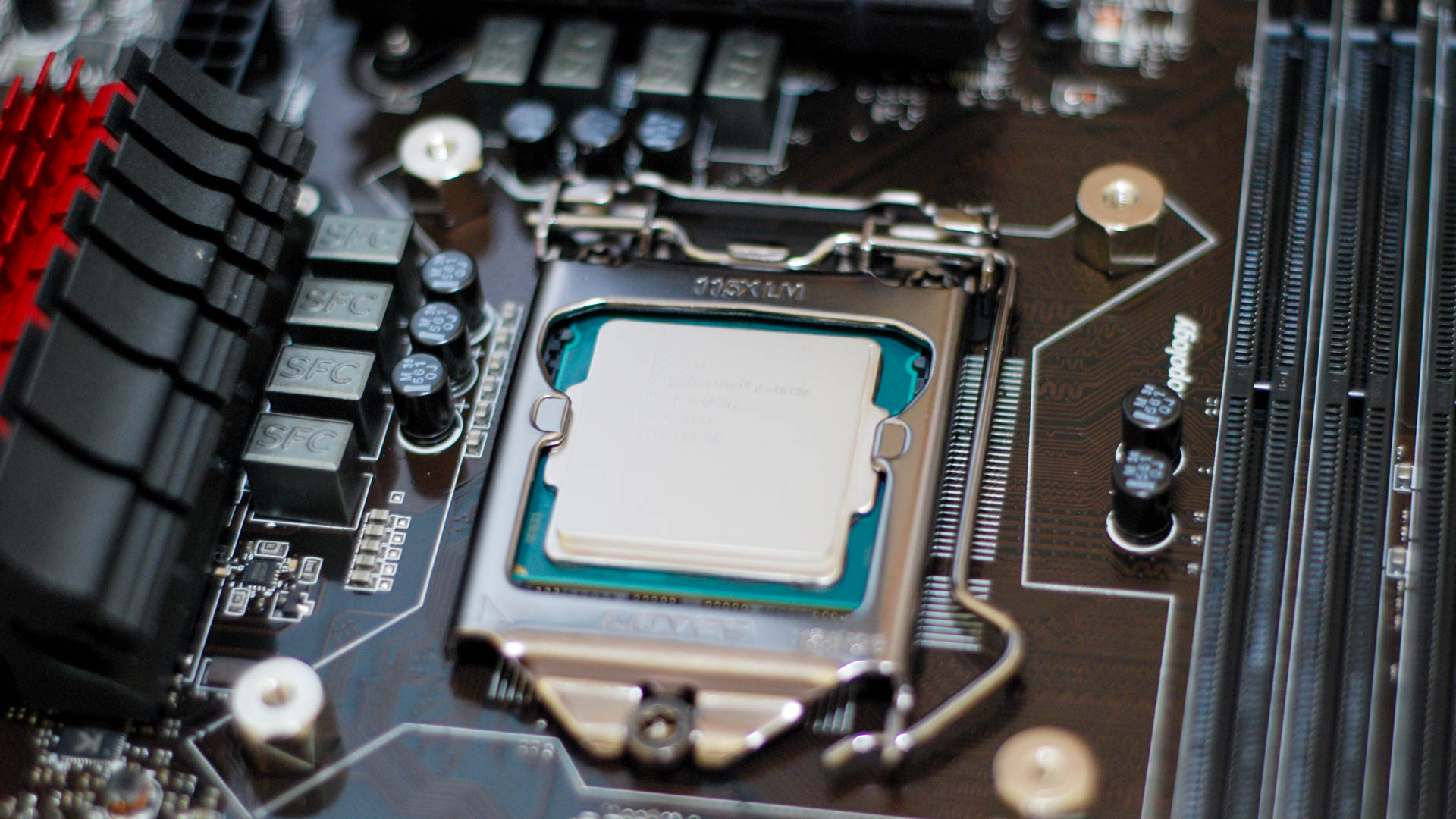

It’s practically impossible to predict the future of computing, but there are a few reasons why 128-bit computers may never be needed:

- Diminishing returns: As a processor’s bit size increases, the performance and capability improvements tend to become less significant. In other words, the improvement from 64 to 128 bits isn’t anywhere near as dramatic as going from 8-bit to 16-bit CPUs, for example.
- Alternative solutions: There may be alternative ways to address the need for increased processing power and memory addressability, such as using multiple processors or specialized hardware rather than a single, large processor with a high bit size.
- Physical limitations: It may turn out to be impossible to create a complex modern 128-bit processor due to technological or material constraints.
- Cost and resources: Developing and manufacturing 128-bit processors could be cost-prohibitive and resource-intensive, making mass production unprofitable.

While it’s true that the benefits of moving from 64-bit to 128-bit might not be worth it today, new applications or technologies might emerge in the future that could push the development of 128-bit processors.
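To put the memory addressability point in rough numbers, here is a minimal Python sketch that computes how many bytes a flat address of a given width could reach. It assumes byte-addressable memory and that the full width is usable for addresses, which real processors often do not expose; the function names are illustrative and not taken from the article.

```python
def addressable_bytes(bits: int) -> int:
    """Bytes reachable with a flat, byte-addressable address of `bits` width."""
    return 2 ** bits


def human_readable(n: int) -> str:
    """Format a byte count using binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB", "YiB"]
    value = float(n)
    for unit in units:
        # Stop at the first unit that keeps the number below 1024,
        # or at the largest unit we know about.
        if value < 1024 or unit == units[-1]:
            return f"{value:g} {unit}"
        value /= 1024


for bits in (8, 16, 32, 64, 128):
    print(f"{bits:>3}-bit: {human_readable(addressable_bytes(bits))}")
```

Under these assumptions, a 32-bit address tops out at 4 GiB and a 64-bit address at 16 EiB, which is one way to read the diminishing-returns argument: the 64-bit ceiling is already far beyond the memory of typical machines.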

Secret CSO: Rani Kehat, Radiflow

The success of your cybersecurity is difficult to measure. For example, many believe that if you haven’t been hacked, your cybersecurity efforts must be working. This isn’t the case – it may well be that you just haven’t been hacked yet. Thankfully, there are methods to measure how well security practices are working; effectiveness of controls, corporate awareness and reporting of suspicious events, and mitigation RPO are among the most helpful here. ... API security is the best. APIs have become integral to programming web-based interactions, which means hackers have zeroed in on them as a key target. Zero Trust, on the other hand, has become a buzzword that in theory should reduce vulnerabilities but in reality is not practical to implement, slows down application performance, and hampers productivity. ... To get formal professional certifications. Not only have these helped advance my career at every stage, but they have also ensured that my security knowledge remains up to date against constantly developing hacker tactics and techniques.

How blockchain technology is paving the way for a new era of cloud computing

“Blockchain itself can be used within a private ‘walled garden’ as well,” Ian Foley, chief business officer at data storage blockchain firm Arweave, told VentureBeat. “It is a technology structure that brings immutability and maintains data provenance. Centralized cloud vendors are also developing blockchain solutions, but they lack the benefits of decentralization. Decentralized cloud infrastructures are always independent of centralized environments, enabling enterprises and individuals to access everything they’ve stored without going through a specific application.” Decentralized storage platforms use the power of blockchain technology to offer transparency and verifiable proof for data storage, consumption and reliability through cryptography. This eliminates the need for a centralized provider and gives users greater control over their data. With decentralized storage, data is stored in a wide peer-to-peer (P2P) network, offering transfer speeds that are generally faster than traditional centralized storage systems.
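As a rough illustration of what “verifiable proof through cryptography” can mean in practice, the sketch below uses content addressing: a stored blob is identified by its SHA-256 hash, so anyone who fetches it from an untrusted peer can recompute the hash and detect tampering. This is a generic technique assumed here for illustration, not a description of how Arweave or any other platform works; `put`, `get` and the in-memory `store` are hypothetical names.

```python
import hashlib

# Hypothetical stand-in for an untrusted peer in a P2P network.
store: dict[str, bytes] = {}


def put(data: bytes) -> str:
    """Store data under an ID derived from its own content."""
    content_id = hashlib.sha256(data).hexdigest()
    store[content_id] = data
    return content_id


def get(content_id: str) -> bytes:
    """Fetch data and verify that it still matches its content ID."""
    data = store[content_id]
    if hashlib.sha256(data).hexdigest() != content_id:
        raise ValueError("data does not match its content ID")
    return data


cid = put(b"quarterly report")
print("retrieved:", get(cid))

# Simulate a peer that altered the stored bytes.
store[cid] = b"tampered"
try:
    get(cid)
except ValueError as err:
    print("rejected:", err)
```

The design choice worth noting is that the reader does not need to trust whoever serves the bytes, only the identifier it already holds.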

A True Leader Doesn't Just Talk the Talk — They Walk the Walk. Here's How to Lead from the Front.

So, what does "walking the walk" look like? It looks different for each leader, depending on their position within the company. Let's say you're the CEO of a company. To provide valuable insights and opinions, you need to be proficient in the product you're selling and stay current on industry trends and news. If you were managing a customer service team, what would you do? Participating in difficult conversations can help your team understand what's expected of them. As a leader, it is imperative that you set an excellent example for your team members and achieve results. Leaders must lead by example and practice what they preach. Talking about honesty, integrity and accountability is easy, but it's much harder to embody them daily. Regarding work-life balance, taking time off and setting boundaries are essential. You must cultivate a culture of listening to your team to foster a culture of open communication.

Quote for the day:

"Effective team leaders adjust their style to provide what the group can't provide for itself." -- Kenneth Blanchard