How disaster recovery can serve as a strategic tool

“You can count on us” is a popular business mantra. But what does that mean exactly? Consider this thought experiment: You and a competitor are hit with the same incident, but one of you gets back up more quickly. Fast recovery will give you a competitive advantage, if you can pay the price. “The smaller your RTO and RPO values are, the more your applications will cost to run,” says Google Cloud in a how-to discussion of DR. Any solution should also be well tested. “Your customers expect your systems to be online 24x7,” says Scott Woodgate, director, Microsoft Azure, in this press release. ... A solid DR plan can also facilitate transformational-based efficiencies. Let's say your leadership has business reasons for migrating to a new data center or transitioning to a hybrid cloud. Part of planning a migration is prepping for user experience and systems being down. If you are willing to use your DR assets during the transition, once the cloud or new physical sites are ready, you can fail back from DR, thus minimizing disruption. As an IT pro, you may not want to define these events as disasters, but business leaders prefer using existing resources to investing in swing gear.

The cybersecurity incident response team: the new vital business team

We live and do business in a world fraught with cyber risks. Every day, companies and consumers are targeted with attacks of varying sophistication, and it has become increasingly apparent that everyone is considered fair game. Organisations of all sizes and industries are falling victim, and cyber risk is quickly becoming one of the most prevalent threats. When disruptions do occur from cyberattacks or other data incidents, they have not only a direct financial impact but also an ongoing effect on reputation. For example, Carphone Warehouse fell victim to a cyberattack in 2015, which compromised data belonging to more than three million customers and 1,000 employees. While it suffered financial losses from the remedial costs, which included a £400,000 fine from the Information Commissioner’s Office (ICO), the attack also led consumers to question whether their data was truly secure with the retailer and whether it was simply safer to shop elsewhere. That loss in consumer confidence is incredibly difficult to claw back, particularly at a time when grievances can be aired on social media and shared hundreds or thousands of times.

Managing IoT resources with access control

The first step in establishing an effective IoT security strategy is ensuring that you are able to see and track every device on the network. Issues from patching to monitoring to quarantining all start with establishing visibility from the moment a device touches the network. Access control technologies need to be able to automatically recognize IoT devices, determine if they have been compromised and then provide controlled access based on factors such as the type of device, whether or not it is user-based and, if so, the role of the user. And they need to be able to do this at digital speeds. Another access control factor to consider is location. Access control technologies need to be able to determine whether an IoT device is connecting remotely and, if not, where in the network it is logging in from. Different access may be required depending on whether a device is connecting remotely, or even from the lobby, a conference room, a secured lab or a warehouse facility. Location-based access policies are especially relevant for organizations with branch offices or an SD-WAN system in place.
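The decision flow described above (check for compromise, then scope access by location, device type and user role) can be sketched in a few lines of Python. This is only an illustrative sketch: the `Device` fields, the `LOCATION_SEGMENTS` table, the segment names and the "engineer" role are all hypothetical and do not come from any particular access control product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Device:
    """Hypothetical view of a device as seen by the access controller."""
    device_type: str                 # e.g. "camera", "tablet"
    compromised: bool                # result of a posture/health check
    location: str                    # e.g. "remote", "lobby", "secured_lab"
    user_role: Optional[str] = None  # None for headless (non-user) devices


# Hypothetical mapping from connection location to network segment.
LOCATION_SEGMENTS = {
    "remote": "vpn-restricted",
    "lobby": "guest",
    "conference_room": "guest",
    "secured_lab": "lab",
    "warehouse": "operations",
}


def decide_access(device: Device) -> str:
    """Return an access decision for a device joining the network."""
    # A compromised device is isolated regardless of anything else.
    if device.compromised:
        return "quarantine"

    # Location decides which segment the device may join at all.
    segment = LOCATION_SEGMENTS.get(device.location)
    if segment is None:
        return "deny"

    # Headless devices are scoped by device type only.
    if device.user_role is None:
        return f"{segment}:{device.device_type}-only"

    # Assumed rule: only engineers may use devices inside the secured lab.
    if segment == "lab" and device.user_role != "engineer":
        return "deny"

    return f"{segment}:{device.user_role}"
```

For example, `decide_access(Device("camera", False, "warehouse"))` yields `"operations:camera-only"`, while any device flagged as compromised is quarantined before location or role is even considered.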

Artificial intelligence: Why a digital base is critical

The adoption of AI, we found, is part of a continuum, the latest stage of investment beyond core and advanced digital technologies. To understand the relationship between a company’s digital capabilities and its ability to deploy the new tools, we looked at the specific technologies at the heart of AI. Our model tested the extent to which underlying clusters of core digital technologies (cloud computing, mobile, and the web) and of more advanced technologies (big data and advanced analytics) affected the likelihood that a company would adopt AI. As Exhibit 1 shows, companies with a strong base in these core areas were statistically more likely to have adopted each of the AI tools: about 30 percent more likely when the two clusters of technologies are combined. These companies presumably were better able to integrate AI with existing digital technologies, and that gave them a head start. This result is in keeping with what we have learned from our survey work. Seventy-five percent of the companies that adopted AI depended on knowledge gained from applying and mastering existing digital capabilities to do so.

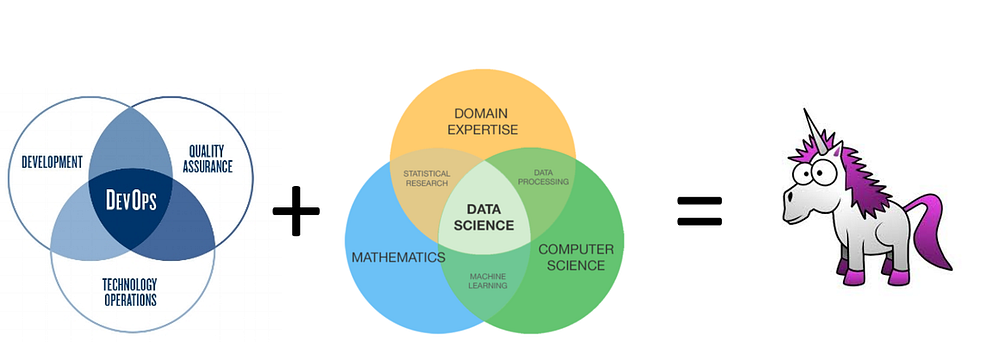

The 5 Clustering Algorithms Data Scientists Need to Know

Clustering is a Machine Learning technique that involves the grouping of data points. Given a set of data points, we can use a clustering algorithm to classify each data point into a specific group. In theory, data points that are in the same group should have similar properties and/or features, while data points in different groups should have highly dissimilar properties and/or features. Clustering is a method of unsupervised learning and is a common technique for statistical data analysis used in many fields. In Data Science, we can use clustering analysis to gain some valuable insights from our data by seeing what groups the data points fall into when we apply a clustering algorithm. Today, we’re going to look at 5 popular clustering algorithms that data scientists need to know and their pros and cons! K-Means is probably the most well-known clustering algorithm. It’s taught in a lot of introductory data science and machine learning classes. It’s easy to understand and implement in code! Check out the graphic below for an illustration.

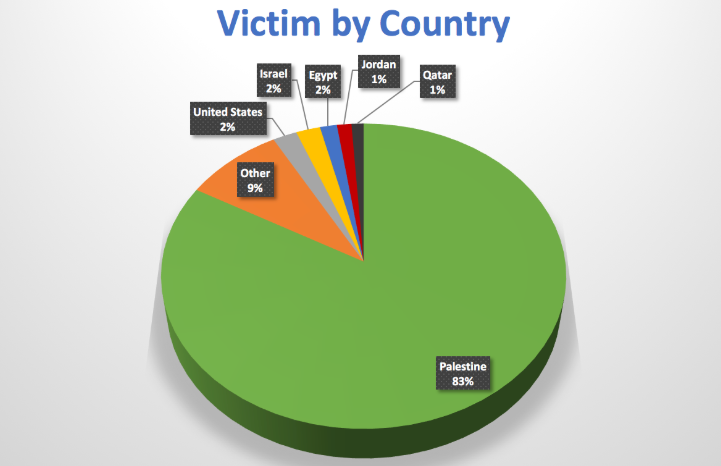
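To make the "easy to implement in code" claim concrete, here is a minimal K-Means sketch in plain Python (standard library only, Python 3.8+ for `math.dist`). It follows the usual alternation between an assignment step and a centroid-update step; the function name `k_means`, its parameters and the toy data are illustrative assumptions rather than code from the original article.

```python
import random
from math import dist


def k_means(points, k, iterations=100, seed=0):
    """Cluster a list of coordinate tuples into k groups.

    Returns (centroids, labels), where labels[i] is the index of the
    centroid nearest to points[i].
    """
    rng = random.Random(seed)
    # Initialise centroids by picking k distinct data points at random.
    centroids = rng.sample(points, k)

    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)

        # Update step: move each centroid to the mean of its cluster.
        # An empty cluster keeps its previous centroid.
        new_centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]

        # Stop once the centroids no longer move.
        if new_centroids == centroids:
            break
        centroids = new_centroids

    labels = [min(range(k), key=lambda i: dist(p, centroids[i])) for p in points]
    return centroids, labels


# Two well-separated toy groups of 2-D points.
data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = k_means(data, k=2)
```

On this toy data the first three points end up sharing one label and the last three the other. Real data sets are rarely this cleanly separated, and the result can depend on the random initialisation, which is why practical implementations usually run several restarts.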

Ransomware Attack Leads to Discovery of Lots More Malware

The investigation concluded the unauthorized persons would have had the ability to access all of the Blue Springs computer systems, the clinic notes. "However, at this time, we have not received any indication that the information has been used by an unauthorized individual." The U.S. Department of Health and Human Services' HIPAA Breach Reporting Tool website, or "wall of shame," indicates that Blue Springs on July 10 reported the breach as a hacking/IT incident involving its electronic medical records and network server that exposed data on nearly 45,000 individuals. Blue Springs' front desk receptionist, who did not want to be identified by name, told Information Security Media Group Friday that the investigation had not yet determined the source of the ransomware attack, the source of the other malware discovered, whether the other malware might have been present on the practice's systems before the ransomware attack, or whether the infections were all part of the same attack. She said the practice chose to "rebuild" its systems and did not pay a ransom.

CIOs reveal their security philosophies

“Overly strict security creates a different risk: throttling information exchange and creativity can threaten a company’s competitive viability,” Johnson adds. “Poorly managed reactions to breaches (and all firms have been breached in some way) can lead to other business deterioration.” “Security is as much a human challenge as it is a technical challenge,” he concludes. “Dependable cybersecurity requires a three-part strategy of 1) superb technical implementation of the basics, 2) consistent education aimed at increasing awareness of employees, vendors, and executives, and 3) building a security team that is as motivated, skilled, and innovative as the bad guys.” In this edition of Transformation Nation, CIOs delineate their own IT security philosophies: dispatches from the front lines of cybersecurity strategy. The implications of a breach for corporate reputation, economic well-being, and personal security are immense. Through these accounts, CIOs reveal the many tension points in application and communication that they grapple with every day.

GDPR means it is time to revisit your email marketing strategies

No matter how private you think your emails are, every email you send and receive is stored on a remote hard drive you have no control over. If your email provider doesn’t encrypt your emails end to end (most don’t), all company emails are at risk. Encrypting employee email communications plays a huge role in maintaining GDPR compliance. The average employee won’t think twice about emailing co-workers about sensitive issues that may include data from the business database. For example, someone might send a customer’s credit card information to the sales department for processing a return. To protect your internal emails and maintain GDPR compliance, buying general encryption services isn’t enough. You need to know exactly how and when the data is and isn’t being encrypted. Not all encryption services are complete. For instance, if you’re using Microsoft 365, you’ve probably heard of a data protection product called Azure RMS. This product uses TLS security to encrypt email messages the moment they leave a user’s device. Unfortunately, when the messages reach Microsoft’s servers, they are stored unprotected.

Google, Cisco amp-up enterprise cloud integration

The Cisco/Google combination, which is currently being tested by an early access enterprise customer, according to Google, will let IT managers and application developers use Cisco tools to manage their on-premises environments and link them up with Google’s public IaaS cloud, which offers orchestration, security and ties to a vast developer community. In fact, the developer community is one area the companies have targeted recently by announcing a Cisco & Google Cloud Challenge, which is offering prizes worth over $160,000 to develop what Cisco calls “game-changing” apps using Cisco’s Container Platform with Google Cloud services. Cisco says the goal is to bring together its DevNet community and Google’s Technology Partners to build new hybrid-cloud applications for enterprise customers. Cisco VP & CTO of DevNet Susie Wee wrote in a blog that in preparation for the Challenge, DevNet is offering workshops, office hours, and sandboxes using Cisco Container Platform with Google Cloud services to help customers and developers learn how to connect cloud data from a private cloud to the Google Cloud Platform or even data from edge devices to run analytics and employ machine learning.

Why 'Sophisticated' Leadership Matters -- Especially Now

When challenged by complexity, many leaders try to implement best practices such as lean management, restructuring or re-engineering. Such investments may indeed be necessary, but they are rarely sufficient. This is because the root cause of most stalls is that the leader has run up against the limits of his or her leadership sophistication. In other words, the leader is failing to reinvent him- or herself as the new kind of leader the organization now needs. This usually means that the leader doesn’t fully appreciate that intelligence, hard work and technical knowledge must now take a back seat to enhanced personal, interpersonal, political and strategic leadership capabilities. Put differently, you will stall not because the complex challenges you face require changes in your organization, but rather because the sophisticated challenges require change in yourself. So how can you become a more sophisticated leader? Try pulling back, elevating your viewpoint and figuring out how you can take yourself to the next level.

Quote for the day:

"Next generation leaders are those who would rather challenge what needs to change and pay the price than remain silent and die on the inside." -- Andy Stanley
