Tech to the aid of justice delivery

Obsolete statutes which trigger unnecessary litigation need to be eliminated, as

is currently being done, with over 1,500 statutes removed in the last

few years. Furthermore, for any new legislation, a sunset review clause should

be made a mandatory intervention, such that after every few years, it is

reviewed for its relevance to society. A corollary to this is scaling

decriminalisation of minor offences after determining, as shown by Kadish SH in

his seminal paper ‘The Crisis of Overcriminalization’, whether the total public

and private costs of criminalisation outweigh the benefits. Non-compliance with

certain legal provisions which don’t involve mala fide intent can be addressed

through monetary compensation rather than prison time, which inevitably

instigates litigation. Finally, among the plethora of ongoing litigations

in the Indian court system, a substantial number are those that don’t require

interpretation of the law by a judge, but simply adjudication on facts. These

can take the route of online dispute resolution (ODR), which has the potential for dispute avoidance by

promoting legal education and inducing informed choices for initiating

litigation and also containment by making use of mediation, conciliation or

arbitration, and resolving disputes outside the court system.

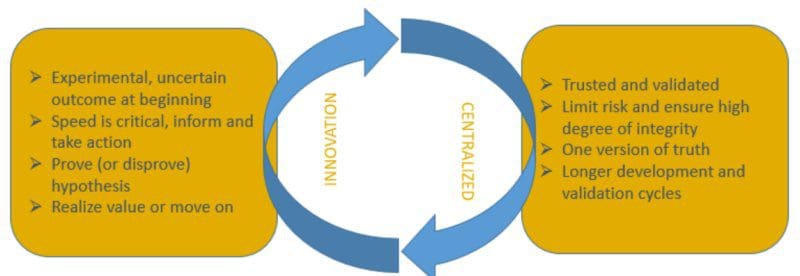

Leading future-ready organizations

To break through these barriers to Agile, companies need a restart.

They need to continue to expand on the initial progress they’ve made but focus

on implementing a wider, more holistic approach to Agile. Every aspect of the

organization must be engaged in an ongoing cyclical process of “discover and

evaluate, prioritize, build and operate, analyze…and repeat.” ... Organizations

that leverage digital decoupling are able to get on independent release cycles

and unlock new ways of working with legacy systems. Based on our work with

clients, we’ve seen that this can result in up to 30% reduction in cost of

change, reduced coordination overhead, and increased speed of planning and pace

of delivery. ... In our work with clients, we see firsthand how

cross-functional teams and automation of application delivery and operations

contribute to increased pace of delivery, improved employee productivity, and

up to 30% reduction in deployment time. Additionally, scaling DevOps enables

fast and reliable releases of new features to production within short iterations

and includes optimizing processes and upskilling people, which is the starting

point for a collaborative and liquid enterprise. ... Moving talent and partners

into a non-hierarchical and blended talent sourcing and management model can

result in 10-20% increase in capacity.

F5 Big-IP Vulnerable to Security-Bypass Bug

The vulnerability specifically exists in one of the core software components of

the appliance: The Access Policy Manager (APM). It manages and enforces access

policies, i.e., making sure all users are authenticated and authorized to use a

given application. Silverfort researchers noted that APM is sometimes used to

protect access to the Big-IP admin console too. APM implements Kerberos as the

protocol for authentication required by an APM policy, they

explained. “When a user accesses an application through Big-IP, they may be

presented with a captive portal and required to enter a username and password,”

researchers said, in a blog posting issued on Thursday. “The username and

password are verified against Active Directory with the Kerberos protocol to

ensure the user is who they claim they are.” During this process, the user

essentially authenticates to the server, which in turn authenticates to the

client. To work properly, the Key Distribution Center (KDC) must also authenticate to the server. The KDC is a

network service that supplies session tickets and temporary session keys to

users and computers within an Active Directory domain.

4 Business Benefits of an Event-Driven Architecture (EDA)

Using an event-driven architecture can significantly improve developmental

efficiency in terms of both speed and cost. This is because all events are

passed through a central event bus, which new services can easily connect with.

Not only can services listen for specific events, triggering new code where

appropriate, but they can also push events of their own to the event bus,

indirectly connecting to existing services. ... If you want to increase the

retention and lifetime value of customers, improving your application’s user

experience is a must. An event-driven architecture can be incredibly beneficial

to user experience (albeit indirectly) since it encourages you to think about

and build around… events! ... Using an event-driven architecture can also reduce

the running costs of your application. Since events are pushed to services as

they happen, there’s no need for services to poll each other for state changes

continuously. This leads to significantly fewer calls being made, which reduces

bandwidth consumption and CPU usage, ultimately translating to lower operating

costs. Additionally, those using a third-party API gateway or proxy will pay

less if they are billed per-call.
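
The central event bus described above can be sketched in a few lines. This is a minimal in-memory illustration, not a production design: the `EventBus` class, the event names (`order.placed`, `payment.captured`) and the three services are all hypothetical, and a real system would more likely use a message broker than a Python dictionary.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Hypothetical in-memory bus: services subscribe to and publish named events."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Register a handler to be called whenever event_type is published.
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        # Push the event to every subscriber; return how many were notified.
        handlers = list(self._handlers.get(event_type, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
shipped: List[int] = []
emails: List[str] = []


def billing_service(order: Dict[str, Any]) -> None:
    # Listens for orders and pushes an event of its own to the bus.
    bus.publish("payment.captured", {"order_id": order["order_id"]})


def shipping_service(payment: Dict[str, Any]) -> None:
    # Never calls billing directly; it only reacts to the event billing emits.
    shipped.append(payment["order_id"])


def email_service(order: Dict[str, Any]) -> None:
    # A new service added later without touching the existing ones.
    emails.append(f"confirmation for order {order['order_id']}")


bus.subscribe("order.placed", billing_service)
bus.subscribe("payment.captured", shipping_service)
bus.subscribe("order.placed", email_service)

bus.publish("order.placed", {"order_id": 42})
```

Here no service polls another for state changes: shipping is notified only when a payment event actually occurs, and the email service was connected by adding one `subscribe` call.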

Gartner says low-code, RPA, and AI driving growth in ‘hyperautomation’

Gartner said process-agnostic tools such as RPA, LCAP, and AI will drive the

hyperautomation trend because organizations can use them across multiple use

cases. Even though they constitute a small part of the overall market, their

impact will be significant, with Gartner projecting 54% growth in these

process-agnostic tools. Through 2024, the drive toward hyperautomation will lead

organizations to adopt at least three out of the 20 process-agnostic types of

software that enable hyperautomation, Gartner said. The demand for low-code

tools is already high as skills-strapped IT organizations look for ways to move

simple development projects over to business users. Last year, Gartner forecast

that three-quarters of large enterprises would use at least four low-code

development tools by 2024 and that low-code would make up more than 65% of

application development activity. Software automating specific tasks, such as

enterprise resource planning (ERP), supply chain management, and customer

relationship management (CRM), will also contribute to the market’s growth,

Gartner said.

When cryptography attacks – how TLS helps malware hide in plain sight

Lots of things that we rely on, and that are generally regarded as bringing

value, convenience and benefit to our lives…can be used for harm as well as

good. Even the proverbial double-edged sword, which theoretically gave ancient

warriors twice as much fighting power by having twice as much attack surface,

turned out to be, well, a double-edged sword. With no “safe edge” at the rear, a

double-edged sword that was mishandled, or driven back by an assailant’s

counter-attack, became a direct threat to the person wielding it instead of to

their opponent. ... The crooks have fallen in love with TLS as well. By using

TLS to conceal their malware machinations inside an encrypted layer,

cybercriminals can make it harder for us to figure out what they’re up to.

That’s because one stream of encrypted data looks much the same as any other.

Given a file that contains properly-encrypted data, you have no way of telling

whether the original input was the complete text of the Holy Bible, or the

compiled code of the world’s most dangerous ransomware. After they’re encrypted,

you simply can’t tell them apart – indeed, a well-designed encryption algorithm

should convert any input plaintext into an output ciphertext that is

indistinguishable from the sort of data you get by repeatedly rolling a die.

Decoupling Software-Hardware Dependency In Deep Learning

Working with distributed systems and data processing frameworks such as Apache Spark,

Distributed TensorFlow or TensorFlowOnSpark adds complexity. The cost of

associated hardware and software goes up too. Traditional software engineering

typically assumes that hardware is at best a non-issue and at worst a static

entity. In the context of machine learning, hardware performance directly

translates to reduced training time. So, there is a great incentive for the

software to follow the hardware development in lockstep. Deep learning often

scales directly with model size and data amount. As training times can be very

long, there is a powerful motivation to maximise performance using the latest

software and hardware. Changing the hardware and software may cause issues in

maintaining reproducible results and run up significant engineering costs while

keeping software and hardware up to date. Building production-ready systems with

deep learning components poses many challenges, especially if the company does

not have a large research group and a highly developed supporting

infrastructure. However, a new breed of startups has recently surfaced to

address the software-hardware disconnect.

4 tips for launching a successful data strategy

Your business partners know that data can be powerful, and they know that they

want it, but they do not always know, specifically, what data they need and how

to use it. The IT organization knows how to collect, structure, secure, and

serve up the data, but they are not typically responsible for defining how best

to leverage the data. This gap between serving up the data and using the data

can be as wide as the Ancient Mariner’s ocean (sorry), over which the CIO needs

to build a bridge. ... But how do we attract those brilliant data scientists who

can build the data dashboard straw man? To counter the challenge of a really

tight market for these rare birds, Nick Daffan, CIO of Verisk Analytics,

suggests giving data scientists what we all want: interesting work that creates

an impact. “Data scientists want to get their hands on data that has both depth

and breadth, and they want to work with the most advanced tools and methods,”

Daffan says. “They also want to see their models implemented, which means being

able to help their business partners and customers use the data in a productive

way.”

How to boost internal cyber security training

A big part of maintaining engagement among staff when it comes to cyber security

is explaining how the consequences of insufficient protection could affect

employees in particular. “Unless individuals feel personally invested, they tend

not to concern themselves with the impact of a breach,” said James Spiteri,

principal security specialist at Elastic. “Provide training that moves beyond

theory and shows the risks and implications through actual practice to help

engage the individual. For example, simulating an attack to show how an insecure

password or bad security hygiene on personal accounts can lead to unwanted

access of people’s personal information such as photos or payment details could

be very effective in changing behaviours. “Teams need to find relatable tools to

help break down the complexities of cyber security. Showcasing cyber security

problems through relatable items like phones, and everyday situations such as

connecting to public Wi-Fi, can help spread awareness of employees’ digital

footprint and how easy it is to spread information without being aware of

it.”

Shedding light on the threat posed by shadow admins

Threat actors seek shadow admin accounts because of their privilege and the

stealthiness they can bestow upon attackers. These accounts are not part of a

group of privileged users, meaning their activities can go unnoticed. If an

account is part of an Active Directory (AD) group, AD admins can monitor it, and

unusual behaviour is therefore relatively straightforward to pinpoint. However,

shadow admins are not members of a group since they gain a particular privilege

by a direct assignment. If a threat actor seizes control of one of these

accounts, they immediately have a degree of privileged access. This access

allows them to advance their attack subtly and craftily seek further privileges

and permissions while escaping defender scrutiny. Leaving shadow admin accounts

on an organization’s AD is a considerable risk that’s best compared to handing

over the keys to one’s kingdom to do a particular task and then forgetting to

track who has the keys and when to ask for them back. It pays to know who exactly

has privileged access, which is where AD admin groups help. Conversely, the

presence of shadow admin accounts could be a sign that an attack is underway.
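
The distinction drawn above, between privilege held through a monitored group and privilege held by direct assignment, can be expressed as a simple audit check. The sketch below is illustrative only: the `Account` record, the `find_shadow_admins` helper, and the sample accounts are hypothetical, the lists of privileged groups and sensitive rights are assumed examples, and the data is hard-coded rather than read from a live directory.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Assumed example lists; a real audit would use the organisation's own.
PRIVILEGED_GROUPS: Set[str] = {"Domain Admins", "Enterprise Admins"}
SENSITIVE_RIGHTS: Set[str] = {"GenericAll", "WriteDacl", "ResetPassword"}


@dataclass
class Account:
    """Hypothetical record of one account's groups and directly assigned rights."""
    name: str
    groups: Set[str] = field(default_factory=set)
    direct_rights: Set[str] = field(default_factory=set)


def find_shadow_admins(accounts: List[Account]) -> List[str]:
    """Return accounts holding a sensitive right directly, outside any privileged group."""
    flagged = []
    for account in accounts:
        in_privileged_group = bool(account.groups & PRIVILEGED_GROUPS)
        has_direct_privilege = bool(account.direct_rights & SENSITIVE_RIGHTS)
        if has_direct_privilege and not in_privileged_group:
            flagged.append(account.name)
    return flagged


accounts = [
    Account("alice", {"Domain Admins"}),               # visible, group-based admin
    Account("svc-backup", {"Staff"}, {"WriteDacl"}),   # privilege by direct assignment
    Account("bob", {"Staff"}),                         # ordinary user
]

print(find_shadow_admins(accounts))
```

In this sample only `svc-backup` is flagged: `alice` is privileged but sits in a group that admins can monitor, while `bob` holds no sensitive rights at all.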

Quote for the day:

"Leaders are more powerful role models

when they learn than when they teach." -- Rosabeth Moss Kanter