AI ‘Emotion Recognition’ Can’t Be Trusted


If emotion recognition becomes common, there’s a danger that we will simply accept it and change our behavior to accommodate its failings. In the same way that people now act in the knowledge that what they do online will be interpreted by various algorithms (e.g., choosing to not like certain pictures on Instagram because it affects your ads), we might end up performing exaggerated facial expressions because we know how they’ll be interpreted by machines. That wouldn’t be too different from signaling to other humans. Barrett says that perhaps the most important takeaway from the review is that we need to think about emotions in a more complex fashion. The expressions of emotions are varied, complex, and situational. She compares the needed change in thinking to Charles Darwin’s work on the nature of species and how his research overturned a simplistic view of the animal kingdom. “Darwin recognized that the biological category of a species does not have an essence, it’s a category of highly variable individuals,” says Barrett. “Exactly the same thing is true of emotional categories.”

With customer protection in mind, regulators are staying ahead of this technology and introducing the first wave of AI regulations meant to address AI transparency. This is a step in the right direction: it helps customers trust AI-driven experiences while still letting businesses reap the benefits of AI adoption. This first group of regulations concerns a customer's ability to understand an AI-driven, automated decision. That matters most for key decisions such as lending, insurance and health care, but it also applies to personalization, recommendations and similar uses. The General Data Protection Regulation (GDPR), specifically Articles 13 and 22, was the first regulation on automated decision-making to state that anyone subject to an automated decision has the right to be informed and the right to a meaningful explanation. According to Article 13(2)(f): "[Information about] the existence of automated decision-making, including profiling ... and ... meaningful information about the logic involved [is needed] to ensure fair and transparent processing."
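Article 13(2)(f) asks for "meaningful information about the logic involved" but does not prescribe a format. For a simple linear scoring model, one common way to provide it is to report how much each input pushed the score up or down. The sketch below assumes a hypothetical lending score: the feature names, weights and approval threshold are invented for illustration, and it shows one possible form of explanation rather than what the regulation requires.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative linear lending score (not from any real lender).
SCORE_WEIGHTS = {
    "income_thousands": 0.04,
    "years_employed": 0.3,
    "missed_payments": -1.5,
}
BIAS = -2.0
THRESHOLD = 0.0  # approve when score >= THRESHOLD (assumption)


@dataclass
class Decision:
    approved: bool
    score: float
    # (feature, contribution) pairs, most influential first
    reasons: List[Tuple[str, float]]


def decide_with_reasons(applicant: Dict[str, float]) -> Decision:
    """Score an applicant and record each feature's contribution to the score."""
    contributions = {name: w * applicant[name] for name, w in SCORE_WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    # Rank by absolute effect so the explanation leads with what mattered most.
    reasons = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return Decision(approved=score >= THRESHOLD, score=score, reasons=reasons)


if __name__ == "__main__":
    d = decide_with_reasons({"income_thousands": 50, "years_employed": 2, "missed_payments": 3})
    print("approved" if d.approved else "declined")
    for name, contribution in d.reasons:
        print(f"  {name}: {contribution:+.2f}")
```

Ranking contributions by magnitude lets the explanation open with the factor that weighed most heavily (here, missed payments), which is closer to what a customer needs than a raw score.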

Apple iPhones Hacked by Websites Exploiting Zero-Day Flaws

Google reported two serious flaws, CVE-2019-7287 and CVE-2019-7286, to Apple on Feb. 1, setting a seven-day deadline before disclosing them publicly, since they were apparently still zero-day vulnerabilities and were being used in active, in-the-wild attacks. Apple patched the flaws in iOS 12.1.4, released on Feb. 7 together with a security alert. Hacking modern operating systems, including iOS, typically requires chaining together exploits for multiple flaws. In the case of mobile operating systems, for example, attackers may need working exploits that let them initially access a device, typically via a WebKit-based browser, and then escape sandboxes and jailbreak the device to install a malicious piece of code. All told, Google says it counted five exploit chains that made use of 14 vulnerabilities: "seven for the iPhone's web browser, five for the kernel and two separate sandbox escapes." The identified exploits could have been used to hack devices running iOS 10, which was released on Sept. 13, 2016, and nearly every newer version of iOS through the latest version of iOS 12.
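The chaining described above can be modeled, in simplified form, as a sequence of stages that must all succeed. The sketch below is a conceptual, defensive model only: the stage labels are our own names for the steps in the paragraph (browser exploit for initial access, sandbox escape, kernel exploit to jailbreak), and it also tallies Google's reported per-component counts to confirm they add up to 14. Real chains vary; not every chain in Google's findings necessarily used a separate sandbox escape.

```python
from typing import Set

# Google's reported breakdown of the 14 vulnerabilities used across the five chains.
VULNS_BY_COMPONENT = {"webkit_browser": 7, "kernel": 5, "sandbox_escape": 2}

# Simplified stages of a typical chain as described in the text (labels are our own):
# browser exploit for initial access -> sandbox escape -> kernel exploit to jailbreak
# the device, after which the malicious code can be installed.
CHAIN_STAGES = ("webkit_browser", "sandbox_escape", "kernel")


def chain_succeeds(working_exploits: Set[str]) -> bool:
    """In this model, a chain reaches the implant step only if every stage has a working exploit."""
    return all(stage in working_exploits for stage in CHAIN_STAGES)


print(sum(VULNS_BY_COMPONENT.values()))  # 14
print(chain_succeeds(set(CHAIN_STAGES)))  # True
# Patching any single stage (here, the sandbox escape) breaks the whole chain.
print(chain_succeeds(set(CHAIN_STAGES) - {"sandbox_escape"}))  # False
```

The model makes the defender's advantage explicit: the attacker needs every link, so patching any one of them, as iOS 12.1.4 did for two flaws, can neutralize an entire chain.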

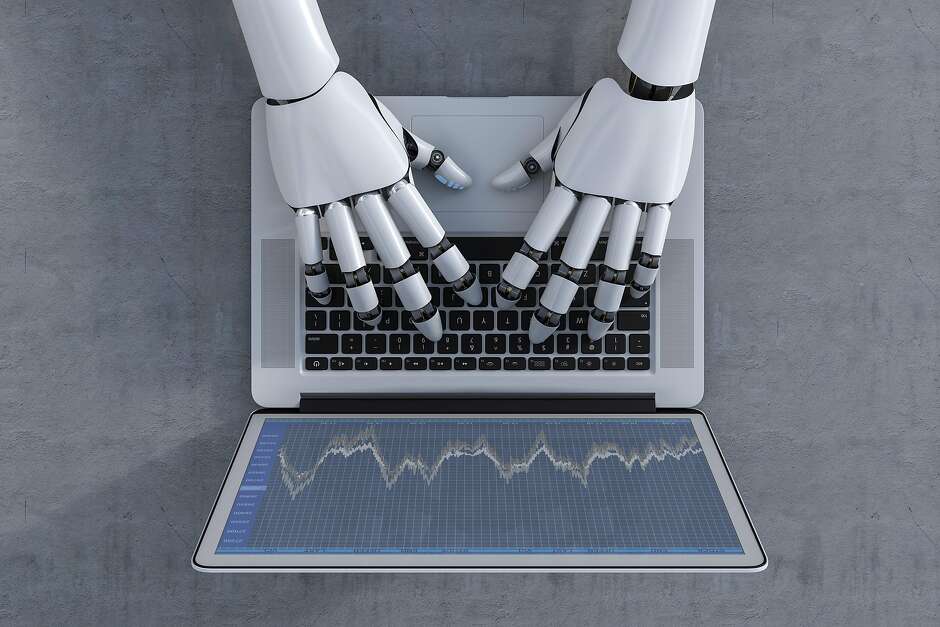

The challenge: creating a better future of work

With appropriate policies, any job can become a good job. There’s nothing about today’s low-wage service jobs, home-care work and gig jobs that means we can’t make them good jobs, as we have done before. The jobs of the future are upon us today. We can’t turn the clock back and resurrect all of the manufacturing jobs that have disappeared. But we can create the good jobs of the future. Rather than wondering what kinds of jobs we will be doing for robot bosses, we need to decide what we want work and jobs to be doing for us, our families and our communities in the future. The state can take the lead in charting a new path forward that works for all Californians. In an executive order creating a Future of Work Commission, Gov. Gavin Newsom emphasized the need to “modernize the social compact between the government, the private sectors and workers.” We can begin to formulate policies that set guardrails on how robots and artificial intelligence are used, so that they improve the quality of jobs rather than just replace them. We can look beyond upskilling workers to upgrading jobs.

Electronic word-of-mouth can make or break a product launch

eWOM can also affect product strategy. Executives at GM scrapped plans for a type of Buick crossover after reading tweets criticizing the design. And beauty products retailer Sephora canceled the release of a Starter Witch Kit — an innovative product that combined perfumes with tarot cards and a crystal ball, among other items — after critics accused the brand of trivializing witchcraft as a religious practice. So what’s the key to getting product launches to go viral, generating positive eWOM across the Internet? Researchers have yet to connect the dots between innovativeness, a firm’s marketing strategies, and the sentiments expressed through eWOM channels, particularly as they relate to the success of new products. But a new study aims to make those connections and provides suggestions for creating effective viral marketing campaigns for new products. To arrive at their findings, the authors conducted a two-phase study. The first phase analyzed a data set of millions of eWOM posts on message boards, forums, and social media platforms such as Facebook, Twitter, and Instagram.
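The paragraph does not say how the study measured sentiment across its eWOM posts. As a minimal illustration, the sketch below assumes each post has already been labeled positive, negative or neutral (by whatever classifier or coding scheme a researcher chooses) and summarizes them per product as a net sentiment score, (positive - negative) / total. The product names and posts are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def net_sentiment(posts: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Return (positive - negative) / total posts for each product.

    Each post is a (product, label) pair, where label is 'positive',
    'negative' or 'neutral'; labeling is assumed to happen upstream.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for product, label in posts:
        counts[product][label] += 1
    return {
        product: (c["positive"] - c["negative"]) / sum(c.values())
        for product, c in counts.items()
    }


# Hypothetical labeled posts for two products.
sample = [
    ("product_a", "positive"),
    ("product_a", "positive"),
    ("product_a", "negative"),
    ("product_a", "neutral"),
    ("product_b", "negative"),
    ("product_b", "negative"),
]
print(net_sentiment(sample))  # {'product_a': 0.25, 'product_b': -1.0}
```

Dividing by the total post count keeps products with very different levels of buzz comparable; a study relating sentiment to launch success would track such a score over time alongside volume.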

Why 2-factor authentication isn't foolproof

Two-factor authentication is certainly more effective than just a username and password. But the risks of attack and data breach remain if 2FA is poorly implemented, especially when appropriate checks aren't performed before the authentication challenges are presented. Password leakage and credential misuse are on the rise, and attackers are continuously devising new ways to improperly access organizations and systems. We need to embrace evolving approaches to identity security that improve security posture while keeping the user experience simple. Modern, adaptive, risk-based approaches that leverage real-time metadata and threat detection techniques have to be the standard. Intelligence needs to be built into the authentication process so that it can apply dynamic controls in real time, including the ability to block authentication requests that are judged to be high risk. Checks for these risks include detecting anonymous proxy usage and malicious IP addresses, applying dynamic geo-controls and device controls, and analyzing unusual access patterns or overly privileged accounts.
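The paragraph describes checks that run before a 2FA challenge is presented. A minimal sketch of that idea follows: each risk signal named above adds to a score, and the score decides whether to present the usual second factor, demand a stronger one, or block the request before any challenge is shown. The signal names, weights and thresholds are hypothetical, and detecting the signals themselves (proxy lists, IP reputation feeds, device fingerprints) is assumed to happen upstream.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical weights for the risk signals named in the text; real systems tune these.
RISK_WEIGHTS = {
    "anonymous_proxy": 40,
    "malicious_ip": 60,
    "disallowed_geo": 30,
    "unknown_device": 20,
    "unusual_access_pattern": 25,
    "privileged_account": 15,
}
BLOCK_AT = 60    # block outright at or above this score (assumption)
STEP_UP_AT = 20  # require a stronger second factor at or above this score (assumption)


@dataclass(frozen=True)
class AuthRequest:
    user: str
    signals: FrozenSet[str] = frozenset()


def risk_score(req: AuthRequest) -> int:
    """Sum the weights of the signals present; unknown signals contribute nothing."""
    return sum(RISK_WEIGHTS.get(s, 0) for s in req.signals)


def auth_decision(req: AuthRequest) -> str:
    """Decide, before any 2FA challenge is shown, how to handle the request."""
    score = risk_score(req)
    if score >= BLOCK_AT:
        return "block"         # never present a challenge to a high-risk request
    if score >= STEP_UP_AT:
        return "step_up"       # ask for a stronger factor than usual
    return "standard_2fa"      # present the normal second-factor challenge


print(auth_decision(AuthRequest("alice")))  # standard_2fa
print(auth_decision(AuthRequest("bob", frozenset({"unknown_device"}))))  # step_up
print(auth_decision(AuthRequest("eve", frozenset({"anonymous_proxy", "unknown_device"}))))  # block
```

An additive score is the simplest design; it lets several weak signals (a new device from an anonymizing proxy) add up to a block even when neither would justify one alone.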

Rating IoT devices to gauge their impact on your network

Devices with low-bandwidth requirements include smart-building devices such as connected door locks and light switches that mostly report “open” or “closed,” or “on” or “off.” Fewer demands on a given data link open up the possibility of using less-capable wireless technology. Low-power WAN technologies such as Sigfox might not have the bandwidth to handle large amounts of traffic, but they are well suited to connections that don’t need to move much data in the first place, and they can cover significant areas. The range of Sigfox is 3 to 50 km depending on the terrain; for Bluetooth, it’s 100 meters to 1,000 meters, depending on the class of Bluetooth being used. Conversely, an IoT setup such as multiple security cameras connected through a central hub to a backend for image analysis will require many times more bandwidth. In such a case the networking piece of the puzzle will have to be more capable and, consequently, more expensive. Widely distributed devices could demand a dedicated LTE connection, for example, or perhaps even a microcell of their own for coverage.
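The trade-off above between bandwidth, range and cost can be expressed as a simple filter. The sketch below conservatively uses the low end of each range quoted in the text (3 km for Sigfox, 100 m for Bluetooth) and treats LTE as the only high-bandwidth option; the two-tier bandwidth flag and LTE's unlimited range are simplifying assumptions, not measured figures.

```python
import math
from typing import List

# Conservative (worst-case) range in meters for each link, from the low end of the
# ranges quoted above. LTE coverage depends on the carrier; it is modeled as
# unlimited here (a simplifying assumption).
LINKS = {
    "sigfox": {"worst_case_range_m": 3_000, "high_bandwidth": False},
    "bluetooth": {"worst_case_range_m": 100, "high_bandwidth": False},
    "lte": {"worst_case_range_m": math.inf, "high_bandwidth": True},
}


def candidate_links(distance_m: float, needs_high_bandwidth: bool) -> List[str]:
    """Return links that can cover distance_m and meet the device's bandwidth tier."""
    return [
        name
        for name, spec in LINKS.items()
        if distance_m <= spec["worst_case_range_m"]
        and (spec["high_bandwidth"] or not needs_high_bandwidth)
    ]


# A door lock 50 m from its gateway: less-capable links are options.
print(candidate_links(50, needs_high_bandwidth=False))  # ['sigfox', 'bluetooth', 'lte']
# A security camera streaming video: only the high-bandwidth link qualifies.
print(candidate_links(50, needs_high_bandwidth=True))  # ['lte']
```

Using the worst-case end of each range avoids picking a link that only works on favorable terrain or with a higher Bluetooth class than the device actually has.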

Addressing Large, Complex Unresolved Problems With AI

Track the demand for skills in the market, and the educational infrastructure available to supply those skills, through a Skills Repository. This will help keep education in step with current market demands and ensure much better alignment between academia and corporates; Automate routine, time-consuming tasks, such as creating and grading test papers, developing personalized benchmarks for each student, identifying gaps in student development, and tracking aptitude and attentiveness within each subject, freeing teachers to focus on curriculum development, coaching and mentoring, and students’ behavioral and personality development; ... Use natural language processing and voice recognition to review and summarize long-drawn-out cases and their history; Route Right-to-Information and governance-related citizen requests through intelligent bots, making it more efficient to obtain critical information; Employ anomaly detection frameworks to surface fraudulent transactions, especially in land deals.
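The text does not say which anomaly detection framework it has in mind. As a minimal stand-in, the sketch below flags land deals whose price per square meter sits far from the median, using the modified z-score based on the median absolute deviation (MAD), a standard robust outlier rule; 3.5 is a commonly used cutoff. The prices are hypothetical, and a flagged deal is a lead for review, not proof of fraud.

```python
from statistics import median
from typing import List, Sequence


def flag_outliers(values: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return indices whose modified z-score exceeds threshold.

    Uses the median and the median absolute deviation (MAD), which are far
    less distorted by the outliers themselves than the mean and standard deviation.
    """
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread to compare against
    # 0.6745 rescales MAD so the score is comparable to a standard z-score.
    return [i for i, v in enumerate(values) if abs(0.6745 * (v - med) / mad) > threshold]


# Hypothetical price per square meter for eight land deals in one area.
prices = [1000, 1050, 980, 1020, 990, 1010, 300, 1030]
print(flag_outliers(prices))  # [6]
```

Here the deal registered at 300 stands out sharply from its neighbors, the kind of undervalued transaction an investigator might want to examine.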

TrickBot Variant Enables SIM Swapping Attacks: Report

The operators of this version of TrickBot can intercept a victim's PIN and other credentials when the victim attempts to log on to the websites of the three wireless carriers, according to the report. This enables a so-called SIM swapping attack, in which the attacker takes the victim's phone number and ports it to another SIM card under the attacker's control. The attacker can then collect one-time passwords, or use social engineering to trick telecom employees into giving out information about the victim. These moves create opportunities for further attacks, such as account takeover schemes. "Interception of short message service (SMS)-based authentication tokens or password resets is frequently used during account takeover fraud," the SecureWorks report notes. Over the past year, SIM swapping has been used in the U.K. in attempted account takeover attacks targeting banks and other financial institutions. Account takeover attacks can pave the way for credential stuffing, a technique in which attackers try stolen usernames and passwords on other accounts to steal data.
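Because a SIM swap moves the victim's number to an attacker-controlled SIM, one defensive pattern is to stop trusting SMS one-time passwords for a number whose SIM changed recently. The sketch below assumes the service can learn when a number's SIM was last changed; the `last_sim_change` input is hypothetical, and the 7-day window is an arbitrary illustrative choice.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

SIM_CHANGE_WINDOW = timedelta(days=7)  # illustrative cooling-off period (assumption)


def sms_otp_allowed(last_sim_change: Optional[datetime], now: datetime) -> bool:
    """Refuse SMS one-time passwords if the number's SIM changed recently.

    last_sim_change is a hypothetical input: when the number was last moved
    to a new SIM, or None if no change is on record.
    """
    if last_sim_change is None:
        return True
    return now - last_sim_change > SIM_CHANGE_WINDOW


now = datetime(2019, 8, 20, tzinfo=timezone.utc)
print(sms_otp_allowed(None, now))  # True
print(sms_otp_allowed(now - timedelta(days=2), now))  # False: recent change, use another factor
print(sms_otp_allowed(now - timedelta(days=30), now))  # True
```

When the check fails, the service would fall back to a factor that does not travel over SMS, which removes the payoff of intercepting one-time passwords after a swap.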

Great Global Meetings: Navigating Cultural Differences

Team members will know their cultural differences are getting in the way, but they don’t have a safe or honest way to talk about them. Without a chance for team members to work through these differences, a collision course is inevitable. By missing the opportunity to openly explore how cultural differences affect its ability to collaborate, a team may become mired in cultural misunderstandings and handcuffed by invalid assumptions. Many people may be afraid of saying the wrong thing or asking a question that might be offensive. Global team leaders should initiate candid discussions about cross-cultural differences as early as possible, ideally when a new team is forming. Cultural differences will affect collaboration one way or another, so it’s best to have team members familiarize themselves with each other’s cultures right up front, so they can decide how they want to work together moving forward. Allocate time for checkpoints at key junctures in the conversation. Pause periodically to let all participants absorb what’s just been said. Some people, Americans in particular, often feel compelled to puncture silence with a comment.

Quote for the day:

"Tend to the people, and they will tend to the business." -- John C. Maxwell