Quote for the day:

“Wisdom equals knowledge plus courage. You have to not only know what to do and when to do it, but you have to also be brave enough to follow through.” -- Jarod Kintz

The Hidden Data Cost: Why Developer Soft Skills Matter More Than You Think

The logic is simple but under-discussed: developers who struggle to communicate

with product owners, translate goals into architecture, or anticipate

system-wide tradeoffs are more likely to build the wrong thing, need more

rework, or get stuck in cycles of iteration that waste time and resources. These

are not theoretical risks, they’re quantifiable cost drivers. According to

Lumenalta’s findings, organizations that invest in well-rounded senior

developers, including soft skill development, see fewer errors, faster time to

delivery, and stronger alignment between technical execution and business value.

... The irony? Most organizations already have technically proficient talent

in-house. What they lack is the environment to develop those skills that drive

high-impact outcomes. Senior developers who think like “chess masters”—a term

Lumenalta uses for those who anticipate several moves ahead—can drastically

reduce a project’s TCO by mentoring junior talent, catching architecture risks

early, and building systems that adapt rather than break under pressure. ... As

AI reshapes every layer of tech, developers who can bridge business goals and

algorithmic capabilities will become increasingly valuable. It’s not just about

knowing how to fine-tune a model, it’s about knowing when not to.

Why AV is an overlooked cybersecurity risk

As cyber attackers become more sophisticated, they’re shifting their attention

to overlooked entry points like AV infrastructure. A good example is YouTuber

Jim Browning’s infiltration of a scam call center, where he used unsecured CCTV

systems to monitor and expose criminals in real time. This highlights the

potential for AV vulnerabilities to be exploited for intelligence gathering. To

counter these risks, organizations must adopt a more proactive approach.

Simulated social engineering and phishing attacks can help assess user awareness

and expose vulnerabilities in behavior. These simulations should be backed by

ongoing training that equips staff to recognize manipulation tactics and

understand the value of security hygiene. ... To mitigate the risks posed by

vulnerable AV systems, organizations should take a proactive and layered

approach to security. This includes regularly updating device firmware and

underlying software packages, which are often left outdated even when new

versions are available. Strong password policies should be enforced,

particularly on devices running webservers, with security practices aligned to

standards like the OWASP Top 10. Physical access to AV infrastructure must also

be tightly controlled to prevent unauthorized LAN connections.

As cyber attackers become more sophisticated, they’re shifting their attention

to overlooked entry points like AV infrastructure. A good example is YouTuber

Jim Browning’s infiltration of a scam call center, where he used unsecured CCTV

systems to monitor and expose criminals in real time. This highlights the

potential for AV vulnerabilities to be exploited for intelligence gathering. To

counter these risks, organizations must adopt a more proactive approach.

Simulated social engineering and phishing attacks can help assess user awareness

and expose vulnerabilities in behavior. These simulations should be backed by

ongoing training that equips staff to recognize manipulation tactics and

understand the value of security hygiene. ... To mitigate the risks posed by

vulnerable AV systems, organizations should take a proactive and layered

approach to security. This includes regularly updating device firmware and

underlying software packages, which are often left outdated even when new

versions are available. Strong password policies should be enforced,

particularly on devices running webservers, with security practices aligned to

standards like the OWASP Top 10. Physical access to AV infrastructure must also

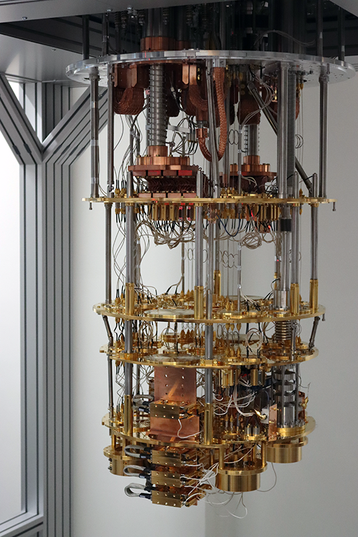

be tightly controlled to prevent unauthorized LAN connections. EU Presses for Quantum-Safe Encryption by 2030 as Risks Grow

The push comes amid growing concern about the long-term viability of

conventional encryption techniques. Current security protocols rely on complex

mathematical problems — such as factoring large numbers — that would take

today’s classical computers thousands of years to solve. But quantum computers

could potentially crack these systems in a fraction of the time, opening the

door to what cybersecurity experts refer to as “store now, decrypt later”

attacks. In these attacks, hackers collect encrypted data today with the

intention of breaking the encryption once quantum technology matures. Germany’s

Federal Office for Information Security (BSI) estimates that conventional

encryption could remain secure for another 10 to 20 years in the absence of

sudden breakthroughs, The Munich Eye reports. Europol has echoed that forecast,

suggesting a 15-year window before current systems might be compromised. While

the timeline is uncertain, European authorities agree that proactive planning is

essential. PQC is designed to resist attacks from both classical and quantum

computers by using algorithms based on different kinds of hard mathematical

problems. These newer algorithms are more complex and require different

computational strategies than those used in today’s standards like RSA and

ECC.
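
To make the factoring dependence concrete, here is a toy sketch in Python. The primes, exponent, and message are illustrative assumptions and far too small for real use; the point is only that whoever can factor the public modulus can rebuild the private key and read ciphertext that was stored earlier. Trial division stands in for the attacker's capability here and is not a quantum algorithm.

```python
# Toy RSA with tiny, illustrative primes (real keys use primes hundreds of digits long).
p, q = 61, 53
n = p * q                        # public modulus
e = 17                           # public exponent
phi = (p - 1) * (q - 1)
d = pow(e, -1, phi)              # private exponent (modular inverse, Python 3.8+)

message = 65
ciphertext = pow(message, e, n)  # what an eavesdropper could store today


def factor(modulus):
    """Trial division: feasible only because the modulus is tiny."""
    f = 2
    while f * f <= modulus:
        if modulus % f == 0:
            return f, modulus // f
        f += 1
    raise ValueError("modulus is prime")


# "Store now, decrypt later": once n can be factored, d can be rebuilt.
p2, q2 = factor(n)
d_recovered = pow(e, -1, (p2 - 1) * (q2 - 1))
recovered = pow(ciphertext, d_recovered, n)
```

Post-quantum schemes avoid this particular weakness by resting on problems other than factoring, as the excerpt notes.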

MongoDB Doubles Down on India's Database Boom

Chawla says MongoDB is helping Indian enterprises move beyond legacy systems through two distinct approaches. "The first one is when customers decide to build a completely new modern application, gradually sunsetting the old legacy application," he explains. "We work closely with them to build these modern systems." ... Despite this fast-paced growth, Chawla points out several lingering myths in India. "A lot of customers still haven't realised that if you want to build a modern application especially one that's AI-driven you can't build it on a relational structure," he explains. "Most of the data today is unstructured and messy. So you need a database that can scale, can handle different types of data, and support modern workloads." ... Even those trying to move away from traditional databases often fall into the trap of viewing PostgreSQL as a modern alternative. "PostgreSQL is still relational in nature. It has the same row-and-column limitations and scalability issues." He also adds that if companies want to build a future-proof application especially one that infuses AI capabilities they need something that can handle all data types and offers native support for features like full-text search, hybrid search, and vector search. Other NoSQL players such as Redis and Apache Cassandra also have significant traction in India.

AI only works if the infrastructure is right

The successful implementation of artificial intelligence is therefore closely

linked to the underlying infrastructure. But how you define that AI

infrastructure is open to debate. An AI infrastructure always consists of

different components, which is clearly reflected in the diverse backgrounds of

the participating parties. As a customer, how can you best assess such an AI

infrastructure? ... For companies looking to get started with AI infrastructure,

a phased approach is crucial. Start small with a pilot, clearly define what you

want to achieve, and expand step by step. The infrastructure must grow with the

ambitions, not the other way around. A practical approach must be based on the

objectives. Then the software, middleware, and hardware will be available. For

virtually every use case, you can choose from the necessary and desired

components. ... At the same time, the AI landscape requires a high degree of

flexibility. Technological developments are rapid, models change, and business

requirements can shift from quarter to quarter. It is therefore essential to

establish an infrastructure that is not only scalable but also adaptable to new

insights or shifting objectives. Consider the possibility of dynamically scaling

computing capacity up or down, compressing models where necessary, and deploying

tooling that adapts to the requirements of the use case.

Software abstraction: The missing link in commercially viable quantum computing

Quantum Infrastructure Software delivers this essential abstraction, turning bare-metal QPUs into useful devices, much the way data center providers integrate virtualization software for their conventional systems. Current offerings cover all of the functions typically associated with the classical BIOS up through virtual machine Hypervisors, extending to developer tools at the application level. Software-driven abstraction of quantum complexity away from the end users lets anyone, irrespective of their quantum expertise, leverage quantum computing for the problems that matter most to them. ... With a finely tuned quantum computer accessible, a user must still execute many tasks to extract useful answers from the QPU, in analogy with the need for careful memory management required to gain practical acceleration with GPUs. Most importantly, in executing a real workload, they must convert high-level “assembly-language” logical definitions of quantum applications into hardware-specific “machine-language” instructions that account for the details of the QPU in use, and deploy countermeasures where errors might leak in. These are typically tasks that can only be handled by (expensive!) specialists in quantum-device operation.

Guest Post: Why AI Regulation Won’t Work for Quantum

Artificial intelligence regulation has been in the regulatory spotlight for the

past seven to ten years and there is no shortage of governments and global

institutions, as well as corporations and think tanks, putting forth regulatory

frameworks in response to this widely buzzy tech. AI makes decisions in a “black

box,” creating a need for “explainability” in order to fully understand how

determinations by these systems affect the public. With the democratization of

AI systems, there is the potential for bad actors to create harm in a

decentralized ecosystem. ... Because quantum systems do not learn on their own,

evolve over time, or make decisions based on training data, they do not pose the

same kind of existential or social threats that AI does. Whereas the

implications of quantum breakthroughs will no doubt be profound, especially in

cryptography, defense, drug development, and material science, the core risks

are tied to who controls the technology and for what purpose. Regulating who

controls technology and ensuring bad actors are disincentivized from using

technology in harmful ways is the stuff of traditional regulation across many

sectors, so regulating quantum should prove somewhat less challenging than

current AI regulatory debates would suggest.

Validation is an Increasingly Critical Element of Cloud Security

Security engineers simply don’t have the time or resources to familiarize

themselves with the vast number of cloud services available today. In the past,

security engineers primarily needed to understand Windows and Linux internals,

Active Directory (AD) domain basics, networks and some databases and storage

solutions. Today, they need to be familiar with hundreds of cloud services, from

virtual machines (VMs) to serverless functions and containers at different

levels of abstraction. ... It’s also important to note that cloud environments

are particularly susceptible to misconfigurations. Security teams often

primarily focus on assessing the performance of their preventative security

controls, searching for weaknesses in their ability to detect attack activity.

But this overlooks the danger posed by misconfigurations, which are not caused

by bad code, software bugs, or malicious activity. That means they don’t fall

within the definition of “vulnerabilities” that organizations typically test

for—but they still pose a significant danger. ... Securing the cloud isn’t

just about having the right solutions in place — it’s about determining whether

they are functioning correctly. But it’s also about making sure attackers don’t

have other, less obvious ways into your network.
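
As a rough illustration of checking for misconfigurations rather than code vulnerabilities, the sketch below audits a storage-bucket configuration. The `BucketConfig` fields and the rules are hypothetical and do not correspond to any specific cloud provider's API; a real validation program would read the live configuration from the provider and test far more settings.

```python
from dataclasses import dataclass


@dataclass
class BucketConfig:
    """Hypothetical snapshot of one storage bucket's settings."""
    name: str
    public_read: bool
    encryption_enabled: bool
    access_logging: bool


def find_misconfigurations(cfg: BucketConfig) -> list[str]:
    """Return human-readable findings; an empty list means no rule fired."""
    findings = []
    if cfg.public_read:
        findings.append(f"{cfg.name}: bucket is publicly readable")
    if not cfg.encryption_enabled:
        findings.append(f"{cfg.name}: encryption at rest is disabled")
    if not cfg.access_logging:
        findings.append(f"{cfg.name}: access logging is disabled")
    return findings


# Neither bucket contains bad code or a software bug, yet one is exposed.
buckets = [
    BucketConfig("reports", public_read=False, encryption_enabled=True, access_logging=True),
    BucketConfig("backups", public_read=True, encryption_enabled=False, access_logging=True),
]
all_findings = [f for b in buckets for f in find_misconfigurations(b)]
```

None of these findings would show up in a scan for software flaws, which is why they need their own checks.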

Build and Deploy Scalable Technical Architecture a Bit Easier

A critical challenge when transforming proof-of-concept systems into

production-ready architecture is balancing rapid development with future

scalability. At one organization, I inherited a monolithic Python application

that was initially built as a lead distribution system. The prototype performed

adequately in controlled environments but struggled when processing real-world

address data, which, by their nature, contain inconsistencies and edge cases.

... Database performance often becomes the primary bottleneck in scaling

systems. Domain-Driven Design (DDD) has proven particularly valuable for

creating loosely coupled microservices, with its strategic phase ensuring that

the design architecture properly encapsulates business capabilities, and the

tactical phase allowing the creation of domain models using effective design

patterns. ... For systems with data retention policies, table partitioning

proved particularly effective, turning one table into several while maintaining

the appearance of a single table to the application. This allowed us to

implement retention simply by dropping entire partition tables rather than

performing targeted deletions, which prevented database bloat. These

optimizations reduced average query times from seconds to milliseconds, enabling

support for much higher user loads on the same infrastructure.
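
The retention-by-partition idea can be sketched as follows. The excerpt does not name the database, so this assumes PostgreSQL-style declarative range partitioning by month; the `events` table name, the monthly granularity, and the helper functions are all hypothetical. The code only builds SQL strings and does not connect to a database.

```python
from datetime import date


def next_month(start: date) -> date:
    """First day of the month after `start`."""
    if start.month == 12:
        return date(start.year + 1, 1, 1)
    return date(start.year, start.month + 1, 1)


def partition_name(table: str, start: date) -> str:
    return f"{table}_{start:%Y_%m}"


def create_partition_sql(table: str, start: date) -> str:
    """DDL for one monthly partition (assumed PostgreSQL syntax)."""
    return (
        f"CREATE TABLE {partition_name(table, start)} PARTITION OF {table} "
        f"FOR VALUES FROM ('{start}') TO ('{next_month(start)}');"
    )


def retention_sql(table: str, partitions: list[date], today: date, keep_months: int) -> list[str]:
    """DROP statements for partitions that start before the retention cutoff."""
    month_index = today.year * 12 + (today.month - 1) - keep_months
    cutoff = date(month_index // 12, month_index % 12 + 1, 1)
    return [
        f"DROP TABLE {partition_name(table, start)};"
        for start in sorted(partitions)
        if start < cutoff
    ]


existing = [date(2025, m, 1) for m in range(1, 7)]  # Jan through Jun 2025
drops = retention_sql("events", existing, today=date(2025, 6, 15), keep_months=3)
```

Dropping a whole partition removes its rows in one metadata operation, whereas a targeted `DELETE` leaves dead rows behind that must be cleaned up later.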

What AI Policy Can Learn From Cyber: Design for Threats, Not in Spite of Them

The narrative that constraints kill innovation is both lazy and false. In

cybersecurity, we’ve seen the opposite. Federal mandates like the Federal

Information Security Modernization Act (FISMA), which forced agencies to map

their systems, rate data risks, and monitor security continuously, and

state-level laws like California’s data breach notification statute created the

pressure and incentives that moved security from afterthought to design

priority. ... The irony is that the people who build AI, like their

cybersecurity peers, are more than capable of innovating within meaningful

boundaries. We’ve both worked alongside engineers and product leaders in

government and industry who rise to meet constraints as creative challenges.

They want clear rules, not endless ambiguity. They want the chance to build

secure, equitable, high-performing systems — not just fast ones. The real risk

isn’t that smart policy will stifle the next breakthrough. The real risk is that

our failure to govern in real time will lock in systems that are flawed by

design and unfit for purpose. Cybersecurity found its footing by designing for

uncertainty and codifying best practices into adaptable standards. AI can do the

same if we stop pretending that the absence of rules is a virtue.
