How retailers can manage data loss threats during remote work

While an e-commerce store often relies on many software tools to help make

day-to-day operations a little easier, it's likely that the number of apps being

used has gone up with the increase in remote work. However, separate software

tools don't always play nice together, and the level of access and control they

have over your data might surprise you. Some even have the ability to delete

your data without warning. At least once a year, e-commerce merchants should

audit all the applications connected to their online store. Terms and conditions

can change so it's best you understand any changes in the last 365 days. List

all the pros and cons of each integration and decide if any tradeoffs are worth

it. SaaS doesn't save everything. Software-as-a-service (SaaS) tools

will always ensure the nuts and bolts of the platform work. However, protecting

all the data stored inside a SaaS or cloud solution like BigCommerce or Shopify

rests on the shoulders of users. If you don't fully back up all the content and

information in your store, there's absolutely no guarantee it will be there the

next time you log in. This model isn't limited to just e-commerce platforms.

Accounting software like QuickBooks, productivity tools like Trello and even

code repositories like GitHub all follow the same model.

Don't make these cyber resiliency mistakes

Manea begins by sharing the well-worn axiom that defenders must protect every

possible opening, whereas attackers need only one way in. If realistic, that truism

alone should be enough to replace a prevention attitude with one based on

resilience. Manea then suggests caution. "Make sure you understand your

organizational constraints—be they technological, budgetary, or even

political—and work to minimize risk with the resources that you're given. Think

of it as a game of economic optimization." ... Put simply, a digital threat-risk

assessment is required. Manea suggests that a team including representatives

from the IT department, business units, and upper management work together to

create a security-threat model of the organization, keeping in mind: What

would an attacker want to achieve? What is the easiest way for an attacker

to achieve it? What are the risks, their severity, and their

likelihood? An accurate threat model allows IT-department personnel to implement

security measures where they are most needed and not waste resources. "Once

you've identified your crown jewels and the path of least resistance, focus on

adding obstacles to that path," he said.
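
One common way to turn the three questions above into a ranked list is to score each threat by severity times likelihood. The sketch below is only an illustration of that idea, not Manea's method: the 1-to-5 scales, the `Threat` class, and the sample threats are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    severity: int    # hypothetical scale: 1 (minor) to 5 (critical)
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (expected)

    @property
    def risk(self) -> int:
        # Simple product score; other weightings are equally plausible.
        return self.severity * self.likelihood


def prioritize(threats):
    """Return threats ordered from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk, reverse=True)


# Made-up entries, for illustration only.
threats = [
    Threat("Lost laptop without disk encryption", severity=3, likelihood=2),
    Threat("Phishing of finance staff", severity=4, likelihood=5),
    Threat("Unpatched VPN appliance", severity=5, likelihood=3),
]

for threat in prioritize(threats):
    print(f"{threat.risk:>2}  {threat.name}")
```

The top of the sorted list is where obstacles would be added first; the scores matter less than the discussion needed to agree on them.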

Researchers have developed a deep-learning algorithm that can de-noise images

In conventional deep-learning-based image processing techniques, the number and

network between layers decide how many pixels in the input image contribute to

the value of a single pixel in the output image. This value is immutable after

the deep-learning algorithm has been trained and is ready to de-noise new

images. However, Ji says fixing the number of input pixels, technically

called the receptive field, limits the performance of the algorithm. “Imagine a

piece of specimen having a repeating motif, like a honeycomb pattern. Most

deep-learning algorithms only use local information to fill in the gaps in the

image created by the noise,” Ji says. “But this is inefficient because the

algorithm is, in essence, blind to the repeating pattern within the image since

the receptive field is fixed. Instead, deep-learning algorithms need to have

adaptive receptive fields that can capture the information in the overall image

structure.” To overcome this hurdle, Ji and his students developed another

deep-learning algorithm that can dynamically change the size of the receptive

field. In other words, unlike earlier algorithms that can only aggregate

information from a small number of pixels, their new algorithm, called global

voxel transformer networks (GVTNets), can pool information from a larger area of

the image if required.
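
To make the idea of a fixed receptive field concrete, the sketch below computes it for a plain stack of convolution layers using the standard recurrence for undilated convolutions. It does not implement GVTNets; the function name and the layer lists are assumptions chosen for illustration.

```python
def receptive_field(layers):
    """Receptive field (in input pixels, along one axis) of a conv stack.

    layers: list of (kernel_size, stride) tuples, ordered input to output.
    Assumes ordinary convolutions without dilation.
    """
    rf = 1    # before any layer, one output pixel sees one input pixel
    jump = 1  # spacing, in input pixels, between neighbouring positions
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


# Three 3x3 convolutions with stride 1.
print(receptive_field([(3, 1), (3, 1), (3, 1)]))  # prints 7

# Same depth, but the middle layer has stride 2.
print(receptive_field([(3, 1), (3, 2), (3, 1)]))  # prints 9
```

Once the layers are chosen, the result is a constant: every output pixel draws on the same-sized window regardless of what the image contains, which is the limitation Ji describes.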

Manufacturers Take the Initiative in Home IoT Security

Although ensuring basic connectivity between endpoint devices and the many

virtual assistants they connect to would seem to be a basic necessity, many

consumers have encountered issues getting their devices to work together

effectively. While interoperability and security standards exist, there are none

in place that provide consumers the assurance their smart home device will

seamlessly and securely connect. To respond to consumer concerns, “Project

Connected Home over IP” was launched in December 2019. Initiated by Amazon,

Apple, Google and the Zigbee Alliance, this working group focuses on developing

and promoting a standard for interoperability that emphasizes security. The

project aims to enable communication across mobile apps, smart home devices and

cloud services, defining a specific set of IP-based networking technologies for

device certification. The goal is not only to improve compatibility but to

ensure that all data is collected and managed safely. Dozens of smart home

manufacturers, chip manufacturers and security experts are participating in the

project. Since security is one of the key pillars of the group’s objectives,

DigiCert was invited to provide security recommendations to help ensure devices

are properly authenticated and communication is handled confidentially.

Has 5G made telecommunications sustainable again?

The state of the personal communications market as we enter 2021 bears

undeniable similarity to that of the PC market (personal computer, if you've

forgotten) in the 1980s. When the era of graphical computing began in earnest,

the major players at that time (e.g., Microsoft, Apple, IBM, Commodore) tried to

leverage the clout they had built up to that point among consumers, to help them

make the transition away from 8-bit command lines and into graphical

environments. Some of those key players tried to leverage more than just their

market positions; they sought to apply technological advantages as well — in one

very notable instance, even if it meant contriving that advantage artificially.

Consumers are always smarter than marketing professionals presume they are. Two

years ago, one carrier in particular (which shall remain nameless, in deference

to folks who complain I tend to jump on AT&T's case) pulled the proverbial

wool in a direction that was supposed to cover consumers' eyes. The "5G+"

campaign divebombed, and as a result, there's no way any carrier can

cosmetically alter the appearance of existing smartphones, to give their users

the feeling of standing on the threshold of a new and forthcoming sea change.

Learn SAML: The Language You Don't Know You're Already Speaking

SAML streamlines the authentication process for signing into SAML-supported

websites and applications, and it's the most popular underlying protocol for

Web-based SSO. An organization has one login page and can configure any Web app,

or service provider (SP), supporting SAML so its users only have to authenticate

once to log into all its Web apps (more on this process later). The protocol has

recently made headlines due to the "Golden SAML" attack vector, which was

leveraged in the SolarWinds security incident. This technique enables the

attacker to gain access to any service or asset that uses the SAML

authentication standard. Its use in the wild underscores the importance of

following best practices for privileged access management. A need for a standard

like SAML emerged in the late 1990s with the proliferation of merchant websites,

says Thomas Hardjono, CTO of Connection Science and Engineering at the

Massachusetts Institute of Technology and chair of OASIS Security Services,

where the SAML protocol was developed. Each merchant wanted to own the

authentication of each customer, which led to the issue of people maintaining

usernames and passwords for dozens of accounts.
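
As a rough illustration of what a service provider receives after the single login, the sketch below reads the issuer, subject, and audience out of a heavily trimmed SAML 2.0 response. The sample XML, the `example.com` URLs, and the `read_assertion` helper are hypothetical. A real response carries more attributes and a signature, and checking that signature is essential; this sketch skips it entirely.

```python
import xml.etree.ElementTree as ET

# Namespace URIs defined by the SAML 2.0 specification.
NS = {
    "samlp": "urn:oasis:names:tc:SAML:2.0:protocol",
    "saml": "urn:oasis:names:tc:SAML:2.0:assertion",
}

# Hypothetical, trimmed-down response (no signature, IDs, or timestamps).
SAMPLE_RESPONSE = """\
<samlp:Response xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol"
                xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion">
  <saml:Assertion>
    <saml:Issuer>https://idp.example.com</saml:Issuer>
    <saml:Subject>
      <saml:NameID>alice@example.com</saml:NameID>
    </saml:Subject>
    <saml:Conditions>
      <saml:AudienceRestriction>
        <saml:Audience>https://shop.example.com/sp</saml:Audience>
      </saml:AudienceRestriction>
    </saml:Conditions>
  </saml:Assertion>
</samlp:Response>
"""


def read_assertion(xml_text):
    """Extract who vouches (issuer), for whom (subject), and to whom (audience)."""
    root = ET.fromstring(xml_text)
    assertion = root.find("saml:Assertion", NS)
    return {
        "issuer": assertion.findtext("saml:Issuer", namespaces=NS),
        "subject": assertion.findtext("saml:Subject/saml:NameID", namespaces=NS),
        "audience": assertion.findtext(".//saml:Audience", namespaces=NS),
    }


print(read_assertion(SAMPLE_RESPONSE))
```

The service provider trusts these three fields only because the identity provider signed them, which is why a forged signing key, as in the Golden SAML technique, is so damaging.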

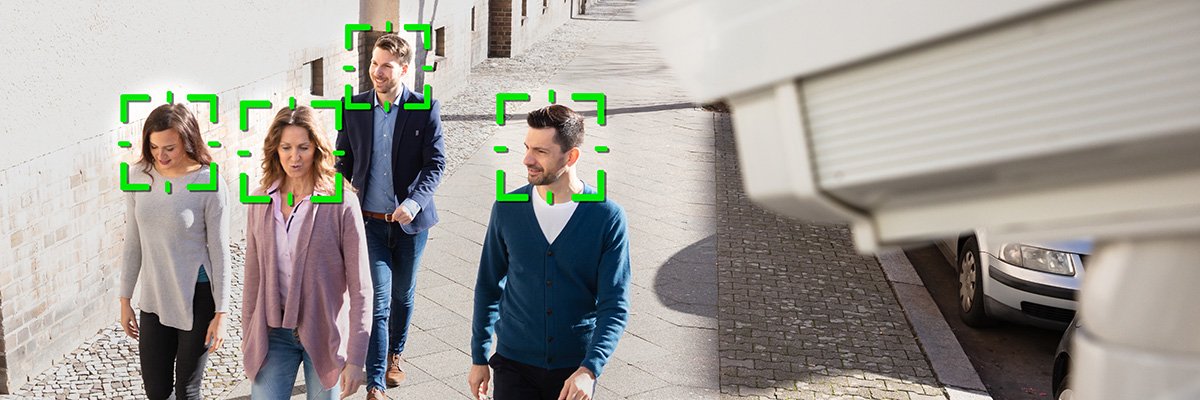

Biometrics ethics group addresses public-private use of facial recognition

“To maintain public confidence, the BFEG recommends that oversight mechanisms

should be put in place,” it said. “The BFEG suggests that an independent ethics

group should be tasked to oversee individual deployments of biometric

recognition technologies by the police and the use of biometric recognition

technologies in public-private collaborations (P-PCs). “This independent ethics

group would require that any proposed deployments and P-PCs are reviewed when

they are established and monitored at regular intervals during their operation.”

Other recommendations included that police should only be able to share data

with “trustworthy private organisations”, specific members of which should also

be thoroughly vetted; that data should only be shared with, or accessible to,

the absolute minimum number of people; and that arrangements should be made for

the safe and secure sharing and storage of biometric data. The BFEG’s note also

made clear that any public-private collaborations must be able to demonstrate

that they are necessary, and that the data sharing between the organisations is

proportionate.

Security Threats to Machine Learning Systems

The collection of good and relevant data is a very important task. For the

development of a real-world application, data is collected from various sources.

This is where an attacker can insert fraudulent and inaccurate data, thus

compromising the machine learning system. So, even before a model has been

created, an attacker can compromise the whole system by inserting a very large chunk of

fraudulent data; this is a stealthy channel attack. This is the

reason why the data collectors should be very diligent while collecting the data

for machine learning systems. ... Data poisoning directly affects two important

aspects of data: data confidentiality and data trustworthiness. Often the

data used for training a system might contain confidential and sensitive

information. Through a poisoning attack, the confidentiality of the data is lost.

Maintaining the confidentiality of data is often regarded as a challenging area

of study by itself; the additional aspect of machine learning makes the task of

securing the confidentiality of the data that much more important.

Another important aspect affected by data poisoning is data trustworthiness.
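
A toy example can show how injected records undermine trustworthiness. The sketch below trains a one-dimensional nearest-centroid classifier, then retrains it after an attacker adds mislabeled points during collection. The classifier, the data values, and the function names are all made up for illustration and are not drawn from the article.

```python
def train_centroids(samples):
    """Compute the mean feature value per label from (value, label) pairs."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


# Hypothetical clean data: label 0 clusters near 2, label 1 near 8.
clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Hypothetical poison: high values deliberately labeled 0.
poison = [(10.0, 0)] * 6

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

print(predict(clean_model, 6.0))     # prints 1
print(predict(poisoned_model, 6.0))  # prints 0
```

The poisoned records drag the label-0 centroid from 2.0 to about 7.3, so the same input is now classified differently even though the training code never changed.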

Fuzzing (fuzz testing) tutorial: What it is and how can it improve application security?

We know when a programmer is developing code, they have different computations

depending upon what the user gives them. So here the program is the maze and

then we have, let's just pretend, a little robot up here and input to the

program is going to be directions for our robot through the maze. So for

example, we can give the robot the directions, I'm going to write it up here,

down, left, down, right. And he's going to take two rights, just meaning he's

going to go to the right twice. And then he's going to go down a bunch of

times. So you can think about giving our little robot this input and robot is

going to take that as directions and he's going to take this path through the

program. He's going to go down, left, down first right, second right, then a

bunch of downs. And when you look at this, we had a little bug here. They can

verify that this is actually okay. There's no actual bug here. And this is

what's happening when a developer writes a unit test. So what they're doing is

they're coming up with an input and they're making sure that it gets the right

output. Now, a problem is, if you think about this maze, we've only checked

one path through this maze and there's other potential lurking bugs out there.
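
The maze analogy can be sketched in a few lines. Below, `walk` plays the program, a direction string plays the input, and a cell marked `B` stands in for a lurking bug. The grid layout, the function names, and the purely random input generator are assumptions made for illustration; real fuzzers use far smarter input generation.

```python
import random

# Hypothetical 3x3 maze: S = start, E = exit, # = wall, B = hidden bug.
MAZE = ["S.#", ".B#", "..E"]
MOVES = {"U": (-1, 0), "D": (1, 0), "L": (0, -1), "R": (0, 1)}


def walk(directions):
    """Follow directions from the start cell; crash if the bug cell is hit."""
    row, col = 0, 0
    for step in directions:
        d_row, d_col = MOVES[step]
        new_row, new_col = row + d_row, col + d_col
        if not (0 <= new_row < 3 and 0 <= new_col < 3):
            continue  # walking off the grid is ignored
        if MAZE[new_row][new_col] == "#":
            continue  # walls block movement
        row, col = new_row, new_col
        if MAZE[row][col] == "B":
            raise RuntimeError(f"bug reached at {(row, col)}")
    return row, col


def fuzz(trials=1000, seed=0):
    """Try random direction strings; return the first one that crashes."""
    rng = random.Random(seed)
    for _ in range(trials):
        length = rng.randint(1, 6)
        candidate = "".join(rng.choice("UDLR") for _ in range(length))
        try:
            walk(candidate)
        except RuntimeError:
            return candidate
    return None


# The unit test: one hand-picked path, one expected result.
assert walk("DDRR") == (2, 2)

# The fuzzer: many paths nobody thought to write down.
print("crashing input:", fuzz())
```

The unit test passes because its single path never touches the bug cell, while the random search stumbles onto a path that does.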

The three steps for smart cities to unlock their full IoT potential

In theory, if a city applied uniform standards across all of its IoT-connected

devices, it could achieve full interoperability. Nevertheless, we believe that

cities and regulators should focus on defining common communication standards to

support technical interoperability. The reason: Although different versions

exist, communications standards are generally mature and widely used by IoT

players. In contrast, the standards that apply to messaging and data formats—and

are needed for syntactic interoperability—are less mature, and semantic

standards remain in the early stages of development and are highly fragmented.

Some messaging and data format standards are starting to gain broad acceptance,

and it shouldn’t be long before policymakers can prudently adopt the leading

ones. With that scenario in mind, planners should ignore semantic standards

until clear favorites emerge. Building a platform that works across use cases

can improve interoperability. The platform effectively acts as an orchestrator,

translating interactions between devices so that they can share data and work.

In a city context, a cross-vertical platform offers significant benefits over

standardization.

Quote for the day:

"Education makes a people difficult to

drive, but easy to lead; impossible to enslave, but easy to govern." --

Lord Brougham