How eBPF will solve Service Mesh - Goodbye Sidecars

Why have we not created a service mesh in the kernel before? Some people have

been semi-jokingly stating that kube-proxy is the original service mesh. There

is some truth to that. Kube-proxy is a good example of how close the Linux

kernel can get to implementing a service mesh while relying on traditional

network-based functionality implemented with iptables. However, it is not

enough: the L7 context is missing. Kube-proxy operates exclusively on the

network packet level. L7 traffic management, tracing, authentication, and

additional reliability guarantees are required for modern applications.

Kube-proxy cannot provide this at the network level. eBPF changes this equation.

It lets us dynamically extend the functionality of the Linux kernel. We have

been using eBPF for Cilium to build a highly efficient network, security, and

observability datapath that embeds itself directly into the Linux kernel.

Applying this same concept, we can solve service mesh requirements at the kernel

level as well. In fact, Cilium already implements a variety of the required

concepts such as identity-based security, L3-L7 observability &

authorization, encryption, and load-balancing.

General and Scalable Parallelization for Neural Networks

Because different model architectures may be better suited to different

parallelization strategies, GSPMD is designed to support a large variety of

parallelism algorithms appropriate for different use cases. For example, with

smaller models that fit within the memory of a single accelerator, data

parallelism is preferred, in which devices train the same model using different

input data. In contrast, models that are larger than a single accelerator’s

memory capacity are better suited for a pipelining algorithm (like that employed

by GPipe) that partitions the model into multiple, sequential stages, or

operator-level parallelism (e.g., Mesh-TensorFlow), in which individual

computation operators in the model are split into smaller, parallel operators.

GSPMD supports all the above parallelization algorithms with a uniform

abstraction and implementation. Moreover, GSPMD supports nested patterns of

parallelism. For example, it can be used to partition models into individual

pipeline stages, each of which can be further partitioned using operator-level

parallelism.
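
As a rough illustration of two of the strategies named above, the toy sketch below contrasts data parallelism (split the input batch, keep the full weights on every device) with operator-level parallelism (keep the full batch, split one operator's weights). It does not use GSPMD or any accelerator library: the "devices" are plain Python lists, and the function names `data_parallel` and `operator_parallel` are hypothetical names chosen for this example.

```python
# Toy illustration only: "devices" are simulated with plain Python lists.

def matmul(x, w):
    """Multiply a (rows x k) matrix by a (k x cols) matrix, both lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]

def data_parallel(x, w, num_devices):
    """Each device holds the full weights and processes one slice of the batch."""
    chunk = (len(x) + num_devices - 1) // num_devices
    shards = [x[i:i + chunk] for i in range(0, len(x), chunk)]
    partial = [matmul(shard, w) for shard in shards]  # one matmul per device
    return [row for part in partial for row in part]  # concatenate along the batch

def operator_parallel(x, w, num_devices):
    """Each device sees the full batch but holds only some columns of the weights."""
    cols = len(w[0])
    chunk = (cols + num_devices - 1) // num_devices
    w_shards = [[row[j:j + chunk] for row in w] for j in range(0, cols, chunk)]
    partial = [matmul(x, w_shard) for w_shard in w_shards]  # one matmul per device
    # Stitch the column blocks back together, row by row.
    return [[v for part in partial for v in part[r]] for r in range(len(x))]

x = [[1, 2], [3, 4], [5, 6], [7, 8]]  # batch of 4 examples, 2 features each
w = [[1, 0, 2, 1], [0, 1, 1, 3]]      # 2 x 4 weight matrix
reference = matmul(x, w)              # single-device result

assert data_parallel(x, w, 2) == reference
assert operator_parallel(x, w, 2) == reference
```

Both partitionings reproduce the single-device result; they differ only in which tensor is split. The nested patterns mentioned above would correspond to applying one split inside another.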

What 2022 can hold for the developer experience

To improve the developer experience, and ultimately retain and attract talent,

businesses should begin to make changes to reduce the strain placed on

developers and help them achieve a healthier work-life balance. The introduction

of fairly simple initiatives such as flexi-time and offering mental health days

can help to reduce the risk of burnout and show developers that they are valued

members of the business whose needs are being listened to. Additionally,

organisations could look to provide extra resources and adopt the tools and

technology to enable developers to automate parts of their workload. Solutions

such as data platforms that make use of machine learning (ML) are a prime

example of this. The use of this type of technology would enable developers to

easily add automation and predictions to applications without them needing to be

experts in ML. Adopting technologies that embed ML capabilities can also help to

simplify the process of building, testing, and deploying ML models and speed up

the process of integrating them into production applications.

2022 Cybersecurity Risk Mitigation Roadmap For CISO & CIO As Business Drivers

As companies become aware of the need for data protection, their leaders are

likely to increase the adoption of encryption, which will find its way into

organizations’ basic cyber security architecture in 2022. This will have a

ripple effect, and we can expect newer and updated applications providing data

encryption solutions to be launched for businesses in the coming year. One of

the most disruptive technologies in decades, blockchain technology will be at

the heart of shifting from a centralized server-based internet system to

transparent cryptographic networks. AI has matured from an experimental topic to

mainstream technology. As a result, 2022 will see better accessibility of

Artificial Intelligence (AI) based tools for creating robust cybersecurity

protocols within an organization. In addition, we expect the new lineup of

technology tools to be both cheaper and more effective than ever

before. Last but not least, 2022 will see a mix of remote work and on-site

physical presence, thereby continuing the cybersecurity trends adopted

during 2021.

Intel reports new computing breakthroughs as it pursues Moore’s Law

Intel made the announcement at the IEEE International Electron Devices

Meeting (IEDM) 2021. In the press release, Intel talked at length about its

three areas of pathfinding and the breakthroughs that prove it’s on track to

continue following its roadmap through 2025 and beyond. The company is

focusing on several areas of research and reports significant progress in

essential scaling technologies that will help it deliver more transistors in

its future products. Intel’s engineers have been working on solutions to

increase the interconnect density in chip packaging by at least 10 times.

Intel also mentioned that in July 2021, at the Intel Accelerated event, it

announced plans to introduce Foveros Direct. This will provide an order of magnitude

increase in the interconnect density for 3D stacking through enabling sub-10

micron bump pitches. The tech giant is calling for other manufacturers to

work together in order to establish new industry standards and testing

procedures, allowing for the creation of a new hybrid bonding chiplet

ecosystem.

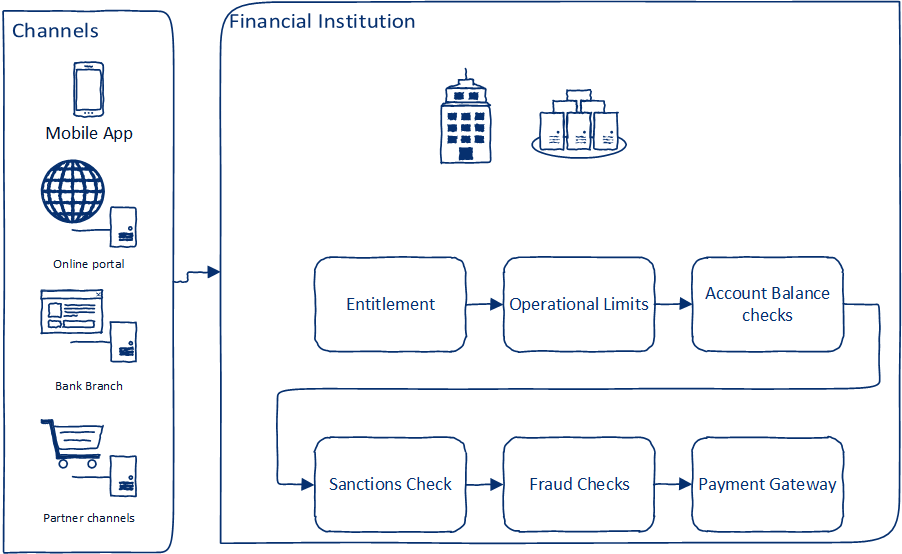

Designing High-Volume Systems Using Event-Driven Architectures

Thanks to the latest developments in Event-Driven Architecture (EDA)

platforms such as Kafka and data management techniques such as Data Meshes

and Data Fabrics, designing microservices-based applications is now much

easier. However, to ensure these microservices-based applications perform at

requisite levels, it is important to ensure critical Non-Functional

Requirements (NFRs) are taken into consideration during the design time

itself. In a series of blog articles, my colleagues Tanmay Ambre and Harish

Bharti and I attempt to describe a cohesive approach to

Design for NFR. We take a use-case-based approach. In the first installment,

we describe designing for “performance” as the first critical NFR. This

article focuses on architectural and design decisions that are the basis of

high-volume, low-latency processing. To make these decisions clear and easy

to understand, we describe their application to a high-level use case of

funds transfer. We have simplified the use case to focus mainly on

performance.

How Hoppscotch is building an open source ‘API development ecosystem’

The Hoppscotch platform constitutes multiple integrated API development tools,

aimed at engineers, software developers, quality assurance (QA) testers, and

product managers. It includes a web client, which is pitched as an “online

collaborative API playground,” enabling multiple developers or teams to build,

test, and share APIs. A separate command line interface (CLI) tool, meanwhile,

is designed for integrating automated test runners as part of CI/CD pipelines.

And then there is the API documentation generator, which helps developers

create, publish, and maintain all the necessary API documentation in real

time. Hoppscotch for teams, which is currently in public beta, allows

companies to create individual groups for specific use-cases. For example, a

company can create a team for its entire in-house workforce, where anyone can share

APIs and related communications with anyone else. It can also create smaller

groups for specific teams, such as QA testers, or for external vendors and

partners where sensitive data needs to be kept separate from specific projects

they are involved in.

Synthetic Quantum Systems Help Solve Complex Real-World Applications

Quantum Simulation is the most promising use of Pasqal’s QPU, in which the

quantum processor is utilized to obtain knowledge about a quantum system of

interest. It seems reasonable to employ a quantum system as a computational

resource for quantum problems, as Richard Feynman pointed out in the 20th

century. Neutral atom quantum processors will aid pure scientific discovery,

and there are several sectors of application at the industrial level, such as

the creation of novel materials for energy storage and transport, or chemical

computations for drug development. “At Pasqal, we are not only scientists, we

are not only academic, we industrialize our technology. By working with

quantum technology, we want to build and sell a product which is reliable, and

which helps to solve complex industrial problems in many contexts,” said

Reymond. Among Pasqal’s customers is EDF, the French electricity utility. In

the energy sector, Pasqal is working with EDF to develop innovative solutions

for smart mobility.

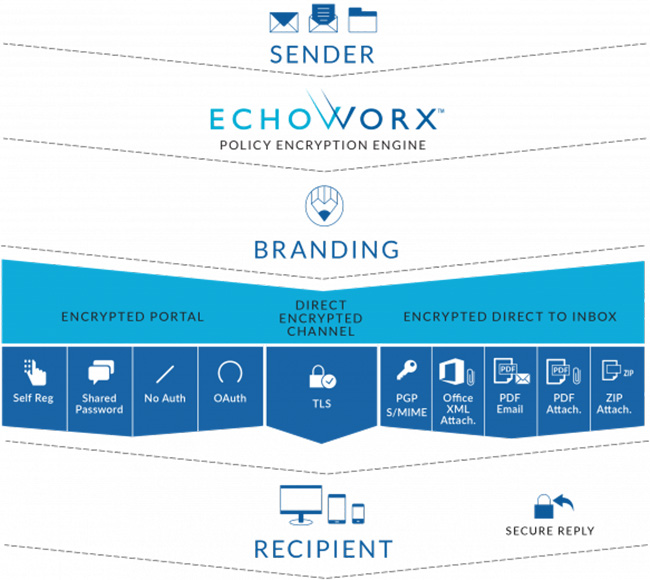

Enterprise email encryption without friction? Yes, it’s possible

It is often (grudgingly) acknowledged in security circles that sometimes

security must be partly sacrificed for better usability. But with Echoworx you

can have the best of both worlds: a seamless, secure experience for

organizations, their partners, vendors, and customers. “Our customers are

mostly very large global enterprises in the finance, insurance, manufacturing,

retail, and several other verticals,” says Derek Christiansen, Echoworx’s

Engagement Manager. “When working with them, we must be sensitive to their

needs. We do this by offering like-for-like encryption when we can, and by

tailoring the integration of encryption to their existing flows.” ... The only

thing that the customer needs to do is direct any email that needs encryption

to the company’s infrastructure. “They can use their own data classification.

That can be something as simple as an Office 365 rule. It’s also common to use

a keyword (e.g., the word ‘secure’) in the email subject to route the message.

We also have an optional plugin for Outlook that makes it really easy for

senders,” Christiansen notes.
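
The subject-keyword routing Christiansen describes can be sketched in a few lines. This is a minimal illustration and not Echoworx's implementation or API: the trigger word `secure` comes from the quote above, while the function name `route` and the destination labels `encryption-gateway` and `normal-delivery` are hypothetical.

```python
import re

# Assumption: the trigger is the whole word "secure", matched case-insensitively.
# The source only gives this keyword as an example of a routing rule.
TRIGGER = re.compile(r"\bsecure\b", re.IGNORECASE)

def route(subject):
    """Return a hypothetical destination label based on the email subject."""
    if TRIGGER.search(subject):
        return "encryption-gateway"  # hand the message to the encryption service
    return "normal-delivery"         # leave the message on the usual mail path

assert route("[SECURE] Q4 statement") == "encryption-gateway"
assert route("Lunch on Friday?") == "normal-delivery"
```

Matching on word boundaries keeps a subject such as "Insecure Wi-Fi notice" from triggering encryption by accident. A real deployment would more likely express this as a mail-flow rule than as application code.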

7 Critical Machine Intelligence Exams and The Hidden Link of MLOps with Product Management

The past 12 months have seen many machine learning operations tools gain

prominence. Interestingly, one feature is notably absent or rarely

mentioned in the discussion: quality assurance. Academia has already initiated

research in machine learning system testing. In addition, several vendors

provide data quality support or leverage data testing libraries or data

quality frameworks. Automated deployment does exist as well in many tools. But

how about canary deployments of models and whatever happened with unit and

integration testing in the machine learning universe? Many of these quality

assurance proposals originate from an engineering mindset. However, more and

more specialists without an engineering background perform a lot of model

engineering. Further, recall that a separate person or team frequently runs

the quality assurance activities, supposedly so that engineers can place their

trust in others to catch mistakes. More cynical characters might insist that

engineers need to be controlled and checked.
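
To make the canary-deployment question concrete, here is a minimal sketch of one common way to do it: send a small, deterministic share of requests to a candidate model and the rest to the stable one. It is an assumption-laden illustration and is not taken from the article or from any particular MLOps tool; `pick_model` and the labels `candidate` and `stable` are hypothetical names.

```python
import hashlib

def pick_model(request_id, canary_percent):
    """Route a request to 'candidate' or 'stable' based on a hash of its ID.

    Hashing keeps the decision deterministic, so the same request ID always
    reaches the same model while the canary share stays fixed.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "candidate" if bucket < canary_percent else "stable"

# 0% sends nothing to the candidate; 100% sends everything.
assert pick_model("user-42", 0) == "stable"
assert pick_model("user-42", 100) == "candidate"
# The same ID is always routed the same way.
assert pick_model("user-42", 10) == pick_model("user-42", 10)
```

The quality-assurance step is then to compare error rates or prediction quality between the two groups before raising `canary_percent`.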

Quote for the day:

"Leaders dig into their business to learn painful realities rather than peaceful illusion." -- Orrin Woodward
