Quote for the day:

"Small daily improvements over time lead to stunning results." -- Robin Sharma

When it comes to AI, bigger isn’t always better

Developers were already warming to small language models (SLMs), but most of the
discussion has focused on technical or security advantages. In reality, for many
enterprise use cases, smaller, domain-specific models often deliver faster, more
relevant results than general-purpose LLMs. Why? Because most business problems
are narrow by nature. You don’t need a model that has read T. S. Eliot or that can
plan your next holiday. You need a model that understands your lead times,
logistics constraints, and supplier risk. ... Just like in e-commerce or IT
architecture, organizations are increasingly finding success with best-of-breed
strategies, using the right tool for the right job and connecting them through
orchestrated workflows. I contend that AI follows a similar path, moving from
proof-of-concept to practical value by embracing this modular, integrated
approach. Plus, SLMs aren’t just cheaper than larger models; they can also
outperform them. ... The strongest case for the future of generative AI? Focused
small language models, continuously enriched by a living knowledge graph. Yes,
SLMs are still early-stage. The tools are immature, infrastructure is catching
up, and they don’t yet offer the plug-and-play simplicity of something like an
OpenAI API. But momentum is building, particularly in regulated sectors like law
enforcement where vendors with deep domain expertise are already driving
meaningful automation with SLMs.

Building Sovereign Data-Centre Infrastructure in India

Beyond regulatory drivers, domestic data centre capacity delivers critical
performance and compliance advantages. Locating infrastructure closer to users
through edge or regional facilities has delivered substantial
performance gains, with studies demonstrating latency reductions of more than
80 percent compared to centralised cloud models. This proximity directly
translates into higher service quality, enabling faster digital payments,
smoother video streaming, and more reliable enterprise cloud applications.
Local hosting also strengthens resilience by reducing dependence on
centralised infrastructure, and it simplifies compliance: obligations such as
rapid incident reporting under Section 70B of the Information Technology
(Amendment) Act, 2008, are easier to fulfil when infrastructure is
located within the country. ... India’s data centre expansion is constrained
by key challenges in permitting, power availability, water and cooling,
equipment procurement, and skilled labour. Each of these bottlenecks has
policy levers that can reduce risk, lower costs, and accelerate delivery. ...
AI-heavy workloads are driving rack power densities to nearly three times
those of traditional applications, sharply increasing cooling demand. This
growth coincides with acute groundwater stress in many Indian cities, where
freshwater use for industrial cooling is already constrained.

How AI is helping one lawyer get kids out of jail faster

Anderson said his use of AI saves up to 94% of evidence review time for his
juvenile clients aged 12 to 18. Anderson can now prepare for a bail hearing in half
an hour versus days. The time saved by using AI also translates into thousands of
dollars in savings. While the tools for AI-based video analysis are many,
Anderson uses Rev, a legal-tech AI tool that transcribes and indexes video
evidence to quickly turn overwhelming footage into accurate, searchable
information. ... “The biggest ROI is in critical, time-sensitive situations,
like a bail hearing. If a DA sends me three hours of video right after my client
is arrested, I can upload it to Rev and be ready to make a bail argument in half
an hour. This could be the difference between my client being held in custody
for a week versus getting them out that very day. The time I save allows me to
focus on what I need to do to win a case, like coming up with a persuasive
argument or doing research.” ... “We are absolutely at an inflection point. I
believe AI is leveling the playing field for solo and small practices. In the
past, all of the time-consuming tasks of preparing for trial, like transcribing
and editing video, were done manually. Rev has made it so easy to do on the fly,
by myself, that I don’t have to anticipate where an officer will stray in their
testimony. I can just react in real time. This technology empowers a small
practice to have the same capabilities as a large one, allowing me to focus on
the work that matters most.”

AI-powered Pentesting Tool ‘Villager’ Combines Kali Linux Tools with DeepSeek AI for Automated Attacks

The emergence of Villager represents a significant shift in the cybersecurity
landscape, with researchers warning it could follow the path of Cobalt
Strike, transforming from a legitimate red-team tool into a weapon of choice for
malicious threat actors. Unlike traditional penetration testing frameworks that
rely on scripted playbooks, Villager utilizes natural language processing to
convert plain text commands into dynamic, AI-driven attack sequences. Villager
operates as a Model Context Protocol (MCP) client, implementing a sophisticated
distributed architecture that includes multiple service components designed for
maximum automation and minimal detection. ... This tool’s most alarming feature
is its ability to evade forensic detection. Containers are configured with a
24-hour self-destruct mechanism that automatically wipes activity logs and
evidence, while randomized SSH ports make detection and forensic analysis
significantly more challenging. This transient nature of attack containers,
combined with AI-driven orchestration, creates substantial obstacles for
incident response teams attempting to track malicious activity. ... Villager’s
task-based command and control architecture enables complex, multi-stage attacks
through its FastAPI interface operating on port 37695.

The emergence of Villager represents a significant shift in the cybersecurity

landscape, with researchers warning it could follow the malicious use of Cobalt

Strike, transforming from a legitimate red-team tool into a weapon of choice for

malicious threat actors. Unlike traditional penetration testing frameworks that

rely on scripted playbooks, Villager utilizes natural language processing to

convert plain text commands into dynamic, AI-driven attack sequences. Villager

operates as a Model Context Protocol (MCP) client, implementing a sophisticated

distributed architecture that includes multiple service components designed for

maximum automation and minimal detection. ... This tool’s most alarming feature

is its ability to evade forensic detection. Containers are configured with a

24-hour self-destruct mechanism that automatically wipes activity logs and

evidence, while randomized SSH ports make detection and forensic analysis

significantly more challenging. This transient nature of attack containers,

combined with AI-driven orchestration, creates substantial obstacles for

incident response teams attempting to track malicious activity. ... Villager’s

task-based command and control architecture enables complex, multi-stage attacks

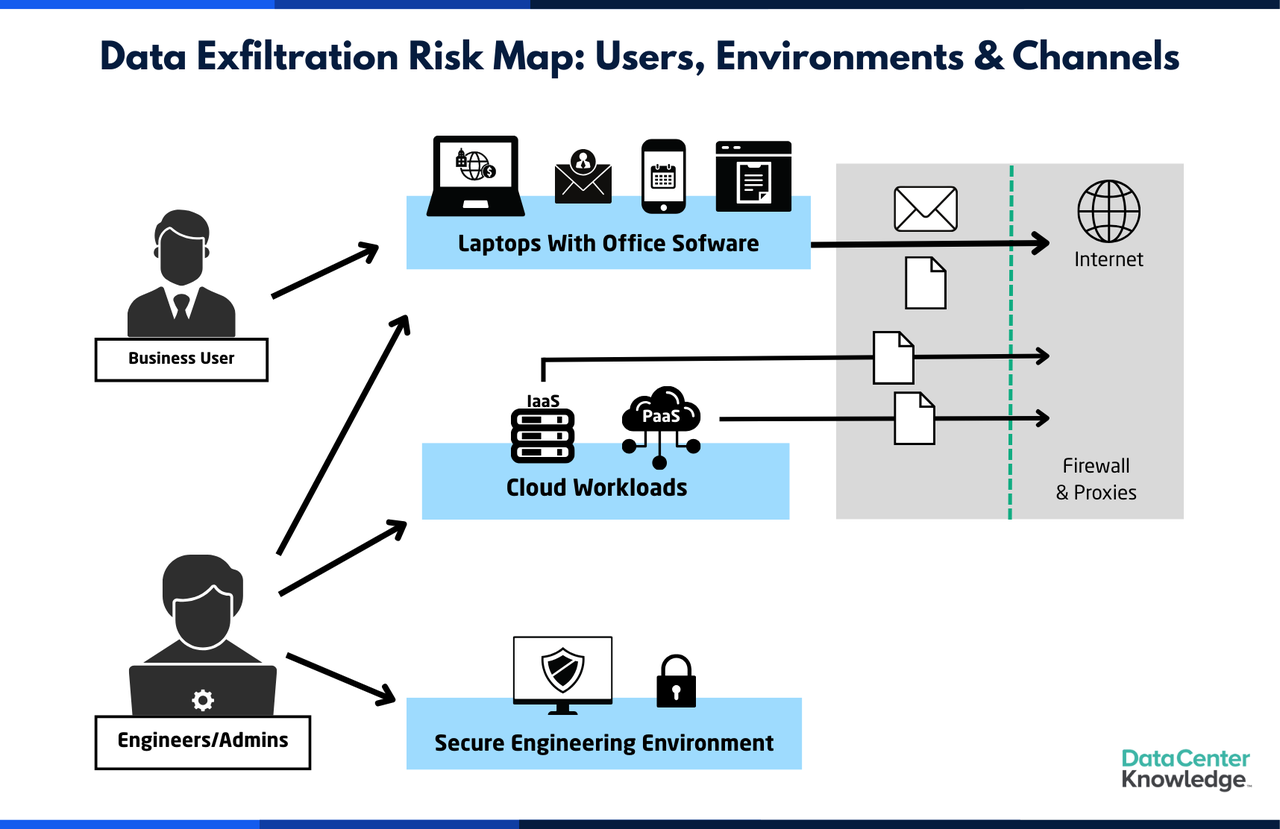

through its FastAPI interface operating on port 37695.Cloud DLP Playbook: Stopping Data Leaks Before They Happen

To get started on a cloud DLP strategy, organizations must answer two key
questions: Which users should be included in the scope? And which communication
channels should the DLP system cover? Addressing these questions can help
organizations create a well-defined and actionable cloud DLP strategy that
aligns with their broader security and compliance objectives. ... Unlike
business users, engineers and administrators require elevated access and
permissions to perform their jobs effectively. While they might operate under
some of the same technical restrictions, they often have additional capabilities
to exfiltrate files. ... While DLP tools serve as the critical last line of
defense against active data exfiltration attempts, organizations should not rely
only on these tools to prevent data breaches. Reducing the amount of sensitive
data circulating within the network can significantly lower risks. ... Network
DLP inspects traffic originating from laptops and servers, regardless of whether
it comes from browsers, tools, applications, or command-line operations. It also
monitors traffic from PaaS components and VMs, making it a versatile system for
cloud environments. While network DLP requires all traffic to pass through a
network component, such as a proxy, it is indispensable for monitoring data
transfers originating from VMs and PaaS services.
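
To make the inspection step concrete, here is a minimal Python sketch of the kind of content check a proxy-based network DLP component might run on an outbound payload. Everything in it is an assumption for illustration: the `inspect` function, the two patterns, and the choice of a Luhn checksum to reduce false positives on card-like numbers are not taken from the article or from any particular DLP product.

```python
import re
from typing import List

# Hypothetical detection patterns; real DLP rule sets are far broader.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_LIKE = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def luhn_ok(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def inspect(payload: str) -> List[str]:
    """Return the names of the sensitive-data categories found in payload."""
    findings = []
    if EMAIL.search(payload):
        findings.append("email")
    for match in CARD_LIKE.finditer(payload):
        digits = re.sub(r"\D", "", match.group())
        if luhn_ok(digits):
            findings.append("card_like")
            break
    return findings


if __name__ == "__main__":
    print(inspect("send to alice@example.com, card 4111 1111 1111 1111"))
```

A proxy would call something like `inspect` on each request body and block or log the transfer when the list is non-empty; how that decision is made is a policy choice outside this sketch.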

Weighing the true cost of transformation

“Most costs aren’t IT costs, because digital transformation isn’t an IT
project,” he says. “There’s the cost of cultural change in the people who will
have to adopt the new technologies, and that’s where the greatest corporate
effort is required.” Dimitri also highlights the learning curve costs.
Initially, most people are naturally reluctant to change and inefficient with
new technology. ... “Cultural transformation is the most significant and costly
part of digital transformation because it’s essential to bring the entire
company on board,” Dimitri says. ... Without a structured approach to change,
even the best technological tools fail as resistance manifests itself in subtle
delays, passive defaults, or a silent return to old processes. Change,
therefore, must be guided, communicated, and cultivated. Skipping this step is
one of the costliest mistakes a company can make in terms of unrealized value.
Organizations must also cultivate a mindset that embraces experimentation,
tolerates failure, and values continuous learning. This has its own associated
costs and often requires unlearning entrenched habits and stepping out of
comfort zones. There are other implicit costs to consider, too, like the stress
of learning a new system and the impact on staff morale. If not managed with
empathy, digital transformation can lead to burnout and confusion, so ongoing
support through a hyper-assistance phase is needed, especially during the first
weeks following a major implementation.

5 Costly Customer Data Mistakes Businesses Will Make In 2025

As AI continues to reshape the business technology landscape, one thing remains
unchanged: Customer data is the fuel that fires business engines in the drive
for value and growth. Thanks to a new generation of automation and tools, it
holds the key to personalization, super-charged customer experience, and
next-level efficiency gains. ... In fact, low-quality customer data can actively
degrade the performance of AI by causing “data cascades” where seemingly small
errors are replicated over and over, leading to large errors further along the
pipeline. That isn't the only problem. Storing and processing huge amounts of
data, particularly sensitive customer data, is expensive and time-consuming, and
it can carry onerous regulatory obligations. ... Synthetic customer data
lets businesses test pricing strategies, marketing spend, and product features,
as well as virtual behaviors like shopping cart abandonment, and real-world
behaviors like footfall traffic around stores. Synthetic customer data is far
less expensive to generate and not subject to any of the regulatory and privacy
burdens that come with actual customer data. ... Most businesses are only
scratching the surface of the value their customer data holds. For example,
Nvidia reports that 90 percent of enterprise customer data can’t be tapped for
value. Usually, this is because it’s unstructured, with mountains of data
gathered from call recordings, video footage, social media posts, and many other
sources.

Vibe coding is dead: Agentic swarm coding is the new enterprise moat

“Even Karpathy’s vibe coding term is legacy now. It’s outdated,” Val Bercovici,
chief AI officer of WEKA, told me in a recent conversation. “It’s been
superseded by this concept of agentic swarm coding, where multiple agents in
coordination are delivering… very functional MVPs and version one apps.” And
this comes from Bercovici, who carries some weight: He’s a long-time
infrastructure veteran who served as a CTO at NetApp and was a founding board
member of the Cloud Native Computing Foundation (CNCF), which stewards Kubernetes.
The idea of swarms isn't entirely new: OpenAI's own agent SDK was originally
called Swarm when it was first released as an experimental framework last year.
But the capability of these swarms reached an inflection point this summer. ...
Instead of one AI trying to do everything, agentic swarms assign roles. A
"planner" agent breaks down the task, "coder" agents write the code, and a
"critic" agent reviews the work. This mirrors a human software team and is the
principle behind frameworks like Claude Flow, developed by Toronto-based Reuven
Cohen. Bercovici described it as a system where "tens of instances of Claude
Code in parallel are being orchestrated to work on specifications,
documentation... the full CI/CD DevOps life cycle." This is the engine behind the
agentic swarm, condensing a month of teamwork into a single hour.
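
The planner, coder, and critic division of labour can be sketched in a few lines of Python. This is a toy under loud assumptions: the three functions are hypothetical stand-ins with no model calls behind them, the "and" splitting rule and the compile-based review are invented for illustration, and nothing here reflects how Claude Flow or any real framework is implemented.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Review:
    approved: bool
    notes: str


def planner(task: str) -> List[str]:
    """Hypothetical planner: split a task description into subtasks on ' and '."""
    return [part.strip() for part in task.split(" and ") if part.strip()]


def coder(subtask: str) -> str:
    """Hypothetical coder: emit a stub function named after the subtask."""
    name = "_".join(subtask.lower().split())
    return f"def {name}():\n    raise NotImplementedError\n"


def critic(code: str) -> Review:
    """Hypothetical critic: approve only drafts that are valid Python syntax."""
    try:
        compile(code, "<draft>", "exec")
    except SyntaxError as exc:
        return Review(approved=False, notes=f"syntax error: {exc.msg}")
    return Review(approved=True, notes="compiles")


def run_swarm(task: str) -> List[Tuple[str, str, Review]]:
    """Plan once, run the coder role in parallel, then review every draft."""
    subtasks = planner(task)
    with ThreadPoolExecutor() as pool:
        drafts = list(pool.map(coder, subtasks))  # results keep subtask order
    return [(s, d, critic(d)) for s, d in zip(subtasks, drafts)]


if __name__ == "__main__":
    for subtask, draft, review in run_swarm("parse input and write report"):
        print(subtask, "->", review)
```

In a real swarm each role would wrap a model call and rejected drafts would loop back to a coder; the sketch only shows the role separation and the parallel fan-out.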

The Role of Human-in-the-Loop in AI-Driven Data Management

Human-in-the-loop (HITL) is no longer a niche safety net; it’s becoming a
foundational strategy for operationalizing trust. Especially in healthcare and
financial services, where data-driven decisions must comply with strict
regulations and ethical expectations, keeping humans strategically involved in
the pipeline is the only way to scale intelligence without surrendering
accountability. ... The goal of HITL is not to slow systems down, but to apply
human oversight where it is most impactful. Overuse can create workflow
bottlenecks and increase operational overhead. But underuse can result in
unchecked bias, regulatory breaches, or loss of public trust. Leading
organizations are moving toward risk-based HITL frameworks that calibrate
oversight based on the sensitivity of the data and the consequences of error.
... As AI systems become more agentic (capable of taking actions, not just making
predictions), the role of human judgment becomes even more critical. HITL
strategies must evolve beyond spot-checks or approvals. They need to be embedded
in design, monitored continuously, and measured for efficacy. For data and
compliance leaders, HITL isn’t a step backward from digital transformation. It
provides a scalable approach to ensure that AI is deployed
responsibly, especially in sectors where decisions carry long-term consequences.
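
One way to picture a risk-based HITL framework is as a routing rule that maps data sensitivity and error consequences to a level of oversight. The Python sketch below is illustrative only: the 1-to-3 scales, the multiplication into a risk score, the thresholds, and the three oversight tiers are all assumptions, not something the article or any regulation prescribes.

```python
from dataclasses import dataclass
from enum import Enum


class Oversight(Enum):
    AUTO = "auto-approve"
    SPOT_CHECK = "sampled spot-check"
    HUMAN_REVIEW = "human review required"


@dataclass
class Decision:
    data_sensitivity: int  # hypothetical scale: 1 (public) to 3 (regulated)
    error_impact: int      # hypothetical scale: 1 (minor) to 3 (severe)


def route(decision: Decision) -> Oversight:
    """Pick an oversight tier from a simple sensitivity x impact score."""
    risk = decision.data_sensitivity * decision.error_impact  # 1 to 9
    if risk >= 6:
        return Oversight.HUMAN_REVIEW
    if risk >= 3:
        return Oversight.SPOT_CHECK
    return Oversight.AUTO


if __name__ == "__main__":
    print(route(Decision(data_sensitivity=1, error_impact=1)))
    print(route(Decision(data_sensitivity=3, error_impact=3)))
```

Keeping the rule this explicit is a design choice: thresholds that live in one small function are easy to audit and to tune when oversight turns out to be a bottleneck or a gap.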

AI vs Gen Z: How AI has changed the career pathway for junior developers

Ethical dilemmas aside, an overreliance on AI obviously causes an atrophy of
skills for young thinkers. Why spend time reading your textbooks when you can
get the answers right away? Why bother working through a particularly difficult
homework problem when you can just dump it into an AI to give you the answer?
Forming the critical thinking skills necessary for not just a fruitful career, but
a happy life, must include some of the discomfort that comes from not knowing.
AI tools eliminate the discovery phase of learning, that precious, priceless part
where you root around blindly until you finally understand. ... The truth is
that AI has made much of what junior developers of the past did redundant. Gone
are the days of needing junior developers to manually write code or debug,
because now an already tenured developer can just ask their AI assistant to do
it. There’s even some sentiment that AI has made junior developers less
competent, and that they’ve lost some of the foundational skills that make for a
successful entry-level employee. See above section on AI in school if you need a
refresher on why this might be happening. ... More optimistic outlooks on the AI
job market see this disruption as an opportunity for early career professionals
to evolve their skillsets to better fit an AI-driven world. If I believe in
nothing else, I believe in my generation’s ability to adapt, especially to
technology.
