Some of the key measures Nasscom has asked the government to take to help startups include a rental subsidy for startups using workspaces that are regulated, owned, or managed by government agencies, and a blanket suspension of all deadlines, including tax payment and filing deadlines, until at least four weeks after all city lockdowns are lifted. The industry body said that the pandemic has created a significant liquidity crunch for the sector and that, to ensure employees' salaries are paid on time, banks may voluntarily provide an overdraft facility or interest-free, equity-convertible funding to startups. Nasscom has also asked for a one-time provident fund (PF) opt-out option for employees. "The Government can consider providing an option to the employees for a onetime PF opt-out option for the next financial year 2020-21. In such a case, both the employee and employer's contributions towards the PF may be transferred directly to the employee. This will result in an increase in the take-home pay of the employees," said Nasscom in the representation made to the government.

Reference Architecture for Healthcare – Introduction and Principles

The good news is that information technology can solve problems of fragmentation through smart process management and the exchange of standardized information, to name a few approaches.

A Blueprint for the Healthcare Industry: The aim must be to help organizations provide health services with better outcomes, lower costs, and an improved patient and staff experience. We need a toolbox that is flexible, adaptable to individual needs, and able to serve a network of partners that team up to deliver care.

The Patient Perspective: As a patient with a chronic disease, I monitor my health condition daily. I manage my medication with the help of my devices and adjust my lifestyle accordingly. My care providers should work with me to manage my disease.

The Health Professional Perspective: As a healthcare professional, I need to team up with others to coordinate the delivery of care. I create, use, and share information with other care providers within a given episode of care and across different treatment periods.

The Architect and Planner Perspective: As a user of the reference architecture, I need an easy-to-use toolbox that is readily available and helps me in my daily work. It needs to align with the regulations of our industry.

Maybe the biggest challenge we face as a society is our ability to unlearn – to let go of – outdated concepts and beliefs in order to adopt new approaches. Our everyday lives are dominated by outdated concepts: change the oil every 3,000 miles, don’t wear white before Memorial Day, only senior management has the best ideas, don’t eat dessert until you’ve cleaned your plate, trade wars are easy to win, leeches work wonders on headaches, etc. Well, I’m going to throw down the gauntlet and challenge everyone to open their minds to the possibility of new ideas and new learning. That does not mean you should believe blindly; instead, you should invest the time to study, unlearn, and learn new approaches and concepts. “You can’t climb a ladder if you’re not willing to let go of the rung below you.” In my new role as Chief Innovation Officer at Hitachi Vantara, I see leveraging ideation and innovation to derive and drive new sources of customer, product, and operational value as more important than ever. So, Hitachi Vantara employees and customers, be prepared to change your frames and to challenge conventional thinking about how we blend new concepts (AI/ML, big data, IoT) with tried-and-true ideas (economics, design thinking) to create new sources of value.

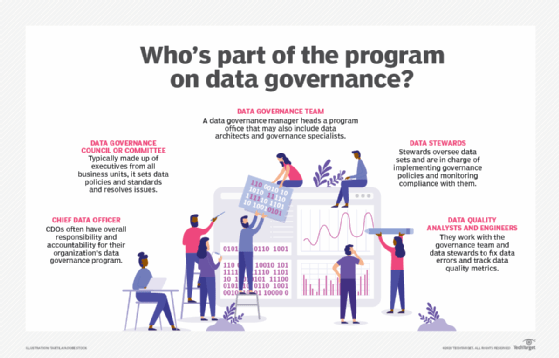

How data governance and data management work together

Although data governance provides a framework of controls for effective data management, it is just one component of the overall practice. Dan Everett, VP of product and solution marketing at Informatica, accurately described the relationship between data management and governance in a blog post. He said that data governance must be implemented to be effective, and that data management is what facilitates the enforcement of its policies. Business size often determines how data governance and data management responsibilities are organized and assigned. But size shouldn't be a determining factor in treating data as an enterprise asset, establishing effective data governance policies, and performing high-quality data management. ... The initial data governance policies and data management procedures will most likely have gaps that lead to data quality issues. In addition, the work of ensuring that enterprise data is correct and used properly throughout the organization is fluid by nature. In other words, "things change." Data usage is highly dynamic, and data governance controls and data management procedures may not always provide the guidance and best practices needed to guarantee data quality across all data stores.
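To make this division of labor concrete, here is a minimal Python sketch. It assumes that governance policies can be expressed as simple per-record rules. The `Policy` class, the `POLICIES` list, the field names `customer_id` and `email`, and the `enforce` function are hypothetical illustrations, not Informatica's or any product's API. Governance declares the rules, and data management code applies them and reports violations.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    """Hypothetical governance rule: a name plus a check every record must pass."""
    name: str
    check: Callable[[dict], bool]


# Governance side: declare what "good data" means (example rules only).
POLICIES = [
    Policy("customer_id is present", lambda r: bool(r.get("customer_id"))),
    Policy("email contains '@'", lambda r: "@" in r.get("email", "")),
]


def enforce(records: list[dict], policies: list[Policy]) -> list[tuple[int, str]]:
    """Management side: apply each policy to each record and collect violations."""
    violations = []
    for index, record in enumerate(records):
        for policy in policies:
            if not policy.check(record):
                violations.append((index, policy.name))
    return violations


records = [
    {"customer_id": "C1", "email": "ana@example.com"},
    {"customer_id": "", "email": "not-an-email"},
]
print(enforce(records, POLICIES))
# [(1, 'customer_id is present'), (1, "email contains '@'")]
```

Keeping the policies as data, rather than hard-coding the checks, makes it easier to revise them as gaps surface and "things change."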

“Growing awareness around data privacy issues has compelled consumers to seek more control over their data and take some action to protect their privacy online. However, with over half of Brits saying they don’t know how to safeguard their online privacy, there is still a clear need for education on how people can keep themselves, and their data, safe online.” The extensive study found that 86% of respondents claimed to have taken at least one step to protect themselves online, such as clearing or disabling cookies, limiting what they share on social media platforms, or avoiding public Wi-Fi. Almost exactly the same proportion said they could still do more to protect themselves. In terms of what keeps consumers awake at night, NortonLifeLock found that 65% of Brits believe facial recognition technology will be misused and abused, and 42% believe it will do more harm than good, even though the majority also seem to support its use, with over 70% supporting its use by law enforcement.

What are deepfakes – and how can you spot them?

Deepfake technology can create convincing but entirely fictional photos from scratch. A non-existent Bloomberg journalist, “Maisy Kinsley”, who had a profile on LinkedIn and Twitter, was probably a deepfake. Another LinkedIn fake, “Katie Jones”, claimed to work at the Center for Strategic and International Studies, but is thought to be a deepfake created for a foreign spying operation. Audio can be deepfaked too, to create “voice skins” or “voice clones” of public figures. Last March, the chief of a UK subsidiary of a German energy firm paid nearly £200,000 into a Hungarian bank account after being phoned by a fraudster who mimicked the German CEO’s voice. The company’s insurers believe the voice was a deepfake, but the evidence is unclear. Similar scams have reportedly used recorded WhatsApp voice messages. ... Poor-quality deepfakes are easier to spot. The lip-syncing might be bad, or the skin tone patchy. There can be flickering around the edges of transposed faces. And fine details, such as hair, are particularly hard for deepfakes to render well, especially where individual strands are visible on the fringe.
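As a rough illustration of the "flickering around the edges" cue, the Python sketch below scores how much a band of pixels around a face boundary changes between consecutive frames, then flags sudden jumps. It is a toy heuristic, not a method from the article or a real detector. It rests on three assumptions: frames are small grayscale images stored as lists of rows, the `band` of (row, column) positions comes from some hypothetical face tracker, and the threshold is arbitrary.

```python
# Hypothetical representation: a grayscale frame as rows of 0-255 pixel values.
Frame = list[list[int]]
Pixel = tuple[int, int]  # (row, column)


def boundary_flicker(frames: list[Frame], band: list[Pixel]) -> list[float]:
    """Mean absolute change of the band pixels between each pair of consecutive frames."""
    scores = []
    for prev, curr in zip(frames, frames[1:]):
        total = sum(abs(curr[r][c] - prev[r][c]) for r, c in band)
        scores.append(total / len(band))
    return scores


def flag_flicker(scores: list[float], threshold: float) -> list[int]:
    """Indices of frame transitions whose boundary change exceeds the threshold."""
    return [i for i, score in enumerate(scores) if score > threshold]


# Toy 2x2 clip: the top row stands in for the face edge and jumps in the last frame.
clip = [
    [[10, 10], [0, 0]],
    [[10, 10], [0, 0]],
    [[60, 60], [0, 0]],
]
edge_band = [(0, 0), (0, 1)]
scores = boundary_flicker(clip, edge_band)
print(scores)                    # [0.0, 50.0]
print(flag_flicker(scores, 20))  # [1]
```

Real footage has legitimate motion along face edges, so any practical check would need to separate flicker from ordinary movement. The sketch only shows the basic idea of measuring frame-to-frame instability.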

Spike in Remote Work Leads to 40% Increase in RDP Exposure to Hackers

As COVID-19 continues to wreak havoc globally, companies are keeping their employees at home. To ensure compliance and keep up with security standards, teleworkers have to connect to their company’s infrastructure using the remote desktop protocol (RDP) and virtual private networks (VPNs). But not everyone uses these solutions securely. Research by the team behind Shodan, the search engine for Internet-connected devices, reveals that IT departments globally are exposing their organizations to risk as more companies go remote due to COVID-19. “The Remote Desktop Protocol (RDP) is a common way for Windows users to remotely manage their workstation or server. However, it has a history of security issues and generally shouldn’t be publicly accessible without any other protections (ex. firewall whitelist, 2FA),” writes Shodan creator John Matherly. After pulling new data on devices exposed via RDP and VPN, Matherly found that the number of devices exposing RDP to the Internet on standard ports, such as the default RDP port 3389, jumped by more than 40 percent over the past month. In an attempt to foil hackers, IT administrators sometimes put an insecure service on a non-standard port (also known as security by obscurity), Matherly notes.
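Administrators who want to confirm that their own machines are not exposing RDP can start with a simple TCP reachability check. The Python sketch below uses only the standard library's `socket.create_connection`. It assumes you are testing hosts you administer, and the helper names `is_port_open` and `open_ports` are hypothetical. A successful connection only shows that something is listening on the port, not that it is RDP.

```python
import socket

RDP_DEFAULT_PORT = 3389  # standard TCP port for the Remote Desktop Protocol


def is_port_open(host: str, port: int = RDP_DEFAULT_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False


def open_ports(host: str, ports: list[int]) -> list[int]:
    """Return the subset of `ports` on `host` that accept TCP connections."""
    return [port for port in ports if is_port_open(host, port)]
```

For example, `open_ports("203.0.113.10", [3389, 3390])` would report which of those ports accept connections on that documentation-range address. Port 3390 is just an arbitrary stand-in for a non-standard port. Checking more than the default port matters because, as Matherly notes, moving a service to a non-standard port is only security by obscurity: a scan that tries other ports will still find it.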

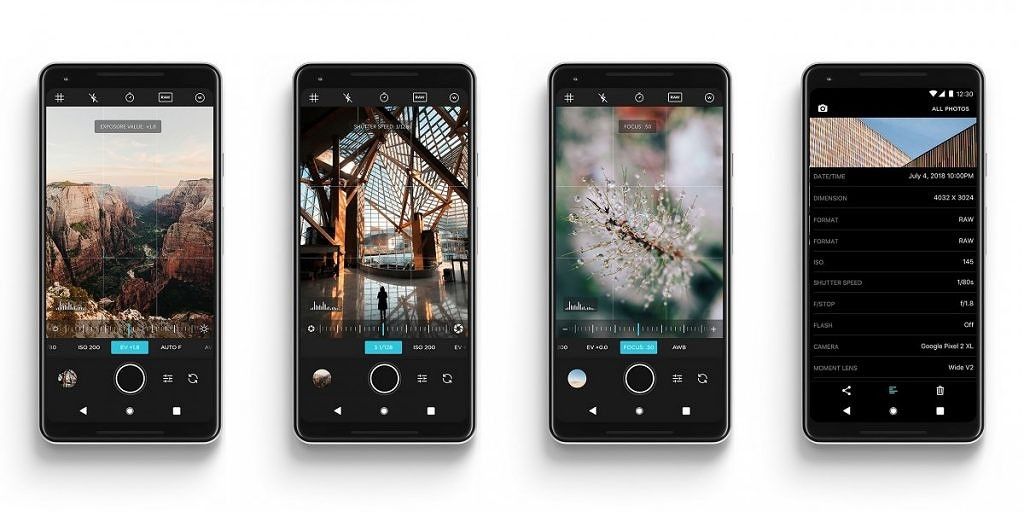

Google’s CameraX Android API will let third-party apps use the best features of the stock camera

The benefit of using CameraX as a wrapper for the Camera2 API is that, internally, it resolves any device-specific compatibility issues that may arise. This alone will be useful for camera app developers, since it can reduce boilerplate code and time spent researching camera problems. That’s not all that CameraX can do, though. While that first part is mostly of interest to developers, another part applies to both developers and end users: Vendor Extensions. This is Google’s answer to camera feature fragmentation on Android. Device manufacturers can opt to ship extension libraries with their phones that allow CameraX, and therefore developers and users, to leverage native camera features. For example, say you really like Samsung’s Portrait Mode effect, but you don’t like the camera app itself. If Samsung decides to implement a CameraX Portrait Mode extension in its phones, any third-party app using CameraX will be able to use Samsung’s Portrait Mode. This isn’t confined to that one feature, of course: manufacturers can theoretically open up any of their camera features to apps using CameraX.
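The vendor-extension idea can be modeled in a few lines. The Python sketch below is a conceptual toy, not the CameraX API (which is a Kotlin/Java Android library). The `CameraWrapper` class, its `has_extension` and `capture` methods, and the `"portrait"` mode name are all hypothetical. It shows the pattern described above: an app calls one wrapper, which uses a manufacturer-supplied implementation when the device ships one and falls back to a plain capture otherwise.

```python
from typing import Callable, Optional

# Hypothetical type: an "effect" turns a captured frame into a processed one.
Effect = Callable[[str], str]


class CameraWrapper:
    """Toy stand-in for a camera library that apps call instead of vendor code."""

    def __init__(self, vendor_extensions: Optional[dict[str, Effect]] = None) -> None:
        # Extensions the manufacturer chose to ship on this device, keyed by mode name.
        self._extensions = dict(vendor_extensions or {})

    def has_extension(self, mode: str) -> bool:
        """Report whether the device ships an implementation for this mode."""
        return mode in self._extensions

    def capture(self, mode: str = "auto") -> str:
        frame = "raw-frame"  # placeholder for real image data
        effect = self._extensions.get(mode)
        # Use the vendor's implementation when present, otherwise return the plain frame.
        return effect(frame) if effect is not None else frame


# A phone whose manufacturer ships a portrait extension, and one that does not.
vendor_phone = CameraWrapper({"portrait": lambda frame: f"vendor-portrait({frame})"})
plain_phone = CameraWrapper()

print(vendor_phone.capture("portrait"))  # vendor-portrait(raw-frame)
print(plain_phone.capture("portrait"))   # raw-frame
```

The app code is identical on both phones. Only the set of extensions each device ships differs, which is what lets a third-party app reuse a manufacturer's feature without bundling it.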

Personal details for the entire country of Georgia published online

Personal information such as full names, home addresses, dates of birth, ID numbers, and mobile phone numbers was shared online in a 1.04 GB MDB (Microsoft Access database) file. The leaked data was spotted by Under the Breach, a data breach monitoring and prevention service, and shared with ZDNet over the weekend. The database contained 4,934,863 records, including details for millions of deceased citizens, as can be seen from the screenshot below. Georgia's current population is estimated at 3.7 million, according to a 2019 census. It is unclear whether the forum user who shared the data is the one who obtained it. The data's source also remains a mystery. On Sunday, ZDNet initially reported the leak as coming from Georgia's Central Election Commission (CEC), but in a statement on Monday, the commission denied that the data originated from its servers, as it contained information that the commission does not usually collect.

AlphaFold Algorithm Predicts COVID-19 Protein Structures

AlphaFold is composed of three distinct layers of deep neural networks. The first layer is a variational autoencoder stacked with an attention model, which generates realistic-looking fragments based on a single sequence’s amino acids. The second layer is split into two sublayers. The first sublayer optimizes inter-residue distances by applying a 1D CNN to a contact map, a 2D representation of the distances between amino acid residues that is projected onto a single dimension so it can be fed into the CNN. The second sublayer optimizes a scoring network, which uses a 3D CNN to measure how much the generated substructures look like a protein. After regularization, a third neural network layer scores the generated protein against the actual model. The model was trained on the Protein Data Bank, a freely accessible database that contains the three-dimensional structures of large biological molecules such as proteins and nucleic acids.
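A contact map is straightforward to compute when residue coordinates are known, which is what the structures in the Protein Data Bank provide. The Python sketch below builds a binary contact map from 3D coordinates. The 8 Å cutoff is a commonly used convention (often applied to Cβ atoms) rather than a value from the article. The coordinates are made up, and nothing here reproduces AlphaFold's networks.

```python
import math

Coord = tuple[float, float, float]  # (x, y, z) position of a residue, in angstroms


def contact_map(coords: list[Coord], threshold: float = 8.0) -> list[list[int]]:
    """Binary N x N map: entry [i][j] is 1 if residues i and j are within `threshold` angstroms."""
    n = len(coords)
    return [
        [1 if math.dist(coords[i], coords[j]) <= threshold else 0 for j in range(n)]
        for i in range(n)
    ]


# Three made-up residues along the x-axis: 0 and 1 are close, 2 is far away.
residues = [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0), (20.0, 0.0, 0.0)]
for row in contact_map(residues):
    print(row)
# [1, 1, 0]
# [1, 1, 0]
# [0, 0, 1]
```

The map is symmetric with ones on the diagonal, since every residue is trivially in contact with itself. Keeping the raw distances instead of thresholding them gives the distance matrix that contact maps are derived from.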

Quote for the day:

"A leader knows what's best to do. A manager knows merely how best to do it." -- Ken Adelman