OmniML releases platform for building lightweight ML models for the edge

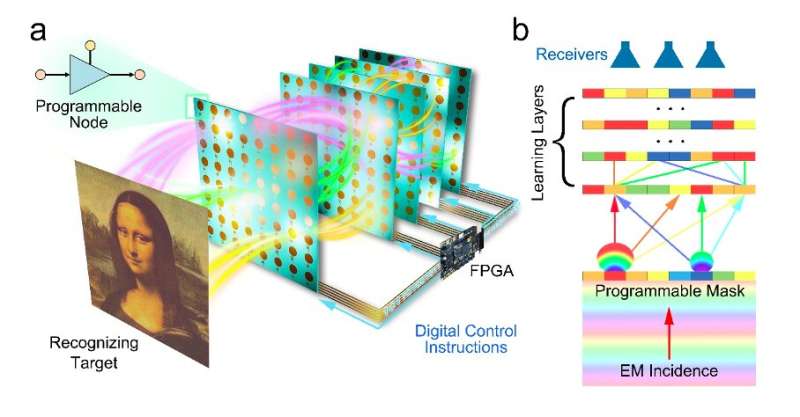

“Today’s AI is too big, as modern deep learning requires a massive amount of computational resources, carbon footprint, and engineering efforts. This makes AI on edge devices extremely difficult because of the limited hardware resources, the power budget, and deployment challenges,” said Di Wu, cofounder and CEO of OmniML. “The fundamental cause of the problem is the mismatch between AI models and hardware, and OmniML is solving it from the root by adapting the algorithms for edge hardware,” Wu said. “This is done by improving the efficiency of a neural network using a combination of model compression, neural architecture rebalances, and new design primitives.”

This approach, which grew out of the research of Song Han, an assistant professor of electrical engineering and computer science at MIT, uses a “deep compression” technique that reduces the size of a neural network without losing accuracy, so the solution can better optimize ML models for different chips and devices at the network’s edge.
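To give a feel for what “deep compression” involves: the published technique from Han’s group combines pruning, trained quantization (weight sharing), and Huffman coding, with retraining between steps. The following is a toy, framework-free sketch of the first two steps on a flat list of weights, assuming Python 3.9+. It is not OmniML’s implementation; the function names are hypothetical, and it skips the fine-tuning that lets real networks keep their accuracy.

```python
"""Toy, framework-free illustration of two steps associated with "deep
compression": magnitude pruning and weight sharing via 1-D k-means."""
from statistics import mean


def prune_by_magnitude(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # how many weights to remove
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def nearest(value: float, centroids: list[float]) -> int:
    """Index of the centroid closest to value."""
    return min(range(len(centroids)), key=lambda j: abs(value - centroids[j]))


def share_weights(weights: list[float], n_clusters: int,
                  iters: int = 20) -> tuple[list[float], list[int]]:
    """Cluster the surviving weights into a small codebook.

    Returns (codebook, indices); index -1 marks a pruned (zero) weight, so each
    surviving weight can be stored as a short index instead of a full float.
    """
    survivors = [w for w in weights if w != 0.0]
    if not survivors:
        return [], [-1] * len(weights)
    lo, hi = min(survivors), max(survivors)
    # Spread the initial centroids linearly over the weight range.
    step = (hi - lo) / max(n_clusters - 1, 1)
    centroids = [lo + step * j for j in range(n_clusters)]
    for _ in range(iters):
        buckets: list[list[float]] = [[] for _ in centroids]
        for w in survivors:
            buckets[nearest(w, centroids)].append(w)
        # Move each centroid to the mean of its bucket (keep it if the bucket is empty).
        centroids = [mean(b) if b else c for b, c in zip(buckets, centroids)]
    indices = [-1 if w == 0.0 else nearest(w, centroids) for w in weights]
    return centroids, indices


def decode(codebook: list[float], indices: list[int]) -> list[float]:
    """Rebuild an approximate weight list from the codebook and indices."""
    return [0.0 if i == -1 else codebook[i] for i in indices]


if __name__ == "__main__":
    weights = [0.9, -0.05, 0.42, -0.88, 0.01, 0.4, -0.9, 0.03]
    pruned = prune_by_magnitude(weights, sparsity=0.5)
    codebook, indices = share_weights(pruned, n_clusters=2)
    print(pruned)   # [0.9, 0.0, 0.42, -0.88, 0.0, 0.0, -0.9, 0.0]
    print(indices)  # [1, -1, 1, 0, -1, -1, 0, -1]
    print(decode(codebook, indices))
```

With two clusters, each surviving weight needs only a 1-bit index into a two-entry codebook rather than a 32-bit float, which is where much of the size reduction comes from.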

Kestra: A Scalable Open-Source Orchestration and Scheduling Platform

Kestra is built upon well-known tools such as Apache Kafka and Elasticsearch. The Kafka architecture provides scalability: every worker in a Kestra cluster is implemented as a Kafka consumer, and the execution state of a workflow is managed by an executor implemented with Kafka Streams. Elasticsearch is used as a database for displaying, searching, and aggregating all the data.

The concept of a workflow, called a Flow in Kestra, is at the heart of the platform. A Flow is a list of tasks defined with a descriptive language based on YAML. It can describe simple workflows, but it also supports more complex scenarios such as dynamic tasks and flow dependencies. Flows can be triggered by events such as the results of other flows, the detection of files in Google Cloud Storage, or the results of a SQL query. Flows can also be scheduled at regular intervals based on a cron expression. Furthermore, Kestra exposes an API to trigger a workflow from any application, or you can simply start one directly from the Web UI.
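The executor/worker split can be illustrated with a small single-process sketch. Here Python’s queue.Queue stands in for Kafka topics, a flow is a dict of Python callables run in declaration order, and the state names and the `run_flow` function are illustrative assumptions. None of this is Kestra’s actual API or YAML schema, and a real Kestra executor and its workers run concurrently across a cluster.

```python
"""Single-process toy of an executor/worker split like the one described for
Kestra. queue.Queue stands in for Kafka topics; this is not Kestra's API."""
import queue
from typing import Callable

# A task receives the outputs of earlier tasks and returns its own output.
Task = Callable[[dict], object]


def run_flow(tasks: dict[str, Task]) -> tuple[str, dict]:
    """Run tasks sequentially in declaration order; return (final state, outputs)."""
    work_topic: queue.Queue = queue.Queue()    # executor -> workers
    result_topic: queue.Queue = queue.Queue()  # workers -> executor
    order = list(tasks)
    outputs: dict = {}
    state = "CREATED"
    next_index = 0

    while state not in ("SUCCESS", "FAILED"):
        # Executor: derive the next action from the current execution state.
        if next_index == len(order):
            state = "SUCCESS"
            continue
        state = "RUNNING"
        work_topic.put((order[next_index], dict(outputs)))

        # Worker: consume one task message, run it, publish the result.
        task_id, inputs = work_topic.get()
        try:
            result_topic.put((task_id, "OK", tasks[task_id](inputs)))
        except Exception as exc:
            result_topic.put((task_id, "FAILED", repr(exc)))

        # Executor: fold the worker's result back into the execution state.
        task_id, status, value = result_topic.get()
        outputs[task_id] = value
        if status == "FAILED":
            state = "FAILED"
        else:
            next_index += 1
    return state, outputs


if __name__ == "__main__":
    flow = {
        "extract": lambda _: [3, 1, 2],
        "transform": lambda out: sorted(out["extract"]),
        "load": lambda out: f"loaded {len(out['transform'])} rows",
    }
    print(run_flow(flow))
    # ('SUCCESS', {'extract': [3, 1, 2], 'transform': [1, 2, 3], 'load': 'loaded 3 rows'})
```

Keeping execution state only in the executor, and having workers exchange nothing but messages, is what lets the real system add workers as extra Kafka consumers without coordinating them directly.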

Chaos Engineering Was Missing from Harness’ CI/CD Before ChaosNative Purchase

Chaos engineering has emerged as an increasingly essential practice for maintaining the reliability of applications in cloud native environments. Unlike pre-production testing, chaos engineering involves determining when and how software might break in production by testing it in a non-production scenario. Think of chaos engineering as an overlap between reliability testing and experimentation with code and applications across a continuous integration/continuous delivery (CI/CD) pipeline: it obtains metrics and data about how an application might fail when certain errors are induced.

Of the ChaosNative offerings that Harness has purchased, ChaosNative Litmus Enterprise has helped DevOps teams and site reliability engineers (SREs) adopt self-managed chaos engineering tools, while the cloud service, ChaosNative Litmus Cloud, offers a hosted LitmusChaos control plane. Indeed, chaos engineering has become increasingly critical for DevOps teams, especially those seeking to increase agility by applying chaos engineering from the very beginning of the production cycle.
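The idea of inducing errors and measuring how an application copes can be shown in miniature. This hedged sketch wraps a dependency so that it fails at a chosen rate, then measures the success rate that a simple retry policy achieves. All names (`inject_faults`, `call_with_retries`, `run_experiment`) are hypothetical; this is not how LitmusChaos works, since LitmusChaos runs its experiments against Kubernetes workloads.

```python
"""Toy chaos experiment: inject failures into a dependency and measure how
well a retry policy copes. Not LitmusChaos; all names are hypothetical."""
import random
from typing import Callable


class InjectedFault(Exception):
    """Raised by the injector to simulate a failing dependency."""


def inject_faults(func: Callable[[], str], failure_rate: float,
                  rng: random.Random) -> Callable[[], str]:
    """Wrap func so that each call fails with probability failure_rate."""
    def wrapped() -> str:
        if rng.random() < failure_rate:
            raise InjectedFault("injected failure")
        return func()
    return wrapped


def call_with_retries(func: Callable[[], str], attempts: int) -> str:
    """Resilience mechanism under test: try func up to `attempts` times."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return func()
        except InjectedFault:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to the caller
    raise AssertionError("unreachable")


def run_experiment(failure_rate: float, attempts: int,
                   requests: int = 1000, seed: int = 42) -> float:
    """Send `requests` calls through the faulty dependency; return the success rate."""
    rng = random.Random(seed)  # seeded so the experiment is reproducible
    dependency = inject_faults(lambda: "ok", failure_rate, rng)
    successes = 0
    for _ in range(requests):
        try:
            call_with_retries(dependency, attempts)
            successes += 1
        except InjectedFault:
            pass
    return successes / requests


if __name__ == "__main__":
    for rate in (0.1, 0.3, 0.5):
        print(f"failure rate {rate:.0%}: "
              f"no retries {run_experiment(rate, 1):.1%}, "
              f"3 attempts {run_experiment(rate, 3):.1%}")
```

With independent failures at rate p, up to n attempts should succeed roughly 1 - p^n of the time (about 97% for p = 0.3 and n = 3). The point of the experiment is to check a resilience assumption like that empirically rather than trusting it.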

CIO interview: Craig York, CTO, Milton Keynes University Hospital

“Going into multiple systems is a pain for our clinicians,” he says. “It’s not very efficient, and you need to keep track of the different patients that you’re looking at across the same timeframe. We have embedded the EDRM system within Cerner Millennium, so our EPR is the system of record for our clinicians.

“You click a button and it logs you into the medical records and you can scan through those as you wish. You’re in the right record, there’s more efficiency, and there’s better patient safety as well.”

The hospital’s internal IT team undertook the project in close collaboration with software developers at CCube Solutions. York says it was a complex project, but because the teams worked together to achieve set goals, the integration of the EDRM and EPR systems is now delivering big benefits for the hospital.

“Sometimes in healthcare, we underplay how complicated things are,” he says. “The work with CCube is an example of where we’ve asked an organisation to step up and deliver on our requirements, and they’ve done it and they’ve proved their capabilities. We are now reaping rewards from that effort, so I’m thankful to them for that.”

Continuous Machine Learning

Now imagine a team of machine learning (ML) engineers and data scientists trying to achieve the same, but with an ML model. There are a few complexities involved. Developing an ML model isn’t the same as developing software. Most of the code is essentially a black box, which makes it difficult to pinpoint issues in ML code. Verifying ML code is an art unto itself: the static code checks and code quality checks used for conventional software aren’t sufficient, so we also need data checks, sanity checks, and bias verification. ...

CML injects CI/CD-style automation into the workflow. Most of the configuration is defined in a cml.yaml config file kept in the repository. For example, this file can specify which actions are performed when a feature branch is ready to be merged into the main branch. When a pull request is raised, GitHub Actions runs this workflow and performs the activities specified in the config file, such as running the train.py script or generating an accuracy report. CML works with a set of predefined functions called CML Functions. These help the workflow by, for example, publishing reports as comments or even launching a cloud runner to execute the rest of the workflow.
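On the training side, a CML pipeline runs a script that writes its results to a file the workflow can publish. The following hypothetical train.py runs a few data sanity checks, fits a deliberately trivial threshold classifier, and writes report.md; a later step in cml.yaml would then post that file as a pull-request comment using a CML command (check the CML documentation for the exact command in your version). The data, the checks, and the model are illustrative assumptions.

```python
"""Hypothetical train.py for a CML-style pipeline: run data checks, fit a
deliberately trivial model, and write a Markdown report for CI to publish."""
import math
from pathlib import Path

Row = tuple[float, int]  # (feature value, binary label)


def check_data(rows: list[Row]) -> list[str]:
    """Return data-check failures; an empty list means every check passed."""
    problems = []
    if not rows:
        problems.append("dataset is empty")
    if any(math.isnan(x) for x, _ in rows):
        problems.append("feature contains NaN")
    if any(label not in (0, 1) for _, label in rows):
        problems.append("labels must be 0 or 1")
    if rows and len({label for _, label in rows}) < 2:
        problems.append("only one class present")
    return problems


def accuracy(rows: list[Row], threshold: float) -> float:
    """Share of rows where 'predict 1 if x >= threshold' matches the label."""
    return sum((x >= threshold) == (y == 1) for x, y in rows) / len(rows)


def fit_threshold(rows: list[Row]) -> float:
    """Pick the candidate threshold with the best training accuracy."""
    candidates = sorted({x for x, _ in rows})
    return max(candidates, key=lambda t: accuracy(rows, t))


def render_report(threshold: float, test_accuracy: float) -> str:
    """Markdown body that a CI step could post as a pull-request comment."""
    return (
        "## Model report\n\n"
        f"- threshold: {threshold}\n"
        f"- test accuracy: {test_accuracy:.2%}\n"
    )


def main(train: list[Row], test: list[Row], report_path: Path) -> float:
    problems = check_data(train) + check_data(test)
    if problems:
        # Failing loudly makes the CI job, and so the pull-request check, fail.
        raise ValueError("data checks failed: " + "; ".join(problems))
    threshold = fit_threshold(train)
    test_accuracy = accuracy(test, threshold)
    report_path.write_text(render_report(threshold, test_accuracy))
    return test_accuracy


if __name__ == "__main__":
    train = [(0.1, 0), (0.35, 0), (0.4, 0), (0.7, 1), (0.8, 1), (0.9, 1)]
    test = [(0.2, 0), (0.5, 0), (0.65, 1), (0.75, 1)]
    print(main(train, test, Path("report.md")))  # 0.75
```

Putting the data checks before training means a malformed dataset blocks the merge in the same way a failing unit test would, which is the kind of check the paragraph above says conventional code checks miss.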

Cybercriminals’ phishing kits make credential theft easier than ever

Phishing kits make it easier for cybercriminals without technical knowledge to launch phishing campaigns. Another reason criminals use kits is that phishing pages are frequently detected within a few hours of going live and are quickly shut down. Hosting providers are often alerted by internet users who receive phishing emails, and they take the phishing page down as soon as possible. Phishing kits make it possible to host multiple copies of phishing pages faster, enabling the fraud to stay up for longer.

Finally, some phishing kits provide anti-detection systems. They might be configured to refuse connections from known bots belonging to security or anti-phishing companies, or to search engines, because a phishing page that gets indexed by a search engine is generally taken down or blocked faster. Some kits also use geolocation as a countermeasure: a phishing page targeting one language should not be opened by someone using another language. And some phishing kits use light or heavy obfuscation to avoid being detected by automated anti-phishing solutions.

What is the Spanning Tree Protocol?

Spanning Tree is a protocol that governs how data is forwarded across a network. It’s one part traffic cop and one part civil engineer for the network highways that data travels through. It sits at Layer 2 (the data link layer), so it is simply concerned with moving frames to their appropriate destination, not with what kind of traffic is being sent or the data it contains. Spanning Tree has become so ubiquitous that its use is defined in the IEEE 802.1D networking standard. As defined in the standard, only one active path can exist between any two endpoints or stations in order for them to function properly.

Spanning Tree is designed to eliminate the possibility that data passing between network segments will get stuck in a loop. In general, loops confuse the forwarding algorithm installed in network devices, so that a device no longer knows where to send packets. This can result in the duplication of frames or the forwarding of duplicate packets to multiple destinations. Messages can get repeated. Communications can bounce back to a sender. Too many loops can even crash a network, eating up bandwidth without any appreciable gain while blocking other, non-looped traffic from getting through.
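To make “only one active path” concrete, here is a simplified, centralized sketch of the topology the protocol converges to on point-to-point links: elect the bridge with the lowest ID as root, compute each bridge’s lowest-cost path to it, keep one root port per bridge, and block every remaining link. Real STP reaches this result in a distributed way by exchanging BPDUs, builds bridge IDs from a priority plus a MAC address, uses port IDs as further tie-breakers, and also handles shared segments. The `spanning_tree` function, the integer bridge IDs, and the link tuples here are hypothetical simplifications, and the sketch assumes every bridge is connected.

```python
"""Centralised sketch of the topology Spanning Tree converges to on
point-to-point links. Real 802.1D bridges reach this state in a distributed
way by exchanging BPDUs; this is not a protocol implementation."""
import heapq

Link = tuple[int, int, int]  # (bridge_a, bridge_b, path_cost)


def spanning_tree(bridges: list[int], links: list[Link]) -> tuple[int, list[Link], list[Link]]:
    """Return (root bridge, forwarding links, blocked links).

    Bridge IDs are plain integers standing in for 802.1D's priority + MAC
    identifier: the lowest ID wins the root election.
    """
    if any(cost <= 0 for _, _, cost in links):
        raise ValueError("path costs must be positive")
    root = min(bridges)
    adjacency: dict[int, list[tuple[int, int, int]]] = {b: [] for b in bridges}
    for index, (a, b, cost) in enumerate(links):
        adjacency[a].append((b, cost, index))
        adjacency[b].append((a, cost, index))

    # Root path cost of every bridge: Dijkstra's algorithm from the root.
    dist = {b: float("inf") for b in bridges}
    dist[root] = 0
    heap = [(0, root)]
    while heap:
        d, bridge = heapq.heappop(heap)
        if d > dist[bridge]:
            continue  # stale heap entry
        for neighbour, cost, _ in adjacency[bridge]:
            if d + cost < dist[neighbour]:
                dist[neighbour] = d + cost
                heapq.heappush(heap, (d + cost, neighbour))

    # Each reachable non-root bridge keeps exactly one root port: the link on
    # its cheapest path to the root (ties: lower neighbour ID, then link index).
    forwarding = set()
    for bridge in bridges:
        if bridge == root or dist[bridge] == float("inf"):
            continue
        _, _, index = min((dist[n] + cost, n, i) for n, cost, i in adjacency[bridge])
        forwarding.add(index)

    # Links that are no bridge's root port are blocked; in a connected
    # topology each of them would otherwise close a loop.
    active = [links[i] for i in sorted(forwarding)]
    blocked = [link for i, link in enumerate(links) if i not in forwarding]
    return root, active, blocked


if __name__ == "__main__":
    # Three switches wired in a triangle: one link must be blocked.
    print(spanning_tree([10, 20, 30], [(10, 20, 4), (20, 30, 4), (10, 30, 19)]))
    # (10, [(10, 20, 4), (20, 30, 4)], [(10, 30, 19)])
```

Because every path cost is positive, each root port leads to a bridge strictly closer to the root, so the forwarding links always form a tree with one fewer link than there are bridges.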

5 elements of employee experience that impact customer experience and revenue growth

Companies are leaving money on the table: breaking silos between employee experience and customer experience can lead to a massive opportunity for revenue growth of up to 50% or more.

Companies think they have to choose between prioritizing employee experience and customer experience, and customer experience is winning. Approximately nine in 10 C-suite members (88%) say employees are encouraged to focus on customers’ needs above all else, even though the C-suite knows that a powerful customer experience starts with an employee-first approach.

Five core elements of employee experience impact customer experience and growth: Trust, C-Suite Accountability, Alignment, Recognition, and Seamless Technology.

There is a disconnect between C-suite perception and employee experience: 71% of C-suite leaders report that their employees are engaged with their work when, in reality, only 51% of employees say they are; 70% of leaders report that their employees are happy, while only 44% of employees say they are.

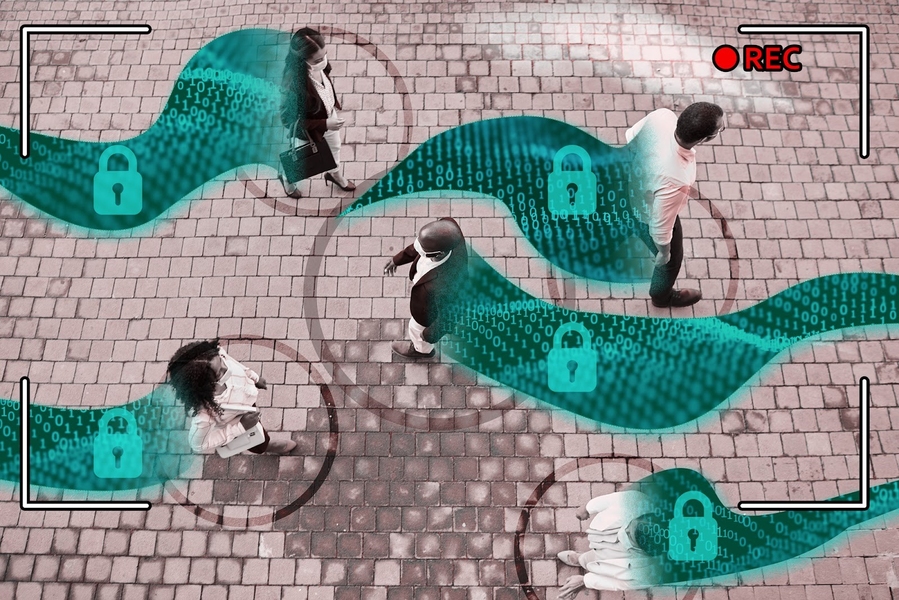

Don’t take data for granted

Thanks to the emergence of cloud-native architecture, where we containerize, deploy microservices, and separate the data and compute tiers, we can now bring all of that together without the complexity. We can dedicate some nodes as Kafka sinks, generate change data capture (CDC) feeds on other nodes, and persist data on still others, all under a single umbrella on the same physical or virtual cluster.

As data goes global, we also have to worry about governing it. Increasingly, there are mandates for keeping data inside the country of origin, along with varying privacy rights and data retention requirements depending on the jurisdiction. Restrictions on data movement across national boundaries are, indirectly, prompting the question of hybrid cloud. There are other rationales for data gravity, especially with established back-office systems managing financial and customer records, where the interdependencies between legacy applications may make it impractical to move data into a public cloud. Those well-entrenched ERP systems and the like represent the final frontier for cloud adoption.
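One way to honor a “keep data in its country of origin” mandate is to route every write through a residency check. The sketch below is hypothetical throughout: the policy table, the country codes chosen, the region names, and the `ResidencyRouter` class are placeholders, not a summary of any jurisdiction’s actual rules, and in-memory lists stand in for regional databases.

```python
"""Hypothetical residency-aware write path: each record is stored only in a
region that the (made-up) policy table permits for its country of origin."""
from dataclasses import dataclass, field

# Placeholder policy: country of origin -> regions where its data may be stored.
# These entries are illustrative, not a summary of any jurisdiction's law.
RESIDENCY_POLICY: dict[str, set[str]] = {
    "DE": {"eu-central"},
    "FR": {"eu-central", "eu-west"},
    "CA": {"ca-central"},
}
UNRESTRICTED: set[str] = {"us-east", "eu-west"}  # countries with no mandate


@dataclass
class Record:
    record_id: str
    country: str  # ISO 3166-1 alpha-2 code of the data's country of origin
    payload: dict = field(default_factory=dict)


class ResidencyRouter:
    def __init__(self, regions: list[str]):
        # region name -> in-memory list standing in for a regional database
        self.stores: dict[str, list[Record]] = {r: [] for r in regions}

    def allowed_regions(self, country: str) -> set[str]:
        return RESIDENCY_POLICY.get(country, UNRESTRICTED)

    def write(self, record: Record) -> str:
        """Store the record in a permitted region and return that region."""
        candidates = sorted(self.allowed_regions(record.country) & set(self.stores))
        if not candidates:
            # Refuse rather than silently moving data across a border.
            raise PermissionError(f"no permitted region for data from {record.country}")
        region = candidates[0]  # deterministic; a real system might pick the nearest
        self.stores[region].append(record)
        return region


if __name__ == "__main__":
    router = ResidencyRouter(["eu-central", "us-east"])
    print(router.write(Record("r1", "DE")))  # eu-central
    print(router.write(Record("r2", "US")))  # us-east
```

Failing closed (raising instead of falling back to any available region) is the design choice that matters here: when no compliant region exists, the write is refused, which is exactly the pressure that pushes such workloads toward hybrid or multi-region deployments.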

What makes a digital transformation project ethical?

One of the best ways to approach ethical digital transformation is to look to your community. This is your core user base, and it might be made up of customers, peers, and your own people from within your organisation. Though it can be a time-consuming process, engaging with the community on your digital transformation plans has a number of benefits when driving forward an ethical initiative. Crucially, consulting both internal and external stakeholders can help identify any unanticipated policy concerns or technical issues. This is inherently valuable from a technical standpoint, as building out channels of communication and feedback allows you to fix mistakes while remaining agile and constructive.

Surfacing community concerns is especially important when ethics are part of your organisation’s mission statement. Not only does gathering feedback highlight any potential hidden concerns around the digital products you will be building, but engagement also goes hand in hand with both perceived and actual transparency, since gathering valuable feedback requires a degree of openness about the project.

Quote for the day:

"We get our power from the people we lead, not from our stars and our bars." -- J. Stanford