Microsoft says cloud demand waning, plans to infuse AI into products

Revenue from Microsoft Azure and other cloud services grew 38% year over year in constant currency, a slowdown of 4 percentage points from the previous quarter. “As I noted earlier, we exited Q2 with Azure growth in the mid-30s in constant currency. And from that, we expect Q3 growth to decelerate roughly four to five points in constant currency,” Amy Hood, chief financial officer at Microsoft, said during an earnings call. Microsoft Chief Executive Satya Nadella warned that cloud growth is expected to slow further through the year.

“As I meet with customers and partners, a few things are increasingly clear. Just as we saw customers accelerate their digital spend during the pandemic, we are now seeing them optimize that spend,” Nadella said during the earnings call, adding that enterprises were exercising caution in their cloud spending. Enterprises optimizing their spend, Nadella explained, wanted to get the maximum return on their investment and free up savings to put into new workloads.
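The growth figures above mix two different kinds of "percent", so a short worked calculation may help. It reads the reported slowdown as 4 percentage points and treats the 38% figure as the Q2 result; the Q3 line additionally assumes, purely for illustration, that Hood's "mid-30s" exit rate means about 35%. Note that the guidance starts from that exit rate, not from the 38% quarterly average.

```latex
\begin{aligned}
\text{previous-quarter growth} &= 38\% + 4\ \text{pts} = 42\% \\
\text{relative slowdown} &= \frac{42 - 38}{42} \approx 9.5\% \\
\text{implied Q3 growth (assuming a 35\% exit rate)} &\approx 35\% - (4 \text{ to } 5)\ \text{pts} = 30\% \text{ to } 31\%
\end{aligned}
```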

Why Software Talent Is Still in Demand Despite Tech Layoffs, Downturn and a Potential Recession

We live in a world run by software. With increasing digitization, there will always be demand for software solutions, and software developers in particular are in high demand within the tech industry. In the age of data, firms need software developers who can analyze data to create software solutions. They also use that data to understand user needs, monitor performance and modify programs accordingly. Software developers have skills that make them valuable in many industries: as long as an industry needs software solutions, a developer can provide them and customize them for the firms that need them. ... Many tech workers suffered a terrible blow in 2022. Their prestigious jobs at giant tech firms vanished, leaving many stranded and confused. However, there is still significant demand for tech professionals in our technological world, particularly software developers. Software development is the bedrock of the tech industry, and software engineers with valuable skill sets, experience and drive will quickly find other positions and opportunities.

Cybercrime Ecosystem Spawns Lucrative Underground Gig Economy

Improving defenses have forced attackers to improve their tools and techniques, driving the need for more technical specialists, explains Polina Bochkareva, a security services analyst at Kaspersky. "Business related to illegal activities is growing on underground markets, and technologies are developing along with it," she says. "All this leads to the fact that attacks are also developing, which requires more skilled workers." The underground jobs data highlights the surge in activity in cybercriminal services and the professionalization of the cybercrime ecosystem. Ransomware groups have become much more efficient as they have turned specific facets of operations into services, such as offering ransomware-as-a-service (RaaS), running bug bounties, and creating sales teams, according to a December report. In addition, initial access brokers have productized the opportunistic compromise of enterprise networks and systems, often selling that access to ransomware groups. Such division of labor requires technically skilled people to develop and support the complex features, the Kaspersky report stated.

3 ways to stop cybersecurity concerns from hindering utility infrastructure modernization efforts

Cybersecurity is a priority across industries and borders, but several factors add to the complexity of the unique environment in which utilities operate. Along with a constant barrage of attacks, utilities, as a regulated industry, face several new compliance and reporting mandates, such as the Cyber Incident Reporting for Critical Infrastructure Act of 2022 (CIRCIA). Other security considerations include aging operational technology (OT), which can be challenging to update and protect; the lack of control over third-party technologies and IoT devices such as smart home devices and solar panels; and finally, the biggest threat of all: human error. These risk factors put extra pressure on utilities, as one successful attack can have deadly consequences. A hacker's attempt (thankfully unsuccessful) to poison the water supply in Oldsmar, Florida, is one example that comes to mind. Utilities have a lot to contend with even before adding data analytics into the mix. However, it is interesting to point out that consumers are significantly less worried about the privacy of data collected by utilities.

Why cybersecurity teams are central to organizational trust

No business is an island; it depends on many partners (whether formal business partners or some other relationship), a fact highlighted by the widespread supply chain challenges across many industries over the past couple of years. The security of software supply chains, which is to say the dependencies on upstream libraries and other code that organizations use in their software, is a topic of considerable focus today, including from the U.S. executive branch. It’s still arguably not getting the attention it deserves, though. The aforementioned 2023 Global Tech Outlook report found that, among funding priorities within security, third-party or supply chain risk management came in at the very bottom, with just 12 percent of survey respondents saying it was a top priority. Deb Golden, who leads Deloitte’s U.S. Cyber and Strategic Risk practice, told the authors that there needs to be more scrutiny over supply chains. “Organizations are accountable for safeguarding information and share a responsibility to respond and manage broader network threats in near real-time,” she said.

Global Microsoft cloud-service outage traced to rapid BGP router updates

The withdrawal of BGP routes prior to the outage appeared largely to impact direct peers, ThousandEyes said. With a direct path unavailable during the withdrawal periods, the next best available path would have been through a transit provider. Once direct paths were re-advertised, the BGP best-path selection algorithm would have chosen the shortest path, resulting in a reversion to the original route. These re-advertisements repeated several times, causing significant route-table instability. “This was rapidly changing, causing a lot of churn in the global internet routing tables,” said Kemal Sanjta, principal internet analyst at ThousandEyes, in a webcast analysis of the Microsoft outage. “As a result, we can see that a lot of routers were executing best path selection algorithm, which is not really a cheap operation from a power-consumption perspective.” More importantly, the routing changes caused significant packet loss, leaving customers unable to reach Microsoft Teams, Outlook, SharePoint, and other applications.

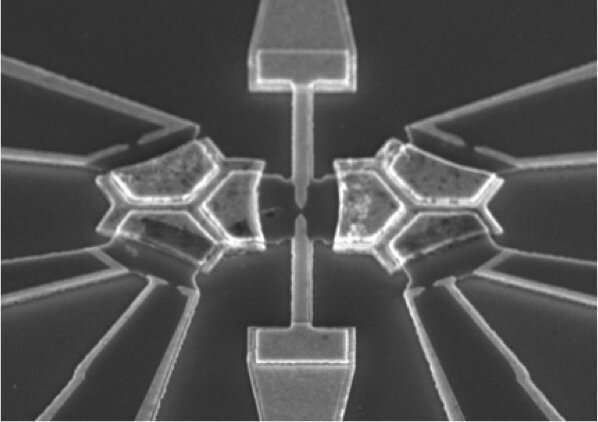
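The withdraw/re-advertise cycle described above can be illustrated with a toy model. This is a simplified, hypothetical simulation, not ThousandEyes' or Microsoft's tooling: it keeps only the AS-path-length step of BGP best-path selection (real routers compare local preference and several other attributes as well), and the route names and AS numbers (documentation-range AS64500 and AS64510) are invented for illustration. Each time the direct route is withdrawn, the longer transit path wins; each time it is re-advertised, the shorter direct path wins again.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Route:
    """A candidate path to one destination prefix (hypothetical, simplified)."""
    next_hop: str
    as_path: tuple  # AS numbers along the path, nearest neighbor first


def best_path(candidates: Iterable[Route]) -> Optional[Route]:
    """Pick the route with the shortest AS path; break ties by next-hop name.

    Real BGP evaluates other attributes (e.g. local preference) before and
    after AS-path length; this sketch keeps only the AS-path step.
    """
    return min(candidates, key=lambda r: (len(r.as_path), r.next_hop), default=None)


def replay(events: Iterable[str], direct: Route, transit: Route) -> list:
    """Apply withdraw/announce events for the direct route and return the
    sequence of selected best paths, with one new entry per change."""
    table = {direct, transit}  # both paths are known before the incident
    current = best_path(table)
    history = [current]
    for event in events:
        if event == "withdraw":
            table.discard(direct)
        elif event == "announce":
            table.add(direct)
        else:
            raise ValueError(f"unknown event: {event!r}")
        chosen = best_path(table)  # selection reruns after every update
        if chosen != current:      # a different winner means the route entry flips
            current = chosen
            history.append(current)
    return history


if __name__ == "__main__":
    # Hypothetical documentation-range ASNs stand in for the real networks.
    direct = Route("direct-peer", ("AS64500",))
    transit = Route("transit-provider", ("AS64510", "AS64500"))
    flaps = ["withdraw", "announce"] * 3
    for route in replay(flaps, direct, transit):
        print(route.next_hop)
```

Running the script prints the selected next hop after each change, alternating between the direct peer and the transit provider. In a real network, each such change is also sent as an update to the router's BGP neighbors, which rerun their own selection; repeated flapping therefore multiplies into the kind of global churn Sanjta describes.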

New analog quantum computers to solve previously unsolvable problems

The essential idea of these analog devices, Goldhaber-Gordon said, is to build a kind of hardware analogy to the problem you want to solve, rather than writing code for a programmable digital computer. For example, say that you wanted to predict the motions of the planets in the night sky and the timing of eclipses. You could do that by constructing a mechanical model of the solar system, where someone turns a crank and rotating, interlocking gears represent the motion of the moon and planets. In fact, such a mechanism, dating back more than 2,000 years, was discovered in an ancient shipwreck off the coast of a Greek island. This device can be seen as a very early analog computer. Not to be sniffed at, analog machines were used even into the late 20th century for mathematical calculations that were too hard for the most advanced digital computers of the time. But to solve quantum physics problems, the devices need to involve quantum components. The new Quantum

Will Your Company Be Fined in the New Data Privacy Landscape?

“Some large US companies are continuing to be dealt pretty significant fines,” she says. “The regulation and fining of companies like Meta and others have raised consumer awareness of privacy rights. I think we’re approaching a perfect storm in the US where the rest of the world is moving toward a more consumer-protective landscape, so the US is following suit.” This includes activity by state policymakers as well as responses to cybersecurity breaches, Simberkoff says. She sees the conversation on data privacy being driven by increasingly complex regulatory requirements and by consumer awareness of data privacy issues, which can include identity theft or stolen credit card information. “I think, frankly, companies like Apple help that dialogue forward because they’ve made privacy one of their key issues in advertising,” says Simberkoff. The elevation of data privacy policies and consumer awareness might, at first blush, seem detrimental to data-driven businesses, but it could simply require new operational approaches. “I think what we’re going to end up seeing is a different way of thinking about these things,” she says.

What is the role of a CTO in a start-up?

The role of the CTO in a start-up can vary greatly from the equivalent position in a more established scale-up business. While in both scenarios the position involves leading all technological decisions within a business, there are considerable differences in the focus and nature of the role. “Start-ups tend to be disruptive and fast-paced, with the goal of quick growth over long-term strategy development. So, start-up CTOs are often responsible for building the technological infrastructure from the ground up,” said Ryan Jones, co-founder of OnlyDataJobs. “Whereas in an established company, a CTO might be responsible for reviewing and improving the current technology stack and data infrastructure, in a start-up, these structures might not exist. So, the onus is on the CTO to create and implement an entire technological infrastructure and strategy.” This also means that a hands-on approach is required. “Because start-up CTOs may be the only technologically minded individual within the company, they’re often required to go back on the tools and do the actual work required themselves rather than delegating to a team.”

Your Tech Stack Doesn’t Do What Everyone Needs It To. What Next?

IT needs to collaborate with citizen developers throughout the process to ensure maximum safety and efficiency. From the beginning, it’s important to confirm the team’s overall approach, select the right tools, establish roles, set goals, and discuss when citizen developers should ask IT for support. Appointing a leader for the citizen developer program is a great way to help enforce these policies and hold the team accountable for meeting agreed-upon milestones. To encourage collaboration and make citizen automation a daily practice, it’s important to work continuously to identify pain points and manual work within business processes that can be automated. IT should regularly communicate with teams across the business, including the finance and HR departments, to find opportunities for automation, clearly mapping out what change would look like for those affected. Gaining buy-in from other team leaders is critical, so citizen developers and IT need to become internal advocates for the benefits of automation. Another non-negotiable ground rule is that citizen developers should only use IT-sanctioned tools and platforms.

Quote for the day:

"If a window of opportunity appears, don't pull down the shade." -- Tom Peters