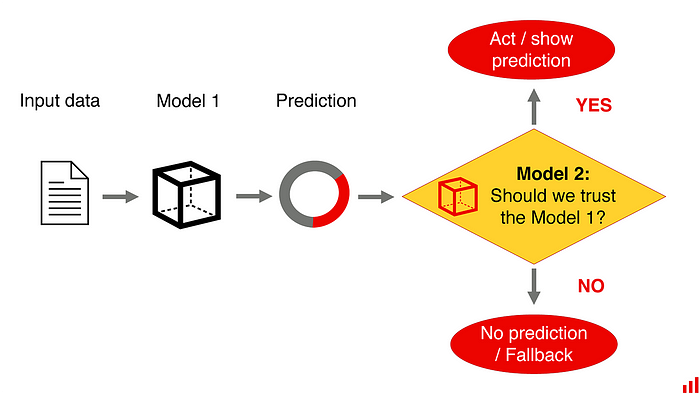

How The World Is Updating Legislation in the Face Of Persistent AI Advances

Recently, 13 cities across the US placed a ban on the use of facial recognition

technology by the police. Interestingly, 12 of these 13 cities were

Democrat-led, which points to cultural differences within a single country. The

European Union is the gold standard when we talk about data privacy and laws

governing the various aspects of technology. To protect individuals’ rights and

freedoms, Article 22 of the GDPR, “Automated individual decision making,

including profiling,” ensures that human intervention is available in

automated decision making in cases where an individual’s rights and freedoms are

affected. The first paragraph, “The data subject shall have the right not to be

subject to a decision based solely on automated processing, including profiling,

which produces legal effects concerning him or her or similarly significantly

affects him or her,” and the third paragraph, “the data controller shall

implement suitable measures to safeguard the data subject’s rights and freedoms

and legitimate interests, at least the right to obtain human intervention on the

part of the controller, to express his or her point of view and to contest the

decision,” together give individuals the right to human intervention.

Why ML Capabilities Of GCP Is Way Ahead Of AWS & Azure

TPUs are Google’s custom-developed application-specific integrated circuits

(ASICs) to accelerate ML workloads. A big advantage for GCP is Google’s strong

commitment to AI and ML. “The models that used to take weeks to train on GPU

or any other hardware can put out in hours with TPU. AWS and Azure do have AI

services, but to date, AWS and Azure have nothing to match the performance of

the Google TPU,” said Jeevan Pandey, CTO, TelioLabs. ... Google Cloud’s

open-source contributions, especially in tools like Kubernetes (a portable,

extensible, open-source platform for managing containerized workloads and

services, facilitating declarative configuration and automation), have worked

to its advantage. ... Google Cloud’s speech and translate APIs are much more

widely used than their counterparts. According to Gartner’s 2021 Magic

Quadrant, Google Cloud was named a Leader for Cloud AI services.

Pre-trained ML models can be instantly used to classify objects in an image

into millions of predefined categories. Additionally, one of the top ML

services from Google Cloud is Vision AI, powered by AutoML.

How Robotic Process Automation can improve healthcare at scale

RPA isn’t just a boon for patient-facing organizations—healthcare vendors are

getting in on the action, too. For example, the company I work for faced the

daunting challenge of transferring over 1 million pieces of patient data from

one EMR to another. As any medical professional can attest, switching EMRs is a

notoriously time-consuming process. So, we invested in RPA to bring efficiency

to an otherwise manual and laborious task. In the end, we saved valuable

time—and a significant chunk of change. ... One of the biggest contributors to

burnout is the ever-increasing administrative work stemming from non-clinical

tasks like documentation, insurance authorizations, and scheduling—all things

that can be done faster and more accurately with RPA. And when providers are

freed from the monotony, they have more time to focus on the parts of the job

that they really enjoy. This, in turn, boosts morale and productivity, thus

enhancing care delivery and optimizing patient outcomes overall. For those

working in healthcare, the demand for digital solutions like RPA feels like the

dawning of a new era, albeit one that is met with mixed emotions.

The many lies about reducing complexity part 2: Cloud

Managers in IT are sensitive to it, as complexity generally is their biggest

headache. Hence, in IT, people are in a perennial fight to make the complexity

bearable. One method that has been popular for decades has been

standardisation and rationalisation of the digital tools we use, a basic

“let’s minimise the number of applications we use”. This was actually part 1

of this story: A tale of application rationalisation (not). That story from

2015 explains how many rationalisation efforts were partly lies. (And while

we’re at it: enjoy this Dilbert cartoon that is referenced therein.) Most of

the time multiple applications were replaced by a single platform (in short: a

platform is software that can run other software) and the applications had to

be ‘rewritten’ to work ‘inside’ that platform. So you ended up with one extra

platform, the same number of applications and generally a few new extra ways

of ‘programming’, specific for that platform. That doesn’t mean it is all

lies. The new platform is generally dedicated to a certain type of

application, which makes programming these applications simpler. But the

situation is not as simple as the platform vendors argue.

Implementing Nanoservices in ASP.NET Core

There is no precise definition of how big or small a microservice should be.

Although microservice architecture can address a monolith's shortcomings, each

microservice might grow large over time. Microservice architecture is not

suitable for applications of all types. Without proper planning, microservices

can grow as large and cumbersome as the monolith they are meant to replace. A

nanoservice is a small, self-contained, deployable, testable, and reusable

component that breaks down a microservice into smaller pieces. Unlike a

microservice, a nanoservice does not necessarily reflect an entire business function. Since

they are smaller than microservices, different teams can work on multiple

services at a given point in time. A nanoservice should perform one task only

and expose it through an API endpoint. If you need your nanoservices to do more

work for you, link them with other nanoservices. Nanoservices are not a

replacement for microservices; rather, they compensate for the shortcomings of

microservices.
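
The "one task, one API endpoint" rule described above can be illustrated with a minimal sketch. It is written in Python with only the standard library rather than in ASP.NET Core, so it shows the shape of a nanoservice and not the article's implementation. The task (`slugify`), the route (`/slug`), and the port are all hypothetical choices made for illustration.

```python
import json
from urllib.parse import parse_qs
from wsgiref.simple_server import make_server


def slugify(text: str) -> str:
    """The single task this nanoservice performs: turn a title into a URL slug."""
    # Replace every non-alphanumeric character with a space, then join the words.
    words = "".join(c.lower() if c.isalnum() else " " for c in text).split()
    return "-".join(words)


def app(environ, start_response):
    """WSGI entry point exposing the one task at GET /slug?text=..."""
    if environ.get("REQUEST_METHOD") != "GET" or environ.get("PATH_INFO") != "/slug":
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [json.dumps({"error": "not found"}).encode("utf-8")]

    query = parse_qs(environ.get("QUERY_STRING", ""))
    text = query.get("text", [""])[0]
    body = json.dumps({"slug": slugify(text)}).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]


def serve(port: int = 8000) -> None:
    """Run the service locally; the port is an arbitrary assumption."""
    with make_server("127.0.0.1", port, app) as server:
        server.serve_forever()
```

Calling `serve()` starts the service, after which a request to `/slug?text=Hello%20World` should return a small JSON document containing the slug. A second nanoservice that needs slugs would call this endpoint instead of duplicating the logic, which is the "link them with other nanoservices" idea.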

Five Data Governance Trends for Digital-Driven Business Outcomes in 2021

Knowledge of data-in-context, data processes, best techniques to provision, as

well as tools enabling these methods of self-service are crucial to democratize

data. However, with technology advancements, including virtualization,

self-service discovery catalogs, and data delivery mechanisms, the internal data

consumers can shop and provision for data in shorter cycles. In 2020, it took

organizations anywhere from one to three weeks to provision complex data

that includes integration from multiple sources. Also, an increase in data

awareness will help data consumers explore further available dark data that can

provide predictive insights to create new user-stories that can propel customer

journeys. ... A lack of focus is common across organizations as they treat Data

Governance as an extension of either a compliance or a risk function. Data

Literacy will, in fact, change the attitude of business owners towards having to

actively manage and govern data. There are immediate and cumulative benefits

from actively governing data either by defining data or fixing bad quality data.

But there is a need for a value-realization framework to actively manage the

benefits of Data Management services.

Best practices for securing the CPaaS technology stack

Certifications are certainly important to consider when evaluating options, but

even so, certifications don’t mean security. It is a best practice to check on

the maturity of these vendor-specific certifications, as some companies go

through a process of self-certification that doesn’t necessarily ensure the

level of security your organization needs. Sending a thoughtful questionnaire to

multiple vendors can be helpful for scoring these vendors’ security, offering a

holistic and specific viewpoint to be considered by an organization’s IT team.

On the customer end, in-house security and engineering staff can prep for CPaaS

implementation by becoming familiar with the use of APIs and the authentication

methods, communications protocols and the data that flows to and from them.

Hackers routinely perform reconnaissance to find unprotected APIs and exploit

them. Once CPaaS is incorporated into the hybrid work model technology stack, it

is a best practice for an organization to focus its sights on its endpoint

management. The use of a centralized endpoint management system that pushes

patches for BIOS, operating systems, and applications is necessary for

protecting the cloud network and customer data once a laptop connects.

3 SASE Misconceptions to Consider

Solution architecture is important, and yes, you want to minimize the number of

daisy chains to reduce complexity. However, it doesn't mean you cannot have any

daisy chains in your solution. In fact, dictating zero daisy chains can have

consequences — not for performance, but for security. SASE consolidates a wide

array of security technologies into one service, yet each of those technologies

is a standalone segment today — with its own industry leaders and laggards. Any

buyer who dictates "no daisy chains" is trusting that one single SASE provider

can (all by itself) build the best technologies across a constellation of

capabilities that is only growing larger. Being beholden to one company is not

pragmatic given that the occasional daisy chain greatly increases the ability to

unite best-of-breed technologies under one service provider's umbrella. ... SASE

revolves around the cloud and is undoubtedly about speed and agility achieved

through cloud-deployed security. But SASE doesn't mean the cloud is the only way

to go and that you should ignore everything else. Instead, IT leaders must take a

more practical position, using the best technology given the situation and

problem.

Advice for Someone Moving From SRE to Backend Engineering

The work you’re doing as an SRE will partly depend on your company culture.

Without a doubt, some organizations will relegate their SREs to driving existing

processes like watching the on-call queue to make sure there are no tickets, running

deployments, etc. This can make folks feel like they aren’t progressing.

However, today there are a lot more things you can do as an SRE than you once

could. You used to just have Bash. Now you have many automation opportunities

that will hone your programming skills. You can configure Kubernetes and

Terraform. There's a bunch of code-oriented tools that you can use. You can

orchestrate your stuff in Python. You could also use something like Shoreline if you

want it, which is “programming for operations,” and allows you to think of the

world in terms of control loops, and how you can automate there. DevOps has also

increased the Venn diagram overlap between SRE and Backend engineering.

Previously, it was engineers using version control and engineers using package

managers, which was separate from SREs using deployment systems and SREs using

Linux administration tools.
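
Thinking about operations "in terms of control loops", as mentioned above, roughly means: observe the system, compare it with the desired state, act on the difference, and repeat. The following is a minimal Python sketch of that idea. It is not Shoreline's API, and the names `control_loop`, `fleet`, `count_replicas`, and `scale_by_one` are hypothetical stand-ins for a real system.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LoopResult:
    iterations: int   # number of corrective actions taken
    converged: bool   # whether the desired state was reached


def control_loop(observe: Callable[[], int],
                 act: Callable[[int], None],
                 desired: int,
                 max_iterations: int = 10) -> LoopResult:
    """Repeatedly observe the system and correct any drift from `desired`."""
    for i in range(max_iterations):
        current = observe()
        if current == desired:
            return LoopResult(iterations=i, converged=True)
        # Positive delta means "too few", negative means "too many".
        act(desired - current)
    return LoopResult(iterations=max_iterations, converged=observe() == desired)


# Hypothetical in-memory "fleet" standing in for real infrastructure.
fleet = {"replicas": 1}


def count_replicas() -> int:
    return fleet["replicas"]


def scale_by_one(delta: int) -> None:
    # Move a single step toward the target on each iteration.
    fleet["replicas"] += 1 if delta > 0 else -1


result = control_loop(count_replicas, scale_by_one, desired=3)
```

In a real setting `observe` and `act` would wrap monitoring queries and deployment commands, and the loop would usually sleep between iterations. The bounded `max_iterations` is a design choice here so that a misbehaving action cannot spin forever.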

Inspect & Adapt – Digging into Our Foundations of Agility

When we need to change we usually feel a resistance against it. Take the current

pandemic for instance. The simple action of wearing a facemask in public has

caused indisputable resistance in many of us. Cognitively we understand that

there is a benefit to doing so, even if there were long discussions on exactly

how beneficial it would be. But emotionally it did not come naturally and easily to

most. Do you remember how it felt the first time you wore a facemask when

entering the supermarket? It was not very pleasant, was it? But even when we are

the driver for change we might find resistance against it. New Year’s

resolutions come to mind again. The majority of New Year's resolutions are

abandoned come February, even though the desired results have not been achieved.

In other words, the resistance to change might sometimes show up late to the

party. What might be missing here is endurance and resilience to small

setbacks. I believe that we need a thorough understanding of the situation

we are currently in. This sounds simple and easy. And on a mid-level it is. "We

need to come out of the pandemic with a net positive", a director of a company

might say.

Quote for the day:

"It's very important in a leadership

role not to place your ego at the foreground and not to judge everything

in relationship to how your ego is fed." -- Ruth J. Simmons