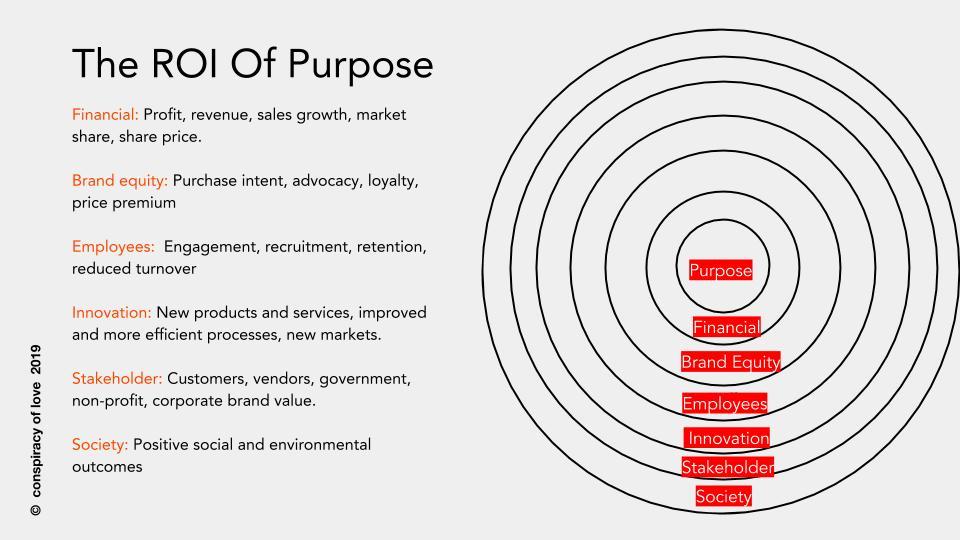

The Power Of Purpose: The ROI Of Purpose

Another key area to measure is the impact of a purpose-driven initiative on the health of the brand. The most important, ‘silver bullet’ question is whether there is a clear correlation between purpose and sales. However, given the complexity of the purchase funnel, I believe that, at the very least, measuring ‘Purchase Intent’ (‘Does this initiative make you more or less likely to purchase this brand?’) is the closest proxy. ... Often, one of the biggest upsides of purpose-driven initiatives is their effect on employee morale and motivation, not to mention on recruiting new talent, especially Millennials and Gen-Z, who are increasingly motivated by the opportunity to work for a company that creates meaningful social and environmental impact (which leads to lower recruiting costs). While each company has its own metrics for measuring employee engagement, a common one worth measuring is the impact on turnover: does the initiative make employees more or less likely to stay with the company? Benevity’s research shows that employees are 57% more likely to stay with a company that offers volunteering and fundraising opportunities, leading to significant cost reductions.
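One simple way to summarize answers to the ‘Purchase Intent’ question is a net score: the share of respondents who say ‘more likely’ minus the share who say ‘less likely’. The sketch below assumes that scoring rule and three hypothetical answer labels; it illustrates the arithmetic only, is not a method taken from the source, and its sample counts are invented for the example.

```python
from collections import Counter

# Hypothetical answer labels for "Does this initiative make you more or
# less likely to purchase this brand?"
MORE, SAME, LESS = "more", "no change", "less"


def net_purchase_intent(responses: list[str]) -> float:
    """Share answering 'more' minus share answering 'less', in [-100, 100]."""
    if not responses:
        raise ValueError("need at least one response")
    counts = Counter(responses)
    unknown = set(counts) - {MORE, SAME, LESS}
    if unknown:
        raise ValueError(f"unexpected answers: {sorted(unknown)}")
    return 100.0 * (counts[MORE] - counts[LESS]) / len(responses)


if __name__ == "__main__":
    # Invented illustration: 5 'more', 3 'no change', 2 'less'.
    sample = [MORE] * 5 + [SAME] * 3 + [LESS] * 2
    print(net_purchase_intent(sample))  # 30.0
```

Counting ‘no change’ in the denominator keeps the score honest: an initiative that leaves most respondents indifferent scores close to zero even if nobody answers ‘less likely’.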

Why CIOs should focus on trimming their internal email footprint

Reducing businesses’ reliance on email is just one part of a wider shift in the way companies need to operate going forward. Stanley Louw, UK and Ireland head of digital and innovation at Avanade, believes organisations need a strategy that is digital, not a digital strategy. “The way we have always provided IT for work is actually holding us back,” he said. “You have to apply the same principles of customer experience to employee experience. What is the experience employees need to do their job? CIOs have to start partnering with HR.” But in Louw’s experience, IT departments still approach desktop IT from a purely IT perspective, which leaves their approach to the desktop archaic and rooted in legacy desktop-management practices. Industry momentum around customer experience has changed the way businesses look at their customers, he said, adding: “You also need to look internally and start by modernising platforms.”

Chief Integration Officer!

As organizations pursue technology-led strategic transformation, they expect technology to help them maximize business value for the organization as a whole. This is different from better decision-making, operational efficiency, or a specific new capability at a functional level: the total value accrued to the organization must be more than the sum of its parts, and someone needs to drive that. In a select few organizations, that someone is a dedicated Chief Digital Officer. But more than 95% of organizations do not have a CDO role, and most of them do not intend to create one. Yet they still need someone to put all the pieces together to create organizational value. That integration has to be done by someone who thoroughly understands both the business and technology and its direction. In most organizations, the CIO is the best person for that role. The reason it has not happened more widely is less that top management doubts CIOs’ capability than that CIOs are not ready to move on from the nuts and bolts, because doing so may mean giving up control over a big chunk of the IT infrastructure budget.

Why Proxies Are Important for Microservices

The dynamic nature of microservices applications presents challenges when implementing reverse proxies. Services come and go as they are updated to new revisions or scaled, and each new instance may be assigned an arbitrary IP address. Keeping the reverse proxy’s configuration in sync with the set of available services is therefore essential for error-free operation. One solution is to use a service registry (e.g. etcd) in which each service maintains its registration while it is running; the reverse proxy watches the registry to keep its configuration up to date. Kubernetes does all of this automatically. The Kube DNS process maintains the service registry, with an address (A) record and a service (SRV) record for each service, and the Kube Proxy process routes and load-balances requests across all instances of a service. Because all incoming request traffic for a microservices application typically passes through proxies, it is essential to monitor their performance and health. Instana sensors include support for Envoy Monitoring, Nginx Monitoring, and Traefik Monitoring, with more proxy technologies coming.
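The registry-plus-watching-proxy pattern can be sketched in a few lines. This is a toy, in-memory stand-in, not etcd’s API: the class and method names are hypothetical, each registration carries an assumed time-to-live that the running service must keep refreshing, and round-robin is an assumed load-balancing policy. Time is passed in explicitly so the behavior is easy to follow and test.

```python
import itertools


class ServiceRegistry:
    """Toy in-memory registry (hypothetical; not etcd's API).

    Each instance registers with an assumed time-to-live (TTL) and must keep
    re-registering (heartbeating) while it runs, or its entry expires.
    """

    def __init__(self, ttl: float = 10.0):
        self.ttl = ttl
        self._leases: dict[tuple[str, str], float] = {}  # (service, addr) -> expiry

    def register(self, service: str, addr: str, now: float) -> None:
        # Registering again just extends the lease, so it doubles as a heartbeat.
        self._leases[(service, addr)] = now + self.ttl

    def instances(self, service: str, now: float) -> list[str]:
        # Drop instances whose lease has lapsed (crashed, rescheduled, scaled in).
        self._leases = {k: exp for k, exp in self._leases.items() if exp > now}
        return sorted(addr for (svc, addr) in self._leases if svc == service)


class ReverseProxy:
    """Picks a backend for each request by round-robin over live instances."""

    def __init__(self, registry: ServiceRegistry):
        self.registry = registry
        self._routes: dict[str, list[str]] = {}
        self._cursors: dict = {}  # service -> itertools.cycle over its routes

    def sync(self, service: str, now: float) -> None:
        # The "watch" step: rebuild routes only when the instance set changes.
        current = self.registry.instances(service, now)
        if current != self._routes.get(service):
            self._routes[service] = current
            self._cursors[service] = itertools.cycle(current)

    def pick(self, service: str, now: float) -> str:
        self.sync(service, now)
        if not self._routes[service]:
            raise LookupError(f"no live instances of {service!r}")
        return next(self._cursors[service])


if __name__ == "__main__":
    registry = ServiceRegistry(ttl=10.0)
    registry.register("orders", "10.0.0.5:8080", now=0.0)
    registry.register("orders", "10.0.0.9:8080", now=0.0)
    proxy = ReverseProxy(registry)
    print([proxy.pick("orders", now=1.0) for _ in range(3)])
    # ['10.0.0.5:8080', '10.0.0.9:8080', '10.0.0.5:8080']

    # Only 10.0.0.9 keeps heartbeating; 10.0.0.5's lease expires at t=10.
    registry.register("orders", "10.0.0.9:8080", now=8.0)
    print(proxy.pick("orders", now=12.0))  # 10.0.0.9:8080
```

Because an instance that stops heartbeating simply ages out of the registry, the proxy drops it on its next sync without any explicit deregistration. The trade-off is the TTL: a shorter one removes dead instances faster but requires more frequent heartbeats.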

Browser OS could turn the browser into the new desktop

A potential challenge is that the browser becomes the desktop for the end user, and that's something folks have to get used to. But to Google's credit, and thanks to its partnerships with vendors like VMware or Citrix, the UX challenge becomes almost invisible. We'll see how enterprises continue to approach this opportunity, which is ultimately more secure. For certain use cases, such as field services, no data is lost if a browser OS-based device dies, breaks, or is lost: the user can simply get a new Chromebook, sign in, and pick up where he or she left off. That's an unheard-of value proposition; the begin-where-you-left-off concept is powerful. Another problem enterprises may face concerns Microsoft legacy infrastructure, particularly endpoint management. Microsoft has moved away from that to help bridge the divide, and Windows 10 is doing well. We'll see a lot more migration happening this year as the Windows 7 sunset approaches.

This new Android ransomware infects you through SMS messages

Depending on the infected device's language setting, the messages are sent in one of 42 possible language versions, and the contact's name is automatically included in the message. If the link is clicked and the malicious app is installed manually, the app often displays material such as a sex simulator, but its real purpose runs quietly in the background. The app contains hardcoded command-and-control (C2) settings and Bitcoin wallet addresses within its source code, although the attackers can also use Pastebin as a conduit to retrieve them dynamically. Once the propagation messages have been sent, Filecoder scans the infected device for all storage files and encrypts the majority of them. Filecoder encrypts file types including text files and images, but skips Android-specific files such as .apk or .dex. ESET believes that the encryption list is no more than a copy-and-paste job from WannaCry, a far more severe and prolific form of ransomware. A ransom note is then displayed, demanding approximately $98 to $188 in cryptocurrency. There is no evidence that files are actually lost once the threatened deadline passes.

Google bolsters hybrid cloud proposition for enterprises through VMware partnership

Google Cloud CEO Thomas Kurian confirmed the news in a blog post, in which he described the move as “another significant step” in his firm’s drive to bolster the enterprise appeal of its public cloud platform. In recent years, this drive has seen the Google Cloud team roll out a series of data security and functionality improvements to its platform and introduce industry-specific services. These moves have resulted in Google Cloud growing into a business with an $8bn annual revenue run rate that is expanding “at significant pace”, as Google CEO Sundar Pichai confirmed earlier this month during a conference call to discuss parent company Alphabet’s second-quarter results. “Customers are choosing Google Cloud for a variety of reasons,” said Pichai on the call, transcribed by Seeking Alpha. “Reliability and uptime are critical.” He also referred to the “flexibility” that organisations need when moving to the cloud, so they can proceed in their “own way”.

The Future of API Management

There will be a continued emphasis on the developer: recognizing that the developer is king and making their job easier. We have understood this for external developers, but we still need to improve internally, for example with a service catalog that makes it easier for internal developers to ramp up and benefit from existing APIs. New architecture is another driver. Containers, container platforms, and K8s are leading to microservices architectures, with new sidecar-based approaches to controlling traffic, such as Envoy and Istio, that provide a service mesh for managing applications within the container cluster. As these technologies emerge, there will be a proliferation of control points with multiple form factors. We embrace Envoy as the new gateway. Right now we live in a mixed world, and it’s important to consider how service mesh and API management will overlap. API management is about the relationship between a service provider and the multiple consumers of that service. The greater the scale, the more important a formal API management platform becomes.
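To make the provider/consumer relationship concrete, here is a minimal sketch of an API-management layer in front of a single service: it identifies each consumer by an API key and enforces a per-consumer request quota over fixed time windows. Per-consumer keys and quotas are an assumed, commonly seen API-management feature, not something the quoted text specifies; all class names, keys, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Consumer:
    name: str
    quota_per_window: int  # requests allowed per window (hypothetical plan)


class ApiGateway:
    """Toy API-management layer for one service (all names hypothetical).

    Identifies consumers by API key and enforces a fixed-window quota per
    consumer. Old windows are never pruned, which is acceptable only in a sketch.
    """

    def __init__(self, window_seconds: float = 60.0):
        self.window_seconds = window_seconds
        self._consumers: dict[str, Consumer] = {}
        self._usage: dict[tuple[str, int], int] = {}  # (api_key, window) -> count

    def onboard(self, api_key: str, consumer: Consumer) -> None:
        self._consumers[api_key] = consumer

    def handle(self, api_key: str, now: float) -> int:
        """Return an HTTP-style status code for one request at time `now`."""
        consumer = self._consumers.get(api_key)
        if consumer is None:
            return 401  # unknown consumer: reject
        window = int(now // self.window_seconds)
        used = self._usage.get((api_key, window), 0)
        if used >= consumer.quota_per_window:
            return 429  # this consumer's quota is used up for this window
        self._usage[(api_key, window)] = used + 1
        return 200  # would be forwarded to the backing service


if __name__ == "__main__":
    gw = ApiGateway(window_seconds=60.0)
    gw.onboard("key-mobile", Consumer("mobile-app", quota_per_window=2))
    gw.onboard("key-partner", Consumer("partner", quota_per_window=100))
    print([gw.handle("key-mobile", now=t) for t in (0, 1, 2)])  # [200, 200, 429]
    print(gw.handle("key-partner", now=2))   # 200: quotas are per consumer
    print(gw.handle("key-mobile", now=61))   # 200: a new window has started
    print(gw.handle("key-unknown", now=61))  # 401
```

Fixed windows are the simplest choice; a token bucket would smooth out bursts that straddle a window boundary.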

A zero day is a security flaw that the vendor has not yet patched and that can be exploited and turned into a powerful weapon. Governments discover, purchase, and use zero days for military, intelligence, and law enforcement purposes, a controversial practice because it leaves society defenseless against other attackers who discover the same vulnerability. Zero days command high prices on the black market, but bug bounties aim to encourage discovery and reporting of security flaws to the vendor. The patching crisis, in which many systems stay unpatched long after fixes are released, means zero days are becoming less important: so-called 0ld-days, already-known vulnerabilities, are almost as effective. ... Not all zero days are complicated or expensive, however. The popular Zoom videoconferencing software had a nasty zero day that "allows any website to forcibly join a user to a Zoom call, with their video camera activated, without the user's permission," according to the security researcher's write-up. "On top of this, this vulnerability would have allowed any web page to DoS (Denial of Service) a Mac by repeatedly joining a user to an invalid call." The Zoom for Mac client also installs a web server on your laptop that can reinstall the Zoom client without your knowledge if it has ever been installed before.

One thing security pros widely agree on: as Kubernetes adoption and deployment grow, so will the security risks. There have been multiple recent incidents in the cloud and mobile development spaces in which these environments were compromised by attackers, ranging from disruption and crypto mining to ransomware and data theft. Of course, these types of deployments are just as susceptible to exploits and attacks from outside attackers and insiders as traditional environments. It is therefore all the more important to ensure that your large-scale Kubernetes environment has the right deployment architecture and that you follow security best practices for all these deployments. As Kubernetes is more widely adopted, it becomes a prime target for threat actors. “The rapid rise in adoption of Kubernetes is likely to uncover gaps that previously went unnoticed on the one hand, and on the other hand gain more attention from bad actors due to a higher profile,” says Amir Jerbi, CTO at Aqua Security.
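As one small, concrete example of applying security best practices, the sketch below audits a Pod manifest (already parsed into a Python dict, e.g. from YAML) for a few risky settings. The field names (`hostNetwork`, `hostPID`, `hostIPC`, `privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`) are real Kubernetes Pod spec fields, but the choice of checks and the `audit_pod` function are hypothetical; this is not a substitute for Kubernetes' Pod Security Standards or a dedicated policy engine.

```python
def audit_pod(pod: dict) -> list[str]:
    """Flag a few risky settings in a Pod manifest parsed into a dict.

    Hypothetical helper: the checks are illustrative, not exhaustive.
    """
    findings: list[str] = []
    spec = pod.get("spec", {})

    # Sharing host namespaces weakens isolation between the pod and the node.
    for flag in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(flag):
            findings.append(f"pod: {flag} is enabled")

    pod_ctx = spec.get("securityContext", {})
    for c in spec.get("containers", []) + spec.get("initContainers", []):
        name = c.get("name", "<unnamed>")
        ctx = c.get("securityContext", {})
        if ctx.get("privileged"):
            findings.append(f"{name}: runs privileged")
        # Only an explicit false passes this check; an unset value is flagged.
        if ctx.get("allowPrivilegeEscalation") is not False:
            findings.append(f"{name}: allowPrivilegeEscalation not set to false")
        # A container-level runAsNonRoot takes precedence over the pod-level one.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            findings.append(f"{name}: runAsNonRoot not set")
    return findings


if __name__ == "__main__":
    risky = {"spec": {"hostNetwork": True,
                      "containers": [{"name": "web",
                                      "securityContext": {"privileged": True}}]}}
    hardened = {"spec": {"securityContext": {"runAsNonRoot": True},
                         "containers": [{"name": "api", "securityContext": {
                             "allowPrivilegeEscalation": False}}]}}
    for line in audit_pod(risky):
        print(line)
    print(audit_pod(hardened))  # []
```

Running such checks in CI, before manifests reach a large-scale cluster, catches misconfigurations early; in-cluster admission control is still needed for anything deployed by other routes.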

Quote for the day:

"Leaders begin with a different question than others. Replacing who can I blame with how am I responsible?" -- Orrin Woodward