Why governance, risk, and compliance must be integrated with cybersecurity

Incorporating cybersecurity practices into a GRC framework means connected teams

and integrated technical controls for the University of Phoenix, where GRC and

cybersecurity sit within the same team, according to Larry Schwarberg, the VP of

information security. At the university, the cybersecurity risk management

framework is built primarily from a consolidated view of the NIST 800-171 and

ISO 27001 standards, and the framework in turn guides other elements of the university’s

overall security posture. “The results of the risk management framework feed other areas of

compliance from external and internal auditors,” Schwarberg says. The

cybersecurity team works closely with legal and ethics, compliance and data

privacy, internal audit and enterprise risk functions to assess overall

compliance with in-scope regulatory requirements. “Since our cybersecurity and

GRC roles are combined, they complement each other and the roles focus on

evaluating and implementing security controls based on risk appetite for the

organization,” Schwarberg says. The role of leadership is to provide awareness,

communication, and oversight to teams to ensure controls have been implemented

and are effective.
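
To make "evaluating and implementing security controls based on risk appetite" concrete, here is a minimal sketch. It assumes a common 5x5 likelihood-by-impact scoring convention. The `Risk` class, the 1-5 scales and the numeric appetite threshold are illustrative assumptions, not the University of Phoenix's actual method. The sketch flags risks whose score exceeds the appetite so they can be prioritized for new or stronger controls.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Risk:
    """A risk-register entry scored on assumed 1-5 likelihood and impact scales."""
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), an assumed scale
    impact: int      # 1 (negligible) to 5 (severe), an assumed scale

    @property
    def score(self) -> int:
        # Simple multiplicative scoring: a common, but not universal, convention.
        return self.likelihood * self.impact


def risks_above_appetite(risks: List[Risk], appetite: int) -> List[Risk]:
    """Return risks whose score exceeds the appetite threshold, highest score first.

    These are the candidates for new or strengthened security controls.
    """
    over = [r for r in risks if r.score > appetite]
    return sorted(over, key=lambda r: r.score, reverse=True)


# Hypothetical register entries, for illustration only.
register = [
    Risk("Unpatched VPN appliance", likelihood=4, impact=5),
    Risk("Lost unencrypted laptop", likelihood=2, impact=2),
    Risk("Phishing of finance staff", likelihood=3, impact=4),
]

for risk in risks_above_appetite(register, appetite=10):
    print(f"{risk.name}: score {risk.score}")
```

In this sketch, risks at or below the appetite are simply accepted. A fuller program would also track residual risk after controls are in place and reassess it periodically.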

India's talent crunch: Why choose build approach over buying?

The primary challenge is the need for more workers equipped with digital skill

sets. Despite high demand for these skills, much of the current workforce has yet to

acquire the requisite abilities, especially given the constant evolution of

technology. The lack of niche skill sets essential for working with advanced

technologies like AI, blockchain, cloud, and data science further contributes to

this gap. The turning point, however, is now within reach as businesses and

professionals recognise the crucial need for upskilling and reskilling. At DXC

India, we have embraced a strategy that prioritises internal talent development,

favouring the 'build' approach over the 'buy' strategy. By upskilling our

existing workforce with relevant, in-demand skills, we address our talent needs

and foster individual career growth. This method is particularly effective as

experienced employees can swiftly acquire new skills and undergo cross-training.

This agility is an asset in navigating the rapidly evolving business landscape,

benefiting employees and customers. Identifying the specific talent required and

subsequently building that talent pool forms the crux of this strategy.

Why does AI have to be nice? Researchers propose ‘Antagonistic AI’

“There was always something that felt off about the tone, behavior and ‘human

values’ embedded into AI, something that felt deeply inauthentic and out of

touch with our real-life experiences,” Alice Cai, co-founder of Harvard’s

Augmentation Lab and researcher at the MIT Center for Collective Intelligence,

told VentureBeat. She added: “We came into this project with a sense that

antagonistic interactions with technology could really help people — through

challenging [them], training resilience, providing catharsis.” But AI’s default niceness also

stems from an innate human tendency to avoid discomfort, animosity,

disagreement and hostility. Yet antagonism is critical; it is even what Cai

calls a “force of nature.” So, the question is not “why antagonism?,” but

rather “why do we as a culture fear antagonism and instead desire cosmetic

social harmony?,” she posited. Essayist and statistician Nassim Nicholas

Taleb, for one, advances the notion of the “antifragile”: the idea that we

need challenge and context to survive and thrive as humans. “We aren’t simply

resistant; we actually grow from adversity,” Cai’s co-author Ian Arawjo told VentureBeat.

How companies can build consumer trust in an age of privacy concerns

Aside from reworking the way they interact with customers and their data,

businesses should also tackle the question of personal data and privacy with a

different mindset – that of holistic identity management. Instead of companies

holding all the data, holistic identity management offers the opportunity to

“flip the script” and put the power back in the hands of consumers. Customers

can pick and choose what to share with businesses, which helps build greater

trust. ... Greater privacy and greater personalization may seem to be at odds,

but they can go hand in hand. Rethinking their approach to data collection and

leveraging new methods of authentication and identity management can help

businesses create this flywheel of trust with customers. This will be all the

more important with the rise of AI. “It’s never been cheaper or easier to

store data, and AI is incredibly good at going through vast amounts of data

and identifying patterns of aspects that actual humans wouldn’t even be able

to see,” Gore explains. “If you take that combination of data that never dies

and the AI that can see everything, that’s when you can see that it’s quite

easy to misuse AI for bad purposes. ...”
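
One way to picture the "pick and choose" model is a consent filter that releases only the attributes a customer has agreed to share. The sketch below assumes a simple profile dictionary and a per-customer consent set. The field names and the `share_with_business` and `withheld_fields` functions are hypothetical. A real holistic identity management system would also involve authentication, consent records and auditing, none of which is modeled here.

```python
from typing import Any, Dict, Set


def share_with_business(
    profile: Dict[str, Any], consented: Set[str], requested: Set[str]
) -> Dict[str, Any]:
    """Release only attributes the business requested AND the customer consented to."""
    allowed = requested & consented
    return {name: value for name, value in profile.items() if name in allowed}


def withheld_fields(consented: Set[str], requested: Set[str]) -> Set[str]:
    """Fields the business asked for but the customer chose not to share."""
    return requested - consented


# Hypothetical customer data and requests, for illustration only.
customer_profile = {
    "email": "pat@example.com",
    "postcode": "SW1A 1AA",
    "birth_year": 1990,
    "phone": "+44 20 7946 0000",
}
customer_consent = {"email", "postcode"}          # chosen by the customer
retailer_request = {"email", "phone", "postcode"}  # asked for by the business

print(share_with_business(customer_profile, customer_consent, retailer_request))
print(withheld_fields(customer_consent, retailer_request))
```

The design keeps the customer's consent set as the gate. Nothing leaves the profile unless it appears in both the request and the consent, so a business asking for more data does not by itself get more data.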

Testing Event-Driven Architectures with Signadot

With synchronous architectures, context propagation is a given, supported by

multiple libraries across multiple languages and even standardized by the

OpenTelemetry project. There are also several service mesh solutions,

including Istio and Linkerd, that handle this type of routing perfectly. But

with asynchronous architectures, context propagation is not as well defined,

and service mesh solutions simply do not apply — at least, not now: They

operate at the request or connection level, but not at a message level. ...

One of the key primitives within the Signadot Operator is the routing key, an

opaque value assigned by the Signadot Service to each sandbox and route group

that’s used to route requests within the system. Asynchronous applications

also need to propagate routing keys within the message headers and use them to

determine the workload version responsible for processing a message. ... This

is where Signadot’s request isolation capability really shows its utility:

such isolation isn’t easily simulated with a unit test or stub, and duplicating an

entire Kafka queue and Redis cache for each testing environment can create

unacceptable overhead.
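
The routing-key idea can be sketched without any particular message broker. The sketch assumes that every consumer, baseline or sandboxed, sees every message (for example, through its own consumer group) and then decides locally whether to handle it. The header name `sd-routing-key`, the `Message` type and the functions are hypothetical stand-ins, not the Signadot Operator's API. Signadot's documentation describes the header conventions it actually uses.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set

ROUTING_HEADER = "sd-routing-key"  # hypothetical header name, for illustration only


@dataclass
class Message:
    value: str
    headers: Dict[str, str] = field(default_factory=dict)


def produce(value: str, routing_key: Optional[str]) -> Message:
    """Copy the routing key from the incoming request context into the message headers."""
    headers = {ROUTING_HEADER: routing_key} if routing_key else {}
    return Message(value, headers)


def should_process(msg: Message, my_key: Optional[str], active_keys: Set[str]) -> bool:
    """Decide whether this workload version should handle the message.

    my_key is None for the baseline workload; otherwise it is the sandbox's key.
    active_keys holds the routing keys of sandboxes that currently exist.
    """
    key = msg.headers.get(ROUTING_HEADER)
    if my_key is None:
        # Baseline handles untagged messages and keys with no live sandbox.
        return key is None or key not in active_keys
    # A sandbox handles only messages tagged with its own key.
    return key == my_key


def forward(msg: Message, new_value: str) -> Message:
    """Propagate the routing key when a consumer emits a downstream message."""
    return produce(new_value, msg.headers.get(ROUTING_HEADER))


active = {"sb-123"}
tagged = produce("order-42", "sb-123")
print(should_process(tagged, None, active), should_process(tagged, "sb-123", active))
```

Filtering on the consumer side avoids duplicating the Kafka topic for every sandbox, which is the overhead the text warns about. The trade-off in this sketch is that every consumer reads every message and discards the ones that are not its own.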

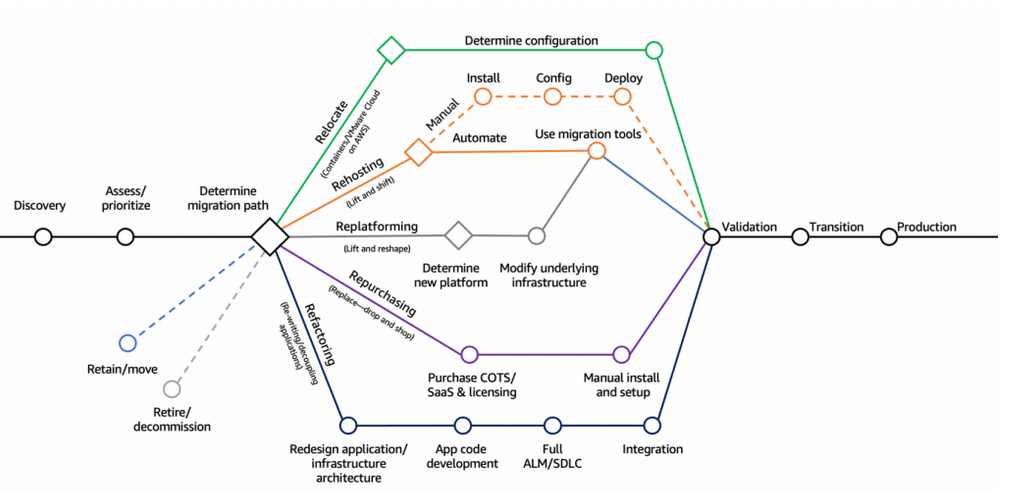

The 7 Rs of Cloud Migration Strategy: A Comprehensive Overview

With the seven Rs as your compass, it’s time to chart your course through the

inevitable challenges that arise on any AWS migration journey. By anticipating

these roadblocks and proactively addressing them, you can ensure a smoother

and more successful transition to the cloud. ... Navigating the vast and

ever-evolving AWS ecosystem can be daunting, especially for organizations with

limited cloud experience. This complexity, coupled with a potential skill gap

in your team, can lead to inefficient resource utilization, suboptimal

architecture choices, and delayed timelines. ... Migrating sensitive data and

applications to the cloud requires meticulous attention to security protocols

and compliance regulations. Failure to secure your assets can lead to data

breaches, reputational damage, and hefty fines. ... While leveraging the full

range of AWS services can offer significant benefits, over-reliance on

proprietary solutions can create an unhealthy dependence on a single vendor.

This can limit your future flexibility and potentially increase costs. ...

While AWS offers flexible pricing models and optimization tools, managing

cloud costs effectively requires ongoing monitoring and proactive

adjustments.
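
As a minimal sketch of "ongoing monitoring", the functions below project month-end spend from the month-to-date run rate and flag when that projection approaches a budget. The linear run-rate model, the 90 percent threshold and the function names are illustrative assumptions. In practice the spend figures would come from billing data or AWS's own cost tools; this sketch does not call any AWS API.

```python
from typing import Tuple


def forecast_month_end(spend_to_date: float, day_of_month: int, days_in_month: int) -> float:
    """Project month-end spend, assuming the month-to-date daily run rate continues."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must be between 1 and days_in_month")
    return spend_to_date / day_of_month * days_in_month


def budget_alert(
    spend_to_date: float,
    day_of_month: int,
    days_in_month: int,
    budget: float,
    threshold: float = 0.9,  # assumed: alert at 90% of budget
) -> Tuple[bool, float]:
    """Return (alert, forecast); alert is True when the forecast reaches threshold * budget."""
    forecast = forecast_month_end(spend_to_date, day_of_month, days_in_month)
    return forecast >= threshold * budget, forecast


alert, forecast = budget_alert(3000.0, day_of_month=10, days_in_month=30, budget=9500.0)
print(f"forecast ${forecast:,.2f}, alert={alert}")
```

A linear run rate is deliberately simple. Workloads with month-end batch jobs or seasonal traffic would need a better forecast, but even this version turns cost review from a monthly surprise into a daily check.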

What is a chief data officer? A leader who creates business value from data

The chief data officer (CDO) is a senior executive responsible for the

utilization and governance of data across the organization. While the chief

data officer title is often shortened to CDO, the role shouldn’t be confused

with chief digital officer, which is also frequently referred to as CDO. ...

Although some CIOs and CTOs feel that CDOs encroach on their turf, Carruthers says

the boundaries are distinct. CDOs are responsible for areas such as data

quality, data governance, master data management, information strategy, data

science, and business analytics, while CIOs and CTOs manage and implement

information and computer technologies, and manage technical operations,

respectively. ... The chief data officer is responsible for the fluid that

goes in the bucket and comes out; that it goes to the right place, and that

it’s the right quality and right fluid to start with. Neither the bucket nor

the fluid works without the other. ... Gomis says he’s seen chief data

officers come from marketing backgrounds, and that some are MBAs who’ve never

worked in data analytics before. “Most of them have failed, but the companies

that hired them felt that the influencer skillset was more important than the

data analytics skillset,” he says.

The UK must become intentional about data centers to meet its digital ambitions

For the UK to maintain its leadership position in data centers, it’s not enough to

just leave it to chance. A number of trends are now deciding investment flows

both within the UK and on the global stage. First, land and power

availability. Access to land and power is becoming increasingly constrained in

London and surrounding areas. For example, property prices in Slough have gone up

by 44 percent since 2019, and the Greater London Authority has told some

developers there won’t be electrical capacity to build in certain areas of the

city until 2035. Data centers use large quantities of electricity, in some

cases the equivalent of a town or small city, to power servers and

ensure resilience in service. In West London, Distribution Network Operators

have started to raise concerns about the availability of powerful grid supply

points to meet the rapid influx of requests from data center operators wanting

to co-locate adjacent to fiber optic cables that pass along the M4 corridor,

and then cross the Atlantic. In response to these power and space concerns,

the hyperscalers have already started to favor countries in

Scandinavia.

Rubrik CIO on GenAI’s Looming Technical Debt

This is a case of, “Hey, there’s a leak in the boat, and what are you going to

do about it? Are you going to let things get drowned? Or are you going to make

sure that there is an equal amount of water that leaves the boat?” So, you

have to apply that thinking to your annual plan. Typically, I’ll say that

there’s going to be a percentage of resources, budget, and effort I’m going to

put into reducing tech debt … And that’s where you start competing with other

business initiatives. You will have a bunch of business stakeholders that

might look at that as something that should just be kicked down the road

because they want to use that funding for something else. That’s where, I

believe, it’s important to educate a lot of my business leaders on what that does to the

organization. When I don’t address that tech debt on a regular basis,

production SLAs start to deteriorate. ... There’s going to be some

consolidation and some standardization across the board. So, the first couple

of years are going to be rocky for everybody. But that doesn’t scare us,

because we’re going to put a more robust governance on top of this new area.

We need to have a lot more debates about this internally and say, “Let’s be

cautious, guys. Because this is coming from all sides.”

How organizations can navigate identity security risks in 2024

IT, identity, cloud security and SecOps teams need to collaborate around a set

of security and lifecycle management processes to support business objectives

around security, timely access delivery and operational efficiency. These

processes are best optimized by automating manual tasks, while ensuring that

the ownership and accountability for tasks that remain manual are well understood. In

addition, quantifying and tracking business outcomes in terms of metrics

highlights IAM’s effectiveness and identifies areas that need improvement or

more automation. Utilizing IAM for cloud and Software as a Service (SaaS)

applications introduces a spectrum of challenges, rooted in silos of identity.

Each system or application has its own identity model and its own concept of

various identity settings and permissions: accounts, credentials, groups,

roles, entitlements and other access policies. Misconfigured permissions and

settings heighten the likelihood of data breaches. To address these

complexities, organizations need business users and security teams to

collaborate on an identity management and governance framework and overarching

processes for policy-based authentication, SSO, lifecycle management, security

and compliance. Automation can streamline these processes and help ensure

effective access controls.
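
Because each system has its own identity model, one common starting point is to normalize accounts into a single shape and reconcile them against an authoritative list of active people. The sketch below does that to flag orphaned accounts and compute an orphan rate, one example of the kind of metric the text recommends tracking. The `Account` type, the email-based matching rule and the sample data are hypothetical. Real reconciliation would pull from an identity provider and each application's own APIs.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Optional, Set


@dataclass(frozen=True)
class Account:
    """One account in one system, normalized into a common shape."""
    system: str                 # e.g. a SaaS app or cloud provider
    username: str
    owner_email: Optional[str]  # None when no human owner is mapped
    entitlements: FrozenSet[str] = frozenset()


def find_orphaned(accounts: Iterable[Account], active_identities: Set[str]) -> List[Account]:
    """Accounts with no owner, or whose owner is no longer an active identity."""
    active = {email.lower() for email in active_identities}
    return [
        a for a in accounts
        if a.owner_email is None or a.owner_email.lower() not in active
    ]


def orphan_rate(accounts: List[Account], active_identities: Set[str]) -> float:
    """Share of accounts that are orphaned: a simple metric to track over time."""
    if not accounts:
        return 0.0
    return len(find_orphaned(accounts, active_identities)) / len(accounts)


# Hypothetical accounts pulled from three separate systems.
accounts = [
    Account("crm", "jdoe", "jane.doe@corp.example", frozenset({"admin"})),
    Account("crm", "svc-sync", None, frozenset({"read"})),
    Account("cloud", "asmith", "alex.smith@corp.example", frozenset({"billing"})),
    Account("wiki", "jdoe", "Jane.Doe@corp.example"),
]
active_people = {"jane.doe@corp.example"}

for a in find_orphaned(accounts, active_people):
    print(a.system, a.username, sorted(a.entitlements))
print(f"orphan rate: {orphan_rate(accounts, active_people):.0%}")
```

Matching on a case-insensitive email is a simplifying assumption. Many organizations would instead use an immutable employee ID, because emails change when people are renamed or rehired.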

Quote for the day:

“People may hear your voice, but they

feel your attitude.” -- John Maxwell