Transform Data Leadership: Core Skills for Chief Data Officers in 2024

The chief data officer is tasked not only with unlocking the value of data but also with explaining the importance of data as the lifeblood of the company across all levels. They must be effective storytellers who can interpret data in such a way that business stakeholders take notice. An effective CDO pairs storytelling with the supporting data and makes it easy to share insights with stakeholders and get their buy-in. For instance, how effective a CDO is in getting departmental buy-in might boil down to the ongoing department "showback reports" they can produce. "Credibility and integrity are two other important traits in addition to effective communication skills, as it is crucial for the CDO to gain the trust of their peers," Subramanian says. ... To garner support for data initiatives and drive organizational buy-in, chief data officers must be able to communicate complex data concepts in a clear and compelling manner to diverse stakeholders, including executives, business leaders, and technical teams. "CDOs have to serve as a bridge between the tech and operational aspects of the organization as they work to drive business value and increase data literacy and awareness," says Schwenk.

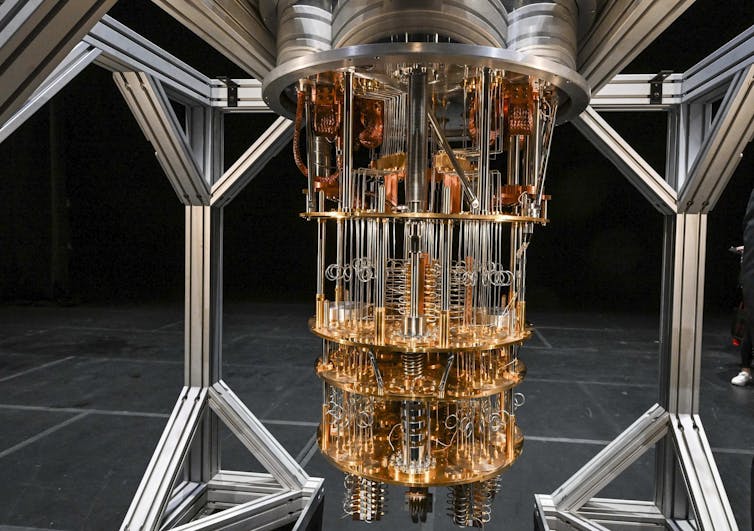

Mind-bending maths could stop quantum hackers, but few understand it

The task of cracking much current online security boils down to the mathematical problem of finding two numbers that, when multiplied together, produce a third number. You can think of this third number as a key that unlocks the secret information. As this number gets bigger, the amount of time it takes an ordinary computer to solve the problem becomes longer than our lifetimes. Future quantum computers, however, should be able to crack these codes much more quickly. So the race is on to find new encryption algorithms that can stand up to a quantum attack. ... Most lattice-based cryptography is based on a seemingly simple question: if you hide a secret point in such a lattice, how long will it take someone else to find the secret location starting from some other point? This game of hide and seek can underpin many ways to make data more secure. A variant of the lattice problem called “learning with errors” is considered to be too hard to break even on a quantum computer. As the size of the lattice grows, the amount of time it takes to solve is believed to increase exponentially, even for a quantum computer.
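To make the factoring problem above concrete, here is a minimal sketch of the "find two numbers that multiply to a third" task using naive trial division. The function name `factor_semiprime` and the sample numbers are illustrative assumptions, not taken from the article, and real attacks on real keys use far more sophisticated algorithms against numbers hundreds of digits long. The point is only that the work grows quickly as the number gets bigger.

```python
from math import isqrt


def factor_semiprime(n: int) -> tuple[int, int, int]:
    """Return (p, q, steps) with p * q == n, found by naive trial division.

    `steps` counts how many candidate divisors were tried, as a rough
    stand-in for the time an ordinary computer would spend.
    """
    if n < 4:
        raise ValueError("n must be a composite number >= 4")
    steps = 0
    # A composite n always has a factor no larger than its square root.
    for candidate in range(2, isqrt(n) + 1):
        steps += 1
        if n % candidate == 0:
            return candidate, n // candidate, steps
    raise ValueError(f"{n} is prime; it has no non-trivial factors")


if __name__ == "__main__":
    # Toy "keys" of increasing size (hypothetical examples).
    for n in (15, 10403, 1000036000099):
        p, q, steps = factor_semiprime(n)
        print(f"{n} = {p} x {q} (after {steps} trial divisions)")
```

With this approach the number of trial divisions scales roughly with the square root of the key, so each extra digit in the factors multiplies the work by about ten. That is the scaling behaviour the excerpt describes for ordinary computers.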

Secure by Design: UK Enforces IoT Device Cybersecurity Rules

The connected-device law kicks in following repeat attacks against devices with known or easily guessable passwords, which have led to repeat distributed denial-of-service attacks that have affected major institutions, including the BBC as well as major U.K. banks such as Lloyds and the Royal Bank of Scotland. Officials said the law is designed not just for consumer protection but also to improve national cybersecurity resilience, including against malware that targets IoT devices, such as Mirai and its spinoffs, all of which can exploit default passwords in devices. Western officials have also warned that Chinese and Russian nation-state hacking groups exploit known vulnerabilities in consumer-grade network devices. U.S. authorities earlier this year disrupted a Chinese botnet used by a group tracked as Volt Typhoon, warning that Beijing threat actors used infected small office and home office routers to cloak their hacking activities. "It's encouraging to see growing emphasis on implementing best practices in securing IoT devices before they leave the factory," said Kevin Curran, a professor of cybersecurity at Ulster University in Northern Ireland.

Will New Government Guidelines Spur Adoption of Privacy-Preserving Tech?

“The risks of AI are real, but they are manageable when thoughtful governance practices are in place as enablers, not obstacles, to responsible innovation,” Dev Stahlkopf, Cisco’s executive VP and chief legal officer, said in the report. One of the big potential ways to benefit from privacy-preserving technology is enabling multiple parties to share their most valuable and sensitive data, but do so in a privacy-preserving manner. “My data alone is good,” Hughes said. “My data plus your data is better, because you have indicators that I might not see, and vice versa. Now our models are smarter as a result.” Carmakers could benefit by using privacy-preserving technology to combine sensor data collected from engines. “I’m Mercedes. You’re Rolls-Royce. Wouldn’t it be great if we combined our engine data to be able to build a model on top of that could identify and predict maintenance schedules better and therefore recommend a better maintenance schedule?” Hughes said. Privacy-preserving tech could also improve public health through the creation of precision medicine techniques or new medications.

GQL: A New ISO Standard for Querying Graph Databases

The basis for graph computing is the property graph, which is superior in describing dynamically changing data. Graph databases have been widely used for decades, but only recently has the form generated new interest as a pivotal component in Large Language Model-based Generative AI apps. A graph model can visualize complex, interconnected systems. The downside of LLMs is that they are black boxes of a sort, Rathle explained. “There’s no way to understand the reasoning behind the language model. It is just following a neural network and doing its thing,” he said. A knowledge graph can serve as external memory, a way to visualize how the LLM constructed its worldview. “So I can trace through the graph and see why it arrived with that answer,” Rathle said. Graph databases are also widely used by health care companies for drug discovery and by aircraft and other manufacturers as a way to visualize complex system design, Rathle said. “You have all these cascading dependencies and that calculation works really well in the graph,” Rathle said.
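The "cascading dependencies" calculation Rathle mentions can be sketched in a few lines. This is not GQL and not any graph database's API: it is a plain-Python toy, assuming a hypothetical aircraft-style property graph whose node names, properties, and `DEPENDS_ON` edge label are all invented for illustration. It shows the kind of traversal a graph query would express, including the path that explains why each node is affected.

```python
from collections import deque

# Hypothetical property graph: nodes carry properties, edges carry a label.
nodes = {
    "pump": {"kind": "component"},
    "hydraulics": {"kind": "subsystem"},
    "landing_gear": {"kind": "subsystem"},
    "brakes": {"kind": "subsystem"},
    "avionics": {"kind": "subsystem"},
}
edges = [
    ("hydraulics", "DEPENDS_ON", "pump"),
    ("landing_gear", "DEPENDS_ON", "hydraulics"),
    ("brakes", "DEPENDS_ON", "hydraulics"),
]


def affected_by(start, edges, label="DEPENDS_ON"):
    """Map every node that transitively depends on `start` to the path
    from `start` that explains why it is affected (breadth-first)."""
    # Index edges in reverse: for each node, who depends on it directly?
    dependents = {}
    for src, lbl, dst in edges:
        if lbl == label:
            dependents.setdefault(dst, []).append(src)

    paths = {}
    queue = deque([(start, [start])])
    while queue:
        node, path = queue.popleft()
        for dep in dependents.get(node, []):
            if dep != start and dep not in paths:  # skip already-visited nodes
                paths[dep] = path + [dep]
                queue.append((dep, paths[dep]))
    return paths


if __name__ == "__main__":
    for node, path in affected_by("pump", edges).items():
        print(f"{node}: {' -> '.join(path)}")
```

Keeping the path alongside each result mirrors the traceability point in the excerpt: the answer comes with the chain of relationships that produced it.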

Microsoft deputy CISO says gen AI can give organizations the upper hand

One of the major promises is a reduction in the fraud that often occurs around clinical trials. Bad actors have a vested interest in whether the drug will pass FDA inspections – literally. In other words, falsified results and insider trading are big risks. Applying AI-powered security to their operational technology in the manufacturing plant or lab can monitor the equipment and not only detect signs of failure, but alert the company to potential tampering. At the same time, they’re also looking at ways to improve drug and polymer research. “They build better products and shorten the cycle of go-to-market for drugs. That’s worth billions of dollars,” he said. “They have a patent that only lasts 10 years. If they can get to market faster, they can hold on to that market share more before it goes to the public.” But that transformation of the SOC is potentially the most impactful of use cases, especially as cybercriminals adopt generative AI and go to work without the guardrails that encumber organizations. “We’ve seen a dramatic adoption of what I would call open-source AI from attackers to be able to use and build models,” Bissell said.

What IT leaders need to know about the EU AI Act

Lawyers and other observers of the EU AI Act point to a couple of major issues that could trip up CIOs. First, the transparency rules could be difficult to comply with, particularly for organizations that don’t have extensive documentation about their AI tools or don’t have a good handle on their internal data. The requirements to monitor AI development and use will add governance obligations for companies using both high-risk and general-purpose AIs. Second, although parts of the EU AI Act wouldn’t go into effect until two years after the final passage, many of the details affecting regulations have yet to be written. In some cases, regulators don’t have to finalize the rules until six months before the law goes into effect. The transparency and monitoring requirements will be a new experience for some organizations, Domino Data Lab’s Carlsson says. “Most companies today face a learning curve when it comes to capabilities for governing, monitoring, and managing the AI lifecycle,” he says. “Except for the most advanced AI companies or in heavily regulated industries like financial services and pharma, governance often stops with the data.”

Standard Chartered CEO on why cybersecurity has become a 'disproportionately huge topic' at board meetings

Working together with the CISO team and the risk cyber team as well: of course, they're technical experts themselves, and I think they’ve done an excellent job of putting the technical program around our own defense mechanisms. But probably the most challenging thing was to get the broad business, the people who weren't technical experts, to understand what role they have to play in our cybersecurity defenses. ... It was really interesting to go through that exercise early on and see how unfamiliar some of the business heads were with what their own crown jewels were. Once you identify and are clear on what the crown jewel is, then you have to be part of the defense mechanism around those. Each one of these things costs money or reduces flexibility or, if done incorrectly, impacts the customer experience. Really working through that and getting them involved in structuring their business around the cyber risk, it's an ongoing process, I don't think we'll ever be done with that. We've made huge progress in the past six, seven years, I'd say, as cyber risks have increased.

The 5 components of a DataOps architecture

If the data comes from multiple sources with timestamps, you can blend it into one database. Use the aggregated data to produce summarized data at various levels of granularity, such as daily aggregate reports. Databases can produce satellite tables, link them together via keys and automatically update them. For example, user ID is the key for personal information in a user transactions table. If the data is in a standardized field, use metadata to indicate each field's type (text, integer or category), labels and size. ... DataOps is not just about the acquisition and use of business data. Archive rarely used or no longer relevant data, such as inactive accounts. Some satellite tables may need regular updates, such as lists of blocklisted IP addresses. Back up data regularly, and update lists of users who can access it. Data teams should decide the best schedule for the various updates and tests to guarantee continuous data integrity. The DataOps process may seem complex, but chances are you already have plenty of data and some of the components in place. Adding security layers may even be easy.
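As a rough illustration of the blending and daily-aggregation steps described above, the sketch below merges timestamped records from two sources and produces a daily summary. The source names (`web_orders`, `store_orders`), the field names, and the sample values are assumptions made up for this example. A real pipeline would read from actual databases rather than in-memory lists.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical records from two sources; user_id is the linking key.
web_orders = [
    {"user_id": 1, "ts": "2024-04-29T09:15:00", "amount": 20.0},
    {"user_id": 2, "ts": "2024-04-30T11:00:00", "amount": 5.5},
]
store_orders = [
    {"user_id": 1, "ts": "2024-04-29T17:40:00", "amount": 12.5},
]


def blend(*sources):
    """Merge records from several sources into one list ordered by timestamp."""
    merged = [
        dict(record, ts=datetime.fromisoformat(record["ts"]))
        for source in sources
        for record in source
    ]
    return sorted(merged, key=lambda record: record["ts"])


def daily_totals(records):
    """Summarize blended records at daily granularity: date -> total amount."""
    totals = defaultdict(float)
    for record in records:
        totals[record["ts"].date().isoformat()] += record["amount"]
    return dict(totals)


if __name__ == "__main__":
    blended = blend(web_orders, store_orders)
    for day, total in daily_totals(blended).items():
        print(day, total)
```

Parsing the timestamps once during blending keeps the later steps simple. The same blended list could feed weekly or per-user summaries by changing only the grouping key.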

Kubernetes: Future or Fad?

If you asked somebody at the beginning of containerization, it was all about how containers have to be stateless, and what do we do today? We deploy databases into Kubernetes, and we love it. Cloud-nativeness isn’t just stateless anymore, and I’d argue a good one-third of the container workloads may be stateful today (with ephemeral or persistent state), and it will keep increasing. The beauty of orchestration, automatic resource management, self-healing infrastructure, and everything in between is just too incredible to not use for “everything.” Anyhow, whatever happens to Kubernetes itself (maybe it will become an orchestration extension of the OCI?!), I think it will disappear from the eyes of the users. It (or its successor) will become the platform to build container runtime platforms. But to make that happen, debug features need to be made available. At the moment you have to look way too deep into Kubernetes or agent logs to find out and fix issues. Anyone who has never had to find out why a Let’s Encrypt certificate isn’t updating may raise a hand now. To bring it to a close, Kubernetes certainly isn’t a fad, but I strongly hope it's not going to be our future either. At least not in its current incarnation.

Quote for the day:

"The best preparation for tomorrow is doing your best today." -- H. Jackson Brown, Jr.