Most hyped network technologies and how to deal with them

Hype four is zero trust. Security is justifiably hot, and at the same time

there never seems to be an end to the new notions that come along. Zero trust,

according to technologists, is problematic not only because senior management

tends to jump on it without thinking, but because there isn’t even a

consistent view of the technology being presented. “Trust-washing,” said one

professional, “has taken over my security meetings,” meaning that they’re

spending too much time addressing all the claims vendors are making.

Technologists say the best approach to a project to address this hype issue

starts by redefining “zero” trust as “explicit trust” and making it clear that

this means that it will be necessary to add tools and processes to validate

users, resources, and their relationships. This will mean impacting the line

organizations whose users and applications are being protected, in that they

will have to define and take the necessary steps to establish trust.

Zero-trust enhancements are best implemented through a vendor already

established in the security or network connectivity space, so start by

reviewing each of the tools available from these incumbent vendors.
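
As a rough illustration of what "explicit trust" could look like in practice, the sketch below denies every request unless the user, the resource, and the relationship between them have each been validated. The names `Grant`, `ExplicitTrustPolicy`, and the sample users and resources are hypothetical and do not come from any vendor's product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """One explicitly approved relationship between a user and a resource."""
    user: str
    resource: str
    action: str


class ExplicitTrustPolicy:
    """Default-deny policy: access is allowed only if a matching grant exists."""

    def __init__(self, verified_users, known_resources, grants):
        self.verified_users = set(verified_users)
        self.known_resources = set(known_resources)
        self.grants = set(grants)

    def is_allowed(self, user, resource, action):
        # Validate the user, then the resource, then their relationship.
        if user not in self.verified_users:
            return False
        if resource not in self.known_resources:
            return False
        return Grant(user, resource, action) in self.grants


# Hypothetical data: the line organizations would supply these definitions.
policy = ExplicitTrustPolicy(
    verified_users={"alice", "bob"},
    known_resources={"payroll-db", "wiki"},
    grants={Grant("alice", "payroll-db", "read"), Grant("bob", "wiki", "write")},
)

print(policy.is_allowed("alice", "payroll-db", "read"))   # granted explicitly
print(policy.is_allowed("alice", "payroll-db", "write"))  # no grant, so denied
```

The point of the sketch is the default: nothing is trusted until someone has defined the grant, which is the work the line organizations would have to take on.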

Don’t Build Microservices, Pursue Loose Coupling

While it is true that microservices strategies do support loose coupling,

they’re not the only way. Simpler architectural strategies can afford smaller

or newer projects the benefits of loose coupling in a more sustainable way,

generating less overhead than building up microservices-focused

infrastructure. Architectural choices are as much about the human component of

building and operating software systems as they are about technical concerns

like scalability and performance. And the human component is where

microservices can fall short. When designing a system, one should distinguish

between intentional complexity (where a complex problem rightfully demands a

complex solution) and unintentional complexity (where an overly complex

solution creates unnecessary challenges). It’s true that firms like Netflix

have greatly benefited from microservices-based architectures with intentional

complexity. But an up-and-coming startup is not Netflix, and trying to follow

in the streaming titan’s footsteps can introduce a great degree of

unintentional complexity.
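
One of the simpler strategies alluded to above can be sketched inside a single process: components depend on a small interface rather than on each other's concrete classes. This is a minimal sketch, not the article's prescribed design; `Notifier`, `InMemoryNotifier`, and `OrderService` are hypothetical names chosen for illustration.

```python
from typing import Protocol


class Notifier(Protocol):
    """The only thing OrderService knows about notifications."""

    def send(self, recipient: str, message: str) -> None: ...


class InMemoryNotifier:
    """A stand-in implementation; an email or queue-backed one could replace it."""

    def __init__(self) -> None:
        self.sent = []

    def send(self, recipient: str, message: str) -> None:
        self.sent.append((recipient, message))


class OrderService:
    """Depends on the Notifier interface, not on a concrete implementation."""

    def __init__(self, notifier: Notifier) -> None:
        self._notifier = notifier

    def place_order(self, customer: str, item: str) -> str:
        confirmation = f"order confirmed: {item}"
        self._notifier.send(customer, confirmation)
        return confirmation


notifier = InMemoryNotifier()
service = OrderService(notifier)
result = service.place_order("ana", "book")
```

Because the boundary is an interface, the notifier could later move behind a network call without changing `OrderService`, and no service mesh or deployment pipeline is needed to get that flexibility today.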

MPs say UK at real risk of falling behind on AI regulation

Noting current trialogue negotiations taking place in the EU over its

forthcoming AI Act and the Biden administration's voluntary agreements with

major tech firms over AI safety, the SITC chair Greg Clark told reporters at a

briefing that time is running out for the government to establish its own

AI-related powers and oversight mechanisms. “If there isn’t even quite a

targeted and minimal enabling legislation in this session, in other words in

the next few months, then the reality [for the introduction of UK AI

legislation] is probably going to be 2025,” he said, adding it would be

“galling” if the chance to enact new legislation was not taken simply because

“we are timed out”. “If the government’s ambitions are to be realised and its

approach is to go beyond talks, it may well need to move with greater urgency

in enacting the legislative powers it says will be needed.” He further added

that any legislation would need to be attuned to the 12 AI governance

challenges laid out in the committee’s report, which relate to various

competition, accountability and social issues associated with AI’s

operation.

The Agile Architect: Mastering Architectural Observability To Slay Technical Debt

Architectural observability requires two other key phases: analysis and

observation. The former provides another layer of a deeper understanding of

the software architecture, while the latter maintains an updated system

picture. These intertwined phases, reflecting Agile methodologies' adaptive

nature, foster effective system management. ... The cyclic

'analyzing-observing' process starts with a deep dive into the nitty-gritty of

the software architecture. By analyzing the information gathered about the

application, we can identify elements like domains within the app, unnecessary

code, or problematic classes. Using methodical exploration helps architects

simplify their applications and better understand their static and dynamic

behavior. The 'observation' phase, like a persistent scout, keeps an eye on

architectural drift and changes, helping architects identify problems early

and stay up-to-date with the current architectural state. In turn, this

information feeds back into further analysis, refining the understanding of

the system and its dynamics.
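
The "observation" phase can be pictured as repeatedly comparing the current dependency structure against a recorded baseline. The sketch below assumes the architecture is represented as a simple map from each module to the modules it depends on; that representation, the `find_drift` function, and the sample module names are all assumptions made for illustration, not part of any specific tool.

```python
def find_drift(baseline, observed):
    """Compare two dependency maps (module -> set of modules it depends on).

    Returns the dependencies that appeared and disappeared since the baseline.
    """
    added, removed = {}, {}
    for module in sorted(set(baseline) | set(observed)):
        before = baseline.get(module, set())
        after = observed.get(module, set())
        if after - before:
            added[module] = sorted(after - before)
        if before - after:
            removed[module] = sorted(before - after)
    return {"added": added, "removed": removed}


# Hypothetical snapshots taken at two points in time.
baseline = {"billing": {"db"}, "orders": {"billing", "db"}}
observed = {
    "billing": {"db", "orders"},  # new edge creates a billing <-> orders cycle
    "orders": {"billing", "db"},
    "reports": {"db"},            # module that did not exist in the baseline
}

drift = find_drift(baseline, observed)
print(drift)
```

Feeding the reported differences back into the analysis phase is what closes the loop the excerpt describes.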

Operation 'Duck Hunt' Dismantles Qakbot

The FBI dubbed the operation behind the takedown "Duck Hunt," a play on the

Qakbot moniker. The operation is "the most significant technological and

financial operation ever led by the Department of Justice against a botnet,"

said United States Attorney Martin Estrada of the Central District of

California. International partners in the investigation include France,

Germany, the Netherlands, the United Kingdom, Romania and Latvia. "Almost

every country in the world was affected by Qakbot, either through direct

infected victims or victims attacked through the botnet," said senior FBI and

DOJ officials. Officials said Qakbot spread primarily through email phishing

campaigns, and FBI probes revealed Qakbot infrastructure and victim computers

had spread around the world. Qakbot played a role in approximately 40

different ransomware attacks over the past 18 months that caused $58 million

in losses, Estrada said. "You can imagine that the losses have been many

millions more through the life of the Qakbot," which cyber defenders first

detected in 2008, Estrada added. "Today, all that ends," he said.

How CISOs can shift from application security to product security

The fact that product security has worked its way onto enterprise

organizational charts is not a repudiation of traditional application security

testing, just an acknowledgement that modern software delivery needs a

different set of eyes beyond the ones trained on the microscope of appsec

testing. As technology leaders have recognized that applications don’t operate

in a vacuum, product security has become the go-to team to help watch the gaps

between individual apps. Members of this team also serve as security advocates

who can help instill security fundamentals into the repeatable development

processes and ‘software factory’ that produces all the code. The emergence of

product security is analogous to the addition of site reliability engineering

early in the DevOps movement, says Scott Gerlach, co-founder and CSO at API

security testing firm StackHawk. “As software was delivered more rapidly,

reliability needed to be engineered into the product from inception through

delivery. Today, security teams typically have minimal interactions with

software during development.

CIOs are worried about the informal rise of generative AI in the enterprise

What can CISOs and corporate security experts do to put some sort of limits on

this AI outbreak? One executive said that it’s essential to toughen up basic

security measures like “a combination of access control,

CASB/proxy/application firewalls/SASE, data protection, and data loss

protection.” Another CIO pointed to reading and implementing some of the

concrete steps offered by the National Institute of Standards and Technology

Artificial Intelligence Risk Management Framework report. Senior leaders must

recognize that risk is inherent in generative AI usage in the enterprise, and

proper risk mitigation procedures are likely to evolve. Still another

respondent mentioned that in their company, generative AI usage policies have

been incorporated into employee training modules, and that policy is

straightforward to access and read. The person added, “In every vendor/client

relationship we secure with GenAI providers, we ensure that the terms of the

service have explicit language about the data and content we use as input not

being folded into the training foundation of the 3rd party service.”

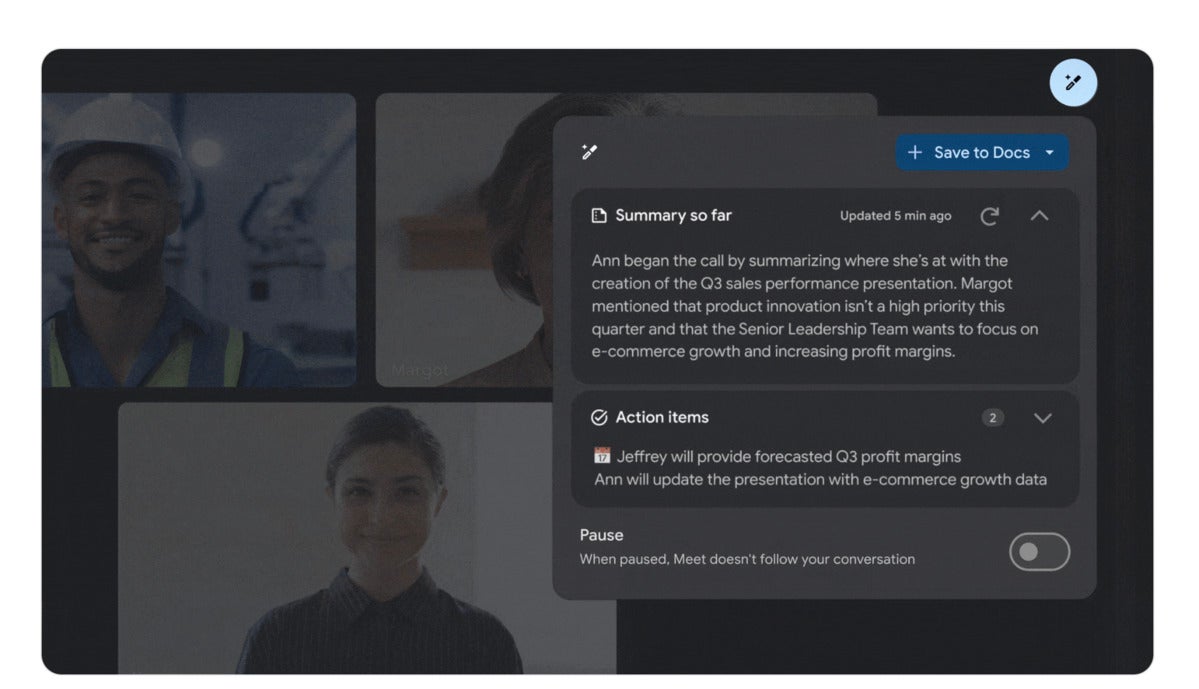

Google’s Duet AI now available for Workspace enterprise customers

The launch of Duet AI means Google has beaten Microsoft to market with genAI

tools for its office software suite. Microsoft is currently trialing its own

Copilot AI assistant for Microsoft 365 applications such as Word, Excel and

Teams. The Microsoft 365 Copilot, based on OpenAI’s ChatGPT, will also cost

$30 per user each month when it’s made available later this year or in early

2024. “Google's choice to price Duet at $30 is surprising, given that it's the

same price as Microsoft Copilot,” said J. P. Gownder, vice president and

principal analyst on Forrester's Future of Work team. “Both offerings promise

to improve employee productivity, but Google Workspace is positioned as a

lower-cost alternative to Microsoft 365 in the first place. Its products

contain perhaps 70% to 80% of the features of their counterparts in the

Microsoft 365 office programs suite.” However, as with Microsoft’s genAI

feature, Gownder expects Duet will provide customers with improvements around

productivity and employee experience, even if it’s too early to make firm

judgements on either product.

Empowering Female Cybersecurity Talent in the Supply Chain

While young women and other minority individuals today are taught they can

have a successful career in any industry, having the right support from

educators, peers, and co-workers is a key factor in the eventual decision to

enter – and stay in – technical fields. Around 74% of middle school females

are interested in STEM subjects. Yet, when they reach high school, interest

drops, further proving the need for unwavering awareness efforts and support

at an early age. According to a recent report from the NSF's NCSES, more women

worked in STEM jobs over the past decade compared to previous years, proving

progress in the right direction. Despite this increase, a lack of external

support and awareness leaves adolescents exploring different paths. Since many

decide their majors as early as age 18, promoting technical roles in college

can even be considered too late. Therefore, it’s imperative that leaders

encourage young talent by communicating and rewarding the skillsets needed to

hold these roles and by showcasing the career paths available.

Machine Learning Use Cases for Data Management

In the financial services sector, ML algorithms in fraud detection and risk

assessment are expected to enhance security measures and mitigate potential

risks. By leveraging advanced Data Management techniques, ML algorithms can

analyze vast amounts of financial data to identify patterns and anomalies that

may indicate fraudulent activities. These algorithms can adapt and learn from

newly emerging fraud patterns, enabling financial institutions to take immediate

action. Additionally, ML algorithms can aid in risk assessment by analyzing

historical data, market trends, and customer behavior to predict potential

risks accurately. ... In the manufacturing sector, ML is revolutionizing

quality control and predictive maintenance processes. ML algorithms can

analyze vast amounts of data collected from sensors, machines, and production

lines to identify patterns and anomalies. This enables manufacturers to detect

defects in real-time, ensuring product quality while minimizing waste and

rework. Moreover, ML algorithms can predict equipment failures by analyzing

historical data on machine performance.
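
To make the idea of identifying anomalies concrete, the sketch below flags values that sit far from the historical mean. It is a simple statistical stand-in (a z-score check), not a trained ML model, and the `flag_anomalies` function, the threshold of 3.0, and the sample amounts are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(history, new_values, threshold=3.0):
    """Return the new values lying more than `threshold` standard
    deviations away from the mean of the historical values."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No variation in the history: anything different is unusual.
        return [v for v in new_values if v != mu]
    return [v for v in new_values if abs(v - mu) / sigma > threshold]


# Hypothetical transaction amounts (or sensor readings) seen so far.
history = [20.0, 22.0, 19.0, 21.0, 20.0, 18.0, 23.0, 21.0]

flagged = flag_anomalies(history, [21.0, 19.5, 950.0])
print(flagged)
```

The same shape applies to both examples in the excerpt: the history could be a customer's past transactions or a machine's past sensor readings, and a production system would replace the fixed threshold with a model that keeps learning from new data.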

Quote for the day:

"The leader has to be practical and a

realist, yet must talk the language of the visionary and the idealist." --

Eric Hoffer