Beyond “Agree to Disagree”: Why Leaders Need to Foster a Culture of Productive Disagreement and Debate

The business imperative of nurturing a culture of productive disagreement is clear. The good news is that senior leaders can play a highly influential role in this regard. By integrating the concepts of openness and healthy debate into their own and their organization’s language, they can institutionalize new norms. Their actions can help to further reset the rules of engagement by serving as a model for employees to follow. ... Leaders should incorporate the concept of productive debate into corporate value statements and the way they address colleagues, employees, and shareholders. Michelin, for example, built debate into its value statement. One of its organizational values is “respect for facts,” which it describes as follows: “We utilize facts to learn, honestly challenge our beliefs….” Another company that espouses debate as a value is Bridgewater. Founder Ray Dalio ingrained principles and subprinciples such as “be radically open-minded” and “appreciate the art of thoughtful disagreement” in the investment management company’s culture.

Using technology to power the future of banking

Because I believe that anyone that wants to be a CIO or a CTO, particularly in the way that the industry is progressing, you need to understand technology. So, staying close to the technology and curious and wanting to solve those problems has helped me. But there's another part to it, too. In every one of my roles, there have been times when I've seen something that wasn't necessarily working and I had ideas and wanted to help, but it might’ve been outside of my responsibility. I've always leaned in to help, even though I knew that it was going to help someone else in the organization, because it was the right thing to do and it helped the company, it helped other people. So, it ended up building stronger relationships, but also building my skillset. I think that's been a part of my rise too, and it's something that's just incredibly powerful from a cultural perspective. That’s something that I love here. Everybody is in it together to work that way. But I also think that it just speaks volumes about an individual, and people gravitate to want to work with people that operate that way.

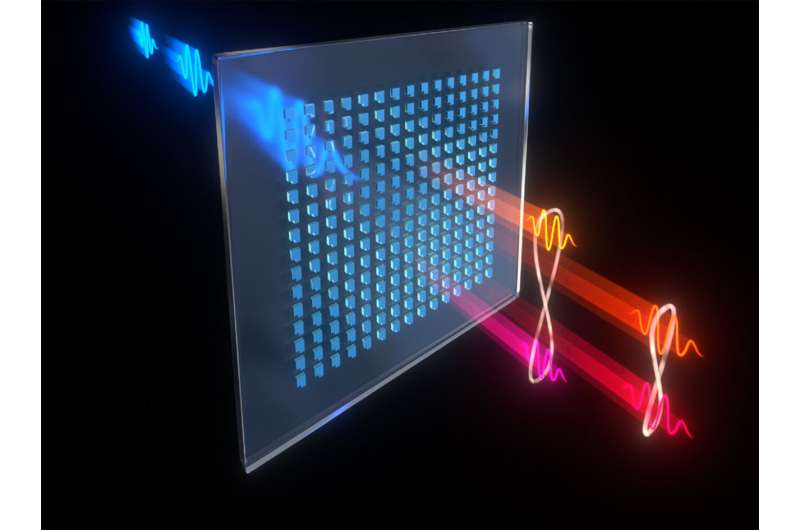

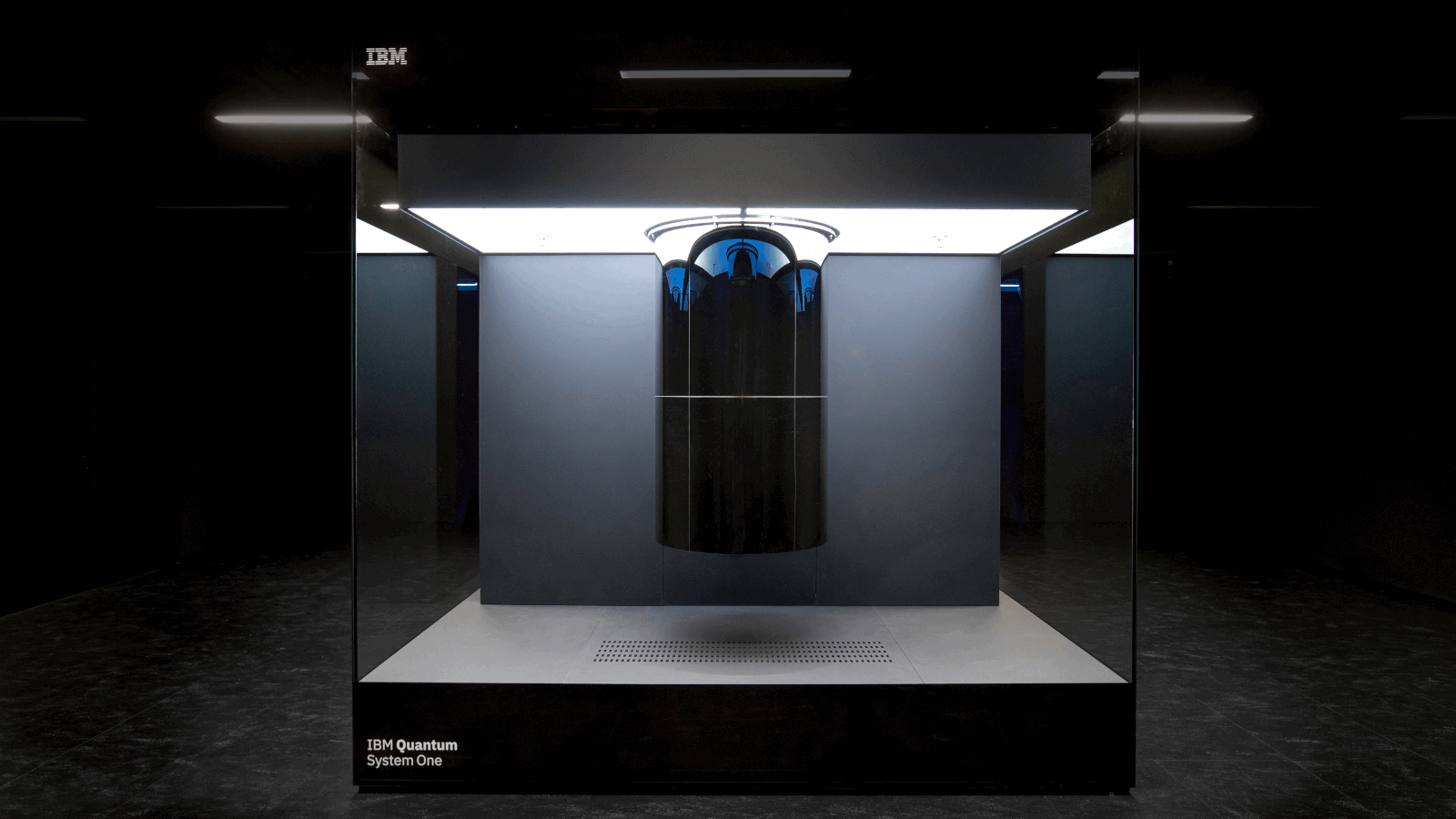

Physics breakthrough could lead to new, more efficient quantum computers

According to the researchers, this technique for generating stable qubits could have massive implications for the entire field of quantum computing, but especially for scalability and noise reduction: “At this stage, our system faces mostly technical limitations, such as optical losses, finite cooperativity and imperfect Raman pulses. Even modest improvements in these respects would put us within reach of loss and fault tolerance thresholds for quantum error correction.” It’ll take some time to see how well this experimental generation of qubits translates into an actual computation device, but there’s plenty of reason to be optimistic. There are numerous different methods by which qubits can be made, and each lends itself to its own unique machine architecture. The upside here is that the scientists were able to generate their results with a single atom. This indicates that the technique would be useful outside of computing. If, for example, it could be developed into a two-atom system, it could lead to a novel method for secure quantum communication.

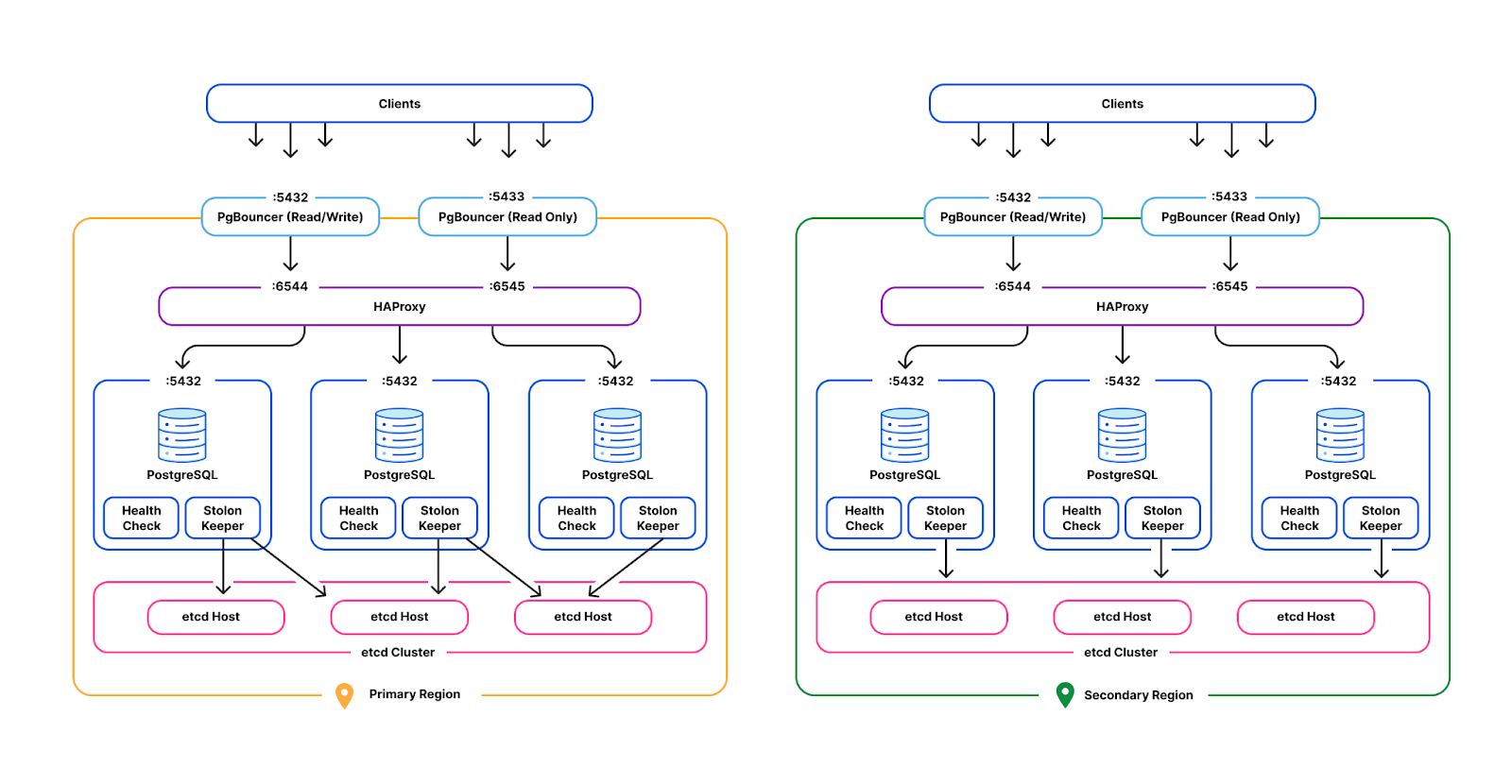

Organizations security: Highlighting the importance of compliant data

When choosing a web data collection platform or network, it’s important that security professionals use a compliance-driven service provider to safeguard the integrity of their network and operations. Compliant data collection networks ensure that security operators have a safe and suitable environment in which to perform their work without being compromised by potential bad actors using the same network or proxy infrastructure. These data providers institute extensive and multifaceted compliance processes that include a number of internal as well as external procedures and safeguards, such as manual reviews and third-party audits, to identify non-compliant active patterns and ensure that all use of the network follows the overall compliance guidelines. This of course also includes abiding by the data gathering guidelines established by international regulators, such as the European Union and the US State of California, as well as enforcing public web scraping best practices for compliant and reliable web data scraping or collection.

TensorFlow, PyTorch, and JAX: Choosing a deep learning framework

It’s not like TensorFlow has stood still for all that time. TensorFlow 1.x was all about building static graphs in a very un-Python manner, but with the TensorFlow 2.x line, you can also build models using the “eager” mode for immediate evaluation of operations, making things feel a lot more like PyTorch. At the high level, TensorFlow gives you Keras for easier development, and at the low level, it gives you the XLA optimizing compiler for speed. XLA works wonders for increasing performance on GPUs, and it’s the primary method of tapping the power of Google’s TPUs (Tensor Processing Units), which deliver unparalleled performance for training models at massive scales. Then there are all the things that TensorFlow has been doing well for years. Do you need to serve models in a well-defined and repeatable manner on a mature platform? TensorFlow Serving is there for you. Do you need to retarget your model deployments for the web, or for low-power compute such as smartphones, or for resource-constrained devices like IoT things? TensorFlow.js and TensorFlow Lite are both very mature at this point.
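
The contrast between static graphs and eager evaluation can be sketched without any framework. The toy below is not TensorFlow's API: `Node`, `placeholder`, `add`, `mul`, and `run` are hypothetical names invented purely for illustration. It only shows the idea that a static graph is described first and computed later, while eager code produces a value at every step.

```python
# Toy, framework-free illustration. These names are hypothetical and are
# NOT part of TensorFlow, PyTorch, or JAX.

class Node:
    """A deferred operation: it records what to compute, not the result."""

    def __init__(self, op, inputs=(), name=None):
        self.op = op
        self.inputs = inputs
        self.name = name


def placeholder(name):
    return Node("placeholder", name=name)


def add(a, b):
    return Node("add", (a, b))


def mul(a, b):
    return Node("mul", (a, b))


def run(node, feed):
    """Evaluate a graph node, given concrete values for its placeholders."""
    if node.op == "placeholder":
        return feed[node.name]
    left, right = (run(child, feed) for child in node.inputs)
    return left + right if node.op == "add" else left * right


# Static-graph style: build a description first, then execute it.
x = placeholder("x")
y = placeholder("y")
graph = add(mul(x, x), y)  # nothing has been computed at this point
graph_result = run(graph, {"x": 3.0, "y": 1.0})


# Eager style: every operation runs immediately on real values.
def eager_model(x_value, y_value):
    squared = x_value * x_value  # the value exists right here
    return squared + y_value


eager_result = eager_model(3.0, 1.0)
print(graph_result, eager_result)
```

Both styles compute the same expression. The deferred form gives a compiler the whole computation to optimize before anything runs, while the eager form is easier to step through and debug.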

IoT Will Power Itself – Power Electronics News

Energy harvesting is nothing new, with solar power being one of the most famous examples. Solar energy works well for powering parking meters, but if we’re going to bring online the packaging and containers that are at the heart of our supply chains (things that are indoors and stacked on top of each other), we need another solution. The technology that gives mundane things like transporting cash registers both their intelligence and energy-harvesting power consists of small, inexpensive, brand-size computers printed as stickers and affixed to cash registers, sweater tags, vaccine vials, or other items racing in the global supply chain. These sticker tags, called IoT Pixels, include an ARM processor, a Bluetooth radio, sensors, and a security module: basically a complete system-on-a-chip (SoC). All that remains is to power this tiny SoC in the most efficient and economical way possible. It turns out that as wireless networks permeate our lives and radio frequency (RF) activity is everywhere, the prospect of recycling that RF activity into energy is the most practical and ubiquitous solution.

CoAuthor: Stanford experiments with human-AI collaborative writing

CoAuthor is based on GPT-3, one of the recent large language models from OpenAI, trained on a massive collection of already-written text on the internet. It would be a tall order to think a model based on existing text might be capable of creating something original, but Lee and her collaborators wanted to see how it can nudge writers to deviate from their routines, to go beyond their comfort zone (e.g., vocabularies that they use daily), to write something that they would not have written otherwise. They also wanted to understand the impact such collaborations have on a writer’s personal sense of accomplishment and ownership. “We want to see if AI can help humans achieve the intangible qualities of great writing,” Lee says. Machines are good at doing search and retrieval and spotting connections. Humans are good at spotting creativity. If you think this article is written well, it is because of the human author, not in spite of it. ... The goal, Lee says, was not to build a system that can make humans write better and faster. Instead, it was to investigate the potential of recent large language models to aid in the writing process and see where they succeed and fail.

LastPass source code breach – do we still recommend password managers?

The breach itself actually happened two weeks before that, the company said, and involved attackers getting into the system where LastPass keeps the source code of its software. From there, LastPass reported, the attackers “took portions of source code and some proprietary LastPass technical information.” We didn’t write this incident up last week, because there didn’t seem to be a lot that we could add to the LastPass incident report – the crooks rifled through their proprietary source code and intellectual property, but apparently didn’t get at any customer or employee data. In other words, we saw this as a deeply embarrassing PR issue for LastPass itself, given that the whole purpose of the company’s own product is to help customers keep their online accounts to themselves, but not as an incident that directly put customers’ online accounts at risk. However, over the past weekend we’ve had several worried enquiries from readers (and we’ve seen some misleading advice on social media), so we thought we’d look at the main questions that we’ve received so far.

FBI issues alert over cybercriminal exploits targeting DeFi

The FBI observed cybercriminals exploiting vulnerabilities in smart contracts that govern DeFi platforms in order to steal investors’ cryptocurrency. In a specific example, the FBI mentioned cases where hackers used a “signature verification vulnerability” to plunder $321 million from the Wormhole token bridge back in February. It also mentioned a flash loan attack that was used to trigger an exploit in the Solana DeFi protocol Nirvana in July. However, that’s just a drop in a vast ocean. According to an analysis from blockchain security firm CertiK, since the start of the year, over $1.6 billion has been exploited from the DeFi space, surpassing the total amount stolen in 2020 and 2021 combined. While the FBI admitted that “all investment involves some risk,” the agency has recommended that investors research DeFi platforms extensively before use and, when in doubt, seek advice from a licensed financial adviser. The agency said it was also very important to check that the platform's protocols are sound and to ensure they have had one or more code audits performed by independent auditors.
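
To make the idea of a “signature verification vulnerability” concrete, here is a minimal sketch of the general class of bug. It is not a reconstruction of the Wormhole exploit or of any real smart contract. The `Bridge` class, its methods, and `GUARDIAN_KEY` are hypothetical, and an HMAC tag from Python's standard library stands in for a real digital signature. The flaw shown is a mint function that lets the caller decide how the signature gets checked.

```python
import hashlib
import hmac

# Hypothetical secret standing in for the key that authorizes minting.
GUARDIAN_KEY = b"hypothetical-guardian-secret"


def sign(message: bytes, key: bytes = GUARDIAN_KEY) -> bytes:
    """Produce an HMAC-SHA256 tag (a stand-in for a real signature)."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(message: bytes, signature: bytes) -> bool:
    """Compare against the trusted key's tag in constant time."""
    return hmac.compare_digest(sign(message), signature)


class Bridge:
    """Toy token bridge that mints balances for authorized requests."""

    def __init__(self):
        self.balances = {}

    def _credit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount

    def mint_vulnerable(self, account, amount, signature, verifier):
        # BUG: the caller supplies the verifier, so an attacker can pass
        # one that accepts anything.
        message = f"{account}:{amount}".encode()
        if not verifier(message, signature):
            return False
        self._credit(account, amount)
        return True

    def mint_checked(self, account, amount, signature):
        # Fix: always run the bridge's own trusted verification.
        message = f"{account}:{amount}".encode()
        if not verify(message, signature):
            return False
        self._credit(account, amount)
        return True


bridge = Bridge()
forged = b"\x00" * 32  # not a valid tag for any message


def accept_anything(message, signature):
    return True


exploited = bridge.mint_vulnerable("attacker", 1_000_000, forged, accept_anything)
blocked = bridge.mint_checked("attacker", 1_000_000, forged)
print(exploited, blocked)
```

Independent code audits of the kind the FBI recommends are meant to catch this sort of mistake, where a check exists but can be bypassed by whoever is calling it.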

Privacy and security issues associated with facial recognition software

Facial recognition technology in surveillance has improved dramatically in recent years, meaning it is quite easy to track a person as they move about a city, he said. One of the privacy concerns about the power of such technology is who has access to that information and for what purpose. Ajay Mohan, principal, AI & analytics at Capgemini Americas, agreed with that assessment. “The big issue is that companies already collect a tremendous amount of personal and financial information about us [for profit-driven applications] that basically just follows you around, even if you don’t actively approve or authorize it,” Mohan said. “I can go from here to the grocery store, and then all of a sudden, they have a scan of my face, and they’re able to track it to see where I’m going.” In addition, artificial intelligence (AI) continues to push the capabilities of facial recognition systems in terms of their performance, while from an attacker perspective, there is emerging research leveraging AI to create facial “master keys,” that is, AI generation of a face that matches many different faces, through the use of what’s called Generative Adversarial Network techniques, according to Lewis.
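
The “master key” idea can be illustrated with a toy matcher. This sketch makes several simplifying assumptions: faces are reduced to three-dimensional vectors, matching is a cosine-similarity threshold, and the master vector is a plain average of the enrolled templates rather than the output of the Generative Adversarial Network search the research describes. The names `enrolled`, `matches`, and `THRESHOLD` are hypothetical.

```python
import math

# Arbitrary match threshold chosen for this toy example.
THRESHOLD = 0.5

# Hypothetical enrolled face templates (real embeddings have far more
# dimensions and are produced by a trained model).
enrolled = {
    "alice": (1.0, 0.0, 0.0),
    "bob": (0.0, 1.0, 0.0),
    "carol": (0.0, 0.0, 1.0),
}


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def matches(probe):
    """Return the enrolled identities the probe would be accepted as."""
    return sorted(
        name
        for name, template in enrolled.items()
        if cosine(probe, template) >= THRESHOLD
    )


# A genuine face matches only its own template.
alice_matches = matches(enrolled["alice"])

# A vector sitting "between" all the templates clears the threshold for
# every one of them. Here it is just the component-wise average.
master = tuple(sum(column) / len(enrolled) for column in zip(*enrolled.values()))
master_matches = matches(master)

print(alice_matches, master_matches)
```

In this toy setup, no enrolled face is accepted as anyone else, yet the single averaged vector is accepted as all three, which is the property that makes a master face useful to an attacker.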

Quote for the day:

"If you don't demonstrate leadership character, your skills and your results will be discounted, if not dismissed." -- Mark Miller
