Best practices for recovering a Microsoft network after an incident

Often the recovery process differs with the size of the organization. A small
business might just want to be functional again as soon as possible, while a
medium-sized business might take the time to do a root cause analysis. According
to the NIST document, “Identifying the root cause(s) of a cyber event is
important to planning the best response, containment, and recovery actions.
While knowing the full root cause is always desirable, adversaries are
incentivized to hide their methods, so discovering the full root cause is not
always achievable.” If you use Microsoft Defender for Business Server, Microsoft
recommends making proactive adjustments to your servers so that you can best
prevent attacks. Specifically, it recommends applying the same attack surface
reduction (ASR) rules that it recommends for workstations. One example, shown in
the screen image below, is blocking all Office applications from creating child
processes. As you rebuild your network after an incident, remember these
settings. Servers are often redeployed with default settings, and you might not
have remembered or documented every setting you need to better protect your
network.
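Redeployed servers come back with default settings, so it helps to keep the intended ASR configuration written down and to check each rebuilt server against it. The following is a minimal Python sketch that relies on several assumptions. `ASR_BASELINE` and `find_asr_drift` are hypothetical names. It also assumes you have already exported a server's current rules into a dict that maps rule GUID to action. On Windows, one possible source for that export is the `AttackSurfaceReductionRules_Ids` and `AttackSurfaceReductionRules_Actions` properties reported by `Get-MpPreference`. The export step itself is not shown.

```python
from typing import Dict, List

# Hypothetical baseline kept under version control: ASR rule GUID -> desired action.
# Microsoft documents the action values as 0 = Disabled, 1 = Block, 2 = Audit, 6 = Warn.
# The GUID is the one Microsoft lists for "Block all Office applications from
# creating child processes"; check it against the current ASR rules reference.
ASR_BASELINE: Dict[str, int] = {
    "d4f940ab-401b-4efc-aadc-ad5f3c50688a": 1,
}


def find_asr_drift(current: Dict[str, int],
                   baseline: Dict[str, int] = ASR_BASELINE) -> List[str]:
    """Return the baseline rule GUIDs whose current action is missing or different."""
    # GUIDs may be reported in upper or lower case, so compare them case-insensitively.
    normalized = {rule_id.lower(): action for rule_id, action in current.items()}
    return sorted(
        rule_id
        for rule_id, wanted in baseline.items()
        if normalized.get(rule_id.lower()) != wanted
    )
```

For a freshly redeployed server with no ASR rules configured, `find_asr_drift({})` returns the single baseline GUID. That result flags the rule that needs to be re-applied. A baseline file kept this way also serves as the settings documentation that, as noted above, is often missing.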

How AI Is Transforming The Future Of The Finance Industry

Since the entire foundation of AI is learning from past data, it seems only
sensible that AI would flourish in the financial services industry, where
keeping books and records is a given for businesses. Consider credit cards as
an example. Today, we use credit scores to determine who is and is not eligible
for a credit card. However, it is not always advantageous for businesses to
divide people into “haves” and “have-nots.” Instead, information about a
person’s loan repayment patterns, the number of loans that are still open, the
number of credit cards that person already has, etc. can be used to tailor the
interest rate on a card so that the financial institution issuing the card is
more comfortable with the risk. ... When it comes to security and fraud
detection, AI excels. It can leverage historical spending patterns across
various transaction instruments to flag unexpected activity. Examples include a
card being used abroad shortly after it was used elsewhere, or an attempt to
withdraw an amount of money that is unusual for the account in question.
Another excellent aspect of AI fraud detection is that the system never stops
learning.
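The "unusual amount" case can be illustrated without any machine learning. The sketch below is a plain statistical baseline, not an AI model. It flags an amount whose z-score, measured against the account's past transaction amounts, exceeds a threshold. The function name and the default threshold of 3.0 are assumptions chosen for illustration.

```python
from statistics import mean, stdev
from typing import Sequence


def is_unusual_amount(history: Sequence[float], amount: float,
                      threshold: float = 3.0) -> bool:
    """Flag `amount` if it lies more than `threshold` standard deviations
    from the mean of the account's past transaction amounts."""
    if len(history) < 2:
        # Too little history to estimate spread; leave the decision to other checks.
        return False
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # Every past amount was identical, so any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold
```

With a history of `[50, 60, 55, 45, 52]`, an amount of 500 is flagged and 58 is not. A real fraud system would combine many more signals, such as merchant, location, time and instrument. It would also keep retraining on them, and that retraining is the learning the paragraph refers to.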

Meta faces new FTC lawsuit for VR company acquisition

On Wednesday, the FTC sued Meta in an attempt to block its acquisition of
virtual reality technology company Within Unlimited and its VR fitness app
Supernatural. The FTC in a press release said Meta's "virtual reality empire"
already includes a virtual reality fitness app and alleged Meta is attempting to
"buy its way to the top." "Meta already owns a best-selling virtual reality
fitness app, and it had the capabilities to compete even more closely with
Within's popular Supernatural app," John Newman, FTC Bureau of Competition
deputy director, said in the release. "But Meta chose to buy market position
instead of earning it on the merits. This is an illegal acquisition, and we will
pursue all appropriate relief." According to Meta's statement in response to the
lawsuit, the FTC's case is "based on ideology and speculation, not evidence."
... It's not the first time the FTC has accused Meta of buying out the
competition. In an ongoing lawsuit against the company, the FTC alleged that
Meta's previous Instagram and WhatsApp acquisitions served to kill what the
company viewed as competition to its popular social media site Facebook.

Blockchain Applications That Make Sense Right Now

Using blockchain technology, users can create digital assets that are
verifiable, scarce and portable: the core properties of a “token”. The owner of
a blockchain-generated token can be sure they are the only owner of a
limited-quantity item. These tokens have genuine value because they solve the
ownership challenge digital assets have faced since day one. This is the core
building block that is not possible in Web2’s centralized world. Yes, Fortnite
can sell you virtual clothing, and a bank can show a deposit in your account,
but those assets are at the mercy of the central actors. Creators are similarly
beholden to the platforms that distribute their products, and their popularity
is governed by the algorithms and business interests of those centralized
platforms. With blockchain, new types of assets can be created that hold value
outside any centralized platform (even though those platforms may still be very
relevant for creation, display and distribution). No centralized actor can
unilaterally change the ownership of an asset ‘on-chain’, and the rules are
visible in public code. Ownership can be ascertained with certainty by anyone
who examines it.

Yes, you are being watched, even if no one is looking for you

Whether or not you pass under the gaze of a surveillance camera or license plate
reader, you are tracked by your mobile phone. GPS tells weather and map apps
your location, Wi-Fi networks can reveal your location, and cell-tower
triangulation tracks your phone. Bluetooth can identify and track your
smartphone, and not just for COVID-19 contact tracing, Apple’s “Find My”
service, or connecting headphones. People volunteer their locations for
ride-sharing or for games like Pokemon Go or Ingress, but apps can also collect
and share location without your knowledge. Many late-model cars feature
telematics that track locations, for example OnStar or Bluelink. All this makes
opting out impractical. The same is true online. Most websites feature ad
trackers and third-party cookies, which are stored in your browser whenever you
visit a site. They identify you when you visit other sites so advertisers can
follow you around. Some websites also use keylogging, which monitors what you
type into a page before you hit submit. Similarly, session recording monitors
mouse movements, clicks, scrolling and typing, even if you don’t click “submit.”
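The following toy Python model shows how third-party cookies let advertisers follow you across sites. It models a single tracker embedded on several unrelated sites and simplifies several details. `ThirdPartyTracker` and `handle_request` are hypothetical names. A real tracker would receive its cookie in HTTP request headers. It would also typically learn the embedding page from headers such as `Referer` rather than being handed the site name directly.

```python
import uuid
from collections import defaultdict
from typing import DefaultDict, List, Optional


class ThirdPartyTracker:
    """Toy model of one ad tracker embedded on many unrelated websites."""

    def __init__(self) -> None:
        # Browsing profile per cookie ID: the sites where this browser was seen.
        self.profiles: DefaultDict[str, List[str]] = defaultdict(list)

    def handle_request(self, cookie_id: Optional[str], embedding_site: str) -> str:
        """Record a page view and return the cookie ID the browser should keep."""
        if cookie_id is None:
            # First contact: mint a random ID for the browser to store as a cookie.
            cookie_id = uuid.uuid4().hex
        # Later requests from any site carry the same cookie, linking the visits.
        self.profiles[cookie_id].append(embedding_site)
        return cookie_id
```

Calling `handle_request(None, "news.example")` mints an ID. Passing that ID back along with `"shop.example"` puts both sites in the same profile, which is how the tracker builds up a record of the browser across sites.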

How remote work disrupted global supply chains

To make matters worse, many U.S.-based distributors and retailers decided to
bulk up their inventories to hedge against shortages. The surge of e-commerce
contributed to the disruptive spiral by making two-day shipping a necessity. In
addition, the resulting shortage of warehouse space worsened bottlenecks by
pushing supplies back to shipping docks and freight terminals. While remote work
isn’t entirely to blame for the supply chain crisis, it clearly kicked off a
sequence of events that took on a life of its own. Zoom, Google Docs, and Amazon
undermined the assumption that history would repeat itself. When is it all going
to end? Experts disagree. Most say things will probably improve for the rest of
this year and return to something close to normal by the end of 2023. But even
the Federal Reserve Bank of Cleveland recently admitted that the sources it
relies upon for intelligence “are mostly based on hope rather than on concrete
evidence.” In the meantime, the crisis has also cast the spotlight on the
delicate interconnections that hold the world’s supply lines together and the
effects that minor disruptions at the far end of the chain can have further
upstream.

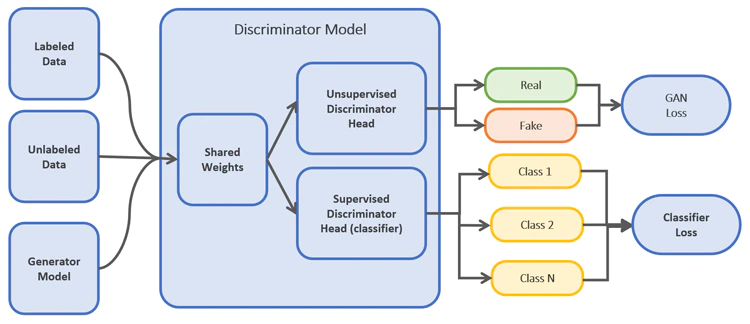

Network security depends on two foundations you probably don’t have

Any use of the network creates traffic and traffic patterns. Malware that’s
probing for vulnerabilities is an application, and it also generates a traffic
pattern. If AI/ML can monitor traffic patterns, it can pick out a malware probe
from normal application access. Even if malware infects the device of a user who
has the right to access a set of applications, it’s unlikely the malware would
be able to duplicate the traffic pattern that user generated with legitimate
access. Thus, AI/ML could detect a difference and create an alert. That alert,
like a journal alert on unauthorized connections, would then be followed up to
validate the state of the user’s device security. The advantage of AI/ML traffic
pattern analysis is that it can be effective even when user identity is
difficult to pin down and explicit connection authorization is therefore
problematic. In fact, you can do traffic pattern analysis at any level, from
single users to the entire network. Think of it as a kind of
source/destination-address-logging process: at a given point, have I seen
packets from or to this address or this subnetwork before? If not, a more
detailed analysis, or even an alert, may be in order.
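The "have I seen this address or subnetwork before?" question can be sketched with Python's standard `ipaddress` module. The sketch makes several assumptions. It handles IPv4 only and treats a /24 prefix as the definition of "subnetwork." It keeps state in in-memory sets instead of persistent logs. The class name and verdict strings are hypothetical. It does not attempt the AI/ML pattern analysis described above; it only shows the first-seen check that could trigger one.

```python
import ipaddress
from typing import Set


class SeenAddressMonitor:
    """First-seen check for individual hosts and their enclosing subnets."""

    def __init__(self, prefix_len: int = 24) -> None:
        self.prefix_len = prefix_len  # assumed size of a "subnetwork"
        self.seen_hosts: Set[ipaddress.IPv4Address] = set()
        self.seen_subnets: Set[ipaddress.IPv4Network] = set()

    def observe(self, addr: str) -> str:
        """Classify an address, then remember it and its subnet."""
        ip = ipaddress.IPv4Address(addr)
        # strict=False masks off the host bits, e.g. 10.0.0.5/24 -> 10.0.0.0/24.
        subnet = ipaddress.IPv4Network(f"{ip}/{self.prefix_len}", strict=False)
        if ip in self.seen_hosts:
            verdict = "known"
        elif subnet in self.seen_subnets:
            verdict = "new host in known subnet"  # possibly worth a closer look
        else:
            verdict = "new subnet"  # candidate for detailed analysis or an alert
        self.seen_hosts.add(ip)
        self.seen_subnets.add(subnet)
        return verdict
```

To cover traffic both "from or to" an address, call `observe` on the source and on the destination of each packet or flow record. A "new subnet" verdict corresponds to the case the paragraph suggests escalating.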

Building resilience against emerging security threats

Threats such as malware and data breaches almost always rely on misconfigured
systems to succeed. Perhaps a default password hasn’t been changed, a cloud
storage instance has been set to public, or a dangerous port is accidentally
left open to the internet. These are all errors that can be hard to spot in a
complex data centre. Finding them is a time-consuming task that may not be a top
priority amongst competing business goals, so the vulnerability remains
unidentified. Configuration management tools help here by scanning the entire
estate, from cloud storage to local servers and from websites to network
devices, and identifying misconfigurations. They are vendor agnostic and surface
anomalies that might otherwise go unnoticed until it is too late. Auditing the
estate in this way gives CISOs the visibility and control they need to
effectively monitor their estate and be proactive in remediating
misconfigurations. Armed with this insight, the company reduces its risk and
enhances its resilience.
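A configuration audit of this kind comes down to checking each asset against a set of rules. The Python sketch below checks the three misconfigurations named in the paragraph. The `Asset` fields and the `audit` function are illustrative assumptions, and so is the choice of ports 23 (Telnet), 445 (SMB) and 3389 (RDP) as "dangerous." None of it describes the behaviour of any particular configuration management product.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Assumed list of ports that usually should not be reachable from the internet.
RISKY_PORTS: Set[int] = {23, 445, 3389}


@dataclass
class Asset:
    """Hypothetical inventory record for one server, device or storage bucket."""
    name: str
    uses_default_password: bool = False
    public_storage: bool = False
    internet_open_ports: Set[int] = field(default_factory=set)


def audit(assets: List[Asset]) -> List[str]:
    """Return one human-readable finding per detected misconfiguration."""
    findings: List[str] = []
    for asset in assets:
        if asset.uses_default_password:
            findings.append(f"{asset.name}: default password not changed")
        if asset.public_storage:
            findings.append(f"{asset.name}: storage is publicly accessible")
        # Only report the open ports that appear on the risky list.
        for port in sorted(asset.internet_open_ports & RISKY_PORTS):
            findings.append(f"{asset.name}: port {port} open to the internet")
    return findings
```

The sketch keeps each rule as a simple check over one shared inventory format. A real tool would add a collector for each vendor that fills in those records, which is roughly what "vendor agnostic" implies.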

SQL Server 2022: Here’s what you need to know

If you’ve ever looked at the claims for blockchains and thought that an
append-only database could do the same without all the work of designing and
maintaining a distributed system that likely doesn’t scale to high-throughput
queries (or the environmental impact of blockchain mining), another feature that
started out in Azure SQL and is now coming to SQL Server 2022 is just what you
need. “Ledger brings the benefits of blockchains to relational databases by
cryptographically linking the data and their changes in a blockchain structure
to make the data tamper-evident and verifiable, making it easy to implement
multi-party business process, such as supply chain systems, and can also
streamline compliance audits,” Khan explained. For example, the quality of an
ice cream manufacturer’s ice cream depends both on the ingredients its
suppliers send and on the finished ice cream being shipped at the right
temperature. If the refrigerated truck has a fault, the cream might curdle, or
the ice cream might melt and then refreeze once it’s in the store freezer. By
collecting sensor information from everyone in its supply chain, the ice cream
manufacturer can track down where the problem is.
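"Cryptographically linking the data and their changes" can be illustrated with a generic SHA-256 hash chain in Python, applied to the ice cream example. This is not SQL Server's ledger implementation; you enable that feature in T-SQL, for example with the `LEDGER = ON` table option. The names `HashChainLog` and `entry_hash`, and the record fields, are hypothetical. Each entry stores the hash of its predecessor, so rewriting any earlier sensor reading makes verification fail.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: Dict[str, Any]) -> str:
    """Hash a record together with the previous entry's hash (canonical JSON)."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class HashChainLog:
    """Append-only log in which each entry commits to every entry before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, record: Dict[str, Any]) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        # Store a copy so later changes to the caller's dict can't slip in unhashed.
        self.entries.append(
            {"record": dict(record), "prev": prev, "hash": entry_hash(prev, record)}
        )

    def verify(self) -> bool:
        """Recompute every link; an edited or reordered entry breaks the chain.

        Truncating the newest entries is NOT detected unless the latest hash
        is also stored somewhere outside this log.
        """
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True
```

After a supplier reading and a truck reading are appended, `verify()` returns `True`. If someone later edits the supplier's temperature in place, it returns `False`. Because of the truncation caveat in the docstring, tamper-evidence schemes typically keep their latest digests outside the data being protected.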

7 benefits of using design review in your agile architecture practices

For an enterprise to practice architecture in an agile environment, several
architects and designers must support the project's agile value streams, which
are the actions taken from conception to delivery and support that add value to
a product or service. One organization may have a distinct team of architects
producing solution designs and templates. Another might place the architect
inside the agile squad in a dual senior engineer role. ... The things involved
in a design review include the following. The designer is the person who wants
to solve a problem. The documentation is the document at the center of
attention; it contains information regarding all aspects of the problem and the
proposed solution. The reviewer is the person who will review the
documentation. The process includes the agreed-upon rules and interactions that
define the designer's and reviewer's communications. It may stand alone or be
part of a bigger process. For example, in a software development life cycle it
could precede development, and in an API specification it could include
evaluating changes. The review scope is the area the reviewer tries to cover
when reviewing the documentation (technical or not).

Quote for the day:

"You've got to risk the terrible and pathetic, in order to get to the graceful and elegant."