Quote for the day:

“The greatest leader is not necessarily the one who does the greatest things. He is the one that gets the people to do the greatest things.” -- Ronald Reagan

Neuromorphic computing and the future of edge AI

While quantum computing (QC) captures the mainstream headlines, neuromorphic computing has positioned itself as a force in the next era of AI. Conventional AI relies heavily on GPU- and TPU-based architectures, whereas neuromorphic systems mimic the parallel, event-driven nature of the human brain. ... Neuromorphic hardware has shown promise in edge environments where power efficiency, latency and adaptability matter most. From wearable medical devices to battlefield robotics, systems that can “think locally” without requiring constant cloud connectivity offer clear advantages. ... As neuromorphic computing matures, ethical and sustainability considerations will shape adoption as much as raw performance. Because spiking neural networks demand less energy than GPUs, they can reduce carbon footprints, aligning with global decarbonization targets. At the same time, ensuring that neuromorphic models are transparent, bias‑aware and auditable is critical for applications in healthcare, defense and finance. Calls for AI governance frameworks now explicitly include neuromorphic AI, reflecting its potential role in high‑stakes decision‑making. Embedding sustainability and ethics into the neuromorphic roadmap will help ensure that efficiency gains do not come at the cost of fairness or accountability.
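
To make "event-driven" concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook model used in spiking neural networks. It is a plain-Python simulation, not code for any particular neuromorphic chip, and the parameter values (`tau`, `threshold`, `dt`) are illustrative assumptions. The neuron accumulates input, leaks charge over time and emits a discrete spike only when its potential crosses a threshold; between spikes it produces no output, so downstream neurons only have work to do when a spike arrives.

```python
from typing import List


def lif_spikes(
    inputs: List[float],
    tau: float = 10.0,       # membrane time constant in steps (assumed value)
    threshold: float = 1.0,  # firing threshold (assumed value)
    dt: float = 1.0,         # simulation step size
) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron.

    Returns the time-step indices at which the neuron spiked. No output is
    produced between spikes, which is the event-driven property.
    """
    v = 0.0  # membrane potential
    spike_times: List[int] = []
    for t, current in enumerate(inputs):
        # Euler step of dv/dt = -v/tau + I: leak toward zero, integrate input.
        v += dt * (-v / tau + current)
        if v >= threshold:
            spike_times.append(t)  # emit a spike event
            v = 0.0                # reset the potential after firing
    return spike_times


if __name__ == "__main__":
    # Silence for 5 steps, then a constant drive of 0.3 for 15 steps.
    stimulus = [0.0] * 5 + [0.3] * 15
    print(lif_spikes(stimulus))  # prints [8, 12, 16]
```

With no input the neuron stays silent; a steady input produces a regular train of sparse spikes rather than a dense stream of values.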

10 security leadership career-killers — and how to avoid them

“Security has evolved from being the end goal to being a business-enabling function,” says James Carder, CISO at software maker Benevity. “That means security strategies, communications, planning, and execution need to be aligned with business outcomes. If security efforts aren’t returning meaningful ROI, CISOs are likely doing something wrong. Security should not operate as a cost center, and if we act or report like one, we’re failing in our roles.” ... CISOs generally know that the security function can’t be the “department of no.” But some don’t quite get to a “yes,” either, which means they’re still failing their organizations in a way that could stymie their careers, says Aimee Cardwell, CISO in residence at tech company Transcend and former CISO of UnitedHealth Group. ... CISOs who are too rigid with the rules do a disservice to their organizations and their professional prospects, says Cardwell. Such a situation recently came up in her organization, where one of her team members initially refused to let workers use a third-party application, pointing to a security policy barring such apps. ... CISOs who don’t have a firm grasp on all that they must secure won’t succeed in their roles. “If they don’t have visibility, if they can’t talk about the effectiveness of the controls, then they won’t have credibility and the confidence in them among leadership will erode,” Knisley says.

A CIO's Evolving Role in the Generative AI Era

The dual mandate facing CIOs today is demanding but unavoidable. They must deliver quick AI pilots that boards can take to shareholders while also enforcing guardrails on security, ethics and cost. Too much caution can make CIOs irrelevant. This balancing act requires not only technical fluency but also narrative skill: the ability to translate AI experiments into business outcomes that CEOs and boards can trust can make CIOs a powerful force in the organization. The MIT report highlights another critical decision point: whether to build or buy. Many enterprises attempt internal builds, but partnerships with external AI vendors succeed twice as often. CIOs, pressured for fast results, must be pragmatic about when to build and when to partner. Gen AI does not, and never will, replace the CIO role, but it does demand course corrections. The CIO who once focused on alignment must now lead business transformation. Those who succeed will act less as traditional CIOs and more as AI diplomats, bridging hype with pragmatism, connecting technological opportunities to shareholder value and balancing the boardroom's urgency with operational reality. As AI advances, so does the CIO's role, but only if CIOs evolve with it. Their reporting line to the CEO symbolizes greater trust and higher stakes. Unlike previous technology cycles, AI has brought the CIO to the forefront of transformation.

Building an AI Team May Mean Hiring Where the Talent Is, Not Where Your Bank Is

Much of banking's adaptation to AI requires close collaboration between AI talent and people who understand how the banking processes involved need to work. This will put people closer together, literally, to allow the frequent interactions, both quick and in-depth, that make collaboration work. Paradoxically, increased automation needs more face-to-face dealings at the formative stages. However, the "where" of the space will also hinge on where AI and innovation talent can be recruited, where that talent is being developed and wants to work, and the types of offices that talent will be attracted to. ... "Banks are also recruiting for emerging specialties in responsible AI and AI governance, ensuring that their AI initiatives are ethical, compliant and risk-managed," the report says. "As ‘agentic AI’ — autonomous AI agents — and generative AI gain traction, firms will need experts in these cutting-edge fields too." ... Decisions don’t stop at the border anymore. Jesrani says that savvy banks look for pockets of talent as well. ... "Banks are contemplating their global strategies because emerging markets can provide them with talent and capabilities that they may not be able to obtain in the U.S.," says Haglund. "Or there may be things happening in those markets that they need to be a part of in order to advance their core business capabilities."

How Data Immaturity is Preventing Advanced AI

Data immaturity, in the context of AI, refers to an organisation’s underdeveloped or inadequate data practices, which limit its ability to leverage AI effectively. It encompasses issues with data quality, accessibility, governance, and infrastructure. Critical signs of data immaturity include inconsistent, incomplete, or outdated data that leads to unreliable AI outcomes; data silos across departments that hinder access and comprehensive analysis; and weak data governance, caused by a lack of policies on data ownership, compliance and security, which introduces risks and restricts AI usage. ... Data immaturity also erodes trust in analysis and in the predictability of execution. That puts a damper on any plans to leverage AI in a more autonomous manner, whether for business or operational process automation. A recent study by Kearney found that organisations globally expect to increase data and analytics budgets by 22% over the next three years as AI adoption scales. Fragmented data limits the predictive accuracy and reliability of AI, both of which are crucial for autonomous functions where decisions are made without human intervention. As a result, organisations must get their data houses in order before they can truly take advantage of AI’s potential to optimise workflows and free up valuable time for humans to focus on strategy and design, tasks for which most AI is not yet well suited.
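
The first sign of data immaturity named above (inconsistent, incomplete or outdated data) can be measured before any model is trained. Below is a minimal profiling sketch under loud assumptions: records arrive as Python dicts, the field names `customer_id`, `country` and `updated_at` are hypothetical, and the 365-day freshness window is arbitrary. Real data-quality tooling would apply far richer rules; this only shows the three basic counts.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Set


@dataclass
class QualityReport:
    total: int
    incomplete: int    # missing or empty required fields
    stale: int         # not updated within the freshness window
    inconsistent: int  # categorical value outside the agreed vocabulary


def profile_records(
    records: Iterable[Dict[str, object]],
    required: List[str],
    category_field: str,
    allowed_values: Set[str],
    now: datetime,
    max_age: timedelta = timedelta(days=365),  # assumed freshness window
) -> QualityReport:
    """Count records showing three common signs of data immaturity."""
    total = incomplete = stale = inconsistent = 0
    for rec in records:
        total += 1
        # Incomplete: any required field absent, None or an empty string.
        if any(rec.get(name) in (None, "") for name in required):
            incomplete += 1
        # Outdated: hypothetical 'updated_at' timestamp missing or too old.
        updated = rec.get("updated_at")
        if not isinstance(updated, datetime) or now - updated > max_age:
            stale += 1
        # Inconsistent: e.g. "U.K." where the agreed code is "UK".
        if rec.get(category_field) not in allowed_values:
            inconsistent += 1
    return QualityReport(total, incomplete, stale, inconsistent)


if __name__ == "__main__":
    rows = [
        {"customer_id": "c1", "country": "UK", "updated_at": datetime(2024, 11, 1)},
        {"customer_id": "", "country": "U.K.", "updated_at": datetime(2022, 5, 1)},
        {"customer_id": "c3", "country": "DE", "updated_at": None},
    ]
    report = profile_records(
        rows,
        required=["customer_id", "country"],
        category_field="country",
        allowed_values={"UK", "DE", "FR"},
        now=datetime(2025, 1, 1),
    )
    # prints QualityReport(total=3, incomplete=1, stale=2, inconsistent=1)
    print(report)
```

Tracking these counts over time gives a simple, if crude, signal of whether data practices are maturing before autonomous AI is layered on top.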

From Reactive Tools to Intelligent Agents: Fulcrum Digital’s AI-First Transformation

In a mature solution, the LLM is just one layer. Then you require the integration layer: how you integrate it. Every customer has multiple assets in their business which have to connect with LLM layers. Every business has many existing applications and new applications, and businesses are also buying new AI agents from the market. How do you bring new AI agents, old existing systems, and modern systems of the business together, integrated with the LLM? That is one aspect.

The second aspect is that every business has its own data, so the LLM has to be trained on those datasets. Copilot and OpenAI's models are trained on vast amounts of data, but that is a general-purpose LLM. Industry wants SLMs: small language models, private language models, and industry-oriented language models. So LLMs have to be fine-tuned for the industry and also fine-tuned on each business's own data. People are now coming to realise that LLMs will never give you 100 per cent accurate solutions, no matter which LLM you choose. That is what customers and everybody else are now learning. The difference between us and others: many players who are new to the game deliver 70–75 per cent accuracy with LLMs. Because we have matured with multiple LLMs coexisting, and have used those LLMs together to mature our Ryze platform, we are able to deliver 93–95 per cent accuracy or higher.

You Didn't Get Phished — You Onboarded the Attacker

Many organizations respond by overcorrecting: "I want my entire company to be as locked down as my most sensitive resource." It seems sensible, until work slows to a crawl. Without nuanced controls that let your security policies distinguish between legitimate workflows and unnecessary exposure, rigidly locking everything down across the organization will grind productivity to a halt. Employees need access to do their jobs. If security policies are too restrictive, employees will either find workarounds or continually ask for exceptions. Over time, risk creeps in as exceptions become the norm. This accumulation of internal exceptions slowly pushes you back towards the "castle and moat" approach: the walls are fortified on the outside, but everything is open on the inside. And giving employees the key to unlock everything inside so they can do their jobs means you are giving one to Jordan, too. ... A practical way to begin is by piloting zero standing privileges (ZSP) on your most sensitive system for two weeks. Measure how access requests, approvals, and audits flow in practice. Quick wins here can build momentum for wider adoption and prove that security and productivity don't have to be at odds. ... When work demands more access, employees can request it through time-bound, auditable workflows. Just enough access is granted just in time, then removed. By taking steps to operationalize zero standing privileges, you empower legitimate users to move quickly without leaving persistent privileges lying around for Jordan to find.
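
The just-in-time, time-bound access pattern described above can be sketched in a few lines of Python. This is a minimal in-memory illustration under stated assumptions: `AccessBroker`, its methods and the 900-second cap used below are hypothetical, approval is reduced to a boolean, and the clock is injectable so expiry can be demonstrated. It is not any vendor's ZSP product or API. The key property is that no user holds access by default: a grant exists only after an approved request, is capped in duration, and is revoked once it expires, with each step written to an audit log.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class AccessBroker:
    """Hypothetical broker: time-bound grants instead of standing privileges."""

    max_ttl: float = 3600.0                      # assumed cap on any grant, in seconds
    clock: Callable[[], float] = time.monotonic  # injectable so tests can fake time
    _grants: Dict[Tuple[str, str], float] = field(default_factory=dict, init=False)
    audit_log: List[str] = field(default_factory=list, init=False)

    def request(self, user: str, resource: str, ttl: float, approved: bool) -> bool:
        """Record an access request; grant it only if an approver said yes."""
        if not approved:
            self.audit_log.append(f"DENY {user} {resource}")
            return False
        ttl = min(ttl, self.max_ttl)  # just enough time, never open-ended
        self._grants[(user, resource)] = self.clock() + ttl
        self.audit_log.append(f"GRANT {user} {resource} ttl={ttl:g}s")
        return True

    def has_access(self, user: str, resource: str) -> bool:
        """Check for a live grant; an expired grant is revoked when checked."""
        expires = self._grants.get((user, resource))
        if expires is None:
            return False  # no approved request, no access: nothing is standing
        if self.clock() >= expires:
            del self._grants[(user, resource)]
            self.audit_log.append(f"EXPIRE {user} {resource}")
            return False
        return True


if __name__ == "__main__":
    fake_now = [0.0]
    broker = AccessBroker(max_ttl=900, clock=lambda: fake_now[0])
    broker.request("alice", "payroll-db", ttl=3600, approved=True)  # capped at 900 s
    print(broker.has_access("alice", "payroll-db"))   # True
    print(broker.has_access("jordan", "payroll-db"))  # False: never granted
    fake_now[0] = 901.0
    print(broker.has_access("alice", "payroll-db"))   # False: grant expired
    print(broker.audit_log)
```

Revoking lazily inside `has_access` keeps the sketch short; a real deployment would more likely also revoke grants proactively on a schedule so that expired credentials never linger in the target system.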

OT Security: When Shutting Down Is Not an Option

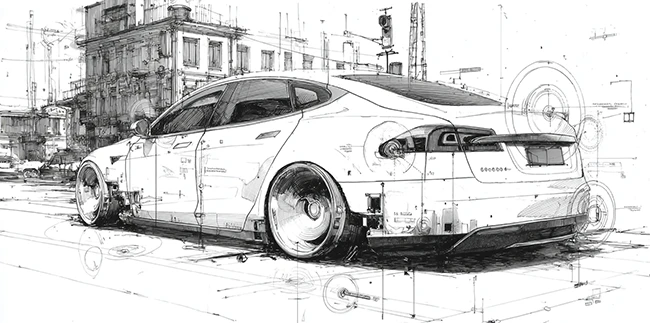

Some of the most urgent and disruptive threats today are unfolding far from the keyboard, in the operational technology (OT) environments that keep factories running, energy flowing and transportation systems moving. In these sectors, digital attacks can lead to physical consequences, and defending OT environments demands specialized skills. Real-world incidents across manufacturing and critical infrastructure show how quickly operations can be disrupted when OT systems are not adequately protected. Just this week, Jaguar Land Rover disclosed that a cyberattack "severely disrupted" its automotive manufacturing operations. ... OT environments present challenges that differ sharply from traditional IT. While security is improving, OT security teams must protect legacy control systems running outdated firmware that is difficult to patch. Operators need to prioritize uptime and safety over system changes, and IT and OT teams frequently work in silos. These conditions mean that breaches can have physical as well as digital consequences, from halting production to endangering lives. Training tailored to OT is essential to secure critical systems while maintaining operational continuity. ... An OT cybersecurity learning ecosystem is not a one-time checklist but a continuous program. The following elements help organizations choose training that meets current needs while building capacity for ongoing improvement.

Connected cars are racing ahead, but security is stuck in neutral

Connected cars are essentially digital platforms with multiple entry points for attackers. The research highlights several areas of concern. Remote access attacks can target telematics systems, wireless interfaces, or mobile apps linked to the car. Data leaks are another major issue because connected cars collect sensitive information, including location history and driving behavior, which is often stored in the cloud. Sensors present their own set of risks: cameras, radar, lidar, and GPS can be manipulated, confusing driver assistance systems. Once inside a vehicle, attackers can move deeper by exploiting the CAN bus, which connects key systems such as brakes, steering, and acceleration. ... Most drivers want information about what data is collected and where it goes, yet very few said they have received that information. Brand perception also plays a role. Many participants prefer European or Japanese brands, while some expressed distrust toward vehicles from certain countries, citing political concerns, safety issues, or perceived quality gaps. ... Manufacturers are pushing out new software-defined features, integrating apps, and rolling out over-the-air updates. This speed increases the number of attack paths and makes it harder for security practices and rules to keep up.