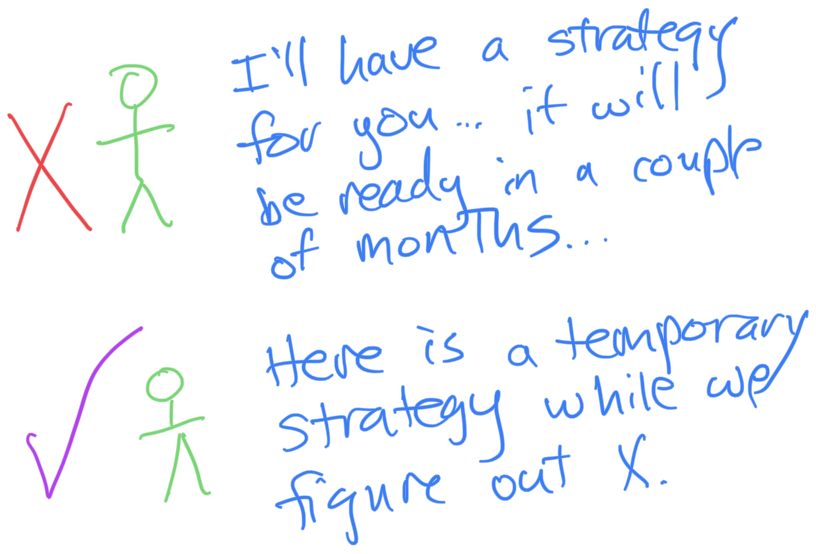

7 lies IT leaders should never tell

Things break, and in most cases, it comes as a surprise. IT consists of many

systems requiring different degrees of connectivity and monitoring, making it

difficult to know absolutely everything at every moment. The key to minimizing

failures is to be proactive rather than simply waiting for bad things to happen.

CIOs should not only expect things to break but also be honest about this with

their team members and business colleagues. “Eat, sleep, and live that life,”

advises Andre Preoteasa, internal IT director at IT business management firm

Electric. “There are things you know, things you don’t know, and things you

don’t know you don’t know,” he observes. “Write down the first two, then think

endlessly about the last one — it will make you more prepared for the unknowns

when they happen.” Preoteasa stresses the importance of building and maintaining

detailed disaster recovery and business continuity plans. “IT leaders that don’t

have [such plans] put the company in a bad position,” he notes. “The exercise

alone of writing things down shows you’re thinking about the future.”

Amid Legal Fallout, Cyber Insurers Redefine State-Sponsored Attacks as Act of War

Acts of war are a common insurance exclusion. Traditionally, exclusions

required a "hot war," such as what we see in Ukraine today. However, courts

are starting to recognize cyberattacks as potential acts of war without a

declaration of war or the use of land troops or aircraft. The state-sponsored

attack itself constitutes a war footing, the carriers maintain. ...

Effectively, Forrester's Valente notes, larger enterprises might have to set

aside large stores of cash in case they are hit with a state-sponsored attack.

Should insurance carriers be successful in asserting in court that a

state-sponsored attack is, by definition, an act of war, no company will have

coverage unless they negotiate that into the contract specifically to

eliminate the exclusion. When buying cyber insurance, "it is worth having a

detailed conversation with the broker to compare so-called 'war exclusions'

and determining whether there are carriers offering more favorable terms,"

says Scott Godes, partner and co-chair of the Insurance Recovery and

Counseling Practice and the Data Security & Privacy practice at District

of Columbia law firm Barnes & Thornburg.

Top 5 challenges of implementing industrial IoT

Scalability is another challenge faced by professionals trying to make

progress with their IIoT implementations. Bain’s 2022 study of IIoT

decision-makers indicated that 80% of those who purchase IIoT technology scale

fewer than 60% of their planned projects. The top three reasons why those

respondents failed to scale their projects were that the integration effort

was overly complicated and required too much effort, the associated vendors

could not support scaling, and the life cycle support for the project was too

expensive or not credible. One of the study’s takeaways was that hardware

could help close gaps that prevent company decision-makers from scaling.

Another best practice is for people to take a long-term viewpoint with any

IIoT project. Some people may only think about what it will take to implement

an initial proof of concept. That’s just a starting point. They’ll have to

look beyond the early efforts if they want to eventually scale the project,

but many of the things learned during the starting phase of a project can be

beneficial to know during later stages.

AWS And Blockchain

The customer CIO, an extremely smart person, spoke up, in beautifully-rounded

European vowels: “Here’s a use case I’ve been told about that’s on my mind.”

He named a region in Asia and explained that the small farmers there mark

their landholdings carefully, but then the annual floods sometimes wash the

markers away. Then unscrupulous larger landowners use the absence of markers

to cut away at the smallholdings of the poorest. “But if the boundary markers

were on the blockchain,” he said, “they wouldn’t be able to do that, would

they?” ... I thought. Then said “As a lifelong technologist, I’ve always been

dubious about technology as a solution to a political problem. It seems a good

idea to have a land-registry database but, blockchain or no, I wonder if the

large landowners might be able to find another way to fiddle the records and

still steal the land? Perhaps this is more about power than boundary markers?”

Later in the ensuing discussion I cautiously offered something like the

following, locking eyes on the CIO: “There are many among Amazon’s senior

engineers who think blockchain is a solution looking for a problem.” He went

entirely expressionless and the discussion moved on.

The key message is that, before data is persisted into the storage layers

(Bronze, Silver, Gold), it must pass data quality checks, and corrupted

records that fail those checks must be dealt with separately before they

are written into the storage layer. ... The “Bronze

=> Silver => Gold” pattern is a type of data flow design, also called a

medallion architecture. A medallion architecture is designed to incrementally

and progressively improve the structure and quality of data as it flows

through each layer of the architecture. This is why it is relevant for today’s

article regarding data quality and reliability. ... Generally, the data quality

requirements become more and more stringent as the data flows from raw to

bronze to silver to gold, because the gold layer directly serves the business.

You should, by now, have a high-level understanding of what a medallion data

design pattern is and why it is relevant for a data quality discussion.
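
As a minimal sketch of that idea (not any particular platform's implementation), the Python below runs a quality gate in front of each layer and sets failing records aside instead of writing them. The names `run_quality_gate` and `LayerResult`, the individual checks, and the sample records are all hypothetical and chosen only for illustration; the checks get stricter from bronze to gold.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Record = dict
Check = Callable[[Record], bool]


@dataclass
class LayerResult:
    """Outcome of one quality gate: clean records and quarantined ones."""
    passed: List[Record] = field(default_factory=list)
    quarantined: List[dict] = field(default_factory=list)


def run_quality_gate(records: List[Record], checks: Dict[str, Check]) -> LayerResult:
    """Split records into those that pass every check and those that fail any."""
    result = LayerResult()
    for record in records:
        failed = [name for name, check in checks.items() if not check(record)]
        if failed:
            # Failing records are kept apart, with the reasons, for separate handling.
            result.quarantined.append({"record": record, "failed_checks": failed})
        else:
            result.passed.append(record)
    return result


def _is_number(value) -> bool:
    return isinstance(value, (int, float)) and not isinstance(value, bool)


# Hypothetical checks: each layer inherits the previous layer's checks and adds more.
BRONZE_CHECKS = {"has_id": lambda r: r.get("id") is not None}
SILVER_CHECKS = {**BRONZE_CHECKS, "amount_is_number": lambda r: _is_number(r.get("amount"))}
GOLD_CHECKS = {
    **SILVER_CHECKS,
    "amount_non_negative": lambda r: _is_number(r.get("amount")) and r["amount"] >= 0,
}

# Hypothetical raw input containing three kinds of bad record.
raw = [
    {"id": 1, "amount": 10.0},
    {"id": None, "amount": 5.0},
    {"id": 2, "amount": "oops"},
    {"id": 3, "amount": -4.0},
]

bronze = run_quality_gate(raw, BRONZE_CHECKS)
silver = run_quality_gate(bronze.passed, SILVER_CHECKS)
gold = run_quality_gate(silver.passed, GOLD_CHECKS)

for layer_name, layer in (("bronze", bronze), ("silver", silver), ("gold", gold)):
    print(layer_name, "passed:", len(layer.passed), "quarantined:", len(layer.quarantined))
```

Recording which checks failed alongside each quarantined record is one possible design choice; it lets the bad records be reviewed or repaired later without blocking the clean ones.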

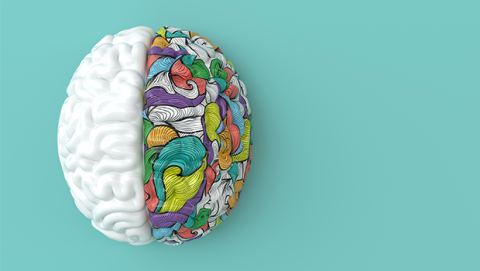

The Digital Skills Gap is Jeopardising Growth

With people staying in workforces longer than ever before and careers spanning

five decades becoming the norm, upskilling at a massive scale is needed.

However, this need is not fully addressed; a worrying 6 in 10 (58%) people we

surveyed in the UK told us that they have already been negatively affected by

a lack of digital skills. Organisations can’t just rely on recruiting from a

limited pool of digital specialists. More focus is also needed by

organisations to upskill their own employees, in both tech and human digital

skills. At a recent digital skills panel debate in Manchester, the director of

a recruitment agency stated bluntly that: “Many businesses are currently

overpaying to bring in external digital skills because of increased

competition and this just isn’t sustainable. Upskilling your current teams

should be as important as recruiting in new talent to keep costs in check and

create a more balanced and loyal workforce.” It’s crucial to upskill

employees, not only to get the necessary digital capabilities in our

organisations, but to build loyalty and retain valued team members.

Emerging sustainable technologies – expert predictions

AI and automation technologies offer a smart solution, too; they could channel

energy when it is plentiful into less time-sensitive uses, such as charging up

electric vehicles or heating storage heaters. For example, Drax has looked at

ways of combining AI with smart meters to channel our energy use, so that we

take advantage of those periods when energy creation exceeds demand. The

debate over whether we need new technologies or just need to scale up existing

sustainable technologies has even reached the higher echelons of power. John

Kerry, US special presidential envoy for climate, and a certain Bill Gates say

we need technologies which haven’t been invented yet. World-renowned climate

change scientist Michael Mann disagrees. In his expert opinion, we just need

to scale up existing technologies. ... But there is one other application — an

application which will create extraordinary opportunity and open the way for

many technologies we have been considering up to now. When all of our power is

provided by renewables, the total annual supply is likely to exceed total

annual demand by a large margin.

Women in IT: Progress in Workforce Culture, But Problems Persist

From Milică's perspective, the greatest challenge facing women in IT today is

a lack of role models. “Women need to be the role models who can inspire young

minds, especially more women and minority leaders,” she says. “Even at the

individual level, each of us -- teachers, parents, and other influential

adults -- can plant the seed and grow the understanding among young people of

the importance of IT jobs, and how that career path can make a difference in

our world and society.” She adds that hiring bias and pay inequality, along with

the lack of female role models, leaders, and advancement opportunities, all

discourage women from pursuing a STEAM career. “Women have to work much harder

both to get hired and to advance their careers -- which perhaps explains why

52% of women in cybersecurity hold postgraduate degrees, compared to only 44%

of men,” Milică notes. She adds that the industry also hasn’t done a great job

sparking interest at an early age. “Attention to a career path starts with

children as early as elementary school, and by middle or high school, many

students will have made their decisions,” she explains.

EPSS explained: How does it compare to CVSS?

EPSS aims to help security practitioners and their organizations improve

vulnerability prioritization efforts. The number of vulnerabilities in

today’s digital landscape is growing exponentially, due to factors such as

the increased digitization of systems and

society, increased scrutiny of digital products, and improved research and

reporting capabilities. Organizations generally can only fix between 5% and

20% of vulnerabilities each month, EPSS claims. Fewer than 10% of published

vulnerabilities are ever known to be exploited in the wild. Longstanding

workforce issues are also at play: the annual ISC2 Cybersecurity Workforce

Study shows shortages exceeding two million cybersecurity professionals

globally. These factors warrant organizations having a coherent

and effective approach to aid in prioritizing vulnerabilities that pose the

highest risk to their organization to avoid wasting limited resources and

time. The EPSS model aims to provide some support by producing a score for

the probability that a vulnerability will be exploited in the next 30 days.

Scores range between 0 and 1 (0% to 100%).
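
To make the prioritization idea concrete, here is a rough sketch, assuming a team that can only remediate a fixed fraction of its open vulnerabilities in a cycle. It ranks vulnerabilities by their 0-to-1 exploitation probability and keeps the top slice. The function names, the placeholder identifiers, and the scores are invented for illustration and are not real EPSS data.

```python
import math
from typing import Dict, List


def prioritize(scores: Dict[str, float], capacity_fraction: float) -> List[str]:
    """Return the highest-probability vulnerabilities that fit the remediation capacity.

    scores maps a vulnerability id to a probability in [0, 1].
    capacity_fraction is the share of vulnerabilities the team can fix, in (0, 1].
    """
    if not 0 < capacity_fraction <= 1:
        raise ValueError("capacity_fraction must be in (0, 1]")
    for vuln_id, score in scores.items():
        if not 0 <= score <= 1:
            raise ValueError(f"score for {vuln_id} is outside [0, 1]")
    # Always fix at least one item, even when the fraction rounds down to zero.
    budget = max(1, math.floor(len(scores) * capacity_fraction))
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [vuln_id for vuln_id, _ in ranked[:budget]]


def as_percent(score: float) -> str:
    """Render a 0-to-1 probability as a percentage string."""
    return f"{score:.1%}"


# Hypothetical scores; the identifiers are placeholders, not real CVEs.
EXAMPLE_SCORES = {
    "CVE-EXAMPLE-0001": 0.004,
    "CVE-EXAMPLE-0002": 0.912,
    "CVE-EXAMPLE-0003": 0.051,
    "CVE-EXAMPLE-0004": 0.330,
    "CVE-EXAMPLE-0005": 0.001,
    "CVE-EXAMPLE-0006": 0.087,
    "CVE-EXAMPLE-0007": 0.640,
    "CVE-EXAMPLE-0008": 0.012,
    "CVE-EXAMPLE-0009": 0.002,
    "CVE-EXAMPLE-0010": 0.020,
}

# With capacity to fix 20% of ten vulnerabilities, two are selected.
top = prioritize(EXAMPLE_SCORES, capacity_fraction=0.2)
for vuln_id in top:
    print(vuln_id, as_percent(EXAMPLE_SCORES[vuln_id]))
```

Ranking purely by probability is a simplification; in practice a team would likely weigh the score alongside severity and asset importance.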

Could it be quitting time?

The book tackles a challenge that proves stubbornly difficult for most people.

Letting go of anything is hard, especially at a time when pundits tout the

power of grit, building resilience, and toughing it out. Duke provides

permission to see quitting as not only viable but often preferable, and she

explains why people rarely give up at the right time. “Quitting is hard, too

hard to do entirely on our own,” she writes. “We as individuals are riddled by

the host of biases, like the sunk cost fallacy, endowment effect, status quo

bias, and loss aversion, which lead to escalation of commitment. Our

identities are entwined in the things that we’re doing. Our instinct is to

want to protect that identity, making us stick to things even more.” These

biases—some of them unconscious—prompt us to stick with jobs that have lost

their appeal or value; hold on to losing stocks long after an inner voice

screams “Sell!”; or endure myriad other situations that no longer serve us.

Duke focuses far more on the thinking behind the decision to “quit or grit”

rather than on the decision’s final outcomes.

Quote for the day:

"Teamwork is the secret that makes common people achieve uncommon results." -- Ifeanyi Enoch Onuoha
