Pairing AI With Human Judgment Is Key To Avoiding 'Mount Stupid'

We are in the midst of what has been called the age of automation — a

transformation of our economy, as robots, algorithms, AI and machines become

integrated into everything we do. It would be a mistake to assume automation

corrects for the Dunning-Kruger effect. Like humans, dumb bots and even smart

AI often do not understand the limitations of their own competency. Machines

are just as likely to scale Mount Stupid, and it can just as likely lead to

disastrous decisions. But there is a fix for that. For humans, the fix is

adding some humility to our decision making. For machines, it means creating

flexible systems that are designed to make allowances and seamlessly handle

outlier events — the unknowns. Having humans integrated into that system

allows one to identify those potential automation failures. In automation,

this is sometimes referred to as a human-in-the-loop system. Much like an

autonomous vehicle, these systems keep improving as they acquire more input.

It’s not rigid; if the autonomous vehicle encounters a piece of furniture in

the road, a remote driver can step in to navigate around it in real-time while

the AI or automation system learns from the actions taken by the remote

driver. Human-in-the-loop systems are flexible and can seamlessly handle

outlier events.

UK government ramps up efforts to regulate tech giants

Digital Secretary Oliver Dowden said: “There is growing consensus in the UK

and abroad that the concentration of power among a small number of tech

companies is curtailing growth of the sector, reducing innovation and having

negative impacts on the people and businesses that rely on them. It’s time to

address that and unleash a new age of tech growth.” While the Furman report

found that there have been a number of efforts between the tech giants to

support interoperability, giving consumers greater freedom and flexibility,

these can be hampered by technical challenges and a lack of coordination. The

report's authors wrote that, in some cases, lack of interoperability are due

to misaligned incentives. “Email standards emerged due to co-operation but

phone number portability only came about when it was required by regulators.

Private efforts by digital platforms will be similarly hampered by misaligned

incentives. Open Banking provides an instructive example of how policy

intervention can overcome technical and coordination challenges and misaligned

incentives.” In July, when the DMT was setup, law firm Osborne Clarke warn

about the disruption to businesses increased regulations could bring.

Consumption of public cloud is way ahead of the ability to secure it

As the shift to working from home at the start of the year began, the old

reliance on the VPN showed itself to be a potential bottleneck to employees

being able to do what they are paid for. "I think the new mechanism that we've

been sitting on -- everyone's been doing for 20 years around VPN as a way of

segmentation -- and then the zero trust access model is relatively new, I

think that mechanism is really intriguing because [it] is so extensible to so

many different problems in use cases that VPN's didn't solve, and then other

use cases that people didn't even consider because there was no mechanism to

do it," Jefferson said. Going a step further, Eren thinks VPN usage between

client and sites is on life support, but VPNs themselves are not going away.

... According to Jefferson, the new best practice is to push security controls

as far out to the edge as possible, which undermines the role of traditional

appliances like firewalls to be able to enforce security, and people are

having to work out the best place for their controls in the new working

environment. "I used to be pretty comfortable. This guy, he had 10,000 lines

of code written on my Palo Alto or Cisco and every time we did a firewall

refresh every 10 years, we had to worry about the 47,000 ACLs [access control

list] on the firewall, and now that gets highly distributed," he said.

IOT & Distributed Ledger Technology Is Solving Digital Economy Challenges

DLTs can play an important function in driving data provenance yet ought to be

utilized in conjunction with technologies, for example, hardware root of trust

and immutable storage. Distributed ledger technology just keeps up a record of

the transactions themselves, so if you have poor or fake information, it will

simply disclose to you where that terrible information has been. All in all,

DLTs alone don’t address software engineering’s trash in, trash out issue, yet

offer impressive advantages when utilized in concert with technologies that

ensure data integrity. Blockchain innovation vows to be the missing

connection empowering peer-to-peer contractual behavior with no third party to

“certify” the IoT transaction. It answers the challenge of scalability, single

purpose of disappointment, time stamping, record, security, trust and

reliability in a steady way. Blockchain innovation could give a basic

infrastructure to two devices to straightforwardly move a piece of property,

for example, cash or information between each other with a secured and

reliable time-stamped contractual handshake. To empower message exchanges, IoT

devices will use smart contracts which at that point model the understanding

between the two gatherings.

Does small data provide sharper insights than big data?

Data Imbalance occurs when the number of data points for different classes is

uneven. Imbalance in most machine learning models is not a problem, but

imbalance is consequential in Small Data. One technique is to change the Loss

Function by adjusting weights, another example of how AI models are not

perfect. A very readable explanation of imbalance and its remedies can be

found here. Difficulty in Optimization is a fundamental problem since

that is what machine learning is meant to do. Optimization starts with

defining some kind of loss function/cost function. It ends with minimizing it

using one or the other optimization routines, usually Gradient Descent, an

iterative algorithm for finding a local minimum of a differentiable function

(first-semester calculus, not magic). But if the dataset is weak, the

technique may not optimize. The most popular remedy is Transfer Learning. As

the name implies, transfer learning is a machine learning method where a model

is reused to enhance another model. A simple explanation of transfer learning

can be found here. I wanted to do #3 first, because #2 is the more compelling

discussion about small data.

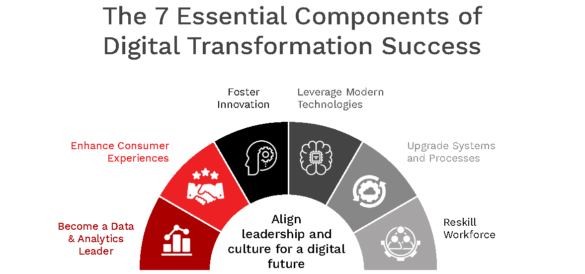

84% of global decision makers accelerating digital transformation plans

“New ways of working, initially broadly imposed by the global pandemic, are

morphing into lasting models for the future,” said Mickey North Rizza, program

vice president for IDC‘s Enterprise Applications and Digital Commerce research

practice. “Permanent technology changes, underpinned by improved

collaboration, include supporting hybrid work, accelerating cloud use,

increasing automation, going contactless, adopting smaller TaskApps, and

extending the partnership ecosystem. Enterprise application vendors need to

assess their immediate and long-term strategies for delivering collaboration

platforms in conjunction with their core software.” “If we’ve learned anything

this year, it’s that the business environment can change almost overnight, and

as business leaders we have to be able to reimagine our organizations and

seize opportunities to secure sustainable competitive advantage,” said Mike

Ettling, CEO, Unit4. “Our study shows what is possible with continued

investment in innovation and a people-first, flexible enterprise applications

strategy. As many countries go back into some form of lockdown, this

people-centric focus is crucial if businesses are to survive the challenges of

the coming months.”

How Apache Pulsar is Helping Iterable Scale its Customer Engagement Platform

Pulsar’s top layer consists of brokers, which accept messages from producers

and send them to consumers, but do not store data. A single broker handles

each topic partition, but the brokers can easily exchange topic ownership, as

they do not store topic states. This makes it easy to add brokers to increase

throughput and immediately take advantage of new brokers. This also enables

Pulsar to handle broker failures. ... One of the most important functions of

Iterable’s platform is to schedule and send marketing emails on behalf of

Iterable’s customers. To do this, we publish messages to customer-specific

queues, then have another service that handles the final rendering and sending

of the message. These queues were the first thing we decided to migrate from

RabbitMQ to Pulsar. We chose marketing message sends as our first Pulsar use

case for two reasons. First, because sending incorporated some of our more

complex RabbitMQ use cases. And second, because it represented a very large

portion of our RabbitMQ usage. This was not the lowest risk use case; however,

after extensive performance and scalability testing, we felt it was where

Pulsar could add the most value.

Algorithmic transparency obligations needed in public sector

The review notes that bias can enter algorithmic decision-making systems in a

number of ways. These include historical bias, in which data reflecting

previously biased human decision-making or historical social inequalities is

used to build the model; data selection bias, in which the data collection

methods used mean it is not representative; and algorithmic design bias, in

which the design of the algorithm itself leads to an introduction of bias.

Bias can also enter the algorithmic decision-making process because of human

error as, depending on how humans interpret or use the outputs of an

algorithm, there is a risk of bias re-entering the process as they apply their

own conscious or unconscious biases to the final decision. “There is also risk

that bias can be amplified over time by feedback loops, as models are

incrementally retrained on new data generated, either fully or partly, via use

of earlier versions of the model in decision-making,” says the review. “For

example, if a model predicting crime rates based on historical arrest data is

used to prioritise police resources, then arrests in high-risk areas could

increase further, reinforcing the imbalance.”

Regulation on data governance

The data governance regulation will ensure access to more data for the EU

economy and society and provide for more control for citizens and companies

over the data they generate. This will strengthen Europe’s digital sovereignty

in the area of data. It will be easier for Europeans to allow the use of data

related to them for the benefit of society, while ensuring full protection of

their personal data. For example, people with rare or chronic diseases may

want to give permission for their data to be used in order to improve

treatments for those diseases. Through personal data spaces, which are novel

personal information management tools and services, Europeans will gain more

control over their data and decide on a detailed level who will get access to

their data and for what purpose. Businesses, both small and large, will

benefit from new business opportunities as well as from a reduction in costs

for acquiring, integrating and processing data, from lower barriers to enter

markets, and from a reduction in time-to-market for novel products and

services. ... Member States will need to be technically equipped to ensure

that privacy and confidentiality are fully respected.

Why Vulnerable Code Is Shipped Knowingly

Even with a robust application security program, organizations will still deploy

vulnerable code! The difference is that they will do so with a thorough and

contextual understanding of the risks they're taking rather than allowing

developers or engineering managers — who lack security expertise — to make that

decision. Application security requires a constant triage of potential risks,

involving prioritization decisions that allow development teams to mitigate risk

while still meeting key deadlines for delivery. As application security has

matured, no single testing technique has helped development teams mitigate all

security risk. Teams typically employ multiple tools, often from multiple

vendors, at various points in the SDLC. Usage varies, as do the tools that

organizations deem most important, but most organizations end up utilizing a set

of tools to satisfy their security needs. Lastly, while most organizations

provide developers with some level of security training, more than 50% only do

so annually or less often. This is simply not frequent or thorough enough to

develop secure coding habits. While development managers are often responsible

for this training, in many organizations, application security analysts carry

the burden of performing remedial training for development teams or individual

developers who have a track record of introducing too many security issues.

Quote for the day:

"Most people live with pleasant illusions, but leaders must deal with hard realities." - Orrin Woodward