Reworking the Taxonomy for Richer Risk Assessments

With pre-assessment and planning, you need to think about the desired outcome

(i.e., identify the risks to the facility) and identify the necessary actions

to mitigate or eliminate the risks and associated vulnerabilities. The

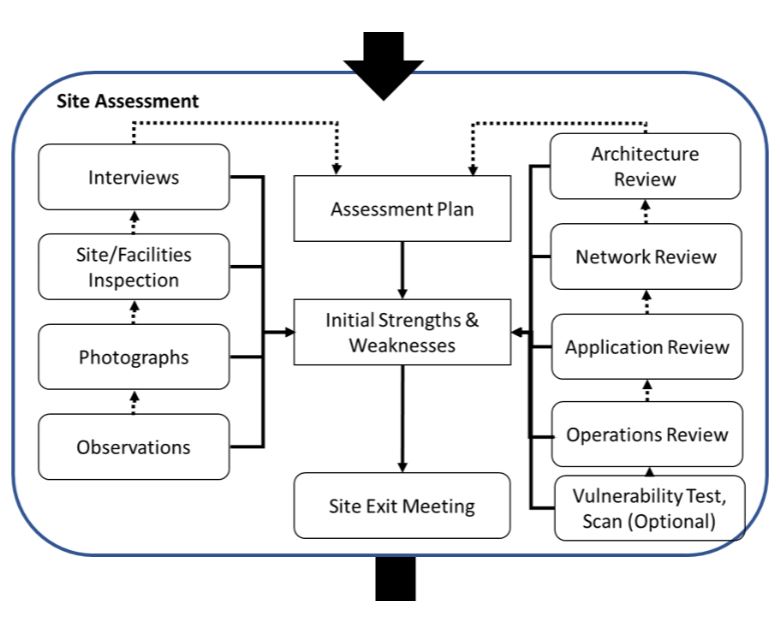

flow chart above is a detailed view of this phase and includes collecting and

digesting documents, identifying the team members and the necessary skill

sets, and getting ready for travel. Of course, contacting the "customer" and

setting up the necessary on-site logistics are important. ... Don't forget

these threats and vulnerabilities can be cyber or physical. They can also be

part of the site management and culture. What about training or lack thereof?

They can all contribute to the risk profile of the facility. The graphic above

offers some elements of the on-site activities. You can see that we have

inspections, observations, taking photographs, and looking at the site network

and architecture. Even a cyber-vulnerability scan may be part of the site

assessment. These activities are intended to be part of the site assessment

plan. However, don't let the plan place barriers on your site risk reviews.

Feel free to follow leads and evidence of problems, since that is why you are

on-site rather than doing a remote risk assessment via Zoom.

How blockchain is set to revolutionize the healthcare sector

Despite its potential, data portability across multiple systems and services

is a real issue. There is nothing more valuable and personal to an individual

than their personal medical records, so making data shareable across services

will inevitably raise concerns around the spectre of data being misused.

Currently, data does not flow seamlessly across technology solutions within

healthcare. For example, in the UK your hospital records do not form part of

your GP records, but the advantages are clear in terms of treatment and

preventative care were they to do so. Unfortunately, it is unlikely that a

centralised storage and delivery system will get traction until there is one

that can ensure the appropriate encryption and security. The risks are simply

too high. Yet, it is an issue that a technology like blockchain can tackle.

This is because the purpose of the chain is to store a series of transactions

in a way that cannot be altered or changed. What renders it immutable is the

combination of two opposing things: the cryptography and its openness. Each

transaction is signed with a private key and then distributed amongst a

peer-to-peer set of participants. Without a valid signature, new blocks created by

data changes are ignored and not added to the chain.
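
The signing and rejection rule described above can be sketched in a few lines. This is a toy, single-signer illustration and not how any production blockchain is implemented: the `Block` shape, the `Chain` class and the `"genesis"` marker are hypothetical names, and there is no consensus or peer-to-peer distribution. It uses Node.js's built-in `crypto` module with an Ed25519 key pair.

```typescript
import { createHash, sign, verify, KeyObject } from "crypto";

// Hypothetical block shape for illustration only.
interface Block {
  data: string;
  prevHash: string;  // hash of the previous block, which links the chain
  signature: Buffer; // signature over prevHash + data
}

const hashBlock = (b: Block): string =>
  createHash("sha256").update(b.prevHash).update(b.data).update(b.signature).digest("hex");

class Chain {
  readonly blocks: Block[] = [];

  constructor(private readonly publicKey: KeyObject) {}

  private tipHash(): string {
    const last = this.blocks[this.blocks.length - 1];
    return last ? hashBlock(last) : "genesis";
  }

  // Build a block on top of the current tip and sign it with the private key.
  createBlock(data: string, privateKey: KeyObject): Block {
    const prevHash = this.tipHash();
    // For Ed25519 keys the algorithm argument must be null.
    const signature = sign(null, Buffer.from(prevHash + data), privateKey);
    return { data, prevHash, signature };
  }

  // Append only if the block links to the tip and carries a valid signature;
  // anything else is ignored and never becomes part of the chain.
  add(block: Block): boolean {
    const linked = block.prevHash === this.tipHash();
    const signed = verify(
      null,
      Buffer.from(block.prevHash + block.data),
      this.publicKey,
      block.signature
    );
    if (!linked || !signed) return false;
    this.blocks.push(block);
    return true;
  }
}
```

A caller would generate a key pair with `generateKeyPairSync("ed25519")`, create blocks with the private key, and hand only the public key to the chain. Changing a block's data after signing makes `add` return `false`.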

UX Patterns: Stale-While-Revalidate

Stale-while-revalidate (SWR) caching strategies provide faster feedback to

the user of web applications, while still allowing eventual consistency.

Faster feedback reduces the necessity to show spinners and may result in

a better perceived user experience. ... Developers may also

use stale-while-revalidate strategies in single-page applications that make

use of dynamic APIs. In such applications, oftentimes a large part of the

application state comes from remotely stored data (the source of truth). As

that remote data may be changed by other actors, fetching it anew on each

request guarantees that the freshest data available is always returned.

Stale-while-revalidate strategies substitute the requirement to always have

the latest data for that of having the latest data eventually. The mechanism

works in single-page applications in a similar way as in HTTP requests. The

application sends a request to the API server endpoint for the first time,

caches and returns the resulting response. The next time the application

makes the same request, the cached response is returned

immediately, while the request itself proceeds asynchronously in the background.

When the response is received, the cache is updated, with the appropriate

changes to the UI taking place.
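
The mechanism described above can be sketched as a small in-memory cache. This is a minimal illustration under stated assumptions, not the implementation of any particular library: `SwrCache`, `get` and the `onUpdate` callback are hypothetical names, and the fetcher stands in for whatever call reaches the API server.

```typescript
// Hypothetical fetcher type: anything that loads data for a key.
type Fetcher<T> = (key: string) => Promise<T>;

class SwrCache<T> {
  private cache = new Map<string, T>();

  constructor(private readonly fetcher: Fetcher<T>) {}

  // Resolves with the cached value immediately when one exists; otherwise
  // waits for the first response. Either way a request is sent and its
  // result replaces the cached entry.
  async get(key: string, onUpdate?: (fresh: T) => void): Promise<T> {
    const hit = this.cache.has(key);
    const stale = this.cache.get(key);

    const request = this.fetcher(key).then((fresh) => {
      this.cache.set(key, fresh);
      return fresh;
    });

    // Cold cache: there is nothing to show yet, so wait for the response.
    if (!hit) return request;

    // Stale hit: answer right away and let revalidation finish in the
    // background. On failure the stale entry is simply kept.
    request.then((fresh) => onUpdate?.(fresh)).catch(() => {});
    return stale as T;
  }
}
```

In a UI, `onUpdate` would typically trigger a re-render with the fresh data, which is where the eventual consistency comes from.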

The Inevitable Rise of Intelligence in the Edge Ecosystem

Edge computing is becoming an integral part of the distributed computing

model, says Nishith Pathak, global CTO for analytics and emerging technology

with DXC Technology. He says there is ample opportunity to employ edge

computing across industry verticals that require near real-time

interactions. “Edge computing now mimics the public cloud,” Pathak says, in

some ways offering localized versions of cloud capabilities regarding

compute, the network, and storage. Benefits of edge-based computing include

avoiding latency issues, he says, and anonymizing data so only relevant

information moves to the cloud. This is possible because “a humungous amount

of data” can be processed and analyzed by devices at the edge, Pathak says.

This includes connected cars, smart cities, drones, wearables, and other

Internet of Things applications that consume on-demand compute. The

population of devices and scope of infrastructure that support the edge are

expected to accelerate, says Jeff Loucks, executive director of Deloitte’s

center for technology, media and telecommunications. He says implementations

of the new communications standard have exceeded initial predictions that

there would be 100 private 5G network deployments by the end of 2020. “I

think that’s going to be closer to 1,000,” he says.

Take a Dip into Windows Containers with OpenShift 4.6

Windows Operating System in a container? Who would have thought?!? If you

asked me that question a few years back, I would have told you with

conviction that it would never happen! But if you ask me now, I will answer

you with a big, emphatic yes and even show you how to do so! In this article,

I will demonstrate how you can run Windows workloads in OpenShift 4.6 by

deploying a Windows container on a Windows worker node. In addition, I will

then highlight some of the issues and challenges that I see from a system

administrator perspective. ... For customers who have heterogeneous

environments with a mix of Linux and Windows workloads, the announcement of

a supported Windows container feature on OpenShift 4.6 is exciting news. As

of this writing, the supported workloads to run on Windows containers can be

either .NET Core applications, traditional .NET Framework applications, or

other Windows applications that run on a Windows server. So when did the

work start to make Windows containers possible to run on top of OpenShift?

In 2018, Red Hat and Microsoft announced the joint engineering collaboration

with the goal of bringing a supported Windows containers feature into

OpenShift.

GPS and water don't mix. So scientists have found a new way to navigate under the sea

Underwater devices already exist, for example to be fitted on whales as

trackers, but they typically act as sound emitters. The acoustic signals

produced are intercepted by a receiver that in turn can figure out the

origin of the sound. Such devices require batteries to function, which means

that they need to be replaced regularly – and when it is a migrating whale

wearing the tracker, that is no simple task. On the other hand, the UBL

system developed by MIT's team reflects signals, rather than emits them. The

technology builds on so-called piezoelectric materials, which produce a

small electrical charge in response to vibrations. This electrical charge

can be used by the device to reflect the vibration back to the direction

from which it came. In the researchers' system, therefore, a transmitter

sends sound waves through water towards a piezoelectric sensor. The acoustic

signals, when they hit the device, trigger the material to store an

electrical charge, which is then used to reflect a wave back to a receiver.

Based on how long it takes for the sound wave to reflect off the sensor and

return, the receiver can calculate the distance to the UBL. "In

contrast to traditional underwater acoustic communication systems, which

require each sensor to generate its own signals, backscatter nodes

communicate by simply reflecting acoustic signals in the environment," said

the researchers.
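
The distance calculation described above is a time-of-flight estimate: the sound travels to the sensor and back, so the one-way distance is half of speed multiplied by round-trip time. The sketch below is illustrative only and is not the researchers' code. The function name is hypothetical, and the default of roughly 1,500 m/s for the speed of sound in seawater is an approximation that varies with temperature, salinity and depth.

```typescript
// Approximate speed of sound in seawater, in metres per second (assumption).
const SPEED_OF_SOUND_SEAWATER_MPS = 1500;

// Estimate the one-way distance to a reflecting node from the round-trip time.
function distanceFromRoundTrip(
  roundTripSeconds: number,
  speedMps: number = SPEED_OF_SOUND_SEAWATER_MPS
): number {
  if (roundTripSeconds < 0 || speedMps <= 0) {
    throw new RangeError("round-trip time must be >= 0 and speed must be > 0");
  }
  // The signal covers the distance twice (out and back), hence the division.
  return (speedMps * roundTripSeconds) / 2;
}
```

For example, a round trip of half a second at the default speed corresponds to a distance of 375 metres.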

Temporal Tackles Microservice Reliability Headaches

Temporal consists of a programming framework (or SDK) and a managed service

(or backend). The core abstraction in Temporal is a fault-oblivious stateful

Workflow with business logic expressed as code. The state of the Workflow

code, including local variables and threads it creates, is immune to process

and Temporal service failures. Temporal supports the programming languages

Java and Go, but has SDKs in the works for Ruby, Python, Node.js, C#/.NET,

Swift, Haskell, Rust, C++ and PHP. In the event of a failure while running a

Workflow, state is fully restored to the line in the code where the failure

occurred and the process continues without developer intervention. One of

the restrictions on Workflow code, however, is that it must produce exactly

the same result each time it is executed, which rules out external API

calls. Those must be handled through what it calls Activities, which the

Workflow orchestrates. An activity is a function or an object method in one

of the supported languages, stored in task queues until an available worker

invokes its implementation function. When the function returns, the worker

reports its result to the Temporal service, which then reports to the

Workflow about completion.
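
Why deterministic Workflow code matters can be illustrated with a toy replay engine. This sketch is not the Temporal SDK and does not use its API: `WorkflowContext`, `activity` and `runWorkflow` are hypothetical names, and a plain array stands in for the history that a real service would persist durably. The idea shown is that Activity results are recorded, so after a failure the Workflow can be re-executed from the top and fed the recorded results instead of repeating the side effects.

```typescript
// Toy illustration only; all names here are hypothetical.
class WorkflowContext {
  private cursor = 0;

  constructor(private readonly history: unknown[]) {}

  // Run an activity once. On replay, return the recorded result instead of
  // invoking the function again.
  activity<T>(run: () => T): T {
    if (this.cursor < this.history.length) {
      return this.history[this.cursor++] as T;
    }
    const result = run();      // the side effect happens only here
    this.history.push(result); // recorded before the workflow moves on
    this.cursor++;
    return result;
  }
}

// Execute (or re-execute) a workflow against a history of past results.
function runWorkflow<T>(
  history: unknown[],
  workflow: (ctx: WorkflowContext) => T
): T {
  return workflow(new WorkflowContext(history));
}
```

Replay only works if the Workflow issues the same sequence of activity calls on every execution, which is why non-deterministic work such as external API calls has to live inside Activities.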

The Cybersecurity Myths We Hear Ourselves Saying

There is a widely held belief, shared by 19% of respondents, that the

brands you can trust won't take advantage of you and that they will protect

your data, as they surely do everyone else's data. However, the reality is

that almost all mainstream sites are collecting data about you, and if

they're not profiting off that data themselves, then there is a very good

chance that hackers are. The more sites you go to, even trusted ones, the

more cookies that are held in your browser. What's more, by surfing to

numerous sites, not only are you providing more data about yourself, but

you're also providing more pools of data that are being held by the various

sites you visit. Applying basic theories of probability, increasing the

number of pools increases the probability that any one of them will be

breached. The hard truth is that the only way to effectively ensure privacy

is to disconnect from the internet. Failing that, another good way to

protect data is to encrypt your internet traffic with a VPN. A VPN

adds an extra layer of encrypted protection to a secured Wi-Fi network,

preventing corporate agents from tracking you while you're online.
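
The probability argument above can be made concrete. As a rough sketch, assume each data pool is breached independently and with the same probability p. Both are simplifying assumptions made here for illustration, not figures from the survey. The chance that at least one of n pools is breached is then 1 - (1 - p)^n, which grows with every additional pool. The function name is hypothetical.

```typescript
// Probability that at least one of `pools` independent data pools is
// breached, given the same per-pool breach probability `p` (assumptions).
function probabilityAnyBreached(p: number, pools: number): number {
  if (p < 0 || p > 1) throw new RangeError("p must be between 0 and 1");
  if (!Number.isInteger(pools) || pools < 0) {
    throw new RangeError("pools must be a non-negative integer");
  }
  // Complement rule: 1 minus the chance that every pool stays safe.
  return 1 - Math.pow(1 - p, pools);
}
```

With p = 0.5, for instance, one pool gives a 50% chance of a breach and two pools give 75%.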

Running React Applications at the Edge with Cloudflare Workers

Cloudflare Workers are a cool technology introduced by Cloudflare a couple

of years ago. Normally, you might have a server living in a data center

somewhere in the world. You’ll likely put a CDN in front of that to handle

caching and manage the load. But imagine having the power of a server

directly inside your CDN’s data center. This is what Cloudflare Workers

offers: a way to execute code directly at the edge of the CDN. This is

a really powerful way to manage and modify requests going to and from your

origin server—but it also opens up a whole new set of possibilities: instead

of paying for and managing your own server, you can use Cloudflare Workers

as your origin. This means lightning-fast responses directly at the edge

without a round trip to another data center. ... These patterns are what

inspired Flareact. Cloudflare Workers offers a Workers Sites feature that

allows you to host a static site on top of Cloudflare Workers, with assets

stored in a KV [Key/Value] store at the edge. This, combined with the

underlying Workers dynamic platform, seemed like the perfect use case for

Next.js. However, due to technical constraints, it proved too difficult

to get Next.js working on Cloudflare Workers. So I set out to build my own

framework modeled after Next.js.

The future is female: overcoming the challenges of being a woman in tech

Self-doubt affects everyone, but being in an industry in which you are

outnumbered by the opposite gender is particularly tough. According to

TrustRadius, three out of four tech professionals have experienced imposter

syndrome at work, but women are 22% more likely than men to feel this way.

Sheryl Sandberg even said that women in tech “hold ourselves back in ways

both big and small, by lacking self-confidence, by not raising our hands,

and by pulling back when we should be leaning in.” This is unsurprising, as

women are typically taught from an early age not to brag. Self-marketing

might feel egotistical and uncomfortable at first but it definitely feels

more natural with practice! Confidence comes with knowledge; with technology

constantly evolving as new software and systems are created, women making

their way in tech should continue to learn as much as possible. Being on top

of new developments will get you noticed and make it easier to advocate for

yourself. But, if you don’t feel comfortable selling yourself, let others do

this for you. Ask trusted clients, colleagues and contacts to give

testimonials – many will be delighted to do so – and sing the praises of

those around you, as people will return the favour.

Quote for the day:

"The problem with being a leader is that you're never sure if you're being followed or chased." -- Claire A. Murray
