Why securing the DNS layer is crucial to fight cyber crime

When left insecure, DNS servers can result in devastating consequences for

businesses that fall victim to attack. Terry Bishop, solutions architect at

RiskIQ, says: “Malicious actors are constantly looking to exploit weak links

in target organisations. A vulnerable DNS server would certainly be considered

a high-value target, given the variety of directions that could be taken once

compromised. “At RiskIQ, we find most organisations are unaware of about 30%

of their external-facing assets. That can be websites, mail servers, remote

gateways, and so on. If any of these systems are left unpatched, unmonitored

or unmanaged, it presents an opportunity for compromise and further potential

exploit. Whether that is towards company assets or other more valuable

infrastructure such as DNS servers is dependent on the motives of the

attacker and the specifics of the breached environment.” Kevin Curran,

senior member at the Institute of Electrical and Electronics Engineers (IEEE)

and professor of cyber security at Ulster University, agrees that DNS attacks

can be highly disruptive. In fact, an improperly working DNS layer would

effectively break the internet, he says.

The Dark Side of AI: Previewing Criminal Uses

Criminals' Top Goal: Profit. If that's the high level, the applied level is

that criminals have never shied away from finding innovative ways to earn an

illicit profit, be it through social engineering refinements, new business

models or adopting new types of technology. And AI is no exception. "Criminals

are likely to make use of AI to facilitate and improve their attacks by

maximizing opportunities for profit within a shorter period, exploiting more

victims and creating new, innovative criminal business models - all the while

reducing their chances of being caught," according to the report. Thankfully,

all is not doom and gloom. "AI promises the world greater efficiency,

automation and autonomy," says Edvardas Šileris, who heads Europol's European

Cybercrime Center, aka EC3. "At a time where the public is getting

increasingly concerned about the possible misuse of AI, we have to be

transparent about the threats, but also look into the potential benefits from

AI technology." ... Even criminal uptake of deepfakes has been scant. "The

main use of deepfakes still overwhelmingly appears to be for non-consensual

pornographic purposes," according to the report. It cites research from last

year by the Amsterdam-based AI firm Deeptrace, which "found 15,000 deepfake

videos online..."

Flash storage debate heats up over QLC SSDs vs. HDDs

Rosemarin said some vendors front-end QLC with TLC flash, storage class memory

or DRAM to address caching and performance issues, but they run the risk of

scaling problems and destroying the cost advantage that the denser flash

technology can bring. "We had to launch a whole new architecture with

FlashArray//C to optimize and run QLC," Rosemarin said. "Otherwise, you're

very quickly going to get in a position where you're going to tell clients it

doesn't make sense to use QLC because [the] architecture can't do it

cost-efficiently." Vast Data's Universal Storage uses Intel Optane SSDs,

built on faster, more costly 3D XPoint technology, to buffer writes, store

metadata and improve latency and endurance. But Jeff Denworth, co-founder and

chief marketing officer at the startup, said the system brings cost savings

over alternatives through better longevity and data-reduction code, for

starters. "We ask customers all the time, 'If you had the choice, would you

buy a hard drive-based system, if cost wasn't the only issue?' And not a

single customer has ever said, 'Yeah, give me spinning rust,'" Denworth said.

Denser NAND flash chip technology isn't the only innovation that could help to

drive down costs of QLC flash. Roger Peene, a vice president in Micron's

storage business unit, spotlighted the company's latest 176-layer 3D NAND that

can also boost density and lower costs.

Instrumenting the Network for Successful AIOps

The highest quality network data is obtained by deploying devices such as

network TAPs that mirror the raw network traffic. Many vendors offer physical

and virtual versions of these to gather packet data from the data center as

well as virtualized segments of the network. AWS and Google Cloud have both

launched Virtual Private Cloud (VPC) traffic/packet mirroring features in the

last year that allow users to duplicate traffic to and from their applications

and forward it to cloud-native performance and security monitoring tools, so

there are solid options for gathering packet data from cloud-hosted

applications too. The network TAPs let network monitoring tools view the

raw data without impacting the actual data plane. When dealing with

high-sensitivity applications such as ultra-low-latency trading, high-quality

network monitoring tools use timestamping with nanosecond accuracy to identify

bursts at millisecond resolution that might cause packet drops that normal

SNMP-type counters can’t explain. This fidelity of data is relevant in other

high-sensitivity applications such as real-time video decoding, gaming multicast

servers, HPC and other critical IoT control systems.
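
The burst-detection idea in the excerpt above can be sketched roughly as follows. This is a minimal illustration, not any vendor's method: the function name find_bursts, the 80% threshold, the 1 Gbps link speed and the sample packets are all assumptions made for the example. It groups nanosecond packet timestamps into 1 ms windows and flags any window whose byte count approaches link capacity.

```python
from collections import Counter

NS_PER_MS = 1_000_000  # nanoseconds in one millisecond


def find_bursts(packets, link_bps, threshold=0.8):
    """Return (window_ms, utilisation) for each 1 ms window whose
    utilisation exceeds `threshold` (a fraction of link capacity).

    packets: iterable of (timestamp_ns, size_bytes) pairs.
    The threshold value is an assumption chosen for illustration.
    """
    bytes_per_window = Counter()
    for ts_ns, size in packets:
        bytes_per_window[ts_ns // NS_PER_MS] += size

    capacity_bytes_per_ms = link_bps / 8 / 1000
    bursts = []
    for window, total in sorted(bytes_per_window.items()):
        utilisation = total / capacity_bytes_per_ms
        if utilisation > threshold:
            bursts.append((window, utilisation))
    return bursts


# Hypothetical capture on a 1 Gbps link: one 1250-byte packet per
# millisecond for 10 ms, plus 99 extra packets packed into millisecond 5.
packets = [(ms * NS_PER_MS, 1250) for ms in range(10)]
packets += [(5 * NS_PER_MS + i * 1000, 1250) for i in range(99)]

bursts = find_bursts(packets, link_bps=1_000_000_000)
print(bursts)
```

Averaged over the whole 10 ms, this sample uses only about 11% of the link, which is why a coarse counter would show nothing unusual, while the 1 ms view shows one window running at full capacity.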

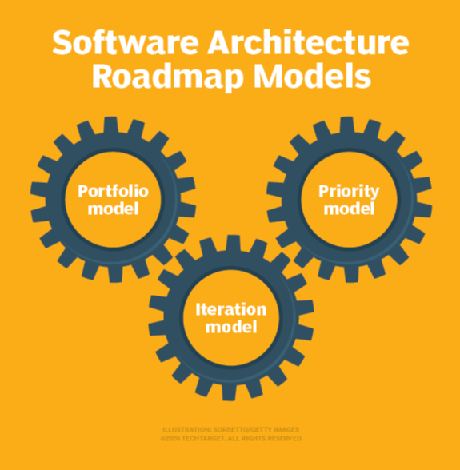

How to create an effective software architecture roadmap

The iteration model demonstrates how the architecture and related software

systems will change and evolve on the way to a final goal. Each large

iteration segment represents one milestone goal of an overall initiative, such

as updating a particular application database or modernizing a set of legacy

services. Then, each one of those segments contains a list of every project

involved in meeting that milestone. For instance, a legacy service

modernization iteration requires a review of the code, refactoring efforts,

testing phases and deployment preparations. While architects may feel

pressured to create a realistic schedule from the start of the iteration

modeling phase, Richards said that it's not harmful to be aspirational,

imaginative or even mildly unrealistic at this stage. Since this is still an

unrefined plan, try to ignore limitations like cost and staffing, and focus on

goals. ... Once an architect has an iteration model in place, the portfolio

model injects reality into the roadmap. In this stage, the architect or

software-side project lead analyzes the feasibility of the overall goal. They

examine the initiative, the requirements for each planned iteration and the

resources available for the individual projects within those iterations.
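
One way to picture the two models described above is as a small data structure plus a feasibility check. The sketch below is only an illustration under stated assumptions: the class names, the use of effort in weeks, the capacity figure and the "Schema migration" project are hypothetical, and the article does not say how feasibility should actually be measured.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    name: str
    effort_weeks: int  # hypothetical estimate, not from the article


@dataclass
class Iteration:
    milestone: str
    projects: list[Project] = field(default_factory=list)

    def total_effort(self) -> int:
        return sum(p.effort_weeks for p in self.projects)


def feasibility(iterations, capacity_weeks):
    """Portfolio-style check: does each iteration fit the available capacity?"""
    return {it.milestone: it.total_effort() <= capacity_weeks for it in iterations}


# Iteration model: aspirational milestones, each listing its projects.
roadmap = [
    Iteration("Modernize legacy services", [
        Project("Code review", 2),
        Project("Refactoring", 6),
        Project("Testing", 3),
        Project("Deployment preparation", 1),
    ]),
    Iteration("Update application database", [
        Project("Schema migration", 4),
    ]),
]

# Portfolio model: inject reality by comparing against assumed capacity.
result = feasibility(roadmap, capacity_weeks=10)
print(result)
```

Keeping the aspirational plan and the capacity check separate mirrors the split in the excerpt: the iteration model can stay ambitious, and only the portfolio step flags milestones that need rescoping.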

How new-age data analytics is revolutionising the recruitment and hiring segment

There are innumerable advantages attached to opting for AI over an ordinary

recruitment team. With the introduction of AI, companies can easily lower the

costs involved in maintaining a recruitment team. The highly automated

screening procedures select quality candidates that in turn will help the

organization grow and retain better personnel – a factor that is otherwise

overlooked in the conventional recruitment process. Employing AI and ML

automates the whole recruitment process and helps eliminate the probability of

human errors. Automation increases efficiency and improves the performance of

other departments of the company. The traditional recruitment process tends to

be very costly. Several teams are often needed for the purpose of hiring

people in a company. But with the help of AI and ML, the unnecessary costs can

be done away with and the various stages of hiring can all be conducted on a

single dedicated platform. Additionally, if the company engages in a lot of

contract work, then AI can be used for analysing the project plan and

predicting the kinds, numbers, ratio and skills of workers that may be

required for the purpose. The scope of AI and ML cannot be undermined by the

capabilities of current systems.

6 experts share quantum computing predictions for 2021

"Next year is going to be when we start seeing what algorithms are going to

show the most promise in this near term era. We have enough qubits, we have

really high fidelities, and some capabilities to allow brilliant people to

have a set of tools that they just haven't had access to," Uttley

said. "Next year what we will see is the advancement into some areas that

really start to show promise. Now you can double down instead of doing a

scattershot approach. You can say, 'This is showing really high energy, let's

put more resources and computational time against it.' Widespread use, where

it's more integrated into the typical business process, that is probably a

decade away. But it won't be that long before we find applications for which

we're using quantum computers in the real world. That is more in the 18-24

month range." Uttley noted that the companies already using Honeywell's

quantum computer are increasingly interested in spending more and more time

with it. Companies working with chemicals and the material sciences have shown

the most interest, he said, adding that there are also healthcare applications

that would show promise.

How Industrial IoT Security Can Catch Up With OT/IT Convergence

The bigger challenge, he says, is not in the silicon of servers and networking

appliances but in the brains of security professionals. "The harder problem, I

think, is the skills problem, which is that we have very different expertise

existing within companies and in the wider security community, between people

who are IT security experts and people who are OT security experts," Tsonchev

says. "And it's very rare to find one individual where those skills converge."

It's critical for companies looking to solve the converged security problem,

whether in technology or technologists, to figure out what the technology and

skills need to look like in order to support their business goals. And they

need to recognize that the skills to protect both sides of the organization

may not reside in a single person, Tsonchev says. "There's obviously a very

deep cultural difference that comes from the nature of the environments

characterized by the standard truism that confidentiality is the priority in

IT and availability is the priority in OT," he explains. And that difference

in mindset is natural – and to some extent essential – based on the

requirements of the job. Where the two can begin to come together, Tsonchev

says, is in the evolution away from a protection-based mindset to a way of

looking at security based on risk and risk tolerance.

Dark Data: Goldmine or Minefield?

The issue here is that companies are still thinking in terms of sandboxes even

when they are face-to-face with the entire beach. A system that considers

analytics and governance flip sides of the same coin and incorporates them

synergistically across all enterprise data is called for. Data that has been

managed has the potential to capture the corpus of human knowledge within the

organization, reflecting the human intent of a business. It can offer substantial

insight into employee work patterns, communication networks, subject matter

expertise, and even organizational influencers and business processes. It also

holds the potential for eliminating duplicative human effort, which can be an

excellent tool to increase productivity and output. The results of this alone

are a sure-fire way to boost productivity, spot common pain points that

hinder the workstream and share insights with organizations about

where untapped potential may lie. Companies that have successfully

bridged information management with analytics are answering fundamental

business questions that have massive impact on revenue: Who are the key

employees? ... With the increase in sophistication of analytics and its

convergence with information governance, we will likely see a renaissance for

this dark data that is presently largely a liability.

NCSC issues retail security alert ahead of Black Friday sales

“We want online shoppers to feel confident that they’re making the right

choices, and following our tips will reduce the risk of giving an early gift to

cyber criminals. If you spot a suspicious email, report it to us, or if you

think you’ve fallen victim to a scam, report the details to Action Fraud and

contact your bank as soon as you can.” Helen Dickinson, chief executive of the

British Retail Consortium (BRC), added: “With more and more of us browsing and

shopping online, retailers have invested in cutting-edge systems and expertise

to protect their customers from cyber threats, and the BRC recently published a

Cyber Resilience Toolkit for extra support to help to make the industry more

secure. “However, we as customers also have a part to play and should follow the

NCSC’s helpful tips for staying safe online.” The NCSC’s advice, which can be

accessed on its website, includes a number of tips, such as being

selective about where you shop, only providing necessary information, using

secure and protected payments, securing online accounts, identifying potential

phishing attempts, and knowing how to deal with any problems. Carl Wearn, head of

e-crime at Mimecast, commented: “Some of the main things to look out for include

phishing emails and brand spoofing, as we are likely to see an increase in both.

Quote for the day:

“Focus on the journey, not the destination. Joy is found not in finishing an activity but in doing it.” -- Greg Anderson
