To do in 2021: Get up to speed with quantum computing 101

For business leaders who are new to quantum computing, the overarching

question is whether to invest the time and effort required to develop a

quantum strategy, Savoie wrote in a recent column for Forbes. The business

advantages could be significant, but developing this expertise is expensive

and the ROI is still long term. Understanding early use cases for the

technology can inform this decision. Savoie said that one early use for

quantum computing is optimization problems, such as the classic traveling

salesman problem of trying to find the shortest route that connects multiple

cities. "Optimization problems hold enormous importance for finance, where

quantum can be used to model complex financial problems with millions of

variables, for instance to make stock market predictions and optimize

portfolios," he said. Savoie said that one of the most valuable applications

for quantum computing is to create synthetic data to fill gaps in data used to

train machine learning models. "For example, augmenting training data in this

way could improve the ability of machine learning models to detect rare

cancers or model rare events, such as pandemics," he said.
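
To make the traveling salesman problem mentioned above concrete, here is a minimal classical sketch that tries every possible ordering of a handful of cities. The city names and coordinates are hypothetical, and the sketch assumes a closed tour that returns to the start. It is not a quantum algorithm; it only illustrates the kind of problem being discussed.

```python
from itertools import permutations
from math import dist

# Hypothetical city coordinates, chosen only for illustration.
CITIES = {
    "A": (0.0, 0.0),
    "B": (3.0, 0.0),
    "C": (3.0, 4.0),
    "D": (0.0, 4.0),
}


def tour_length(order):
    """Total length of a closed tour visiting the cities in the given order."""
    legs = zip(order, order[1:] + order[:1])
    return sum(dist(CITIES[a], CITIES[b]) for a, b in legs)


def shortest_tour(start="A"):
    """Try every ordering of the remaining cities and keep the shortest tour."""
    others = [c for c in CITIES if c != start]
    candidates = ((start,) + p for p in permutations(others))
    return min(candidates, key=tour_length)


best = shortest_tour()
print(best, tour_length(best))
```

The number of orderings grows factorially with the number of cities, so this exhaustive approach stops being practical very quickly, which is part of why optimization problems of this kind attract interest in new computing approaches.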

SmartKey And Chainlink To Collaborate In Govt-Approved Blockchain Project

Chainlink is the missing link in developing and delivering a virtually

limitless number of smart city integrations that combine SmartKey’s API and

blockchain-enabled hardware with real world data and systems to harness the

power of automated data-driven IoT applications with tangible value. The two

protocols are complementary: The SmartKey protocol manages access to different

physical devices across the Blockchain of Things (BoT) space (e.g. opening a

gate), while the Chainlink Network allows developers to connect SmartKey

functionalities with different sources of data (e.g. weather data, user web

apps). The integration focuses on connecting all the data and events sourced

and delivered by the Chainlink ecosystem to the SmartKey connector, which then

turns that data (commands issued by Ethereum smart contracts) into

instructions for IoT devices (e.g., active sensors GSM — GPS). Our connectors

can also deliver information to Chainlink oracles confirming these real world

instructions were carried out (e.g. gate was opened), potentially leading to

additional smart contract outputs. The confirmation of service delivery is a

“contract key” that connects both ecosystems into one “world” and relays an

Ethereum action to IoT devices.

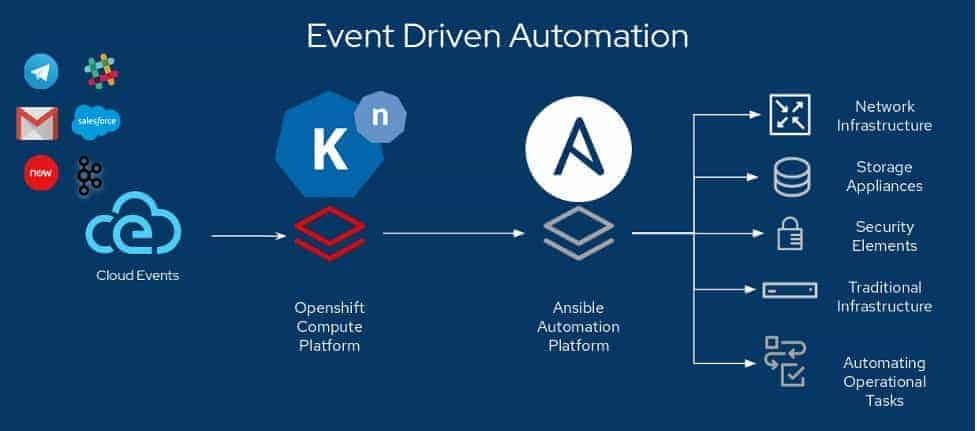

DevOps + Serverless = Event Driven Automation

For the most part, Serverless is seen as Function as a Service (FaaS). While

it is definitely true that most Serverless code being implemented today is

FaaS, that’s not the destination, but the pitstop. The Serverless space is

still evolving. Let’s take a journey and explore how far Serverless has come,

and where it is going. Our industry started with what I call “Phase 1.0”, when

we just started talking or hearing about Serverless, and for the most part

just thought about it as Functions – small snippets of code running on demand

and for a short period of time. AWS Lambda made this paradigm very popular,

but it had its own limitations around execution time, protocols, and poor

local development experience. Since then, more people have realized that the

same serverless traits and benefits could be applied to microservices and

Linux containers. This leads us into what I’m calling the “Phase 1.5”. Some

solutions here completely abstract Kubernetes, delivering the serverless

experience through an abstraction layer that sits on top of it, like Knative.

By opening up Serverless to containers, users are not limited to function

runtimes and can now use any programming language they want.
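
As an illustration of the "Phase 1.0" idea of a function as a small snippet of code that runs on demand, here is a minimal sketch in the `handler(event, context)` shape used by AWS Lambda's Python runtime. The `name` field in the event and the response layout are assumptions made for this example, not something taken from the article.

```python
import json


def handler(event, context):
    """Handle one invocation: read a name from the event and return a greeting."""
    name = event.get("name", "world")  # "name" is a hypothetical event field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation for testing; on a FaaS platform the runtime
# would call handler() once per incoming event.
if __name__ == "__main__":
    print(handler({"name": "DevOps"}, None))
```

The function holds no state between calls and does nothing until an event arrives, which is the trait that later phases try to carry over to containers and microservices.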

Self-documenting Architecture

A self-documenting architecture would reduce the learning curve. It would

accentuate poor design choices and help us to make better ones. It would help

us to see the complexity we are adding to the big picture as we make changes

in the small and help us to keep complexity lower. And it would save us from

messy whiteboard diagrams that explain how one person incorrectly thinks the

system works. ... As software systems gradually evolve on a continual basis,

individual decisions may appear to make sense in isolation, but from a big

picture architectural perspective those changes may add unnecessary complexity

to the system. With a self-documenting architecture, everybody who makes

changes to the system can easily zoom out to the bigger picture and consider

the wider implications of their changes. One of the reasons I use the Bounded

Context Canvas is because it visualises all of the key design decisions for an

individual service. Problems with inconsistent naming, poorly-defined

boundaries, or highly-coupled public interfaces jump out at you. When these

decisions are made in isolation they seem OK; it is only when considered in

the bigger picture that the overall design appears sub-optimal.

Is graph technology the fuel that’s missing for data-based government?

Another government context for use of graphs is global smart city projects.

For instance, in Turku, Finland, graph databases are being deployed to

leverage IoT data to make better decisions about urban planning. According to

Jussi Vira, CEO of Turku City Data, the IT services company that is assisting

the city of Turku to achieve its ideas: “A lack of clear ways to bridge the

gap between data and business problems was inhibiting our ability to innovate

and generate value from data”. By deploying graphs, his team is able to

represent many real-world business problems as people, objects, locations and

events, and their interrelationships. Turku City Data found graphs represent

data in the same way in which business problems are described, so it was

easier to match relevant datasets to concrete business problems. Adopting

graph technology has enabled the city of Turku to deliver daily supplies to

elderly citizens who cannot leave their homes because of the Covid-19

pandemic. The service determines routes through the city that optimise

delivery speed and minimise transportation resources while maintaining

unbroken temperature-controlled shipping requirements for foodstuffs and

sensitive medication.
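
The excerpt does not describe Turku's actual data model or routing method, so the following is only a toy sketch of the general idea: locations as nodes, roads as weighted relationships, and a shortest-path search over them. A plain in-memory dictionary stands in for a graph database, the place names and travel times are hypothetical, and constraints such as temperature control are ignored.

```python
import heapq

# Hypothetical road graph: node -> {neighbour: travel time in minutes}.
ROADS = {
    "depot": {"market": 4, "harbour": 2},
    "harbour": {"market": 1, "home_1": 7},
    "market": {"home_1": 3},
    "home_1": {},
}


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm: return (total_minutes, path), or None if unreachable."""
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        minutes, node, path = heapq.heappop(queue)
        if node == goal:
            return minutes, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, cost in graph[node].items():
            if neighbour not in visited:
                heapq.heappush(queue, (minutes + cost, neighbour, path + [neighbour]))
    return None


print(fastest_route(ROADS, "depot", "home_1"))
```

Because the data is already shaped as things and the relationships between them, a question like "what is the fastest way from the depot to this home" maps directly onto a traversal of the graph.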

The Relationship Between Software Architecture And Business Models (and more)

A software architecture has to implement the domain concepts in order to

deliver the how of the business model. There are an unlimited number of ways

to model a business domain, however. It is not a deterministic, sequential

process. A large domain must be decomposed into software sub-systems. Where

should the boundaries be? Which responsibilities should live in each

sub-system? There are many choices to make and the arbiter is the business

model. A software architecture, therefore, is an opinionated model of the

business domain which is biased towards maximising the business model. When

software systems align poorly with the business domain, changes become harder

and the business model is less successful. When developers have to mentally

translate from business language to the words in code it takes longer and

mistakes are more likely. When new pieces of work always slice across multiple

sub-systems, it takes longer to make changes and deploy them. It is,

therefore, fundamentally important to align the architecture and the domain as

well as possible.

In 2021, edge computing will hit an inflection point

Data center marketplaces will emerge as a new edge hosting option. When people

talk about the location of "the edge," their descriptions vary widely.

Regardless of your own definition, edge computing technology needs to sit as

close to "the action" as possible. It may be a factory floor, a hospital room,

or a North Sea oil rig. In some cases, it can be in a data center off premises

but still as close to the action as makes sense. This rules out many of the

big data centers run by cloud providers or co-location services that are close

to major population centers. If your enterprise is highly distributed, those

centers are too far. We see a promising new option emerging that unites

smaller, more local data centers in a cooperative marketplace model. New data

center aggregators such as Edgevana and Inflect allow you to think globally

and act locally, expanding your geographic technology footprint. They don't

necessarily replace public cloud, content delivery networks, or traditional

co-location services — in fact, they will likely enhance these services. These

marketplaces are nascent in 2020 but will become a viable model for edge

computing in 2021.

Why Security Awareness Training Should Be Backed by Security by Design

The concepts of "safe by design" or "secure by design" are well-established

psychological enablers of behavior. For example, regulators and technical

architects across the automobile and airline industries prioritize safety

above all else. "This has to emanate across the entire ecosystem, from the

seatbelts in vehicles, to traffic lights, to stringent exams for drivers,"

says Daniel Norman, senior solutions analyst for ISF and author of the report.

"This ecosystem is designed in a way where an individual's ability to behave

insecurely is reduced, and if an unsafe behavior is performed, then the

impacts are minimized by robust controls." As he explains, these principles of

security by design can translate to cybersecurity in a number of ways,

including how applications, tools, policies, and procedures are all designed.

The goal is to provide every employee role "with an easy, efficient route

toward good behavior." This means sometimes changing the physical office

environment or the digital user interface (UI) environment. For example,

security by design to reduce phishing susceptibility might include

implementing easy-to-use phishing reporting buttons within employee email

clients. Similarly, it might mean creating colorful pop-ups in email platforms

to remind users not to send confidential information.

Tech Should Enable Change, Not Drive It

Technology should remove friction and allow people to do their jobs, while

enabling speed and agility. This means ensuring a culture of connectivity

where there is trust, free-flowing ideation, and the ability to collaborate

seamlessly. Technology can also remove interpersonal friction, by helping to

build trust and transparency — for example, blockchain and analytics can help

make corporate records more trustworthy, permitting easy access for regulators

and auditors that may enhance trust inside and outside the organization. This

is important; one study found that transparency from management is directly

proportional to employee happiness. And happy employees are more productive

employees. Technology should also save employees time, freeing them up to take

advantage of opportunities for human engagement (or, in a pandemic scenario,

enabling virtual engagement), as well as allowing people to focus on

higher-value tasks. ... It’s vital that businesses recognize diversity and

inclusion as a moral and a business imperative, and act on it. Diversity can

boost creativity and innovation, improve brand reputation, increase employee

morale and retention, and lead to greater innovation and financial

performance.

Researchers bring deep learning to IoT devices

The customized nature of TinyNAS means it can generate compact neural networks

with the best possible performance for a given microcontroller – with no

unnecessary parameters. “Then we deliver the final, efficient model to the

microcontroller,” says Lin. To run that tiny neural network, a microcontroller

also needs a lean inference engine. A typical inference engine carries some dead

weight – instructions for tasks it may rarely run. The extra code poses no

problem for a laptop or smartphone, but it could easily overwhelm a

microcontroller. “It doesn’t have off-chip memory, and it doesn’t have a disk,”

says Han. “Everything put together is just one megabyte of flash, so we have to

really carefully manage such a small resource.” Cue TinyEngine. The researchers

developed their inference engine in conjunction with TinyNAS. TinyEngine

generates the essential code necessary to run TinyNAS’ customized neural

network. Any deadweight code is discarded, which cuts down on compile time. “We

keep only what we need,” says Han. “And since we designed the neural network, we

know exactly what we need. That’s the advantage of system-algorithm

codesign.”

Quote for the day:

"Empowerment is the magic wand that turns a frog into a prince. Never estimate the power of the people, through true empowerment great leaders are born." -- Lama S. Bowen
