Quote for the day:

"Success is liking yourself, liking what you do, and liking how you do it." -- Maya Angelou

Scalability and Flexibility: Every Software Architect's Challenge

Building successful business applications involves addressing practical

challenges and strategic trade-offs. Cloud computing offers flexibility, but

poor resource management can lead to ballooning costs. Organizations often face

dilemmas when weighing feature richness against budget constraints. Engaging

stakeholders early in the development process ensures alignment with priorities.

... Right-sizing cloud resources is essential for software architects, who can

leverage tools to monitor usage and scale resources automatically based on

demand. Serverless computing models, which charge only for execution time, are

ideal for unpredictable workloads and seasonal fluctuations, ensuring

organizations only use what they need when needed. ... The next decade will usher

in unprecedented opportunities for innovation in business applications.

Regularly reviewing market trends and user feedback ensures applications remain

relevant. Features like voice commands and advanced analytics are becoming

standard as users demand more intuitive interfaces, boosting overall performance

and creating new avenues for innovation. Software architects can stay alert and

flexible by regularly assessing application performance, user feedback, and

market trends to guarantee that systems remain relevant.
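
To make "scale resources automatically based on demand" concrete, here is a minimal Python sketch of a proportional (target-tracking) scaling rule. It is an illustration under stated assumptions, not something the article prescribes: the function name `desired_replicas`, the utilization numbers, and the replica bounds are all hypothetical. The ratio itself follows the formula documented for the Kubernetes Horizontal Pod Autoscaler.

```python
import math


def desired_replicas(
    current_replicas: int,
    observed_utilization: float,
    target_utilization: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return how many replicas to run so utilization moves toward the target.

    Hypothetical helper: scales the current replica count by the ratio of
    observed to target utilization, rounds up, then clamps to the bounds.
    """
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")

    # Proportional rule: more load than the target means more replicas.
    raw = math.ceil(current_replicas * observed_utilization / target_utilization)

    # Clamp so a traffic spike cannot scale (and bill) without limit,
    # and a quiet period never scales below the minimum footprint.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Assumed figures: 4 replicas at 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
    # Assumed figures: the same 4 replicas at only 30% CPU.
    print(desired_replicas(4, 30, 60))
```

The upper bound is the cost-control lever in this sketch: it caps spend during spikes, while the proportional rule releases capacity when demand falls.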

Navigating the Future of Network Security with Secure Access Service Edge (SASE)

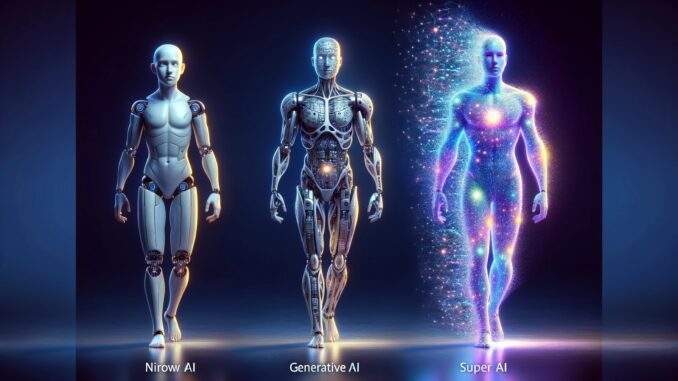

As businesses expand their digital footprint, cyber attackers increasingly target unsecured cloud resources and remote endpoints. Traditional perimeter-based network and security architectures are not capable of protecting distributed environments. Therefore, organizations must adopt a holistic, future-proof network and cybersecurity architecture to succeed in this rapidly changing business landscape.

The Challenges: Perimeter-based security revolves around defending the network’s boundary. It assumes that anyone who has gained access to the network is trusted and that everything outside the network is a potential threat. While this model worked well when applications, data, and users were contained within corporate walls, it is not adequate in a world where cloud applications and hybrid work are the norm. ...

SASE is an architecture comprising a broad spectrum of technologies, including Zero Trust Network Access (ZTNA), Secure Web Gateway (SWG), Firewall as a Service (FWaaS), Cloud Access Security Broker (CASB), Data Loss Prevention (DLP), and Software-Defined Wide Area Networking (SD-WAN). Everything is integrated into a single, cloud-native platform that provides advanced cyber protection and seamless network performance for highly distributed applications and users.

Whether AI is a bubble or revolution, how does software survive?

Bubble or not, AI has certainly made some waves, and everyone is looking to find

the right strategy. It’s already caused a great deal of disruption—good and

bad—among software companies large and small. The speed at which the technology

has moved since its coming-out party has been stunning; costs have dropped,

hardware and software have improved, and the mediocre version of many jobs can

be replicated in a chat window. It’s only going to continue. “AI is positioned

to continuously disrupt itself,” said McConnell. “It's going to be a constant

disruption. If that's true, then all of the dollars going to companies today are

at risk because those companies may be disrupted by some new technology that's

just around the corner.” First up on the list of disruption targets: startups.

If you’re looking to get from zero to market fit, you don’t need to build the

same kind of team you used to. “Think about the ratios between how many

engineers there are to salespeople,” said Tunguz. “We knew what those were for

10 or 15 years, and now none of those ratios actually hold anymore. If we are

really in a position that a single person can have the productivity of 25,

management teams look very different. Hiring looks extremely different.” That’s

not to say there won’t be a need for real human coders. We’ve seen how badly the

vibe coding entrepreneurs get dunked on when they put their shoddy apps in front

of a merciless internet.

The AI security gap no one sees—until it’s too late

The most serious, and least visible, gaps stem from the “Jenga-style” layering of managed AI services, where cloud providers stack one service on another and ship them with user-friendly but overly permissive defaults. Tenable’s 2025 Cloud AI Risk Report shows that 77 percent of organisations running Google Cloud’s Vertex AI Workbench leave the notebook’s default Compute Engine service account untouched; that account is an all-powerful identity which, if hijacked, lets an attacker reach every other dependent service. ...

CIOs should treat every dataset in the AI pipeline as a high-value asset. Begin with automated discovery and classification across all clouds so you know exactly where proprietary corpora or customer PII live, then encrypt them in transit and at rest in private, version-controlled buckets. Enforce least-privilege access through short-lived service-account tokens and just-in-time elevation, and isolate training workloads on segmented networks that cannot reach production stores or the public internet. Feed telemetry from storage, IAM and workload layers into a Cloud-Native Application Protection Platform that includes Data Security Posture Management; this continuously flags exposed buckets, over-privileged identities and vulnerable compute images, and pushes fixes into CI/CD pipelines before data can leak.

5 questions defining the CIO agenda today

CIOs along with their executive colleagues and board members “realize that hacks

and disruptions by bad actors are an inevitability,” SIM’s Taylor says. That

realization has shifted security programs from being mostly defensive measures

to ones that continuously evolve the organization’s ability to identify breaches

quickly, respond rapidly, and return to operations as fast as possible, Taylor

says. The goal today is ensuring resiliency — even as the bad actors and their

attack strategies evolve. ... Building a tech stack that can grow and retract

with business needs, and that can evolve quickly to capitalize on an

ever-shifting technology landscape, is no easy feat, Phelps and other IT leaders

readily admit. “In modernizing, it’s such a moving target, because once you got

it modernized, something new can come out that’s better and more automated. The

entire infrastructure is evolving so quickly,” says Diane Gutiw ... “CIOs

should be asking, ‘How do I change or adapt what I do now to be able to manage a

hybrid workforce? What does the future of work look like? How do I manage that

in a secure, responsible way and still take advantage of the efficiencies? And

how do I let my staff be innovative without violating regulation?’” Gutiw says,

noting that today’s managers “are the last generation of people who will only

manage people.”

Microsoft just taught its AI agents to talk to each other—and it could transform how we work

Microsoft is giving organizations more flexibility with their AI models by

enabling them to bring custom models from Azure AI Foundry into Copilot Studio.

This includes access to over 1,900 models, including the latest from OpenAI

GPT-4.1, Llama, and DeepSeek. “Start with off-the-shelf models because they’re

already fantastic and continuously improving,” Smith said. “Companies typically

choose to fine-tune these models when they need to incorporate specific domain

language, unique use cases, historical data, or customer requirements. This

customization ultimately drives either greater efficiency or improved accuracy.”

The company is also adding a code interpreter feature that brings Python

capabilities to Copilot Studio agents, enabling data analysis, visualization,

and complex calculations without leaving the Copilot Studio environment. Smith

highlighted financial applications as a particular strength: “In financial

analysis and services, we’ve seen a remarkable breakthrough over the past six

months,” Smith said. “Deep reasoning models, powered by reinforcement learning,

can effectively self-verify any process that produces quantifiable outputs.” He

added that these capabilities excel at “complex financial analysis where users

need to generate code for creating graphs, producing specific outputs, or

conducting detailed financial assessments.”

Culture fit is a lie: It’s time we prioritised culture add

The idea of culture fit originated with the noble intent of fostering team

cohesion. But over time, it has become an excuse to hire people who are

familiar, comfortable and easy to manage. In doing so, companies inadvertently

create echo chambers—workforces that lack diverse perspectives, struggle to

challenge the status quo and fail to innovate. Ankur Sharma, Co-Founder &

Head of People at Rebel Foods, understands this well. Speaking at the TechHR

Pulse Mumbai 2025 conference, Sharma explained how Rebel Foods moved beyond

hiring for cultural likeness. “We are not building a family; we are building a

winning team,” he said, emphasising that what truly matters is competency,

accountability and adaptability. The problem with culture fit is not just about

homogeneity—it’s about stagnation. When teams are made up of individuals who

think alike, they lose the ability to see challenges from multiple

angles. Companies that prioritise cultural uniformity often struggle to

pivot in response to industry shifts. ... Leading organisations are abandoning

the notion of culture fit and shifting towards ‘culture add’—hiring employees

who bring fresh ideas, challenge existing norms, and contribute new

perspectives. Instead of asking, ‘Will this person fit in?’ hiring managers are

asking, ‘What unique value does this person bring?’
