5G versus 4G: How speed, latency and application support differ

5G uses new and so far rarely used millimeter-wave radio bands in the 30 GHz to 300 GHz range. Current 4G networks operate on frequencies below 6 GHz. Low latency is one of 5G's most important attributes, making the technology highly suitable for critical applications that require rapid responsiveness, such as remote vehicle control. 5G networks are capable of latencies of under a millisecond in ideal conditions. 4G latency varies from carrier to carrier and cell to cell. Still, on the whole, 5G latency is estimated to be 60 to 120 times lower than average 4G latency. Over time, 5G is expected to advance wireless networking by bringing fiber-like speeds and extremely low latency to almost any location. In terms of peak speed, 5G is approximately 20 times faster than 4G. The new technology also offers a minimum peak download speed of 20 Gb/s (while 4G pokes along at only 1 Gb/s). Generally speaking, fixed-site users, such as offices and homes, will experience somewhat higher speeds than mobile users.

This old ransomware is using an unpleasant new trick to try and make you pay up

This ransomware attack begins, like many others, with brute force attacks targeting weak passwords on RDP ports. Once inside the network, the attackers harvest the admin credentials required to move across the network before encrypting servers and wiping back-ups. Victims are then presented with a ransom note that tells them to send an email to the ransomware distributors, who also warn victims not to use any security software against CryptoMix, with the attackers claiming that this could permanently damage the system (a common tactic used by attackers to dissuade victims from using security software to restore their computer). But if a victim engages with the attackers over email, they'll find out that those behind CryptoMix claim that the money made from the ransom demand -- usually two or three bitcoins -- will be donated to charity. Obviously, this isn't the case, but in an effort to lure victims into believing the scam, the CryptoMix distributors appear to have taken information about real children from crowdfunding and local news websites.

DevOps to DevSecOps adjustment banks on cross-group collaboration

Eventually, the CIO and chief information security officer (CISO) must participate in the DevOps discussion. This is especially true when a development project needs to be compliant with the Sarbanes-Oxley Act, Health Insurance Portability and Accountability Act or other compliance standards. This discussion needs to cover how teams can work together to achieve IT delivery goals with the best use of resources. For Peterson, the borderless environment of the cloud makes it tougher for CISOs and their teams to keep an organization secure. Security, development and operations teams must agree to communicate and share knowledge across domains. Because so many people have roles in a company's security strategy, Sadin suggested organizations designate a single point person for risk or security -- DevOps shop or not. But don't let security silo itself, Rowley cautioned. When security becomes so removed from the process that it doesn't function well with the other C-level executives or departments, security will fail.

HQ 2.0: The Next-Generation Corporate Center

In short, the economies of scale associated with centralized services have eroded. The functional security blankets justifying their expense no longer apply; it is not enough anymore to meet regulatory requirements and provide basic internal services. The business units and the new generation of talent are demanding more. Finally, the combination of new digital Industry 4.0–style platforms, robotics, intelligent machines, and advanced analytics is allowing companies to harness the explosion of data and fundamentally alter how and where work gets done. ... The new corporate center will be smaller, but it will still have executive and functional leaders and their staffs, mostly limited to five ongoing roles. First, they will define and communicate the company vision, values, and identity. Second, they will develop the corporate strategy and be responsible for the necessary critical enterprise-wide capabilities. Third, they will oversee the business unit portfolio, and related legal, regulatory, and fiduciary activities. Fourth, they will allocate capital.

Don’t Panic: Biometric Security is Still Secure for Enterprises

The early assumptions surrounding biometric security (that it could supplant passwords) fuel the current panic in the discourse. However, biometric security remains secure so long as your enterprise treats it as another layer in your overall authentication platform. When you incorporate biometric security into your two-factor authentication, your access management becomes stronger; hackers will have to acquire both your employees’ passwords and their biometric information to try to break into the network. However, two-factor authentication faces its own scrutiny. Hackers have found ways to subvert the traditional authentication use of mobile devices and insert themselves into the authentication process. Therefore, enterprises embrace multi-factor authentication (MFA) for its more layered approach to access management. Additionally, multi-factor authentication can be applied in a granular fashion. Your regular employees may only require two-factor authentication, whereas your most privileged users may need as many as five factors to access your sensitive digital assets.

Threat of a Remote Cyberattack on Today's Aircraft Is Real

Responding to the attack, Boeing issued a multiparagraph statement that included this passage: "Boeing is confident in the cyber-security measures of its airplanes. … Boeing's cyber-security measures … meet or exceed all applicable regulatory standards." ... To solve it, we need industry regulations that require updated cybersecurity policies and protocols, including mandatory penetration testing by aviation experts who are independent of manufacturers, vendors, service providers and aircraft operators. Be mindful of those who claim aviation expertise; few have the necessary experience, but many claim they do. "Pen testing" is essentially what DHS experts were conducting during the Boeing 757 attack. A pen test is a simulated attack on a computer system that identifies its vulnerabilities and strengths. Pen testing is one of many ways to mitigate risk, and we need more trained aviation and cyber personnel to deal with the current and emerging cyber threats — those that haven't even been conceived of yet.

Enterprise search trends to look for in 2019

Imagine if your company’s Intranet search were as easy, personalised, and contextual as Google’s Internet search. Cognitive search will help make this a reality by giving enterprise users the ability to locate truly relevant text, image, and video files from within large volumes of both internal and external data. One of the biggest challenges facing enterprise search is the nature of much of the data. Gartner estimates that 80% of organisational data is unstructured, meaning that it doesn’t adhere to predetermined models. This results in irregularities and ambiguities that can make the data difficult to find using traditional search programs. AI programs help automatically tag this unstructured information, making it much more easily discoverable. Cognitive search also improves accuracy by considering the context of each query. By examining and learning from past searches, these types of systems can identify the person who is looking for the information and what type of content the person is expecting to find.

IoT devices proliferate, from smart bulbs to industrial vibration sensors

Arguably the biggest and most-established use in this area is preventive maintenance, usually in an industrial setting. The concept is simple, but it relies on a lot of clever computational work and careful integration. Preventive maintenance uses gadgets like vibration and wear sensors to measure the stresses on and performance of factory equipment. For example, in a turbine those sensors feed their data into software running either on an edge device sitting somewhere on the factory floor, for quick communication with the endpoint, or on a server somewhere in the data center or cloud. Once there, the data can be parsed by a machine-learning system that correlates real-time data with historical data, enabling the detection of potential reliability issues without the need for human inspection. Fleet management is another popular use case for IoT devices. These systems either take advantage of a GPS locator already installed on a car or add a new one for the purpose, sending that data via cellular network back to the company, allowing rental car firms or really any company with a large number of cars or trucks to keep track of their movements.
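As a rough illustration of correlating a live reading with historical data, the sketch below flags a vibration reading that sits far from the historical mean. It swaps the machine-learning system described in the excerpt for a plain z-score threshold, and the function name, the threshold of 3.0 and the sample values are all assumptions made for the example.

```python
from statistics import mean, stdev


def is_anomalous(reading: float, history: list[float], threshold: float = 3.0) -> bool:
    """Return True when `reading` lies more than `threshold` sample standard
    deviations away from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        # Not enough historical data to estimate a spread.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All historical readings identical: any different value is unusual.
        return reading != mu
    return abs(reading - mu) / sigma > threshold


# Hypothetical vibration amplitudes recorded while the turbine was healthy.
healthy_history = [0.51, 0.49, 0.50, 0.52, 0.48, 0.50]

for live_reading in (0.51, 0.95):
    status = "inspect" if is_anomalous(live_reading, healthy_history) else "ok"
    print(f"reading={live_reading:.2f} -> {status}")
```

A production system would typically learn from many sensors and operating conditions at once; the threshold test only shows the basic comparison of real-time data against history.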

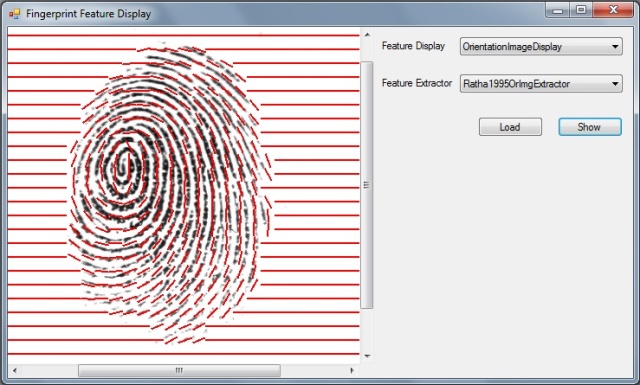

A Framework in C# for Fingerprint Verification

Fingerprint recognition is an active research area nowadays. An important component in fingerprint recognition systems is the fingerprint matching algorithm. According to the problem domain, fingerprint matching algorithms are classified into two categories: fingerprint verification algorithms and fingerprint identification algorithms. The aim of fingerprint verification algorithms is to determine whether two fingerprints come from the same finger or not. On the other hand, fingerprint identification algorithms search a database for the fingerprints that come from the same finger as a query fingerprint. There are hundreds of papers concerning fingerprint verification but, as far as we know, there is no framework for fingerprint verification available on the web. So, you must implement your own tools in order to test the performance of your fingerprint verification algorithms. Moreover, you must spend a lot of time implementing algorithms of other authors to compare with your algorithms.
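The difference between verification (one-to-one) and identification (one-to-many) can be sketched in a few lines. This is not the framework's API, and it is written in Python rather than C# purely for brevity. The representation of a fingerprint as a set of `(x, y, angle)` minutiae, the Jaccard-overlap score and the 0.5 threshold are all simplifying assumptions, and a real matcher would tolerate rotation, translation and distortion.

```python
# Hypothetical template type: a frozenset of (x, y, angle) minutiae tuples.
Template = frozenset


def similarity(a: Template, b: Template) -> float:
    """Toy score: Jaccard overlap of the two minutiae sets (0.0 to 1.0)."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def verify(a: Template, b: Template, threshold: float = 0.5) -> bool:
    """Verification: do these two fingerprints come from the same finger?"""
    return similarity(a, b) >= threshold


def identify(query: Template, database: dict[str, Template],
             threshold: float = 0.5) -> list[str]:
    """Identification: which enrolled fingerprints match the query?"""
    return [label for label, template in database.items()
            if verify(query, template, threshold)]


# Made-up sample data for illustration only.
query_print = frozenset({(1, 2, 30), (4, 5, 60), (7, 8, 90)})
enrolled = {
    "finger_a": frozenset({(1, 2, 30), (4, 5, 60), (9, 9, 10)}),
    "finger_b": frozenset({(0, 0, 0)}),
}

print(verify(query_print, enrolled["finger_a"]))
print(identify(query_print, enrolled))
```

Identification here is simply verification repeated over the database, which is why the same matching algorithm can be benchmarked in both settings.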

Towards Successful Resilient Software Design

What is different these days is the fact that almost every system is a distributed system. Systems talk to each other all the time, and the systems themselves are usually split up into remote parts that do the same. Developments like microservices, mobile computing and (I)IoT multiply the connections between collaborating system parts, i.e., they take that development to the next level. The remote communication needed to let the systems and their parts talk to each other implies failure modes that only exist across process boundaries, not inside a process. These failure modes, e.g., non-responsiveness, latency, and incomplete or out-of-order messages, will cause all kinds of undesired failures on the application level if we ignore their existence. In other words, ignoring the effects of distribution is not an option if you need a robust, highly available system. This leads me to the “what” of RSD: I tend to define resilient software design as “designing an application in a way that ideally a user does not notice at all if an unexpected failure occurs or that the user at least can continue to use the application with a defined reduced functional scope”.
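One common way to offer a "defined reduced functional scope" is to bound each remote call with a timeout and fall back to a degraded answer when it is slow or fails. The sketch below is a minimal illustration under stated assumptions, not the article's method: `call_with_fallback` and the recommendation functions are hypothetical names, and the remote service is simulated with `time.sleep`.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def call_with_fallback(remote_call, fallback, timeout_s: float = 0.5):
    """Run `remote_call` in a worker thread; if it raises or does not finish
    within `timeout_s` seconds, return `fallback()` instead."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(remote_call)
    try:
        return future.result(timeout=timeout_s)
    except Exception:
        # Covers both the timeout and any error raised by the remote call.
        return fallback()
    finally:
        # Do not block on a slow call; note that the worker thread keeps
        # running until the call returns, so real clients should also set
        # a timeout on the underlying network request.
        pool.shutdown(wait=False)


def slow_recommendations():
    time.sleep(0.2)  # simulated non-responsive remote service
    return ["personalised item 1", "personalised item 2"]


def failing_recommendations():
    raise ConnectionError("service unreachable")  # simulated hard failure


def default_recommendations():
    return ["bestsellers"]  # degraded but still useful answer


print(call_with_fallback(slow_recommendations, default_recommendations, timeout_s=0.05))
print(call_with_fallback(failing_recommendations, default_recommendations))
```

The user still sees a recommendation list in both failure cases, only a less personalised one, which is the kind of graceful degradation the definition describes.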

Quote for the day:

"The quality of a leader is reflected in the standards they set for themselves." -- Ray Kroc
