Quote for the day:

“The art of leadership is saying no, not yes. It is very easy to say yes.” -- Tony Blair

When Browsers Become the Attack Surface: Rethinking Security for Scattered Spider

Scattered Spider, also referred to as UNC3944, Octo Tempest, or Muddled Libra,

has matured over the past two years through precision targeting of human

identity and browser environments. This shift differentiates them from other

notorious cybergangs like Lazarus Group, Fancy Bear, and REvil. If sensitive

information such as your calendar, credentials, or security tokens is alive

and well in browser tabs, Scattered Spider is able to acquire it. ... Once

user credentials get into the wrong hands, attackers like Scattered Spider

will move quickly to hijack previously authenticated sessions by stealing

cookies and tokens. Securing the integrity of browser sessions can best be

achieved by restricting unauthorized scripts from gaining access or

exfiltrating these sensitive artifacts. Organizations must enforce contextual

security policies based on components such as device posture, identity

verification, and network trust. By linking session tokens to context,

enterprises can prevent attacks like account takeovers, even after credentials

have been compromised. ... Although browser security is the last mile of

defense for malware-less attacks, integrating it into an existing security

stack will fortify the entire network. By feeding activity logs enriched

with browser data into SIEM, SOAR, and ITDR platforms, CISOs can correlate

browser events with endpoint activity for a much fuller picture.
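
To make the idea of linking session tokens to context concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the mechanism of any particular browser security product: the names `context_fingerprint`, `issue_token`, and `verify_token`, the choice of signals, and the HMAC construction are all hypothetical. The point is only that a token stolen from one device fails verification when replayed from another context.

```python
import hashlib
import hmac
import secrets

# Hypothetical server-side secret; a real deployment would load it from a secrets manager.
SERVER_KEY = secrets.token_bytes(32)


def context_fingerprint(device_id: str, user_id: str, network_zone: str) -> bytes:
    """Hash the contextual signals (device, identity, network) into a single value."""
    material = "\x1f".join([device_id, user_id, network_zone]).encode("utf-8")
    return hashlib.sha256(material).digest()


def _sign(session_id: str, context: bytes) -> str:
    """HMAC over the session ID and the context it was issued for."""
    message = session_id.encode("utf-8") + context
    return hmac.new(SERVER_KEY, message, hashlib.sha256).hexdigest()


def issue_token(session_id: str, context: bytes) -> str:
    """Return 'session_id.signature', with the signature bound to the given context."""
    return f"{session_id}.{_sign(session_id, context)}"


def verify_token(token: str, context: bytes) -> bool:
    """Accept the token only when it is presented from the context it was issued for."""
    session_id, _, signature = token.partition(".")
    expected = _sign(session_id, context)
    # Compare as bytes in constant time so malformed input cannot raise or leak timing.
    return hmac.compare_digest(signature.encode("utf-8"), expected.encode("utf-8"))
```

In this sketch a cookie copied out of a browser tab is not enough on its own: the attacker would also have to reproduce the device and network signals the server hashes into the fingerprint.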

The Transformation Resilience Trifecta: Agentic AI, Synthetic Data and Executive AI Literacy

The current state of Agentic AI is, in a word, fragile. Ask anyone in the

trenches. These agents can be brilliant one minute and baffling the next.

Instructions get misunderstood. Tasks break in new contexts. Chaining agents

into even moderately complex workflows exposes just how early we are in this

game. Reliability? Still a work in progress. And yet, we’re seeing companies

experiment. Some are stitching together agents using LangChain or CrewAI. Others

are waiting for more robust offerings from Microsoft Copilot Studio, OpenAI’s

GPT-4o Agents, or Anthropic’s Claude toolsets. It’s the classic innovator’s

dilemma: Move too early, and you waste time on immature tech. Move too late, and

you miss the wave. Leaders must thread that needle — testing the waters while

tempering expectations. ... Here’s the scarier scenario I’m seeing more often:

“Shadow AI.” Employees are already using ChatGPT, Claude, Copilot, Perplexity —

all under the radar. They’re using it to write reports, generate code snippets,

answer emails, or brainstorm marketing copy. They’re more AI-savvy than their

leadership. But they don’t talk about it. Why? Fear. Risk. Politics. Meanwhile,

some executives are content to play cheerleader, mouthing AI platitudes on

LinkedIn but never rolling up their sleeves. That’s not leadership — that’s

theater.

Red Hat strives for simplicity in an ever more complex IT world

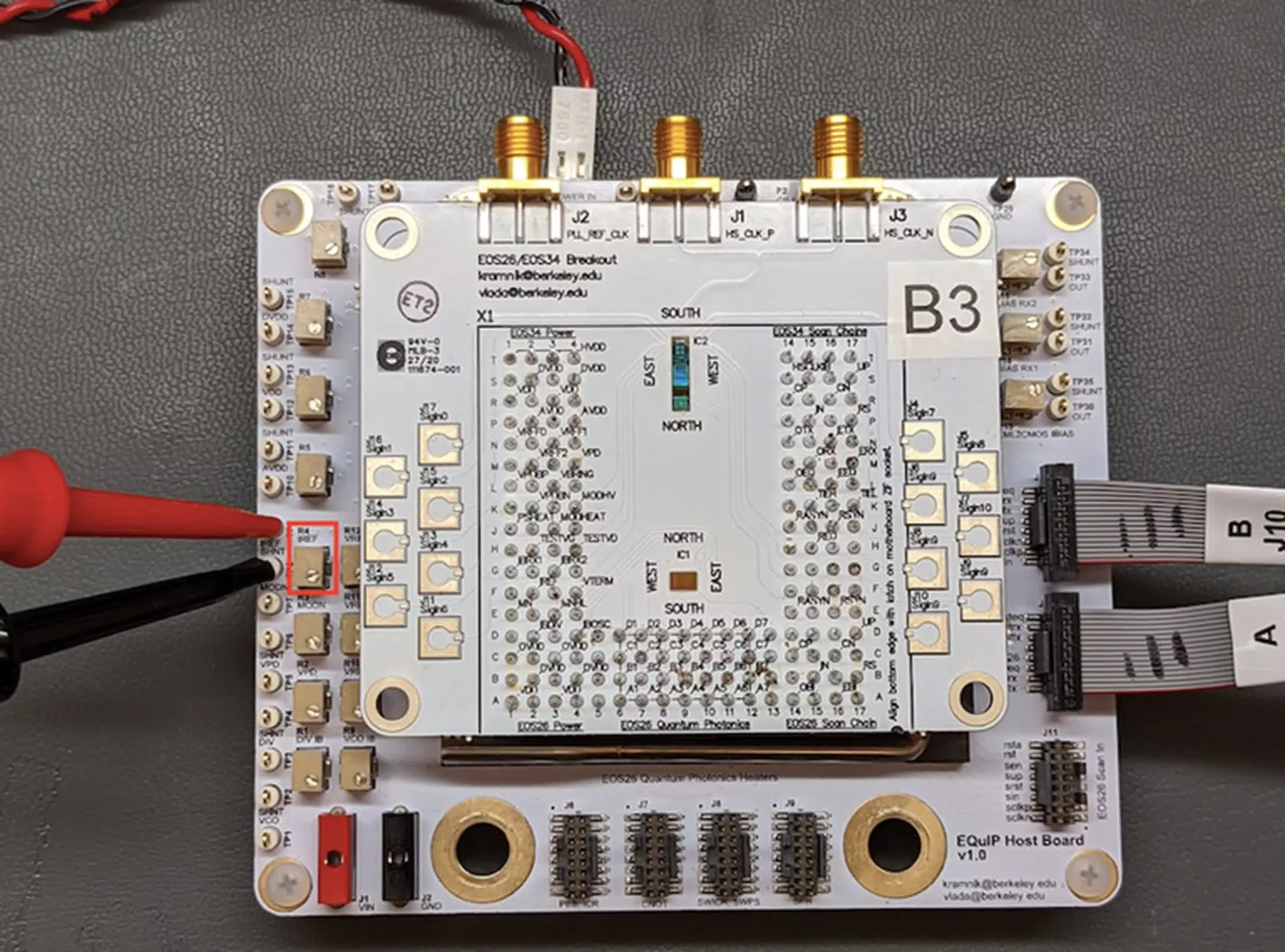

One of the most innovative developments in RHEL 10 is bootc in image mode, where

VMs run like a container and are part of the CI/CD pipeline. By using immutable

images, all changes are controlled from the development environment. Van der

Breggen illustrates this with a retail scenario: “I can have one POS system for

the payment kiosk, but I can also have another POS system for my cashiers. They

use the same base image. If I then upgrade that base image to later releases of

RHEL, I create one new base image, tag it in the environments, and then all 500

systems can be updated at once.” Red Hat Enterprise Linux Lightspeed acts as a

command-line assistant that brings AI directly into the terminal. ... For edge

devices, Red Hat uses a solution called Greenboot, which does not immediately

proceed to a rollback but waits until certain conditions are met.

After, for example, three reboots without a working system, it reverts to the

previous working release. However, not everything has been worked out perfectly

yet. Lightspeed currently only works online, while many customers would like to

use it offline because their RHEL systems are tucked away behind firewalls. Red

Hat is still looking into possibilities for an expansion here, although making

the knowledge base available offline poses risks to intellectual property.
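
The rollback policy described for edge devices (revert after, for example, three reboots without a working system) can be sketched as a small simulation. This is not Greenboot's actual code or configuration; `BootState`, `boot_once`, the `max_failed_boots` parameter, and the image tags in the demo are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class BootState:
    current_image: str
    previous_image: str
    failed_boots: int = 0


def boot_once(
    state: BootState,
    health_check: Callable[[str], bool],
    max_failed_boots: int = 3,
) -> BootState:
    """Simulate one boot: reset the counter on success, roll back after repeated failures."""
    if health_check(state.current_image):
        state.failed_boots = 0
        return state
    state.failed_boots += 1
    if state.failed_boots >= max_failed_boots:
        # Revert to the last release that was known to work.
        state.current_image = state.previous_image
        state.failed_boots = 0
    return state


if __name__ == "__main__":
    # Hypothetical image tags; the new image is treated as broken for the demo.
    state = BootState(current_image="pos-base:new", previous_image="pos-base:old")
    for _ in range(3):
        state = boot_once(state, lambda image: image == "pos-base:old")
    print(state.current_image)
```

The counter is what keeps the system from rolling back on a single transient failure, which matches the "does not immediately proceed to a rollback" behaviour in the excerpt.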

The state of DevOps and AI: Not just hype

The vision of AI that takes you from a list of requirements through work items to build to test to, finally, deployment is still nothing more than a vision. In many cases, DevOps tool vendors use AI to build solutions to the problems their customers have. The result is a mixture of point solutions that can solve immediate developer problems. ... Machine learning is speeding up testing by failing faster. Build steps get reordered automatically so those that are likely to fail happen earlier, which means developers aren’t waiting for the full build to know when they need to fix something. Often, the same system is used to detect flaky tests by muting tests where failure adds no value. ... Machine learning gradually helps identify the characteristics of a working system and can raise an alert when things go wrong. Depending on the governance, it can spot where a defect was introduced and start a production rollback while also providing potential remediation code to fix the defect. ... There’s a lot of puffery around AI, and DevOps vendors are not helping. A lot of their marketing emphasizes fear: “Your competitors are using AI, and if you’re not, you’re going to lose” is their message. Yet DevOps vendors themselves are only one or two steps ahead of you in their AI adoption journey. Don’t adopt AI pell-mell due to FOMO, and don’t expect to replace everyone under the CTO with a large language model.

5 Ways To Secure Your Industrial IoT Network

IIoT is a subcategory of the Internet of Things (IoT). It is made up of a system

of interconnected smart devices that uses sensors, actuators, controllers and

intelligent control systems to collect, transmit, receive and analyze data. ...

IIoT also has its own architecture that begins with the device layer, where

equipment, sensors, actuators and controllers collect raw operational data. That

information is passed through the network layer, which transmits it to the

internet via secure gateways. Next, the edge or fog computing layer processes

and filters the data locally before sending it to the cloud, helping reduce

latency and improving responsiveness. Once in the service and application

support layer, the data is stored, analyzed, and used to generate alerts and

insights. ... Many IIoT devices are not built with strong cybersecurity

protections. This is especially true for legacy machines that were never

designed to connect to modern networks. Without safeguards such as encryption or

secure authentication, these devices can become easy targets. ... Defending

against IIoT threats requires a layered approach that combines technology,

processes and people. Manufacturers should segment their networks to limit the

spread of attacks, apply strong encryption and authentication for connected

devices, and keep software and firmware regularly updated.
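
As a rough illustration of what "processes and filters the data locally before sending it to the cloud" can mean at the edge layer, the sketch below forwards a sensor reading only when it differs enough from the last value sent. The deadband technique, the function name `deadband_filter`, and the threshold are assumptions chosen for the example; the article does not prescribe a specific filtering method.

```python
from typing import Iterable, Iterator, Optional


def deadband_filter(readings: Iterable[float], threshold: float) -> Iterator[float]:
    """Yield only readings that differ from the last forwarded value by more than threshold."""
    last_sent: Optional[float] = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield value


if __name__ == "__main__":
    # Hypothetical temperature samples from one sensor.
    samples = [20.0, 20.1, 20.2, 21.0, 21.05, 19.5]
    print(list(deadband_filter(samples, threshold=0.5)))
```

Dropping near-identical readings at the edge reduces the volume of traffic sent upstream, at the cost of some resolution in the cloud-side data.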

AI Chatbots Are Emotionally Deceptive by Design

Even without deep connection, emotional attachment can lead users to place too

much trust in the content chatbots provide. Extensive interaction with a social

entity that is designed to be both relentlessly agreeable, and specifically

personalized to a user’s tastes, can also lead to social “deskilling,” as some

users of AI chatbots have flagged. This dynamic is simply unrealistic in genuine

human relationships. Some users may be more vulnerable than others to this kind

of emotional manipulation, like neurodiverse people or teens who have limited

experience building relationships. ... With AI chatbots, though, deceptive

practices are not hidden in user interface elements, but in their human-like

conversational responses. It’s time to consider a different design paradigm, one

that centers user protection: non-anthropomorphic conversational AI. All AI

chatbots can be less anthropomorphic than they are, at least by default, without

necessarily compromising function and benefit. A companion AI, for example, can

provide emotional support without saying, “I also feel that way sometimes.” This

non-anthropomorphic approach is already familiar in robot design, where

researchers have created robots that are purposefully designed to not be

human-like. This design choice is proven to more appropriately reflect system

capabilities, and to better situate robots as useful tools, not friends or

social counterparts.

How AI product teams are rethinking impact, risk, feasibility

We’re at a strange crossroads in the evolution of AI. Nearly every enterprise

wants to harness it. Many are investing heavily. But most are falling flat. AI

is everywhere — in strategy decks, boardroom buzzwords and headline-grabbing

POCs. Yet, behind the curtain, something isn’t working. ... One of the most

widely adopted prioritization models in product management is RICE — which

scores initiatives based on Reach, Impact, Confidence, and Effort. It’s elegant.

It’s simple. It’s also outdated. RICE was never designed for the world of

foundation models, dynamic data pipelines or the unpredictability of

inference-time reasoning. ... To make matters worse, there’s a growing mismatch

between what enterprises want to automate and what AI can realistically handle.

Stanford’s 2025 study, The Future of Work with AI Agents, provides a fascinating

lens. ... ARISE adds three crucial layers that traditional frameworks miss:

First, AI Desire — does solving this problem with AI add real value, or are we

just forcing AI into something that doesn’t need it? Second, AI Capability — do

we actually have the data, model maturity and engineering readiness to make this

happen? And third, Intent — is the AI meant to act on its own or assist a human?

Proactive systems have more upside, but they also come with far more risk. ARISE

lets you reflect that in your prioritization.
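
For readers unfamiliar with RICE, the commonly used scoring formula is Reach × Impact × Confidence ÷ Effort. The sketch below implements only that baseline. The excerpt does not say how ARISE combines AI Desire, AI Capability, and Intent into a score, so no attempt is made to reproduce it here; the `Initiative` class and the units in the comments are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    reach: float       # e.g. users affected per quarter (assumed unit)
    impact: float      # relative scale chosen by the team
    confidence: float  # 0.0 to 1.0
    effort: float      # e.g. person-months; must be positive


def rice_score(item: Initiative) -> float:
    """Classic RICE score: reach * impact * confidence / effort."""
    if item.effort <= 0:
        raise ValueError("effort must be positive")
    return item.reach * item.impact * item.confidence / item.effort
```

Nothing in this formula captures whether AI is the right tool for the problem, whether the data and models are ready, or how autonomously the system will act, which are the three dimensions the article says ARISE adds.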

Cloud control: The key to greener, leaner data centers

To fully unlock these cost benefits, businesses must adopt FinOps practices: the discipline of bringing engineering, finance, and operations together to optimize cloud spending. Without it, cloud costs can quickly spiral, especially in hybrid environments. But, with FinOps, organizations can forecast demand more accurately, optimise usage, and ensure every pound spent delivers value. ... Cloud platforms make it easier to use computing resources more efficiently. Even though the infrastructure stays online, hyperscalers can spread workloads across many customers, keeping their hardware busier and more productive. The advantage is that hyperscalers can distribute workloads across multiple customers and manage capacity at a large scale, allowing them to power down hardware when it's not in use. ... The combination of cloud computing and artificial intelligence (AI) is further reshaping data center operations. AI can analyse energy usage, detect inefficiencies, and recommend real-time adjustments. But running these models on-premises can be resource-intensive. Cloud-based AI services offer a more efficient alternative. Take Google, for instance. By applying AI to its data center cooling systems, it cut energy use by up to 40 percent. Other organizations can tap into similar tools via the cloud to monitor temperature, humidity, and workload patterns and automatically adjust cooling, load balancing, and power distribution.

You Backed Up Your Data, but Can You Bring It Back?

Many IT teams assume that the existence of backups guarantees successful

restoration. This misconception can be costly. A recent report from Veeam

revealed that 49% of companies failed to recover most of their servers after a

significant incident. This highlights a painful reality: Most backup strategies

focus too much on storage and not enough on service restoration. Having backup

files is not the same as successfully restoring systems. In real-world recovery

scenarios, teams face unknown dependencies, a lack of orchestration, incomplete

documentation, and gaps between infrastructure and applications. When services

need to be restored in a specific order and under intense pressure, any

oversight can become a significant bottleneck. ... Relying on a single backup

location creates a single point of failure. Local backups can be fast but are

vulnerable to physical threats, hardware failures, or ransomware attacks. Cloud

backups offer flexibility and off-site protection but may suffer bandwidth

constraints, cost limitations, or provider outages. A hybrid backup strategy

ensures multiple recovery paths by combining on-premises storage, cloud

solutions, and optionally offline or air-gapped options. This approach allows

teams to choose the fastest or most reliable method based on the nature of the

disruption.
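
Restoring services "in a specific order" is easier when the dependencies are written down ahead of time. One way to derive that order, sketched here with Python's standard `graphlib` module, is a topological sort over a dependency map. The service names and the `DEPENDENCIES` map are hypothetical; a real runbook would use the organization's own inventory.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical dependency map: each service lists what must be restored before it.
DEPENDENCIES: Dict[str, Set[str]] = {
    "dns": set(),
    "database": {"dns"},
    "auth": {"dns", "database"},
    "web-app": {"auth", "database"},
}


def restore_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return services in an order where every dependency is restored first.

    Raises graphlib.CycleError if the map contains a circular dependency.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    print(restore_order(DEPENDENCIES))
```

A circular dependency surfaces as an error when the map is built, rather than during an incident, which is the kind of unknown dependency the article warns about.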

Beyond Prevention: How Cybersecurity and Cyber Insurance Are Converging to Transform Risk Management

Historically, cybersecurity and cyber insurance have operated in silos, with

companies deploying technical defenses to fend off attacks while holding a cyber

insurance policy as a safety net. This fragmented approach often leaves gaps in

coverage and preparedness. ... The insurance sector is at a turning point.

Traditional models that assess risk at the point of policy issuance are rapidly

becoming outdated in the face of constantly evolving cyber threats. Insurers who

fail to adapt to an integrated model risk being outpaced by agile Cyber

Insurtech companies, which leverage cutting-edge cyber intelligence, machine

learning, and risk analytics to offer adaptive coverage and continuous

monitoring. Some insurers have already begun to reimagine their role—not only as

claim processors but as active partners in risk prevention. ... A combined

cybersecurity and insurance strategy goes beyond traditional risk management. It

aligns the objectives of both the insurer and the insured, with insurers

assuming a more proactive role in supporting risk mitigation. By reducing the

probability of significant losses through continuous monitoring and risk-based

incentives, insurers are building a more resilient client base, directly

translating to reduced claim frequency and severity.
