Quote for the day:

"You live longer once you realize that any time spent being unhappy is wasted." -- Ruth E. Renkl

Six hard truths for software development bosses

Everyone behaves differently when the boss is around. Everyone. And you, as a

boss, need to realize this. There are two things to realize here. Firstly,

when you are present, people will change who they are and what they say.

Secondly, you should consider that fact when deciding whether to be in the

room. ... Bosses need to realize that what they say, even comments that you

might think are flippant and not meant to be taken seriously, will be taken

seriously. ... The other side of that coin is that your silence and non-action

can have profound effects. Maybe you space out in a meeting and miss a

question. The team might think you blew them off and left the great idea

hanging. Maybe you forgot to answer an email. Maybe you had bigger fish to fry

and you were a bit short and dismissive of an approach by a direct report.

Small lapses can be easily misconstrued by your team. ... You are the boss.

You have the power to promote, demote, and award raises and bonuses. These

powers are important, and people will see you in that light. Even your best

attempts at being cordial, friendly, and collegial will not overcome the

slight apprehension your authority will engender. Your mood on any given day

will be noticed and tracked. ... You can and should have input into technical

decisions and design decisions, but your team will want to be the ones driving

what direction things take and how things get done.

Everyone behaves differently when the boss is around. Everyone. And you, as a

boss, need to realize this. There are two things to realize here. Firstly,

when you are present, people will change who they are and what they say.

Secondly, you should consider that fact when deciding whether to be in the

room. ... Bosses need to realize that what they say, even comments that you

might think are flippant and not meant to be taken seriously, will be taken

seriously. ... The other side of that coin is that your silence and non-action

can have profound effects. Maybe you space out in a meeting and miss a

question. The team might think you blew them off and left the great idea

hanging. Maybe you forgot to answer an email. Maybe you had bigger fish to fry

and you were a bit short and dismissive of an approach by a direct report.

Small lapses can be easily misconstrued by your team. ... You are the boss.

You have the power to promote, demote, and award raises and bonuses. These

powers are important, and people will see you in that light. Even your best

attempts at being cordial, friendly, and collegial will not overcome the

slight apprehension your authority will engender. Your mood on any given day

will be noticed and tracked. ... You can and should have input into technical

decisions and design decisions, but your team will want to be the ones driving

what direction things take and how things get done.

AI prompt injection gets real — with macros the latest hidden threat

“Broadly speaking, this threat vector — ‘malicious prompts embedded in macros’

— is yet another prompt injection method,” Roberto Enea, lead data scientist

at cybersecurity services firm Fortra, told CSO. “In this specific case, the

injection is done inside document macros or VBA [Visual Basic for

Applications] scripts and is aimed at AI systems that analyze files.” Enea

added: “Typically, the end goal is to mislead the AI system into classifying

malware as safe.” ... “Attackers could embed hidden instructions in common

business files like emails or Word documents, and when Copilot processed the

file, it executed those instructions automatically,” Quentin Rhoads-Herrera,

VP of cybersecurity services at Stratascale, explained. In response to the

vulnerability, Microsoft recommended patching, restricting Copilot access,

stripping hidden metadata from shared files, and enabling its built-in AI

security controls. ... “We’ve already seen proof-of-concept attacks where

malicious prompts are hidden inside documents, macros, or configuration files

to trick AI systems into exfiltrating data or executing unintended actions,”

Stratascale’s Rhoads-Herrera commented. “Researchers have also demonstrated

how LLMs can be misled through hidden instructions in code comments or

metadata, showing the same principle at work.” Rhoads-Herrera added: “While

some of these remain research-driven, the techniques are quickly moving into

the hands of attackers who are skilled at weaponizing proof-of-concepts.”

“Broadly speaking, this threat vector — ‘malicious prompts embedded in macros’

— is yet another prompt injection method,” Roberto Enea, lead data scientist

at cybersecurity services firm Fortra, told CSO. “In this specific case, the

injection is done inside document macros or VBA [Visual Basic for

Applications] scripts and is aimed at AI systems that analyze files.” Enea

added: “Typically, the end goal is to mislead the AI system into classifying

malware as safe.” ... “Attackers could embed hidden instructions in common

business files like emails or Word documents, and when Copilot processed the

file, it executed those instructions automatically,” Quentin Rhoads-Herrera,

VP of cybersecurity services at Stratascale, explained. In response to the

vulnerability, Microsoft recommended patching, restricting Copilot access,

stripping hidden metadata from shared files, and enabling its built-in AI

security controls. ... “We’ve already seen proof-of-concept attacks where

malicious prompts are hidden inside documents, macros, or configuration files

to trick AI systems into exfiltrating data or executing unintended actions,”

Stratascale’s Rhoads-Herrera commented. “Researchers have also demonstrated

how LLMs can be misled through hidden instructions in code comments or

metadata, showing the same principle at work.” Rhoads-Herrera added: “While

some of these remain research-driven, the techniques are quickly moving into

the hands of attackers who are skilled at weaponizing proof-of-concepts.”Are you really ready for AI? Exposing shadow tools in your organisation

When an organisation doesn’t regulate an approved framework of AI tools in

place, its employees will commonly turn to using these applications across

everyday actions. By now, everyone is aware of the existence of generative AI

assets, whether they are actively using them or not, but without a proper

ruleset in place, everyday employee actions can quickly become security

nightmares. This can be everything from employees pasting sensitive client

information or proprietary code into public generative AI tools to developers

downloading promising open-source models from unverified repositories. ... The

root cause of turning to shadow AI isn’t malicious intent. Unlike cyber

actors, aiming to disrupt and exploit business infrastructure weaknesses for a

hefty payout, employees aren’t leaking data outside of your organisation

intentionally. AI is simply an accessible, powerful tool that many find

exciting. In the absence of clear policies, training and oversight, and the

increased pressure of faster, greater delivery, people will naturally seek the

most effective support to get the job done. ... Regardless, you cannot protect

against what you can’t see. Tools like Data Loss Prevention (DLP) and Cloud

Access Security Brokers (CASB), which detect unauthorised AI use, must be an

essential part of your security monitoring toolkit. Ensuring these alerts

connect directly to your SIEM and defining clear processes for escalation and

correction are also key for maximum security.

When an organisation doesn’t regulate an approved framework of AI tools in

place, its employees will commonly turn to using these applications across

everyday actions. By now, everyone is aware of the existence of generative AI

assets, whether they are actively using them or not, but without a proper

ruleset in place, everyday employee actions can quickly become security

nightmares. This can be everything from employees pasting sensitive client

information or proprietary code into public generative AI tools to developers

downloading promising open-source models from unverified repositories. ... The

root cause of turning to shadow AI isn’t malicious intent. Unlike cyber

actors, aiming to disrupt and exploit business infrastructure weaknesses for a

hefty payout, employees aren’t leaking data outside of your organisation

intentionally. AI is simply an accessible, powerful tool that many find

exciting. In the absence of clear policies, training and oversight, and the

increased pressure of faster, greater delivery, people will naturally seek the

most effective support to get the job done. ... Regardless, you cannot protect

against what you can’t see. Tools like Data Loss Prevention (DLP) and Cloud

Access Security Brokers (CASB), which detect unauthorised AI use, must be an

essential part of your security monitoring toolkit. Ensuring these alerts

connect directly to your SIEM and defining clear processes for escalation and

correction are also key for maximum security.How to error-proof your team’s emergency communications

Hierarchy paralysis occurs when critical information is withheld by junior staff due to the belief that speaking up may undermine the chain of command. Junior operators may notice an anomaly or suspect a procedure is incorrect, but often neglect to disclose their concerns until after a mistake has happened. They may assume their input will be dismissed or even met with backlash due to their position. In many cases, their default stance is to believe that senior staff are acting on insight that they themselves lack. CRM trains employees to follow a structured verbal escalation path during critical incidents. Similar to emergency operations procedures (EOPs), staff are taught to express their concerns using short, direct phrases. This approach helps newer employees focus on the issue itself rather than navigating the interaction’s social aspects — an area that can lead to cognitive overload or delayed action. In such scenarios, CRM recommends the “2-challenge rule”: team members should attempt to communicate an observed issue twice, and if the issue remains unaddressed, escalate it to upper management. ... Strengthening emergency protocols can help eliminate miscommunication between employees and departments. Owners and operators can adopt strategies from other mission-critical industries to reduce human error and improve team responsiveness. While interpersonal issues between departments and individuals in different roles are inevitable, tighter emergency procedures can ensure consistency and more predictable team behavior.SpamGPT – AI-powered Attack Tool Used By Hackers For Massive Phishing Attack

.webp?w=696&resize=696,0&ssl=1) SpamGPT’s dark-themed user interface provides a comprehensive dashboard for

managing criminal campaigns. It includes modules for setting up SMTP/IMAP

servers, testing email deliverability, and analyzing campaign results features

typically found in Fortune 500 marketing tools but repurposed for cybercrime.

The platform gives attackers real-time, agentless monitoring dashboards that

provide immediate feedback on email delivery and engagement. ... Attackers no

longer need strong writing skills; they can simply prompt the AI to create

scam templates for them. The toolkit’s emphasis on scale is equally

concerning, as it promises guaranteed inbox delivery to popular providers like

Gmail, Outlook, and Microsoft 365 by abusing trusted cloud services such as

Amazon AWS and SendGrid to mask its malicious traffic. ... What once required

significant technical expertise can now be executed by a single operator with

a ready-made toolkit. The rise of such AI-driven platforms signals a new

evolution in cybercrime, where automation and intelligent content generation

make attacks more scalable, convincing, and difficult to detect. To counter

this emerging threat, organizations must harden their email defenses.

Enforcing strong email authentication protocols such as DMARC, SPF, and DKIM

is a critical first step to make domain spoofing more difficult. Furthermore,

enterprises should deploy AI-powered email security solutions capable of

detecting the subtle linguistic patterns and technical signatures of

AI-generated phishing content.

SpamGPT’s dark-themed user interface provides a comprehensive dashboard for

managing criminal campaigns. It includes modules for setting up SMTP/IMAP

servers, testing email deliverability, and analyzing campaign results features

typically found in Fortune 500 marketing tools but repurposed for cybercrime.

The platform gives attackers real-time, agentless monitoring dashboards that

provide immediate feedback on email delivery and engagement. ... Attackers no

longer need strong writing skills; they can simply prompt the AI to create

scam templates for them. The toolkit’s emphasis on scale is equally

concerning, as it promises guaranteed inbox delivery to popular providers like

Gmail, Outlook, and Microsoft 365 by abusing trusted cloud services such as

Amazon AWS and SendGrid to mask its malicious traffic. ... What once required

significant technical expertise can now be executed by a single operator with

a ready-made toolkit. The rise of such AI-driven platforms signals a new

evolution in cybercrime, where automation and intelligent content generation

make attacks more scalable, convincing, and difficult to detect. To counter

this emerging threat, organizations must harden their email defenses.

Enforcing strong email authentication protocols such as DMARC, SPF, and DKIM

is a critical first step to make domain spoofing more difficult. Furthermore,

enterprises should deploy AI-powered email security solutions capable of

detecting the subtle linguistic patterns and technical signatures of

AI-generated phishing content.How attackers weaponize communications networks

The most attractive targets for advanced threat actors are not endpoint devices or individual servers, but the foundational communications networks that connect everything. This includes telecommunications providers, ISPs, and the routing infrastructure that forms the internet’s backbone. These networks are a “target-rich environment” because compromising a single point of entry can grant access to a vast amount of data from a multitude of downstream targets. The primary motivation is overwhelmingly geopolitical. We’re seeing a trend of nation-state actors, such as those behind the Salt Typhoon campaign, moving beyond corporate espionage to a more strategic, long-term intelligence-gathering mission. ... Two recent trends are particularly telling and serve as major warning signs. The first is the sheer scale and persistence of these attacks. ... The second trend is the fusion of technical exploits with AI-powered social engineering. ... A key challenge is the lack of a standardized global approach. Differing regulations around data retention, privacy, and incident reporting can create a patchwork of security requirements that threat actors can easily exploit. For a global espionage campaign, a weak link in one country’s regulatory framework can compromise an entire international communications chain. The goal of international policy should be to establish a baseline of security that includes mandatory incident reporting, a unified approach to patching known vulnerabilities, and a focus on building a collective defense.AI's free web scraping days may be over, thanks to this new licensing protocol

AI companies are capturing as much content as possible from websites while

also extracting information. Now, several heavyweight publishers and tech

companies -- Reddit, Yahoo, People, O'Reilly Media, Medium, and Ziff Davis

(ZDNET's parent company) -- have developed a response: the Really Simple

Licensing (RSL) standard. You can think of RSL as Really Simple Syndication's

(RSS) younger, tougher brother. While RSS is about syndication, getting your

words, stories, and videos out onto the wider web, RSL says: "If you're an AI

crawler gobbling up my content, you don't just get to eat for free anymore."

The idea behind RSL is brutally simple. Instead of the old robots.txt file --

which only said, "yes, you can crawl me," or "no, you can't," and which AI

companies often ignore -- publishers can now add something new:

machine-readable licensing terms. Want an attribution? You can demand it. Want

payment every time an AI crawler ingests your work, or even every time it

spits out an answer powered by your article? Yep, there's a tag for that too.

... It's a clever fix for a complex problem. As Tim O'Reilly, the O'Reilly

Media CEO and one of the RSL initiative's high-profile backers, said: "RSS was

critical to the internet's evolution…but today, as AI systems absorb and

repurpose that same content without permission or compensation, the rules need

to evolve. RSL is that evolution."

AI companies are capturing as much content as possible from websites while

also extracting information. Now, several heavyweight publishers and tech

companies -- Reddit, Yahoo, People, O'Reilly Media, Medium, and Ziff Davis

(ZDNET's parent company) -- have developed a response: the Really Simple

Licensing (RSL) standard. You can think of RSL as Really Simple Syndication's

(RSS) younger, tougher brother. While RSS is about syndication, getting your

words, stories, and videos out onto the wider web, RSL says: "If you're an AI

crawler gobbling up my content, you don't just get to eat for free anymore."

The idea behind RSL is brutally simple. Instead of the old robots.txt file --

which only said, "yes, you can crawl me," or "no, you can't," and which AI

companies often ignore -- publishers can now add something new:

machine-readable licensing terms. Want an attribution? You can demand it. Want

payment every time an AI crawler ingests your work, or even every time it

spits out an answer powered by your article? Yep, there's a tag for that too.

... It's a clever fix for a complex problem. As Tim O'Reilly, the O'Reilly

Media CEO and one of the RSL initiative's high-profile backers, said: "RSS was

critical to the internet's evolution…but today, as AI systems absorb and

repurpose that same content without permission or compensation, the rules need

to evolve. RSL is that evolution." AI is changing the game for global trade: Nagendra Bandaru, Wipro

AI is revolutionising global supply chain and trade management by enabling

businesses across industries to make real-time, intelligent decisions. This

transformative shift is driven by the deployment of AI agents, which

dynamically respond to changing tariff regimes, logistics constraints, and

demand fluctuations. Moving beyond traditional static models, AI agents are

helping create more adaptive and responsive supply chains. ... The strategic

focus is also evolving. While cost optimisation remains important, AI is now

being leveraged to de-risk operations, anticipate geopolitical disruptions,

and ensure continuity. In essence, agentic AI is reshaping supply chains

into predictive, adaptive ecosystems that align more closely with the

complexities of global trade. ... The next frontier is going to be

threefold: first, the rise of agentic AI at scale marks a shift from

isolated use cases to enterprise-wide deployment of autonomous agents

capable of managing end-to-end trade ecosystems; second, the development of

sovereign and domain-specific language models is enabling lightweight,

highly contextualised solutions that uphold data sovereignty while

delivering robust, enterprise-grade outcomes; and third, the convergence of

AI with emerging technologies—including blockchain for provenance and

quantum computing for optimisation—is poised to redefine global trade

dynamics.

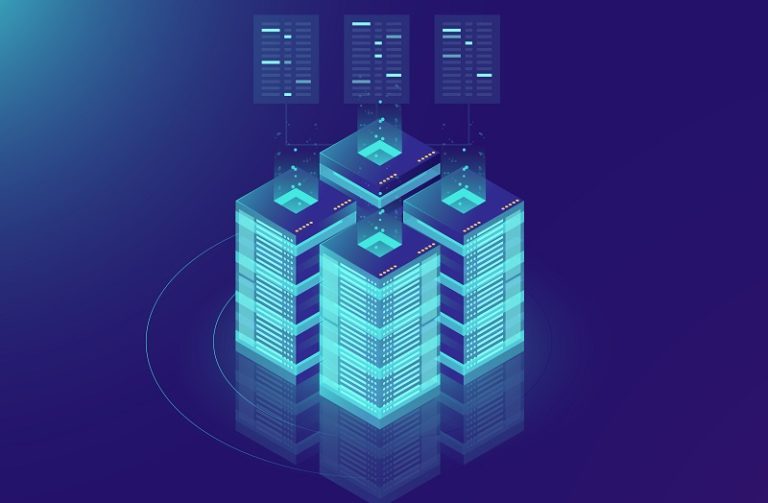

5 challenges every multicloud strategy must address

Transferring AI data among various cloud services and providers also adds

complexity — but also significant risks. “Tackling software sprawl, especially

as organizations accelerate their adoption of AI, is a top action for CIOs and

CTOs,” says Mindy Lieberman, CIO at database platform provider MongoDB. ... A

multicloud environment can complicate the management of data sovereignty.

Companies need to ensure that data remains in line with the laws and

regulations of the specific geographic regions where it is stored and

processed. ... Deploying even one cloud service can present cybersecurity

risks for an enterprise, so having a strong security program in place is all

the more vital for a multicloud environment. The risks stem from expanded

attack surfaces, inconsistent security practices among service providers,

increased complexity of the IT infrastructure, fragmented visibility, and

other factors. IT needs to be able to manage user access to cloud services and

detect threats across multiple environments — in many cases without even

having a full inventory of cloud services. ... “With greater complexity comes

more potential avenues of failure, but also more opportunities for

customization and optimization,” Wall says. “Each cloud provider offers unique

strengths and weaknesses, which means forward-thinking enterprises must know

how to leverage the right services at the right time.”

Transferring AI data among various cloud services and providers also adds

complexity — but also significant risks. “Tackling software sprawl, especially

as organizations accelerate their adoption of AI, is a top action for CIOs and

CTOs,” says Mindy Lieberman, CIO at database platform provider MongoDB. ... A

multicloud environment can complicate the management of data sovereignty.

Companies need to ensure that data remains in line with the laws and

regulations of the specific geographic regions where it is stored and

processed. ... Deploying even one cloud service can present cybersecurity

risks for an enterprise, so having a strong security program in place is all

the more vital for a multicloud environment. The risks stem from expanded

attack surfaces, inconsistent security practices among service providers,

increased complexity of the IT infrastructure, fragmented visibility, and

other factors. IT needs to be able to manage user access to cloud services and

detect threats across multiple environments — in many cases without even

having a full inventory of cloud services. ... “With greater complexity comes

more potential avenues of failure, but also more opportunities for

customization and optimization,” Wall says. “Each cloud provider offers unique

strengths and weaknesses, which means forward-thinking enterprises must know

how to leverage the right services at the right time.”

/dq/media/media_files/2025/08/28/will-the-future-be-consolidated-platforms-2025-08-28-12-43-37.jpg)