The importance of Robotic Process Automation

Michael believes that RPA will grow in importance in the future for a number of reasons. Firstly, understanding: it is no longer an unknown technology. So many large organizations now have Digital Workforces that the worry and uncertainty around them have gone. Secondly, there is a real drive to add ‘Intelligent’ ahead of ‘Automation’. While we aren’t quite at widespread adoption of ‘Intelligent Automation’ just yet, these cognitive elements are getting better and more available each week. Once more use cases emerge, we will see the early adopters of RPA take the next step and begin to ‘add the human back into the robot’. Thirdly, the net cost of RPA is decreasing. There are now free community editions, additional software included with the platforms, and free training. The barriers to entry are disappearing. Furthermore, Mahesh highlights that the global pandemic and the economic crisis have put many organizations in a state of flux, forced them to change business processes, and highlighted the need for more automation through RPA.

How AI Is Changing The Real Estate Landscape

AI has applications in estimating the market value of properties and predicting their future price trajectory. For example, ML algorithms combine current market data with public information such as mobility metrics, crime rates, schools, and buying trends to arrive at the best pricing strategy. The AI uses a regression algorithm, accounting for property features such as size, number of rooms, property age, home quality characteristics, and macroeconomic and demographic factors, to calculate the best price range. In this way, the algorithms can predict prices based on geographic location or future development. Online real estate marketplace Zillow publishes home valuations for 104 million homes across the US. The company, founded by former Microsoft executives, uses cutting-edge statistical and machine learning models to vet hundreds of data points for individual homes. Zillow employs a neural network-based model to extract insights from huge volumes of data, including tax assessor records and direct feeds from hundreds of multiple listing services and brokerages.
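The regression step described above can be illustrated with a minimal sketch. It assumes a plain linear model fitted by ordinary least squares on three hypothetical features (size in square metres, number of rooms, property age in years). The function names and sample data are invented for illustration; this is not Zillow's model, which the article describes as neural network-based.

```python
from typing import List, Sequence


def fit_linear_regression(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Fit y ~ w0 + w1*x1 + ... + wk*xk by ordinary least squares.

    Solves the normal equations (X^T X) w = X^T y with Gauss-Jordan
    elimination. Returns [intercept, w1, ..., wk].
    """
    rows = [[1.0, *map(float, r)] for r in X]  # prepend a bias column of 1s
    k = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * t for r, t in zip(rows, y))]
        for i in range(k)
    ]
    for col in range(k):
        # Partial pivoting: swap in the row with the largest entry in this column.
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("features are collinear; no unique solution")
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        # Eliminate this column from every other row.
        for r in range(k):
            if r != col:
                f = A[r][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][k] for i in range(k)]


def predict(weights: Sequence[float], features: Sequence[float]) -> float:
    """Apply fitted weights to one property's features."""
    return weights[0] + sum(w * x for w, x in zip(weights[1:], features))


# Hypothetical training data: (size_m2, rooms, age_years) -> sale price.
homes = [(100, 2, 10), (150, 3, 5), (200, 3, 20), (120, 4, 30), (180, 2, 1), (90, 1, 15)]
prices = [80_000, 105_000, 100_000, 84_000, 105_000, 63_000]

weights = fit_linear_regression(homes, prices)
estimate = predict(weights, (160, 3, 8))
```

The sample prices were generated from an exact linear rule (50,000 base, plus 200 per m², plus 10,000 per room, minus 1,000 per year of age), so the fit recovers those coefficients and `estimate` comes out at about 104,000. Real valuation models use many more features and must cope with noisy, non-linear data.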

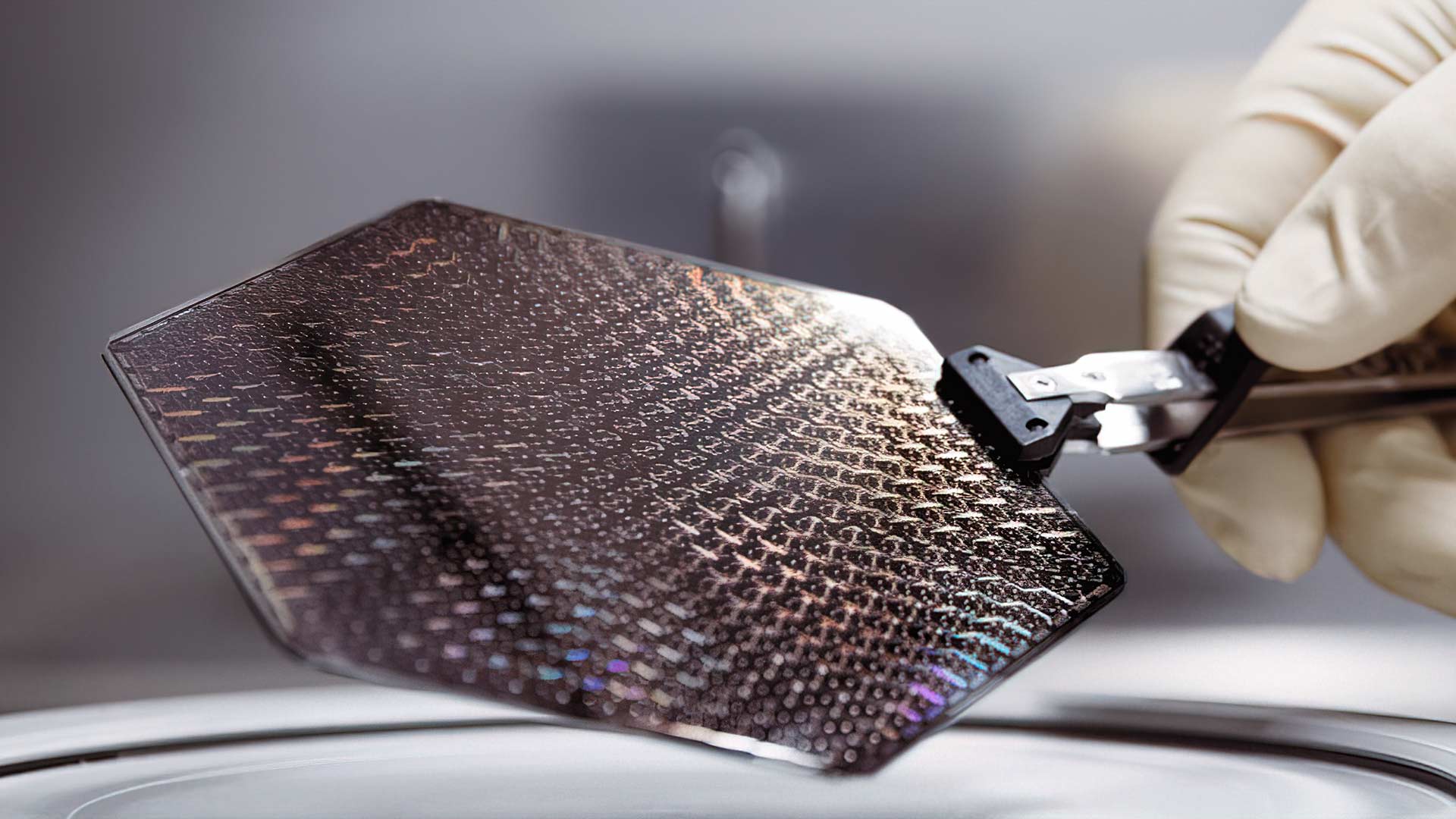

Quantum Computing just got desktop sized

Quantum computing is advancing in leaps and bounds. Now there is an operating system available on a chip, thanks to a Cambridge University-led consortium whose vision is to make quantum computers as transparent and well known as the Raspberry Pi. The Cambridge Independent Press likens this “sensational breakthrough” to the moment in the 1960s when computers shrank from filling a room to sitting on top of a desk. Around 50 quantum computers have been built to date, and they all use different software; there is no quantum equivalent of Windows, iOS, or Linux. The new project will deliver an OS that allows the same quantum software to run on different types of quantum computing hardware. The system, Deltaflow.OS (full name Deltaflow-on-ARTIQ), has been designed by Cambridge University startup Riverlane. It runs on a chip developed by consortium member SEEQC and uses a fraction of the space needed by previous hardware. SEEQC is headquartered in the US with a major R&D site in the UK. “In its most simple terms, we have put something that once filled a room onto a chip the size of a coin, and it works,” said Dr. Matthew Hutchings.
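The idea of one quantum program running on different hardware can be sketched as a compile step that rewrites a portable circuit into each machine's native gates. Everything here is an assumption for illustration: the back-end names and native gate sets are hypothetical, and this is not Deltaflow.OS's interface. The decompositions themselves are standard: a Hadamard equals RZ(π/2)·SX·RZ(π/2) up to a global phase, and a CNOT equals a CZ with a Hadamard on the target qubit before and after.

```python
import math
from typing import Any, Dict, List, Set, Tuple

# A gate is ("name", *qubits), or ("rz", angle, qubit) for rotations.
Gate = Tuple[Any, ...]

# Hypothetical native gate sets for two imaginary hardware back ends.
NATIVE_GATES: Dict[str, Set[str]] = {
    "backend_a": {"h", "cx"},
    "backend_b": {"rz", "sx", "cz"},
}


def decompose(gate: Gate) -> List[Gate]:
    """Rewrite one gate into simpler gates (exact up to global phase)."""
    name = gate[0]
    if name == "h":
        q = gate[1]
        return [("rz", math.pi / 2, q), ("sx", q), ("rz", math.pi / 2, q)]
    if name == "cx":
        control, target = gate[1], gate[2]
        return [("h", target), ("cz", control, target), ("h", target)]
    raise ValueError(f"no decomposition rule for {name!r}")


def compile_for(circuit: List[Gate], backend: str) -> List[Gate]:
    """Expand non-native gates until only the back end's native gates remain."""
    native = NATIVE_GATES[backend]
    out: List[Gate] = []
    pending = list(circuit)
    while pending:
        gate = pending.pop(0)
        if gate[0] in native:
            out.append(gate)
        else:
            pending[0:0] = decompose(gate)  # expand in place, keep gate order
    return out


# The same portable program (a Bell-state circuit) targeted at both back ends.
bell = [("h", 0), ("cx", 0, 1)]
for name in NATIVE_GATES:
    print(name, compile_for(bell, name))
```

On `backend_a` the circuit passes through unchanged; on `backend_b` it expands to ten native gates. A real compiler would also optimize the result, for example by merging adjacent RZ rotations.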

This Week in Programming: GitHub Copilot, Copyright Infringement and Open Source Licensing

On the question of copyright infringement, Guadamuz first points to a research paper by Albert Ziegler, published by GitHub, which looks at situations where Copilot reproduces exact text and finds those instances to be exceedingly rare. In the paper, Ziegler notes that “when a suggestion contains snippets copied from the training set, the UI should simply tell you where it’s quoted from,” as a safeguard against infringement claims. On the GPL and “derivative” works, Guadamuz again disagrees, arguing that the issue comes down to how the GPL defines modified works, and that “derivation, modification, or adaptation (depending on your jurisdiction) has a specific meaning within the law and the license.” “You only need to comply with the license if you modify the work, and this is done only if your code is based on the original to the extent that it would require a copyright permission, otherwise it would not require a license,” writes Guadamuz. “As I have explained, I find it extremely unlikely that similar code copied in this manner would meet the threshold of copyright infringement, there is not enough code copied...”
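Ziegler's proposal that the UI should say where a copied snippet came from implies some way of matching suggestions against the training set. A minimal sketch, assuming exact matching of whitespace-separated token n-grams (a simplification; this is not GitHub's implementation, and the corpus paths below are hypothetical):

```python
from typing import Dict, List, Optional, Tuple

NGram = Tuple[str, ...]


def tokenize(code: str) -> List[str]:
    # Deliberately naive: split on whitespace only.
    return code.split()


def build_index(corpus: Dict[str, str], n: int) -> Dict[NGram, str]:
    """Map every n-token window in the corpus to the first source it appears in."""
    index: Dict[NGram, str] = {}
    for source, text in corpus.items():
        toks = tokenize(text)
        for i in range(len(toks) - n + 1):
            index.setdefault(tuple(toks[i:i + n]), source)
    return index


def find_quote_source(suggestion: str, index: Dict[NGram, str], n: int) -> Optional[str]:
    """Return the source of the first n-token window shared with the corpus, if any."""
    toks = tokenize(suggestion)
    for i in range(len(toks) - n + 1):
        source = index.get(tuple(toks[i:i + n]))
        if source is not None:
            return source
    return None


corpus = {
    "repo_a/util.c": "int add ( int a , int b ) { return a + b ; }",
    "repo_b/double.py": "def double ( x ) : return x * 2",
}
index = build_index(corpus, n=6)
print(find_quote_source("static int add ( int a , int b ) {", index, n=6))  # prints repo_a/util.c
print(find_quote_source("def triple ( y ) : return y * 3", index, n=6))     # prints None
```

The window size `n` trades false alarms against missed matches: short windows flag common idioms, while long windows only catch substantial verbatim copies, which is closer to the kind of copying the infringement debate is about.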

Django Vs Express: The Key Differences To Observe in 2021

Django is a Python framework built for rapid development, with a pragmatic and clean design. It is known for its ‘batteries included’ philosophy: much of what a typical web application needs ships with the framework, ready to use. Here are some of the key features of Django: it handles content management, user authentication, site maps, and RSS feeds effectively; and it is extremely fast, having been designed to help developers take web applications from initial concept to completion as quickly as possible. ...

Express.js is a flexible and minimal Node.js web application framework that provides a robust set of features for web and mobile applications. With a wide range of HTTP utility methods and middleware at your disposal, building a dynamic API is quick and easy. Many popular web frameworks are built on top of Express. Below are some of the noteworthy features of Express.js: middleware functions have access to the client request, the response, and the next middleware in the chain, and are primarily responsible for organizing the framework's different functions in an orderly way; and Express.js exposes many commonly used Node.js features as functions that can be used freely anywhere in the application.
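The middleware chain is easiest to see in code. Express itself is JavaScript; to keep this document's examples in one language, the sketch below imitates the same request, response, next pattern in Python. It is not Express's API, and the `run_chain`, `logger`, `require_user`, and `hello` functions are hypothetical.

```python
from typing import Any, Callable, Dict, List

Request = Dict[str, Any]
Response = Dict[str, Any]
Next = Callable[[], None]
Middleware = Callable[[Request, Response, Next], None]


def run_chain(middlewares: List[Middleware], req: Request, res: Response) -> Response:
    """Call each middleware in order; each one decides whether to call next_()."""
    def dispatch(i: int) -> None:
        if i < len(middlewares):
            middlewares[i](req, res, lambda: dispatch(i + 1))

    dispatch(0)
    return res


def logger(req: Request, res: Response, next_: Next) -> None:
    res.setdefault("log", []).append(f"{req['method']} {req['path']}")
    next_()  # pass control to the next middleware


def require_user(req: Request, res: Response, next_: Next) -> None:
    if req.get("user") is None:
        res.update(status=401, body="unauthorized")
        return  # end the chain without calling next_()
    next_()


def hello(req: Request, res: Response, next_: Next) -> None:
    res.update(status=200, body=f"hello {req['user']}")


app = [logger, require_user, hello]
ok = run_chain(app, {"method": "GET", "path": "/", "user": "ana"}, {})
denied = run_chain(app, {"method": "GET", "path": "/"}, {})
print(ok)
print(denied)
```

The first request passes through all three functions and ends with status 200; the second is stopped by `require_user` with status 401, so `hello` never runs. In Express, functions with the equivalent `(req, res, next)` signature are registered with `app.use()`.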

Unleashing the Power of MLOps and DataOps in Data Science

Data is overwhelming, and so is the science of mining, analyzing, and delivering it for real-time consumption. However valuable data is for business, it can still put the privacy of millions of users at serious risk. That is one reason for the recent shift toward more automated processes. In the past year, enterprises sticking to conventional analytics have realized that they will not survive much longer without a makeover. For example, enterprises are experimenting with micro-databases, each storing master data for a single business entity. Self-service practices to discover, cleanse, and prepare data are also being adopted more widely. Enterprises have understood the importance of embracing the ‘XOps’ mindset and of delegating more important roles to MLOps and DataOps practices. MLOps matters because putting ML models to work is more difficult than training them or deploying them as APIs, and the difficulty grows in the absence of governance tools.

TrickBot Spruces Up Its Banking Trojan Module

TrickBot is a sophisticated (and common) modular threat known for stealing credentials and delivering a range of follow-on ransomware and other malware. But it started out as a pure-play banking trojan, harvesting online banking credentials by redirecting unsuspecting users to malicious copycat websites. According to researchers at Kryptos Logic Threat Intelligence, this functionality is carried out by TrickBot’s webinject module. When a victim attempts to visit a target URL (such as a banking site), the TrickBot webinject package performs either a static or a dynamic web injection to achieve its goal. “The static inject type causes the victim to be redirected to an attacker-controlled replica of the intended destination site, where credentials can then be harvested,” the researchers said in a Thursday posting. “The dynamic inject type transparently forwards the server response to the TrickBot command-and-control server (C2), where the source is then modified to contain malicious components before being returned to the victim as though it came from the legitimate site.”

How a college student founded a free and open source operating system

FreeDOS was a very popular project throughout the 1990s and into the early 2000s, but the community isn’t as big these days. Still, it’s great that we are an engaged and active group. If you look at the news items on our website, you’ll see we post updates on a fairly regular basis. It’s hard to estimate the size of the community. I’d say we have a few dozen members who are very active, and a few dozen others who reappear occasionally to post new versions of their programs. I think maintaining an active community that’s still working on an open source DOS from 1994 is a great sign. Some members have been with us from the very beginning, and I’m really thankful to count them as friends. We do video hangouts on a semi-regular basis. It’s great to finally “meet” the folks I’ve only exchanged emails with over the years. It’s at meetings like this that I remember open source is more than just writing code; it’s about a community. And while I’ve always done well with our virtual community that communicates via email, I really appreciated getting to talk to people without the asynchronous delay or artificial filter of email. Making that real-time connection means a lot to me.

Let Google Cloud’s predictive services autoscale your infrastructure

Predictive autoscaling uses your instance group’s CPU history to forecast future load and calculate how many VMs are needed to meet your target CPU utilization. Our machine learning adjusts the forecast based on recurring load patterns for each managed instance group (MIG). You can specify how far in advance you want the autoscaler to create new VMs by configuring the application initialization period. For example, if your app takes 5 minutes to initialize, the autoscaler will create new instances 5 minutes ahead of the anticipated load increase. This allows you to keep your CPU utilization within the target and keep your application responsive even when demand grows sharply. Many of our customers have different capacity needs at different times of the day or on different days of the week. Our forecasting model understands weekly and daily patterns to account for these differences. For example, if your app usually needs less capacity on the weekend, our forecast will capture that. Or, if you have higher capacity needs during working hours, we also have you covered.
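The sizing logic above comes down to two steps: forecast the load one initialization period ahead, then divide by what each VM can carry at the target utilization, i.e. VMs = ceil(forecast / (vCPUs per VM × target utilization)). A minimal sketch, assuming CPU demand is sampled every 5 minutes and measured in vCPUs. The seasonal-naive forecast (reuse the value from the same time one week earlier) is a deliberately simple stand-in for Google Cloud's machine learning model, and all names here are hypothetical.

```python
import math
from typing import Sequence

STEP_MINUTES = 5  # assumed sampling interval of the CPU history
STEPS_PER_WEEK = 7 * 24 * 60 // STEP_MINUTES  # 2016 samples


def forecast_load(history: Sequence[float], minutes_ahead: int) -> float:
    """Seasonal-naive forecast of CPU demand `minutes_ahead` from now.

    history[-1] is the most recent sample. The prediction for a future
    slot is the value observed at the same slot one week earlier.
    """
    steps_ahead = math.ceil(minutes_ahead / STEP_MINUTES)
    source = len(history) - 1 + steps_ahead - STEPS_PER_WEEK
    if not 0 <= source < len(history):
        raise ValueError("need at least one week of history")
    return history[source]


def vms_needed(load_vcpus: float, vcpus_per_vm: int, target_utilization: float) -> int:
    """Smallest VM count that keeps average utilization at or below the target."""
    return max(1, math.ceil(load_vcpus / (vcpus_per_vm * target_utilization)))


# Hypothetical history: a steady 10 vCPUs, plus a spike exactly one week
# before the slot that lies 5 minutes (one initialization period) from now.
history = [10.0] * (STEPS_PER_WEEK + 10)
history[10] = 40.0

init_period_minutes = 5
load = forecast_load(history, init_period_minutes)
print(vms_needed(load, vcpus_per_vm=4, target_utilization=0.5))
```

With 4 vCPUs per VM and a 50% target, each VM is budgeted 2 vCPUs of load, so the forecast spike of 40 vCPUs calls for 20 VMs. Because the forecast looks one initialization period ahead, those VMs would have time to boot before the spike arrives.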


The IoT Cloud Market

Cloud computing and the Internet of Things (IoT) have become so intertwined that it is hard to discuss one without the other, and with good reason: you really can’t have IoT without the cloud. The cloud, a grander idea that stands on its own, is nonetheless integral to the IoT platform’s success. The Internet of Things is a system of interrelated computing devices, mechanical and digital machines, objects, and other devices provided with unique identifiers (such as an IP address) and the ability to transfer data over a network without requiring human-to-human or human-to-computer interaction. Whereas the traditional internet consists of clients (primarily PCs, tablets, and smartphones), the Internet of Things could be cars, street signs, refrigerators, or watches. And whereas traditional internet input and interaction rely on humans, IoT is almost entirely automated. Because the bulk of IoT devices are not in traditional data centers and almost all are connected wirelessly, they rely on the cloud for connectivity. For example, connected cars that send up terabytes of telemetry aren’t always going to be near a data center to transmit their data, so they need cloud connectivity.

Quote for the day:

"Strong convictions precede great actions." -- James Freeman Clarke
