Quote for the day:

“Every adversity, every failure, every heartache carries with it the seed of an equal or greater benefit.” -- Napoleon Hill

Major Enterprise AI Assistants Can Be Abused for Data Theft, Manipulation

In the case of Copilot Studio agents that engage with the internet (over 3,000 instances have been found), the researchers showed how an agent could be hijacked to exfiltrate information available to it. Some organizations use Copilot Studio for customer service, and Zenity showed how it can be abused to obtain a company’s entire CRM. When Cursor is integrated with Jira MCP, an attacker can create malicious Jira tickets that instruct the AI agent to harvest credentials and send them to the attacker. This is especially dangerous for email systems that automatically open Jira tickets; Zenity found hundreds of such instances. In a demonstration targeting Salesforce’s Einstein, the attacker targets instances with case-to-case automations, of which hundreds have again been found. The threat actor can create malicious cases on the targeted Salesforce instance that hijack Einstein when it processes them. The researchers showed how an attacker could update the email addresses for all cases, effectively rerouting customer communication through a server they control. In a Gemini attack demo, the experts showed how prompt injection can be leveraged to make the gen-AI tool display incorrect information.
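The attacks above share one pattern: untrusted content (a Jira ticket, a Salesforce case, a web page) reaches an agent that can also call sensitive tools. A common mitigation is to record whether untrusted input has entered the agent's context and, once it has, to require human approval for high-impact actions. The Python sketch below is a minimal, hypothetical illustration of that idea. The tool names, the `AgentContext` class, `BULK_LIMIT`, and the policy sets are assumptions for illustration. They are not part of Copilot Studio, Cursor, Einstein, or any vendor API, and this is not presented as the defense Zenity proposed.

```python
from dataclasses import dataclass, field

# Hypothetical tool names; real agent platforms define their own.
SENSITIVE_TOOLS = {"send_email", "update_records", "read_credentials"}
# Hypothetical: max records a tainted context may touch without review.
BULK_LIMIT = 1


@dataclass
class AgentContext:
    """Tracks whether any untrusted text has entered the agent's context."""
    messages: list[str] = field(default_factory=list)
    tainted: bool = False

    def add_trusted(self, text: str) -> None:
        # Instructions from the operator or the signed-in user.
        self.messages.append(text)

    def add_untrusted(self, text: str) -> None:
        # Tickets, cases, or web pages may carry injected instructions,
        # so adding them taints the whole context.
        self.messages.append(text)
        self.tainted = True


def authorize(ctx: AgentContext, tool: str, record_count: int = 0) -> str:
    """Return 'allow' or 'needs_human_approval' for a proposed tool call."""
    if not ctx.tainted:
        return "allow"
    # Once untrusted text is present, sensitive or bulk actions need a human.
    if tool in SENSITIVE_TOOLS or record_count > BULK_LIMIT:
        return "needs_human_approval"
    return "allow"
```

For example, a context that has seen only the operator's instruction may call `update_records` freely. Once a ticket body is added with `add_untrusted`, a `send_email` call or a 10-record `tag_case` call returns `"needs_human_approval"`, while a read-only `summarize` is still allowed. Tainting the whole context is coarse. It trades some automation for the property that injected text alone cannot trigger one of the listed high-impact actions.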

Who’s Leading Whom? The Evolving Relationship Between Business and Data Teams

As the data boom matured, organizations realized that clear business questions weren’t enough. If we wanted analytics to drive value, we had to build stronger technical teams, including data scientists and machine learning engineers. And we realized something else: we had spent years telling business leaders they needed a working knowledge of data science. Now we had to tell data scientists they needed a working knowledge of the business. This shift in emphasis was necessary, but it didn’t go perfectly. We had told the data teams to make their work useful, usable, and used, and they took that mandate seriously. But in the absence of clear guidance and shared norms, they filled in the gap in ways that didn’t always move the business forward. ... The foundation of any effective business-data partnership is a shared understanding of what actually counts as evidence. Without it, teams risk offering solutions that don’t stand up to scrutiny, don’t translate into action, or don’t move the business forward. A shared burden of proof makes sure that everyone is working from the same assumptions about what’s convincing and credible. This shared commitment is the foundation that allows the organization to decide with clarity and confidence.

A new worst coder has entered the chat: vibe coding without code knowledge

A clear disconnect then stood out to me between the vibe coding of this app and the actual practiced work of coding. Because this app existed solely as an experiment for myself, the fact that it didn’t work so well and the code wasn’t great didn’t really matter. But vibe coding isn’t being touted as “a great use of AI if you’re just mucking about and don’t really care.” It’s supposed to be a tool for developer productivity, a bridge for nontechnical people into development, and someday a replacement for junior developers. That was the promise. And, sure, if I wanted to, I could probably take the feedback from my software engineer pals and plug it into Bolt. One of my friends recommended adding “descriptive class names” to help with the readability, and it took almost no time for Bolt to update the code. ... The mess of my code would be a problem in any of those situations. Even though I made something that worked, did it really? Had this been a real work project, a developer would have had to come in after the fact to clean up everything I had made, lest future developers be lost in the mayhem of my creation. This is called the “productivity tax,” the biggest frustration that developers have with AI tools, because they spit out code that is almost, but not quite, right.

From WAF to WAAP: The Evolution of Application Protection in the API Era

The most dangerous attacks often use perfectly valid API calls arranged in unexpected sequences or volumes. API attacks don't break the rules. Instead, they abuse legitimate functionality by understanding the business logic better than the developers who built it. Advanced attacks differ from traditional web threats. For example, an SQL injection attempt looks syntactically different from legitimate input, making it detectable through pattern matching. However, an API attack might consist of perfectly valid requests that individually pass all schema validation tests, with the malicious intent emerging only from their sequence, timing, or cross-endpoint correlation patterns. ... The strategic value of WAAP (web application and API protection) goes well beyond just keeping attackers out. It's becoming a key enabler for faster, more confident API development cycles. Think about how your API security works today: you build an endpoint, then security teams manually review it, continuous penetration testing breaks it, you fix it, and around and around you go. This approach inevitably creates friction between velocity and security. Through continuous visibility and protection, WAAP allows development teams to focus on building features rather than manually hardening each API endpoint. Hence, you can turn the traditional security bottleneck into a security enablement model.

Scrutinizing LLM Reasoning Models

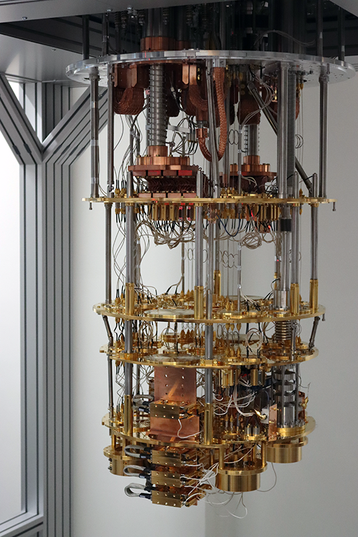

Assessing chain-of-thought (CoT) quality is an important step towards improving reasoning model outcomes. Other efforts attempt to grasp the root cause of reasoning hallucination. One theory suggests the problem starts with how reasoning models are trained. Among other training techniques, LLMs go through multiple rounds of reinforcement learning (RL), a form of machine learning that teaches the difference between desirable and undesirable behavior through a point-based reward system. During RL, LLMs learn to accumulate as many points as possible, with “good” behavior yielding positive points and “bad” behavior yielding negative points. While RL is also used on non-reasoning LLMs, a large amount of it seems to be necessary to incentivize LLMs to produce CoT, which means that reasoning models generally receive more of it. ... If optimizing for CoT length leads to confused reasoning or inaccurate answers, it might be better to incentivize models to produce shorter CoT. This is the intuition that inspired researchers at Wand AI to see what would happen if they used RL to encourage conciseness and directness rather than verbosity. Across multiple experiments conducted in early 2025, Wand AI’s team discovered a “natural correlation” between CoT brevity and answer accuracy, challenging the widely held notion that the additional time and compute required to create long CoT leads to better reasoning outcomes.
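To make the point-based reward described above concrete, one simple way to encourage shorter CoT is to subtract a length penalty from a correctness reward. The Python sketch below is an illustrative assumption, not Wand AI’s actual reward function, which the excerpt does not describe. `length_penalty` and `max_tokens` are hypothetical parameters, and token counts are approximated by whitespace splitting rather than a real tokenizer.

```python
def shaped_reward(cot: str, answer: str, reference: str,
                  length_penalty: float = 0.001, max_tokens: int = 1024) -> float:
    """Correctness reward minus a penalty proportional to CoT length.

    A correct answer earns +1.0 and an incorrect one earns -1.0. Then
    length_penalty is subtracted per CoT token (approximated here by
    whitespace-separated words), capped at max_tokens so the penalty
    stays bounded.
    """
    correct = answer.strip() == reference.strip()
    base = 1.0 if correct else -1.0
    n_tokens = min(len(cot.split()), max_tokens)
    return base - length_penalty * n_tokens


# Same correct answer, different reasoning lengths.
short = shaped_reward("7 * 6 = 42", "42", "42")
verbose = shaped_reward("Let me think step by step. " * 50 + "7 * 6 = 42", "42", "42")
```

Here the five-token CoT scores about 0.995 and the 305-token CoT about 0.695, so RL would favor the concise path to the same answer. Keeping `length_penalty * max_tokens` below 2 guarantees that any correct answer still outscores any incorrect one. This way the model is never rewarded for trading accuracy for brevity.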

4 regions you didn't know already had age verification laws – and how they're enforced

Australia’s 2021 Online Safety Act was less focused on restricting access to adult content than on tackling cyberbullying and the online abuse of children, especially on social media platforms. The act introduced a legal framework that allows people to request the removal of hateful and abusive content, ... Chinese law has required online service providers to implement a real-name registration system for over a decade. In 2012, the Decision on Strengthening Network Information Protection was passed, before being codified into law in 2016 as the Cybersecurity Law. The legislation requires online service providers to collect users’ real names, ID numbers, and other personal information. ... As with the other laws we’ve looked at, the US Children’s Online Privacy Protection Act (COPPA) has its fair share of critics and opponents, and experts have criticized it as both ineffective and unconstitutional. Critics claim that it encourages users to lie about their age to access content and allows websites to sidestep the need for parental consent. ... In 2025, the European Commission took the first steps towards an EU-wide strategy for age verification on websites when it released a prototype app for a potential age verification solution called a mini wallet, which is designed to be interoperable with the EU Digital Identity Wallet scheme.

The AI-enabled company of the future will need a whole new org chart

Let’s say you’ve designed a multi-agent team of AI products. Now you need to integrate them into your company by aligning them with your processes, values and policies. Of course, businesses onboard people all the time – but not usually 50 different roles at once. Clearly, the sheer scale of agentic AI presents its own challenges, and businesses will need to rely on a really tight onboarding process. The agent onboarding lead creates the AI equivalent of an employee handbook: spelling out what agents are responsible for, how they escalate decisions, and where they must defer to humans. They’ll define trust thresholds, safe deployment criteria, and sandbox environments for gradual rollout. ... Organisational change rarely fails on capability – it fails on culture. The AI Culture & Collaboration Officer protects the human heartbeat of the company through a time of radical transition. As agents take on more responsibilities, human employees risk losing a sense of purpose, visibility, or control. The culture officer will continually check how everyone feels about the transition. This role ensures that collaboration rituals evolve, morale stays intact, and trust is continually monitored, not just in the agents but in the organisation’s direction of travel. It’s a future-facing HR function with teeth.
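The onboarding lead’s “employee handbook” for agents could be captured as machine-readable policy. The Python sketch below is a hypothetical illustration only. The `AgentHandbook` fields, the example billing agent, and the 0.8 trust threshold are assumptions, not a format the article prescribes. It routes each proposed action to one of three outcomes: act autonomously, escalate, or defer to a human.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentHandbook:
    """Hypothetical per-agent policy: responsibilities, escalation, human-only actions."""
    agent: str
    responsibilities: frozenset[str]  # actions the agent may take on its own
    human_only: frozenset[str]        # actions that must always go to a human
    trust_threshold: float            # minimum confidence for autonomous action
    escalate_to: str                  # team that receives escalations


def route(handbook: AgentHandbook, action: str, confidence: float) -> str:
    """Decide whether the agent acts, escalates, or defers to a human."""
    if action in handbook.human_only:
        return "defer_to_human"
    # Anything outside the agent's remit, or below the trust threshold, escalates.
    if action not in handbook.responsibilities or confidence < handbook.trust_threshold:
        return f"escalate:{handbook.escalate_to}"
    return "act"


billing = AgentHandbook(
    agent="billing-agent",
    responsibilities=frozenset({"issue_invoice", "send_payment_reminder"}),
    human_only=frozenset({"write_off_debt"}),
    trust_threshold=0.8,
    escalate_to="finance-team",
)
```

With this policy, `route(billing, "issue_invoice", 0.93)` acts and `route(billing, "issue_invoice", 0.55)` escalates to the finance team. `route(billing, "write_off_debt", 0.99)` always defers to a human, however confident the agent is. Making the dataclass frozen keeps a deployed handbook from being changed at runtime by the agent it governs.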

The Myth of Legacy Programming Languages: Age Doesn't Define Value

Instead of trying to define legacy languages based on one or two subjective criteria, a better approach is to consider the wide range of factors that may make a language count as legacy or not. ... Languages may be considered legacy when no one is actively developing them any longer, meaning the language standards stop receiving updates, often along with complementary resources like libraries and compilers. This seems reasonable because when a language ceases to be actively maintained, it may stop working with modern hardware platforms. ... Distinguishing between legacy and modern languages based on their popularity may also seem reasonable. After all, if few coders are still using a language, doesn't that make it legacy? Maybe, but there are a couple of complications to consider. One is that measuring the popularity of programming languages with high accuracy is impossible, so just because one authority deems a language to be unpopular doesn't necessarily mean developers hate it. The other is that when a language becomes unpopular, it tends to mean that developers no longer prefer it for writing new applications. ... Programming languages sometimes end up in the "legacy" bin when they are associated with other forms of legacy technology, or when they lack associations with more "modern" technologies.

From Data Overload to Actionable Insights: Scaling Viewership Analytics with Semantic Intelligence

Semantic intelligence allows users to find reliable and accurate answers, irrespective of the terminology used in a query. They can interact freely with data and discover new insights by navigating massive databases without the specialized IT involvement this previously required, which in turn reduces the workload of already overburdened IT teams. At its core, semantic intelligence lays the foundation for true self-serve analytics, allowing departments across an organization to confidently access information from a single source of truth. ... A semantic layer in this architecture lets you query data in a way that feels natural and returns relevant, precise results. It bridges the gap between complex data structures and user-friendly access, so users can ask questions without needing to understand the underlying data intricacies. Standardized definitions and context across the sources streamline analytics and accelerate insights in any BI tool of choice. ... One of the core functions of semantic intelligence is to standardize definitions and provide a single source of truth. This improves overall data governance with role-based access controls and robust security at all levels. In addition, row- and column-level security at both user and group levels can restrict specific users’ access to specific rows and columns.
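To illustrate how a semantic layer maps varied business vocabulary onto one governed metric definition and then applies row-level security, here is a minimal Python sketch using the standard-library `sqlite3` module. The `viewership` table, the `watch_hours` metric, its synonyms, and the group-to-region policy are all hypothetical. Production semantic layers add joins, caching, column-level rules, and much more.

```python
import sqlite3

# Hypothetical metric catalog: one canonical definition, many business synonyms.
METRICS = {
    "watch_hours": {
        "sql": "SUM(minutes_watched) / 60.0",
        "synonyms": {"watch hours", "viewing time", "hours watched"},
    },
}

# Hypothetical row-level security: the regions each group may see.
ROW_POLICY = {
    "emea_analysts": ("EMEA",),
    "global_analysts": ("EMEA", "APAC", "NA"),
}


def resolve_metric(term: str) -> str:
    """Map whatever term the user typed to a canonical metric name."""
    t = term.strip().lower()
    for name, spec in METRICS.items():
        if t == name or t in spec["synonyms"]:
            return name
    raise KeyError(f"unknown metric: {term!r}")


def query_metric(conn: sqlite3.Connection, term: str, group: str) -> float:
    """Compute a metric, restricted to the rows the user's group may access."""
    expr = METRICS[resolve_metric(term)]["sql"]  # trusted, from the catalog
    regions = ROW_POLICY[group]
    placeholders = ", ".join("?" for _ in regions)
    sql = f"SELECT {expr} FROM viewership WHERE region IN ({placeholders})"
    (value,) = conn.execute(sql, regions).fetchone()
    return value or 0.0  # SUM over zero rows returns NULL


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE viewership (region TEXT, minutes_watched INTEGER)")
conn.executemany("INSERT INTO viewership VALUES (?, ?)",
                 [("EMEA", 120), ("APAC", 60), ("NA", 180)])
```

With this sample data, an EMEA analyst asking for “Viewing time” and a global analyst asking for “hours watched” both hit the same `watch_hours` definition. The EMEA analyst sees only EMEA rows (2.0 hours), while the global analyst sees all regions (6.0 hours). Only the catalog’s own SQL is interpolated into the query; user-controlled values travel as bound parameters.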

Why VAPT is now essential for small & medium business security

One misconception, often held by smaller companies, is that they are less likely to be targeted. Industry experts disagree. "You might think, 'Well, we're a small company. Who'd want to hack us?' But here's the hard truth: Cybercriminals love easy targets, and small to medium businesses often have the weakest defences," states a representative from Borderless CS. VAPT combines two different strategies to identify vulnerabilities and potential entry points before malicious actors do. A Vulnerability Assessment scans servers, software, and applications for known problems in a manner similar to a security walkthrough of a physical building. Penetration Testing (often shortened to pen testing) simulates real attacks, enabling businesses to understand how a determined attacker might breach their systems. ... Borderless CS maintains that VAPT is applicable across sectors. "Retail businesses store customer data and payment info. Healthcare providers hold sensitive patient information. Service companies often rely on cloud tools and email systems that are vulnerable. Even a small eCommerce store can be a jackpot for the wrong person. Cyber attackers don't discriminate. In fact, they often prefer smaller businesses because they assume you haven't taken strong security measures. Let's not give them that satisfaction."
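The "known problems" half of VAPT comes down to comparing what is installed against published advisories. The Python sketch below shows that core comparison under stated assumptions. The package names, versions, and advisory IDs are made up for illustration; real scanners draw on feeds such as the National Vulnerability Database. Versions are assumed to be simple dotted integers. Penetration testing, the other half, is not something a lookup like this can replace.

```python
from typing import NamedTuple


class Advisory(NamedTuple):
    package: str
    fixed_in: str     # first version that is no longer vulnerable
    advisory_id: str  # hypothetical identifier


def parse_version(v: str) -> tuple[int, ...]:
    """Turn '2.4.49' into (2, 4, 49) so versions compare numerically."""
    return tuple(int(part) for part in v.split("."))


def find_vulnerable(inventory: dict[str, str], advisories: list[Advisory]) -> list[str]:
    """Report each installed package whose version predates an advisory's fix."""
    findings = []
    for adv in advisories:
        installed = inventory.get(adv.package)
        if installed and parse_version(installed) < parse_version(adv.fixed_in):
            findings.append(
                f"{adv.package} {installed}: {adv.advisory_id} (fixed in {adv.fixed_in})"
            )
    return findings


# Hypothetical inventory of one small business's servers.
inventory = {"example-webserver": "2.4.49", "example-mailer": "3.1.0"}
advisories = [
    Advisory("example-webserver", "2.4.51", "ADV-0001"),
    Advisory("example-mailer", "3.0.2", "ADV-0002"),
]
```

Here only the web server is flagged, because 2.4.49 predates the 2.4.51 fix, while the mailer at 3.1.0 is already past its 3.0.2 fix. Comparing parsed integer tuples rather than raw strings matters: as strings, "2.4.100" would sort before "2.4.51".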
