Quantum entanglement-as-a-service: "The key technology" for unbreakable networks

Like classical networks, quantum networks require hardware-independent control plane software to manage data exchange between layers, allocate resources and control synchronization, the company said. "We want to be the Switzerland of quantum networking," said Jim Ricotta, Aliro CEO. Networked quantum computers are needed to run quantum applications such as physics-based secure communications and distributed quantum computing. "A unified control plane is one of several foundational technologies that Aliro is focused on as the first networking company of the quantum era," Ricotta said. "Receiving Air Force contracts to advance this core technology validates our approach and helps accelerate the time to market for this and other technologies needed to realize the potential of quantum communication."

Entanglement is a physical phenomenon that involves tiny things such as individual photons or electrons, Ricotta said. When they are entangled, "then they become highly correlated" and appear together. It doesn't matter if they are hundreds of miles apart, he said.
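Ricotta's description of entangled particles as "highly correlated" can be illustrated with a small classical simulation. The sketch below is an illustration under stated assumptions, not anything Aliro has published: it samples joint measurements of the two-qubit Bell state |Φ+⟩ = (|00⟩ + |11⟩)/√2 in the computational basis using the Born rule (probability equals squared amplitude). Each qubit's own result is a coin flip, yet the two results always agree. The names `BELL_PHI_PLUS`, `measure_pair` and `agreement_rate` are hypothetical, and a classical sampler like this reproduces only same-basis statistics; it does not model measurements in other bases or Bell-inequality violations.

```python
import math
import random

# Amplitudes of the Bell state |Phi+> = (|00> + |11>) / sqrt(2), keyed by the
# two-bit outcome "ab" (bit for qubit A, bit for qubit B). Hypothetical names.
BELL_PHI_PLUS = {
    "00": 1 / math.sqrt(2),
    "01": 0.0,
    "10": 0.0,
    "11": 1 / math.sqrt(2),
}


def measure_pair(state, rng):
    """Sample one joint computational-basis measurement via the Born rule."""
    outcomes = list(state)
    weights = [abs(amp) ** 2 for amp in state.values()]
    return rng.choices(outcomes, weights=weights, k=1)[0]


def agreement_rate(state, shots=10_000, seed=0):
    """Fraction of shots in which qubit A and qubit B report the same bit."""
    rng = random.Random(seed)
    same = 0
    for _ in range(shots):
        a, b = measure_pair(state, rng)
        same += a == b
    return same / shots


if __name__ == "__main__":
    print(agreement_rate(BELL_PHI_PLUS))  # 1.0: the outcomes always match
```

For contrast, an unentangled product state such as equal amplitudes on all four outcomes agrees only about half the time.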

Design for Your Strengths

Strengths and weaknesses are often mirrors of each other. My aerobic weakness had, as its inverse, a superstrength of anaerobic power. Indeed, these two attributes often go hand in hand. Finally, I had figured out how to put this to use. After the Lillehammer Olympics, I dropped out of the training camp. But I was more dedicated than ever to skating. I moved to Milwaukee, and without the financial or logistical support of the Olympic Committee, began a regimen of work, business school, and self-guided athletic training. I woke up every day at 6 a.m. and went to the rink. There I put on my pads and blocks and skated from 7 until 9:30. Then I changed into a suit for my part-time job as an engineer. At 3 p.m., I left work in Milwaukee and drove to the Kellogg Business School at Northwestern, a two-hour drive. I had class from 6 to 9 p.m., usually arrived home at 11, and lifted weights until midnight. I did that every day for two and a half years. Many people assume that being an Olympic athlete requires a lot of discipline. But in my experience, the discipline is only physical.

‘Next Normal’ Approaching: Advice From Three Business Leaders On Navigating The Road Ahead

With some analysts predicting a "turnover tsunami" on the horizon, talent strategy has taken on a new sense of urgency. Lindsey Slaby, Founding Principal of marketing strategy consultancy Sunday Dinner, focuses on building stronger marketing organizations. She shares: Organizations are accelerating growth by attracting new talent muscle and re-skilling their existing teams. A rigorous approach to talent has never been as important as it is right now.

The relationship between employer and employee has undergone significant recalibration over the last year, with the long-term impact of the nation’s largest work-from-home experiment yet to come into clear view. But much like in the Before Times, perhaps the greatest indicator of how an organization will fare on the talent front comes down to how it invests in its people, and specifically in their future potential. Slaby believes there is a core ingredient to any winning talent strategy: successful organizations prioritize learning and development. Training to anticipate the pace of change is essential. It is imperative that marketers practice ‘strategy by doing’ and understand the underlying technology that fuels their go-to-market approach.

The Beauty of Edge Computing

The volume and velocity of data generated at the edge are primary factors that will impact how developers allocate resources at the edge and in the cloud. “A major impact I see is how enterprises will manage their cloud storage because it’s impractical to save the large amounts of data that the Edge creates directly to the cloud,” says Will Kelly, technical marketing manager for a container security startup (@willkelly). “Edge computing is going to shake up cloud financial models, so let’s hope enterprises have access to a cloud economist or solution architect who can tackle that challenge for them.” With billions of industrial and consumer IoT devices being deployed, managing the data is an essential consideration in any edge-to-cloud strategy. “Advanced consumer applications such as streaming multiplayer games, digital assistants and autonomous vehicle networks demand low-latency data, so it is important to consider the tremendous efficiencies achieved by keeping data physically close to where it is consumed,” says Scott Schober, President/CEO of Berkeley Varitronics Systems, Inc. (@ScottBVS).
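One common way to avoid shipping every raw reading to the cloud is to aggregate at the edge and upload only compact summaries. The sketch below assumes a simple setup that neither Kelly nor Schober describes: readings arrive as `(epoch_seconds, value)` pairs, and each fixed time window is reduced to a count, minimum, maximum and mean before upload. `WindowSummary`, `summarize` and the 60-second default window are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class WindowSummary:
    """Compact record uploaded to the cloud instead of every raw reading."""
    sensor_id: str
    window_start: int  # epoch seconds at the start of the window
    count: int
    minimum: float
    maximum: float
    average: float


def summarize(sensor_id, readings, window_seconds=60):
    """Group (timestamp, value) readings into fixed windows and summarize each."""
    windows = {}
    for ts, value in readings:
        start = ts - ts % window_seconds  # align to the window boundary
        windows.setdefault(start, []).append(value)
    return [
        WindowSummary(sensor_id, start, len(vals), min(vals), max(vals), mean(vals))
        for start, vals in sorted(windows.items())
    ]


if __name__ == "__main__":
    # Hypothetical sensor: one reading per second for five minutes.
    raw = [(t, 20.0 + (t % 7) * 0.1) for t in range(300)]
    summaries = summarize("sensor-42", raw)
    print(len(raw), "raw readings ->", len(summaries), "summaries")
```

The window length is the trade-off knob: longer windows save more storage and bandwidth but discard more detail, so a latency-sensitive application would keep the raw stream local and send only what the cloud actually needs.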

Facebook Makes a Big Leap to MySQL 8

The company entirely skipped upgrading to the MySQL 5.7 release, the major release between 5.6 and 8.0. At the time, Facebook was building its custom storage engine for MySQL, called MyRocks, and didn’t want to interrupt the implementation process, the engineers write. MyRocks is an adaptation of RocksDB for MySQL; RocksDB is a storage engine optimized for fast write performance, which Instagram also used to optimize Cassandra. Facebook itself was using MyRocks to power its “user database service tier,” but needed some features in MySQL 8.0 to fully support such optimizations. Skipping over version 5.7, however, complicated the upgrade process. “Skipping a major version like 5.7 introduced problems, which our migration needed to solve,” the engineers admitted in the blog post. Servers could not simply be upgraded in place. Instead, the team had to use a logical dump to capture the data and rebuild the database servers from scratch, work that took several days in some instances. API changes from 5.6 to 8.0 also had to be rooted out, and supporting two major versions within a single replica set is just plain tricky.
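The decision the engineers faced can be sketched as a small rule: MySQL supports in-place upgrades only between adjacent release series (for example 5.6 to 5.7), so a jump that skips a series falls back to a logical dump and rebuild. The function below is a simplified, hypothetical model of that rule, not Facebook's migration tooling; `RELEASE_SERIES` and `upgrade_strategy` are illustrative names, and real upgrades also depend on specific GA point releases.

```python
# Ordered MySQL release series. This sketch assumes in-place upgrades are
# only supported between adjacent series, e.g. 5.6 -> 5.7 or 5.7 -> 8.0.
RELEASE_SERIES = ["5.6", "5.7", "8.0"]


def upgrade_strategy(current: str, target: str) -> str:
    """Pick how a server moves from one release series to a newer one."""
    src = RELEASE_SERIES.index(current)
    dst = RELEASE_SERIES.index(target)
    if dst <= src:
        raise ValueError(f"{target} is not newer than {current}")
    if dst - src == 1:
        return "in-place"
    # Skipping one or more series: capture the data with a logical dump,
    # then rebuild the server on the target version and reload the data.
    return "logical-dump-and-rebuild"


if __name__ == "__main__":
    print(upgrade_strategy("5.6", "5.7"))
    print(upgrade_strategy("5.6", "8.0"))
```

The alternative, stepping through 5.7 in place, would have meant two migrations per server; the dump-and-rebuild path trades that for a single, slower move per server.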

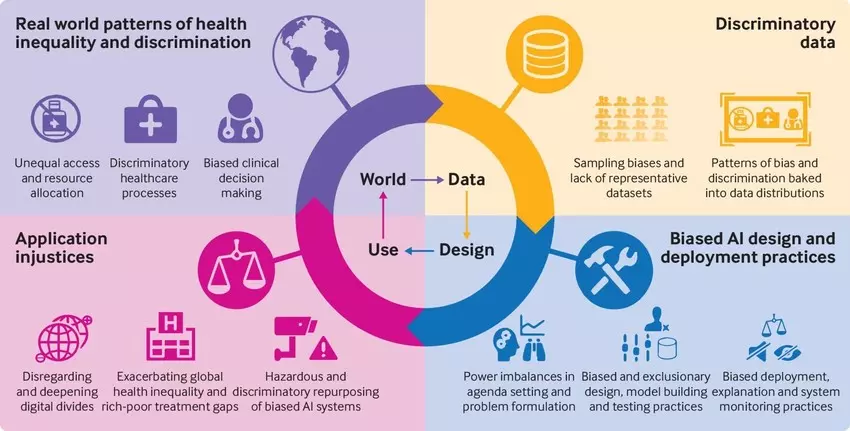

Research shows AI is often biased. Here's how to make algorithms work for all of us

Inclusive design emphasizes inclusion in the design process: the AI product should be designed with consideration for diverse groups, such as those defined by gender, race, class, and culture. Foreseeability is about predicting the impact the AI system will have, both right now and over time. Recent research published in the Journal of the American Medical Association (JAMA) reviewed more than 70 academic publications that compared the diagnostic prowess of doctors against their digital doppelgangers across several areas of clinical medicine. Much of the data used to train the algorithms came from only three states: Massachusetts, California and New York. Will the algorithm generalize well to a wider population? Many researchers are worried about algorithms for skin-cancer detection. Most of them do not perform well at detecting skin cancer on darker skin because they were trained primarily on light-skinned individuals. The developers of these skin-cancer detection models didn't apply principles of inclusive design in the development of their models.
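One practical check for the generalization question above is disaggregated evaluation: instead of reporting a single overall accuracy, compute the metric separately for each subgroup and look at the gap. The sketch below is a minimal version of that idea; `accuracy_by_group`, `worst_gap` and the skin-tone group labels are hypothetical, and the toy predictions are made up for illustration, not results from any real model.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Accuracy computed separately per subgroup.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, prediction in records:
        total[group] += 1
        correct[group] += truth == prediction
    return {g: correct[g] / total[g] for g in total}


def worst_gap(per_group):
    """Largest accuracy difference between any two subgroups."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Made-up toy labels (1 = lesion is malignant), not real results.
    results = [
        ("lighter", 1, 1), ("lighter", 0, 0), ("lighter", 1, 1), ("lighter", 0, 0),
        ("darker", 1, 0), ("darker", 0, 0), ("darker", 1, 1), ("darker", 1, 0),
    ]
    scores = accuracy_by_group(results)
    print(scores)             # {'lighter': 1.0, 'darker': 0.5}
    print(worst_gap(scores))  # 0.5
```

Here the overall accuracy would be 75%, which hides the fact that every missed cancer falls in the under-represented group.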

Leverage the Cloud to Help Consolidate On-Prem Systems

The recommended approach is to "create or recreate" a representation of the final target system in the cloud, but not to re-engineer any components into cloud-native equivalents. The cloud environment is built with the same number of LPARs, the same memory/disk/CPU allocations, the same file system structures, and the exact same IP addresses, hostnames, and network subnets, so that it represents, as closely as possible, a "clone" of the eventual system of record that will exist on-prem. The benefit of this approach is that you can apply "cloud flexibility" to what was historically a "cloud stubborn" system. Fast cloning, ephemeral longevity, software-defined networking, and API automation can all be applied to the temporary stand-in running in the cloud. As design principles are finalized based on research performed on the cloud version of the system, those findings can be applied to the on-prem final buildout. To jump-start the cloud build-out process, it is possible to reuse existing on-prem assets as the foundation for components built in the cloud. LPARs in the cloud can be based on existing mksysb images already created on-prem.
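Because the value of the cloud stand-in depends on it matching the planned on-prem system, a parity check is worth automating. The sketch below assumes each LPAR can be described by a small record (hostname, IP address, subnet, CPU, memory, disk, file systems) and reports every drift between the on-prem plan and the cloud clone. `LparSpec` and `parity_report` are hypothetical names; a real inventory would come from whatever tooling manages the LPARs.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class LparSpec:
    """Hypothetical description of one LPAR in the target system of record."""
    hostname: str
    ip_address: str
    subnet: str
    cpus: float
    memory_gb: int
    disk_gb: int
    filesystems: tuple  # mount points, e.g. ("/", "/data")


def parity_report(on_prem, cloud):
    """List every difference between the on-prem plan and the cloud stand-in."""
    problems = []
    prem_by_host = {lpar.hostname: lpar for lpar in on_prem}
    cloud_by_host = {lpar.hostname: lpar for lpar in cloud}
    for host in sorted(prem_by_host.keys() - cloud_by_host.keys()):
        problems.append(f"{host}: missing in cloud")
    for host in sorted(cloud_by_host.keys() - prem_by_host.keys()):
        problems.append(f"{host}: not in on-prem plan")
    for host in sorted(prem_by_host.keys() & cloud_by_host.keys()):
        want = asdict(prem_by_host[host])
        got = asdict(cloud_by_host[host])
        for field, value in want.items():
            if got[field] != value:
                problems.append(f"{host}: {field} is {got[field]!r}, expected {value!r}")
    return problems


if __name__ == "__main__":
    db = LparSpec("db01", "10.0.1.10", "10.0.1.0/24", 4.0, 64, 500, ("/", "/data"))
    clone = replace(db, memory_gb=32)  # a cloud clone that drifted
    print(parity_report([db], [clone]))
```

An empty report means the stand-in is a faithful clone, so findings from it are more likely to carry over to the on-prem buildout.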

Scaling API management for long-term growth

To manage the complexity of the API ecosystem, organisations are embracing API management tools to identify, control, secure and monitor API use in their existing applications and services. Having visibility and control of API consumption provides a solid foundation for expanding API provision, discovery, adoption and monetisation. Many organisations start with an API management approach developed in-house. However, as their API management strategies mature, they often find that the increasing complexity of maintaining and monitoring API usage, and the components of the API management solution itself, becomes a drain on technical resources and a source of technical debt. A common challenge for API management approaches is becoming a victim of one’s own success. For instance, a company that deploys an API management solution for a region or department may quickly get requests for access from other teams seeking to benefit from the value delivered, such as API discoverability and higher service reliability. While this demand should be seen as proof of a great approach to digitalisation, it adds challenges and raises questions, for example around capacity, access control, administration rights and governance.
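Two of those questions, access control and capacity, are often handled together at the gateway: each team gets an explicit grant per API, and each grant carries its own rate limit. The sketch below assumes a token-bucket limiter (a common technique, not something the article prescribes) and a hypothetical `Gateway` that returns HTTP-style status codes: 403 when a team has no grant, 429 when it exceeds its quota. The clock is injectable so the behaviour is easy to test.

```python
import time


class TokenBucket:
    """Allows short bursts up to `burst`, refilling at `per_second` tokens/s."""

    def __init__(self, burst, per_second, now):
        self.capacity = float(burst)
        self.rate = float(per_second)
        self.tokens = float(burst)
        self.updated = now

    def take(self, now):
        # Refill for the time elapsed since the last request, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Hypothetical gateway: per-(team, api) grants, each with its own quota."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._buckets = {}

    def grant(self, team, api, burst, per_second):
        self._buckets[(team, api)] = TokenBucket(burst, per_second, self._clock())

    def handle(self, team, api):
        bucket = self._buckets.get((team, api))
        if bucket is None:
            return 403  # no grant: access control
        return 200 if bucket.take(self._clock()) else 429  # capacity


if __name__ == "__main__":
    fake_time = [0.0]
    gw = Gateway(clock=lambda: fake_time[0])
    gw.grant("payments", "/orders", burst=2, per_second=1)
    print([gw.handle("payments", "/orders") for _ in range(3)])  # [200, 200, 429]
    print(gw.handle("marketing", "/orders"))  # 403
    fake_time[0] = 1.0
    print(gw.handle("payments", "/orders"))  # 200
```

Keeping grants per team, rather than one shared limit, means a newly onboarded department cannot exhaust capacity for the team that adopted the platform first.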

Is Blockchain the Ultimate Cybersecurity Solution for My Applications?

Blockchain can provide a strong and effective solution for securing networked ledgers. However, it does not guarantee the security of individual participants or eliminate the need to follow other cybersecurity best practices. A blockchain application depends on external data or other at-risk resources; thus, it cannot be a panacea. The blockchain implementation code and the environments in which the blockchain technology runs must be checked for cyber vulnerabilities. For a secured, networked transaction ledger, blockchain technology provides stronger transactional security than traditional, centralized computing services. For example, say I use distributed ledger technology (DLT), an intrinsic blockchain feature, while creating my blockchain-based application. DLT increases cyberresiliency because it creates a situation where there is no single point of failure. In a DLT, an attack on one or a small number of participants does not affect other nodes. Thus, DLT helps maintain transparency and availability and allows transactions to continue. Another advantage of DLT is that endpoint vulnerabilities are addressed.
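The resilience argument rests on two properties: each block commits to the hash of the previous one, so tampering is detectable, and many nodes hold copies, so one compromised copy can be outvoted. The toy sketch below shows both under heavy simplifying assumptions: there is no networking, and a simple majority vote over valid replicas stands in for a real consensus protocol. All names and the transaction strings are hypothetical. It also does nothing for endpoint security, consistent with the caution above.

```python
import copy
import hashlib
import json
from collections import Counter

GENESIS_PREV = "0" * 64


def block_hash(index, prev_hash, payload):
    body = json.dumps({"index": index, "prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append(chain, payload):
    """Add a block that commits to the hash of the block before it."""
    prev = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append({"index": index, "prev": prev, "payload": payload,
                  "hash": block_hash(index, prev, payload)})


def is_valid(chain):
    """Recompute every hash; any edited block breaks the chain."""
    prev = GENESIS_PREV
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev:
            return False
        if block["hash"] != block_hash(i, prev, block["payload"]):
            return False
        prev = block["hash"]
    return True


def consensus_head(replicas):
    """Head hash held by a strict majority of replicas that still validate."""
    heads = Counter(r[-1]["hash"] for r in replicas if r and is_valid(r))
    if not heads:
        return None
    head, votes = heads.most_common(1)[0]
    return head if votes > len(replicas) // 2 else None


if __name__ == "__main__":
    ledger = []
    for tx in ["alice->bob:5", "bob->carol:2"]:
        append(ledger, tx)
    replicas = [copy.deepcopy(ledger) for _ in range(3)]
    replicas[0][0]["payload"] = "alice->mallory:500"  # attacker edits one node
    print(is_valid(replicas[0]))                          # False
    print(consensus_head(replicas) == ledger[-1]["hash"])  # True
```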

Why bigger isn’t always better in banking

Of course, there are outliers. One of them is Brown Brothers Harriman, a merchant/investment bank that traces its origins back some 200 years, and that is the subject of an engaging new book, Inside Money. Historian Zachary Karabell (disclosure: we were graduate school classmates in the last millennium) offers not just an intriguing family and personal history, but a lesson in how to balance risk and ambition against responsibility and longevity, and in why bigger isn’t always better. The firm’s survival is even more remarkable given that US financial history often reads as a string of booms, bubbles, busts, and bailouts. The Panic of 1837. The Panic of 1857. The Civil War. The Panic of 1907. The Great Depression. The Great Recession of 2008. In finance, leverage (i.e., debt) is the force that allows companies to lift more than they could under their own power. It’s also the force that can crush them when circumstances change. And Brown Brothers has thrived in part by avoiding excessive leverage. Today, the bank primarily “acts as a custodian for trillions of dollars of global assets,” Karabell writes. “Its culture revolves around service.”
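The "lift" and "crush" effects of leverage follow from simple arithmetic: equity is assets minus debt, so any change in asset value lands entirely on the equity slice. The sketch below uses illustrative numbers only (100 of assets, varying debt) and ignores interest, fees and taxes; it is not drawn from Karabell's book or from Brown Brothers' balance sheet. `return_on_equity` and `wipeout_drop` are hypothetical helper names.

```python
def return_on_equity(assets, debt, asset_return):
    """Fractional change in equity when total assets move by asset_return.

    Simplifying assumptions: debt is fixed; no interest, fees or taxes.
    """
    equity = assets - debt
    if equity <= 0:
        raise ValueError("equity must be positive")
    return assets * asset_return / equity


def wipeout_drop(assets, debt):
    """Fractional fall in asset value that erases all equity."""
    return (assets - debt) / assets


if __name__ == "__main__":
    for debt in (0, 50, 90):
        up = return_on_equity(100, debt, 0.05)
        down = return_on_equity(100, debt, -0.05)
        print(f"debt {debt:>2}: +5% assets -> {up:+.0%} equity, "
              f"-5% assets -> {down:+.0%} equity, "
              f"wiped out by a {wipeout_drop(100, debt):.0%} drop")
```

With 90 of debt on 100 of assets, a 5% move in asset value becomes a 50% move in equity, and a 10% fall wipes the equity out entirely; with no debt, the same 10% fall costs only 10%.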

Quote for the day:

"Leadership is absolutely about inspiring action, but it is also about guarding against mis-action." -- Simon Sinek
