Why Are Real IT Cyber Security Improvements So Hard to Achieve?

It’s easy to point fingers in various directions to try to explain why we have

done such a poor job of improving IT security over the years. Unfortunately,

most of the places at which blame is typically directed bear limited, if any,

responsibility for our lack of security. It’s hard to deny that software is

more complex today than it was 10 or 20 years ago. The cloud, distributed

infrastructure, microservices, containers and the like have led to software

environments that change faster and involve more moving pieces. It’s

reasonable to argue that this added complexity has made modern environments

more difficult to secure. There may be some truth to this. But, on the

flipside, you have to remember that the complexity brings new security

benefits, too. In theory, distributed architectures, microservices and other

modern models make it easier to isolate or segment workloads in ways that

should mitigate the impact of a breach. Thus, I think it’s simplistic to say

that the reason IT cyber security remains so poor is that software has grown

more complex, and that security strategies and tools have not kept pace. You

could argue just as plausibly that modern architectures should have improved

security.

Facebook is recycling heat from its data centers to warm up these homes

The tech giant stressed that the heat distribution system it has developed

uses exclusively renewable energy. The data center is entirely supplied by

wind power, and Fjernvarme Fyn's facility only uses pumps and coils to

transfer the heat. As a result, the project is expected to reduce Odense's

demand for coal by up to 25%. Although Facebook is keen to use the heat

recovery system in other locations, the company didn't reveal any plans to

export the technology just yet. "Our ability to do heat recovery depends on a

number of factors, so we will evaluate them first," said Edelman. For example,

the proximity of the data center to the community it can provide heat for will

be a key criterion to consider. Improving data centers' green credentials

has been a priority for technology companies of late. Google recently

showcased a new tool that can match the timing of some compute tasks in data

centers to the availability of lower-carbon energy. The platform can

shift non-urgent workloads to times of the day when wind or solar sources of

energy are more plentiful. The search giant is aiming for "24x7 carbon-free

energy" in all of its data centers, which means constantly matching facilities

with sources of carbon-free power.
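
The workload-shifting idea can be sketched in a few lines. This is only an illustration, not Google's platform: the function name `greenest_start_hour` and the hourly forecast values are hypothetical, and the sketch assumes a non-urgent job can start at any hour covered by the forecast.

```python
from typing import Sequence


def greenest_start_hour(carbon_intensity: Sequence[float], duration_hours: int) -> int:
    """Return the start hour whose window has the lowest total carbon intensity."""
    if not 1 <= duration_hours <= len(carbon_intensity):
        raise ValueError("duration must fit inside the forecast")
    last_start = len(carbon_intensity) - duration_hours
    # Compare every feasible window and keep the one with the smallest sum.
    return min(
        range(last_start + 1),
        key=lambda start: sum(carbon_intensity[start:start + duration_hours]),
    )


# Hypothetical 24-hour forecast in gCO2/kWh; midday values are low to mimic solar output.
forecast = [450, 440, 430, 420, 410, 400, 380, 340, 300, 250, 200, 160,
            150, 170, 210, 260, 320, 380, 420, 450, 460, 460, 455, 450]

print(greenest_start_hour(forecast, 3))  # prints 11
```

A real scheduler would also have to respect deadlines and capacity limits, which this sketch leaves out.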

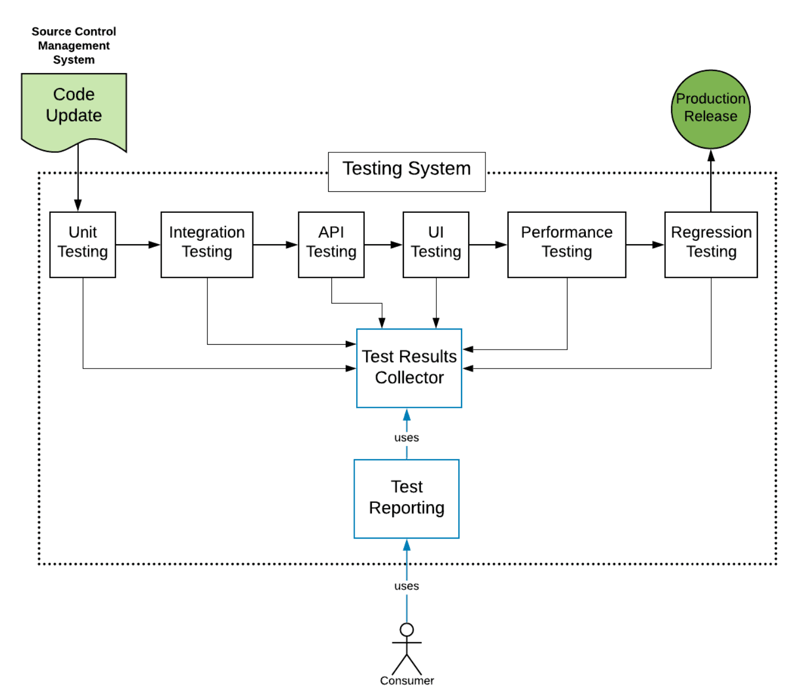

Understanding When to Use a Test Tool vs. a Test System

A system is a group of parts that interact in concert to form a unified whole.

A system has an identifiable purpose. For example, the purpose of a school

system is to educate students. The purpose of a manufacturing system is to produce

one or many end products. In turn, the purpose of a testing system is to

ensure that features and functions within the scope of the software's entire

domain operate to specified expectations. Typically a testing system is made

of parts that test specific aspects of the software under consideration.

However, unlike a testing tool, which is limited in scope, a testing system

encompasses all the testing that takes place within the SDLC. Thus a

testing system needs to support all aspects of software testing throughout the

SDLC in terms of execution, data collection, and reporting. First and

foremost, a testing system needs to be able to control testing workflows. This

means that the system can execute tests according to a set of predefined

events, for example when new code is committed to a source control

repository, or when a new or updated component is ready to be added to an

existing application.
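
As a rough illustration of event-driven test workflows, the sketch below maps events to the test suites that should run when they occur. The class `WorkflowController`, the event names and the two toy suites are all hypothetical; a real testing system would hook into its source control and build tooling instead.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# A "suite" here is any function that returns True when its tests pass.
Suite = Callable[[], bool]


class WorkflowController:
    """Runs the registered suites whenever a predefined event occurs."""

    def __init__(self) -> None:
        self._suites: Dict[str, List[Suite]] = defaultdict(list)

    def on(self, event: str, suite: Suite) -> None:
        """Register a suite to run when the given event is received."""
        self._suites[event].append(suite)

    def handle(self, event: str) -> Dict[str, bool]:
        """Run every suite registered for the event and collect the results."""
        return {suite.__name__: suite() for suite in self._suites.get(event, [])}


def unit_tests() -> bool:
    return 1 + 1 == 2  # stand-in for a real unit test run


def integration_tests() -> bool:
    return "api".upper() == "API"  # stand-in for a real integration test run


workflow = WorkflowController()
workflow.on("code_committed", unit_tests)
workflow.on("component_ready", unit_tests)
workflow.on("component_ready", integration_tests)

print(workflow.handle("code_committed"))
```

Collecting the results per suite is what would feed the data collection and reporting side of such a system.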

Wi-Fi 6E: When it’s coming and what it’s good for

There’s so much confusion around all the 666 numbers, it’ll scare you to

death. You’ve got Wi-Fi 6, Wi-Fi 6E – and Wi-Fi 6 still has additional

enhancements coming after that, with multi-user multiple input, multiple

output (multi-user MIMO) functionalities. Then there’s the 6GHz spectrum, but

that’s not where Wi-Fi 6 gets its name from: It’s the sixth generation of

Wi-Fi. On top of all that, we are just getting a handle on 5G and they're already

talking about 6G – seriously, look it up – it's going to get even more

confusing. ... The last time we got a boost in UNII-2 and UNII-2 Extended was

15 years ago and smartphones hadn’t even taken off yet. Now being able to get

1.2GHz is enormous. With Wi-Fi 6E, we’re not doubling the amount of Wi-Fi

space, we're actually quadrupling the amount of usable space. That’s three,

four, or five times more spectrum, depending on where you are in the world.

Plus you don't have to worry about DFS [dynamic frequency selection],

especially indoors. Wi-Fi 6E is not going to be faster than Wi-Fi 6 and it’s

not adding enhanced technology features. The neat thing is that operating in 6GHz

will require Wi-Fi 6 or above clients. So, we’re not going to have any slow

clients and we’re not going to have a lot of noise.

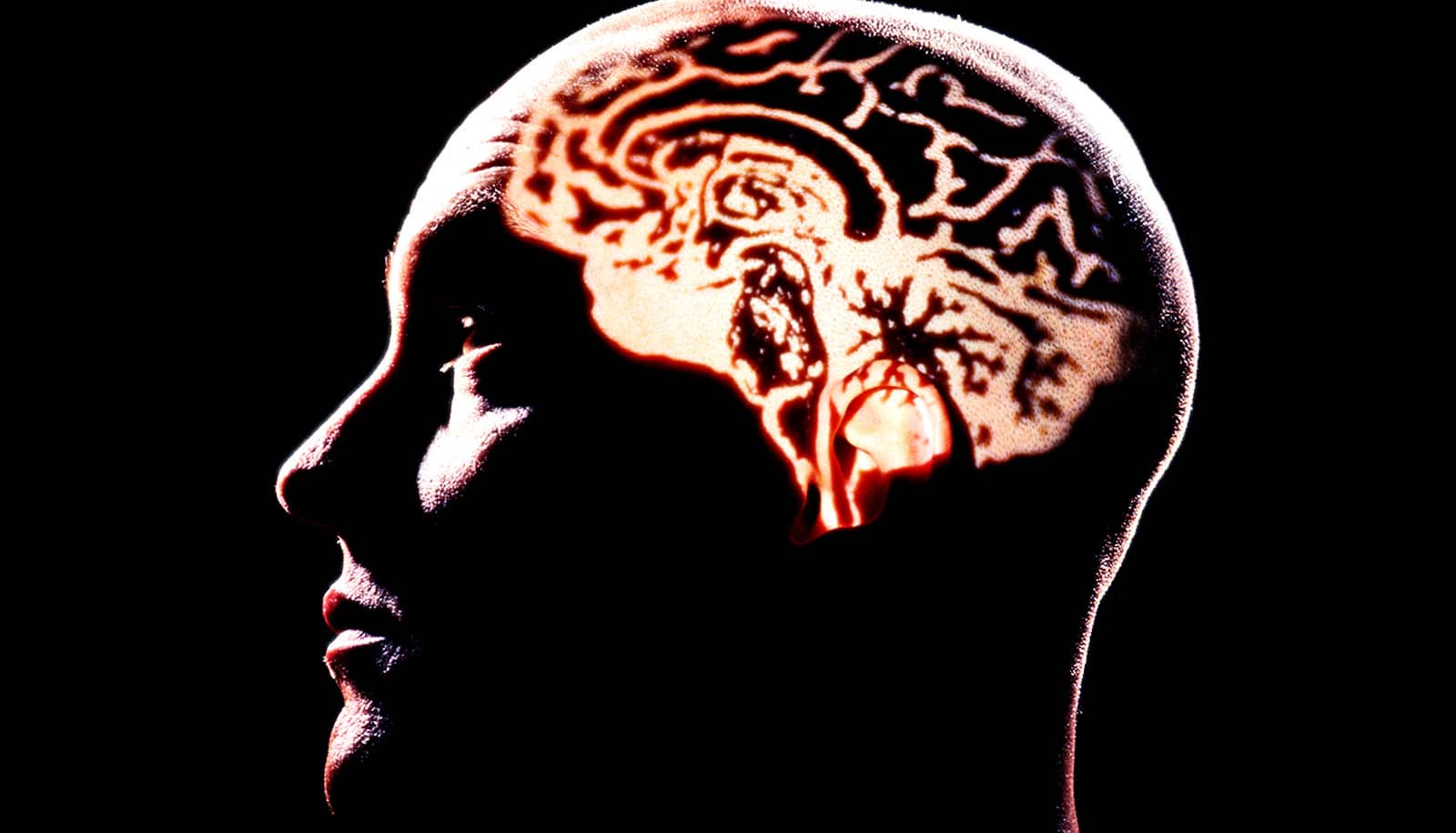

AI Tracks Seizures In Real Time

In brain science, the current understanding of most seizures is that they

occur when normal brain activity is interrupted by a strong, sudden

hyper-synchronized firing of a cluster of neurons. During a seizure, if a

person is hooked up to an electroencephalograph—a device known as an EEG that

measures electrical output—the abnormal brain activity is presented as

amplified spike-and-wave discharges. “But the seizure detection accuracy is

not that good when temporal EEG signals are used,” Bomela says. The team

developed a network inference technique to facilitate detection of a seizure

and pinpoint its location with improved accuracy. During an EEG session, a

person has electrodes attached to different spots on their head, each

recording electrical activity around that spot. “We treated EEG electrodes as

nodes of a network. Using the recordings (time-series data) from each node, we

developed a data-driven approach to infer time-varying connections in the

network or relationships between nodes,” Bomela says. Instead of looking

solely at the EEG data—the peaks and strengths of individual signals—the

network technique considers relationships.
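
The excerpt does not spell out the team's inference method, so the sketch below swaps in a much simpler stand-in: Pearson correlation computed over sliding windows. It only illustrates the general idea of treating electrodes as nodes and tracking how pairwise relationships change over time. The function names, the synthetic signals and the window sizes are assumptions made for illustration.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def windowed_networks(
    recordings: Dict[str, Sequence[float]], window: int, step: int
) -> List[Dict[Tuple[str, str], float]]:
    """Return one edge-weight map per time window, keyed by electrode pair."""
    names = sorted(recordings)
    length = min(len(recordings[name]) for name in names)
    networks = []
    for start in range(0, length - window + 1, step):
        end = start + window
        edges = {
            (a, b): pearson(recordings[a][start:end], recordings[b][start:end])
            for a, b in combinations(names, 2)
        }
        networks.append(edges)
    return networks


# Synthetic signals: O1 runs in antiphase with Fp1 at first, then locks in phase.
base = [math.sin(0.3 * t) for t in range(40)]
recordings = {
    "Fp1": base,
    "Fp2": [2 * v for v in base],
    "O1": [-v for v in base[:20]] + base[20:],
}

for edges in windowed_networks(recordings, window=20, step=20):
    print({pair: round(weight, 3) for pair, weight in edges.items()})
```

In this toy data the Fp1 to O1 edge flips sign between the two windows, the kind of change in relationships that looking at individual signal strengths alone would not show.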

How to Calculate ROI on Infrastructure Automation

The equation is simple. You have a long, manual process. You figure out a way

to automate it. Ta-da! What once took two hours now takes two minutes. And you

save a sweet 118 minutes. If you run this lovely piece of automation very

frequently, the value is multiplied. Saving 118 minutes 10 times a day is very

significant. Like magic. ... Back to the value formula. In real life, there

are more facets to this formula. One of the factors that affect the value you

get from automation is how many people have access to it. You can automate

something that can potentially run 2,000 times a day, every day; this could be

a game-changer in terms of value. But if this is something that 2,000

different people need to do, there is also the question of how accessible your

automation is. Getting your automation to run smoothly for other people is not

always a piece of cake (“What’s your problem?! It’s in git! Yes, you just get

it from there. I’ll send you the link. You don’t have a user? Get a user! You

can’t run it? Of course, you can’t, you need a runtime. Just get the runtime.

It’s all in the readme! Oh, wait, the version is not in the readme. Get 3.0,

it only works with 3.0. Oh, and you edited the config file, right?”).
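
The arithmetic above can be written out as a small helper. This is a sketch, not the article's formula: the `adoption_rate` parameter is a hypothetical way to model accessibility, meaning the fraction of potential runs in which people actually manage to use the automation.

```python
def minutes_saved_per_day(
    manual_minutes: float,
    automated_minutes: float,
    runs_per_day: int,
    adoption_rate: float = 1.0,
) -> float:
    """Minutes saved per day, scaled by the share of runs that use the automation."""
    if not 0.0 <= adoption_rate <= 1.0:
        raise ValueError("adoption_rate must be between 0 and 1")
    return (manual_minutes - automated_minutes) * runs_per_day * adoption_rate


# The example from the text: two hours cut to two minutes, run 10 times a day.
print(minutes_saved_per_day(120, 2, 10))  # prints 1180.0

# 2,000 potential runs a day, but only a quarter of users get the automation working.
print(minutes_saved_per_day(120, 2, 2000, adoption_rate=0.25))  # prints 59000.0
```

Under these assumptions, raising adoption matters as much as the per-run saving, which is the point about accessibility.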

The most in-demand IT staff companies want to hire

Companies want people who are good communicators and who will be

proactive--for example, quickly addressing a support ticket that comes in in

the morning, so users don't have to wait, Wallenberg added. In terms of

security hiring trends, "there have always been really brilliant people who

can sell the need for security to the business," and that is needed now more

than ever in IT, he said. "In a perfect world, it shouldn't have taken

high-profile breaches of personal and identifiable information for companies

to wake up and say we need to invest more money in it. So security leadership

and, further down the pole, they have to sell their vision on steps they need

to take to more systematically ensure systems are safe and companies are

protected from threats." Because of the current climate, it is also critical

that companies are prepared to handle remote onboarding of new tech team

members, Wallenberg said. "Companies that adopted a cloud-first strategy years

ago are in a much better position to onboard [new staff] than people who need

an office network to connect," he said.

An enterprise architect's guide to the data modeling process

Conceptual modeling in the process is normally based on the relationship

between application components. The model assigns a set of properties for each

component, which will then define the data relationships. These components can

include things like organizations, people, facilities, products and

application services. The definitions of these components should identify

business relationships. For example, a product ships from a warehouse, and

then to a retail store. An effective conceptual data model diligently traces

the flow of these goods, orders and payments between the various software

systems the company uses. Conceptual models are sometimes translated directly

into physical database models. However, when data structures are complex, it's

worth creating a logical model that sits in between. It populates the

conceptual model with the specific parametric data that will, eventually,

become the physical model. In the logical modeling step, create unique

identifiers that define each component's property and the scope of the data

fields.
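
A minimal sketch of what the logical step might produce for the product, warehouse and retail store example, using Python dataclasses. The class and field names are assumptions chosen for illustration; the article does not prescribe them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Warehouse:
    warehouse_id: str  # unique identifier
    city: str


@dataclass(frozen=True)
class RetailStore:
    store_id: str  # unique identifier
    city: str


@dataclass(frozen=True)
class Product:
    sku: str  # unique identifier
    name: str


@dataclass(frozen=True)
class Shipment:
    """Captures the business relationship: a product ships from a warehouse to a store."""

    shipment_id: str
    sku: str
    from_warehouse_id: str
    to_store_id: str
    quantity: int

    def __post_init__(self) -> None:
        # Scope of the data field: a shipment must move at least one unit.
        if self.quantity <= 0:
            raise ValueError("quantity must be positive")


warehouse = Warehouse("WH-1", "Odense")
store = RetailStore("ST-9", "Aarhus")
product = Product("SKU-42", "Desk lamp")
shipment = Shipment("SH-1001", product.sku, warehouse.warehouse_id, store.store_id, 12)
print(shipment)
```

The identifiers here would later become keys in the physical database model.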

"Leaders must encourage their organizations to dance to forms of music yet

to be heard." -- Warren G. Bennis

Microsoft's ZeRO-2 Speeds up AI Training 10x

Recent trends in NLP research have seen improved accuracy from larger models trained on larger datasets. OpenAI have proposed a set of "scaling laws" showing that model accuracy has a power-law relation with model size, and recently tested this idea by creating the GPT-3 model which has 175 billion parameters. Because these models are simply too large to fit in the memory of a single GPU, training them requires a cluster of machines and model-parallel training techniques that distribute the parameters across the cluster. There are several open-source frameworks available that implement efficient model parallelism, including GPipe and NVIDIA's Megatron, but these have sub-linear speedup due to the overhead of communication between cluster nodes, and using the frameworks often requires model refactoring. ZeRO-2 reduces the memory needed for training using three strategies: reducing model state memory requirements, offloading layer activations to the CPU, and reducing memory fragmentation.

The unexpected future of medicine

Along with robots, drones are being enlisted as a way of stopping the

person-to-person spread of coronavirus. Deliveries made by drone rather than

by truck, for example, remove the need for a human driver who may

inadvertently spread the virus. A number of governments have already drafted

drones in to help with distributing PPE to hospitals in need of kit: in the

UK, a trial of drones taking equipment from Hampshire to the Isle of Wight

was brought forward following the COVID-19 outbreak. In Ghana, drones have

also been put to work collecting patient samples for coronavirus testing,

bringing the tests from rural areas into hospitals in more populous regions

for testing. Meanwhile, in several countries, drones are also being used to

drop off medicine to people in remote communities or those who are

sheltering in place. Drones have also been used to disinfect outdoor markets

and other areas to slow the spread of the disease. And in South Korea,

drones have been drafted in to celebrate healthcare workers and spread

public health messages, such as reminding people to continue wearing masks

and washing their hands.

Quote for the day:
