Quote for the day:

"To succeed in business it is necessary to make others see things as you see them." -- Aristotle Onassis

World Backup Day: Time to take action on data protection

“The best protection that businesses can give their backups is to keep at least two copies, one offline and the other offsite”, continues Fine. “By keeping one offline, an airgap is created between the backup and the rest of the IT environment. Should a business be the victim of a cyberattack, the threat physically cannot spread into the backup as there’s no connection to enable this daisy-chain effect. By keeping another copy offsite, businesses can prevent the backup suffering due to the same disaster (such as flooding or wildfires) as the main office.” ... “As such, traditional backup best practices remain important. Measures like encryption (in transit and at rest), strong access controls, immutable or write-once storage, and air-gapped or physically separated backups help defend against increasingly sophisticated threats. To ensure true resilience, backups must be tested regularly. Testing confirms that the data is recoverable, helps teams understand the recovery process, and verifies recovery speeds, whilst supporting good governance and risk management.” ... “With the move towards a future of AI-driven technologies, the amount of data we generate and use is set to increase exponentially. With data often containing valuable information, any loss or impact could have devastating consequences.”
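Two of the practices Fine describes can be checked mechanically: keeping at least two copies (one offline, a different one offsite), and testing that a backup actually restores. The sketch below is an illustration under stated assumptions, not a tool from the source. `BackupCopy`, `meets_two_copy_rule`, `sha256_of`, and `restore_test` are hypothetical names. The offline/offsite flags are assumed to come from your own backup inventory. A plain file copy stands in for whatever restore step your backup product performs. The restored file is compared with a SHA-256 checksum recorded at backup time, so the test shows the data is recoverable and not merely present.

```python
import hashlib
import shutil
import tempfile
from dataclasses import dataclass
from pathlib import Path


@dataclass
class BackupCopy:
    """One stored copy of a backup (hypothetical inventory record)."""
    location: str
    offline: bool  # air-gapped: no network path from production systems
    offsite: bool  # stored away from the main office


def meets_two_copy_rule(copies: list[BackupCopy]) -> bool:
    """True if one copy is offline and a *different* copy is offsite."""
    return any(
        a.offline and b.offsite
        for i, a in enumerate(copies)
        for j, b in enumerate(copies)
        if i != j
    )


def sha256_of(path: Path) -> str:
    """Stream the file through SHA-256 so large backups are not loaded into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_test(backup: Path, expected_sha256: str) -> bool:
    """Restore into a scratch directory and compare with the checksum taken at backup time."""
    with tempfile.TemporaryDirectory() as scratch:
        restored = Path(scratch) / backup.name
        shutil.copy2(backup, restored)  # placeholder for your product's real restore step
        return sha256_of(restored) == expected_sha256


if __name__ == "__main__":
    copies = [
        BackupCopy("nas-primary", offline=False, offsite=False),
        BackupCopy("tape-vault", offline=True, offsite=False),
        BackupCopy("cloud-region-b", offline=False, offsite=True),
    ]
    print(meets_two_copy_rule(copies))  # True: tape-vault is offline, cloud-region-b is offsite
```

Timing `restore_test` against a full-size backup would also give a rough measure of recovery speed, the third thing the quote says testing should verify.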

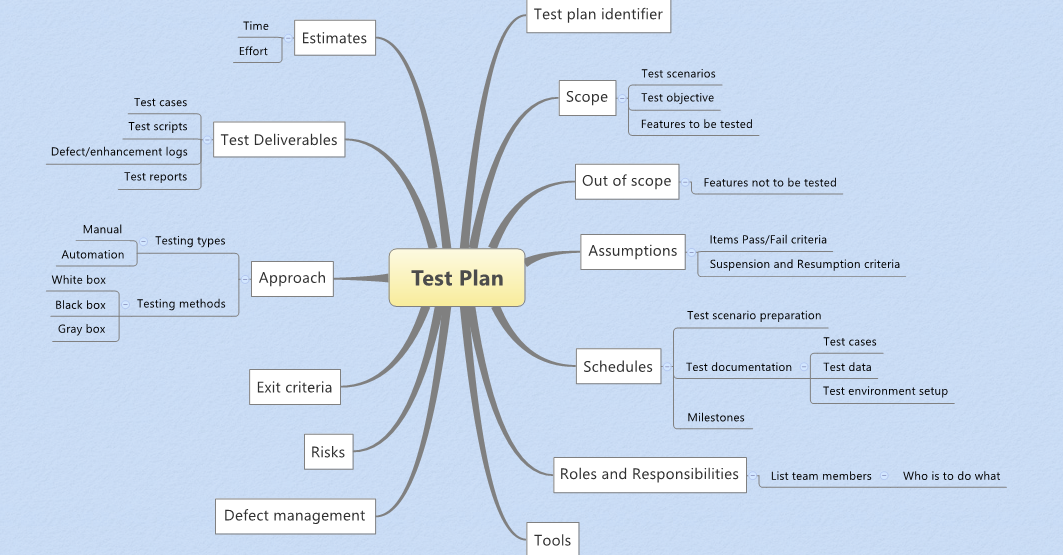

5 Common Pitfalls in IT Disaster Recovery (and How to Avoid Them)

One of the most common missteps in IT disaster recovery is viewing it as a “check-the-box” exercise: something to complete once and file away. But disaster recovery isn’t static. As infrastructure evolves, business processes shift, and new threats emerge, a plan that was solid two years ago may now be dangerously outdated. An untested, unrefreshed IT/DR plan can give a false sense of security, only to fail when it’s needed most. Instead, treat IT/DR as a living process. Regularly review and update it to reflect changes in your technology stack, business priorities, and risk landscape. ... A disaster recovery plan that lives only on paper is likely to fail. Many organizations either skip testing altogether or run through it under ideal, low-pressure conditions (far from the chaos of a real crisis). When a true disaster hits, the stress, urgency, and complexity can quickly overwhelm teams that haven’t practiced their roles. That’s why regular, scenario-based testing is essential. ... Even the most robust IT disaster recovery plan can fail if roles are unclear and communication breaks down. Without well-defined responsibilities and structured escalation paths, response efforts become disorganized and slow, often when speed matters most.
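One way to stop a plan from quietly going stale is to store review dates, test dates, and the escalation path alongside the plan and check them automatically. This also catches the missing-owner problem described above. This is a minimal sketch. `DRPlan` and `plan_findings` are hypothetical, and the 90-day review and 180-day test intervals are placeholder assumptions, not recommendations from the source.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class DRPlan:
    """Hypothetical record kept alongside a disaster recovery plan."""
    name: str
    last_reviewed: date
    last_tested: date  # date of the last scenario-based exercise
    # Ordered escalation path as (role, named owner) pairs; "" means unassigned.
    escalation_path: list[tuple[str, str]] = field(default_factory=list)


def plan_findings(
    plan: DRPlan,
    today: date,
    review_every: timedelta = timedelta(days=90),  # placeholder interval
    test_every: timedelta = timedelta(days=180),   # placeholder interval
) -> list[str]:
    """Return human-readable problems that make the plan unsafe to rely on."""
    findings = []
    if today - plan.last_reviewed > review_every:
        findings.append("review overdue")
    if today - plan.last_tested > test_every:
        findings.append("scenario test overdue")
    if not plan.escalation_path:
        findings.append("no escalation path defined")
    for role, owner in plan.escalation_path:
        if not owner:
            findings.append(f"role '{role}' has no named owner")
    return findings


if __name__ == "__main__":
    plan = DRPlan(
        name="core-erp",
        last_reviewed=date(2024, 1, 10),
        last_tested=date(2023, 6, 1),
        escalation_path=[("incident commander", "on-call lead"), ("comms lead", "")],
    )
    for finding in plan_findings(plan, today=date(2024, 3, 1)):
        print(finding)
```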

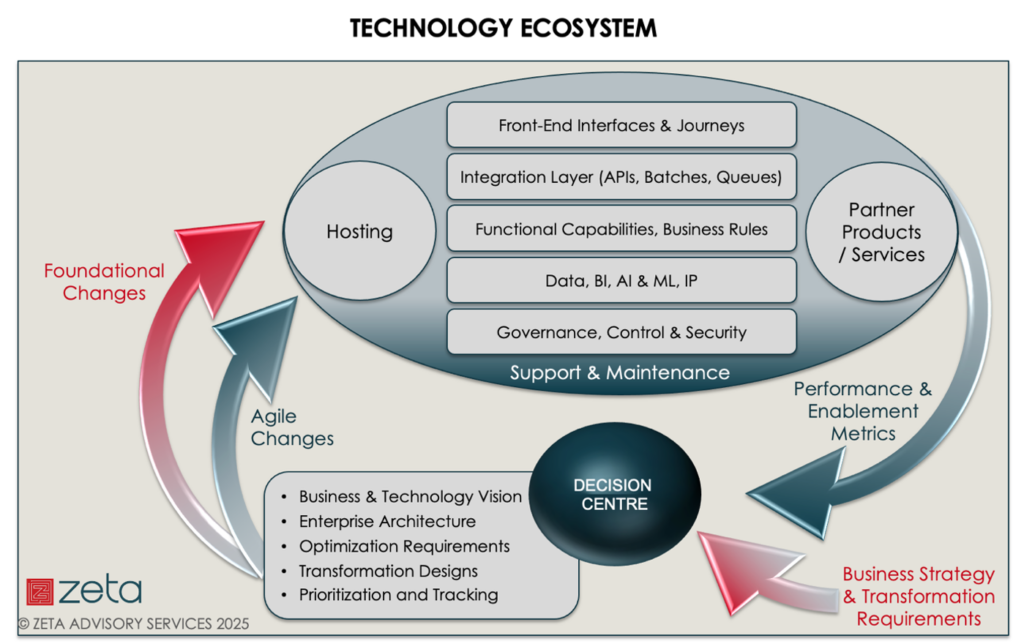

How CISOs can balance business continuity with other responsibilities

The challenge for CISOs is providing security while ensuring the business recovers quickly without reinfecting systems or making rushed decisions that could lead to repeated incidents. The new reality of business continuity is dealing with cyber-led disruptions. Organizations have taken note, with 46% nominating cybersecurity incidents as their top business continuity priority ... While CISOs may find that their remit is expanding to cover business continuity, a lack of clear delineation of roles and responsibilities can spell trouble. To handle business continuity effectively, cybersecurity leaders need a framework for collaborating with IT leadership. Responding to events requires a delicate balance between thoroughness of investigation and speed of recovery, a balance that traditional business continuity planning approaches may not accommodate. On paper, the CISO owns the protection of confidentiality, integrity, and availability, but availability was outsourced a long time ago to either the CIO or facilities, according to Blake. “BCDR is typically owned by the CIO or facilities, but in a cyber incident, the CISO will be holding the toilet chain for the attack, while all the plumbing is provided by the CIO,” he says.

Two things you need in place to successfully adopt AI

A well-defined policy is essential for companies to deploy and leverage this technology securely. This technology will continue to move fast and innovate, giving automation and machines more power in organizational decision-making. The first line of defense for companies is a clear, accessible AI policy that the whole company is aware of and subscribes to. Enforcing a security policy also means defining which risk ratings are acceptable for an organization and being able to reprioritize those ratings as the environment changes. There are always going to be errors and false positives. Different organizations have different risk tolerances or different interpretations depending on their operations and data sensitivity. ...
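Defining acceptable risk ratings and reprioritizing them as the environment changes can be expressed as a per-category threshold that an organization tunes over time. The sketch below assumes a 0 to 10 risk score and uses hypothetical category names. `Finding` and `RiskPolicy` are illustrative and not part of any particular security product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    id: str
    category: str      # hypothetical categories, e.g. "data-exposure"
    risk_score: float  # assumed scale: 0.0 (negligible) to 10.0 (critical)


class RiskPolicy:
    """An organization's risk tolerance: the highest acceptable score per category."""

    def __init__(self, thresholds: dict[str, float], default: float = 5.0):
        self.thresholds = dict(thresholds)
        self.default = default  # applies to categories the policy does not name

    def reprioritize(self, category: str, new_threshold: float) -> None:
        """Tighten (lower) or relax (raise) tolerance as the environment changes."""
        self.thresholds[category] = new_threshold

    def needs_action(self, findings: list[Finding]) -> list[Finding]:
        """Findings above tolerance, highest risk first."""
        flagged = [
            f for f in findings
            if f.risk_score > self.thresholds.get(f.category, self.default)
        ]
        return sorted(flagged, key=lambda f: f.risk_score, reverse=True)


if __name__ == "__main__":
    # A data-sensitive organization tolerates little data-exposure risk.
    policy = RiskPolicy({"data-exposure": 3.0, "model-output": 6.0})
    findings = [
        Finding("F1", "data-exposure", 4.0),
        Finding("F2", "model-output", 5.0),
    ]
    print([f.id for f in policy.needs_action(findings)])  # ['F1']
    policy.reprioritize("model-output", 4.5)  # e.g. after the model gains more autonomy
    print([f.id for f in policy.needs_action(findings)])  # ['F2', 'F1']
```

The thresholds live in data, not code. Two organizations can therefore run the same checks with different tolerances, which matches the point about differing risk appetites and interpretations.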

Developers need a secure-code mindset that extends beyond basic coding knowledge. Code written by developers needs to be clear, elegant, and secure; otherwise, it is left open to attack. Industry-driven secure coding training is therefore essential and must be built into an organization’s DNA, especially at a time when the already prevalent AppSec dilemma is being intensified by the current tech layoffs.

3 things that haven’t changed in software engineering

Strategic thinking, going beyond coding to building, has long been part of a software engineer’s job. Working in service of a larger purpose helps engineers develop more impactful solutions than simply coding to a set of specifications. With the rise in AI-assisted coding (and, thus, the ability to code and build much faster), the “why” remains at the forefront. We drive business impact by delivering measurable customer benefits. And you have to understand a problem before you can solve it with code. ... The best engineers are inherently curious, with an eye for detail and a desire to learn. Through the decades, that hasn’t really changed; a learning mindset continues to be important for technologists at every level. I’ve always been curious about what makes things tick. I remember taking things apart as a child to see how they worked. I knew I wanted to be an engineer when I was able to put them back together again. ... Not every great coder aspires to be a people leader; I certainly didn’t. I was introverted growing up. But as I worked my way up at Intuit, I saw firsthand how the right leadership skills could deepen my impact, even when I wasn’t charged with leading anybody. I’ve seen how quick decision-making, holistic problem-solving, and efficient delegation can drive impact at every level of an organization. And these assets only become more important as we fold AI into the process.

Understanding AI Agent Memory: Building Blocks for Intelligent Systems

Episodic memory in AI refers to the storage of past interactions and the specific actions taken by the agent. Like human memory, episodic memory records the events or “episodes” an agent experiences during its operation. This type of memory is crucial because it enables the agent to reference previous conversations, decisions, and outcomes to inform future actions. ...
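A minimal episodic memory can be a bounded, time-stamped log of three things: what the user asked, what the agent did, and what happened. Simple lookups let the agent consult that log before acting again. This is a sketch under assumptions. The `Episode` fields, the capacity limit, and the substring-based `recall` are illustrative choices, and production agents often index episodes with embeddings instead.

```python
from collections import deque
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Episode:
    timestamp: datetime
    user_input: str
    action: str   # what the agent did in response
    outcome: str  # what happened as a result


class EpisodicMemory:
    """Bounded log of past interactions; the oldest episodes are dropped first."""

    def __init__(self, capacity: int = 1000):
        self._episodes: deque[Episode] = deque(maxlen=capacity)

    def record(self, user_input: str, action: str, outcome: str) -> None:
        self._episodes.append(
            Episode(datetime.now(timezone.utc), user_input, action, outcome)
        )

    def recent(self, n: int = 5) -> list[Episode]:
        """The last n episodes, oldest first."""
        if n <= 0:
            return []
        return list(self._episodes)[-n:]

    def recall(self, keyword: str) -> list[Episode]:
        """Episodes whose request or outcome mentions the keyword (case-insensitive)."""
        kw = keyword.lower()
        return [
            e for e in self._episodes
            if kw in e.user_input.lower() or kw in e.outcome.lower()
        ]


if __name__ == "__main__":
    memory = EpisodicMemory(capacity=100)
    memory.record("Reset my password", "sent reset link", "link expired")
    memory.record("Password reset again", "sent new link", "user logged in")
    for ep in memory.recall("password"):
        print(ep.action, "->", ep.outcome)
```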

Semantic memory in AI encompasses the agent’s repository of factual, external information and internal knowledge. Unlike episodic memory, which is tied to specific interactions, semantic memory holds generalized knowledge that the agent can use to understand and interpret the world. This may include language rules, domain-specific information, or awareness of the agent’s own capabilities and limitations. One common use of semantic memory is in Retrieval-Augmented Generation (RAG) applications, where the agent leverages a vast data store to answer questions accurately. ... Procedural memory is the backbone of an AI system’s operational aspects. It includes systemic information such as the structure of the system prompt, the tools available to the agent, and the guardrails that ensure safe and appropriate interactions. In essence, procedural memory defines “how” the agent functions rather than “what” it knows.
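The semantic/procedural split can be sketched as two separate components. One is a store of general facts that is queried per question. The other is a fixed description of how the agent operates, which shapes every prompt. In this illustration, `SemanticMemory` ranks facts by word overlap as a stand-in for the embedding similarity search that RAG systems typically use. `ProceduralMemory`, with its `system_prompt`, `tools`, and `guardrails` fields, is a hypothetical structure, not a specific framework's API.

```python
import re
from dataclasses import dataclass, field


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class SemanticMemory:
    """General facts the agent can draw on, independent of any one conversation."""

    def __init__(self, facts: list[str]):
        self.facts = list(facts)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Top-k facts by word overlap (a toy stand-in for embedding similarity)."""
        q = tokenize(query)
        scored = [(len(q & tokenize(fact)), fact) for fact in self.facts]
        scored = [(score, fact) for score, fact in scored if score > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
        return [fact for _, fact in scored[:k]]


@dataclass
class ProceduralMemory:
    """How the agent operates: prompt structure, available tools, guardrails."""
    system_prompt: str
    tools: list[str] = field(default_factory=list)
    guardrails: list[str] = field(default_factory=list)

    def build_prompt(self, question: str, context: list[str]) -> str:
        lines = [
            self.system_prompt,
            "Tools: " + ", ".join(self.tools),
            "Rules: " + "; ".join(self.guardrails),
            "Context:",
        ]
        lines += [f"- {fact}" for fact in context]
        lines.append(f"Question: {question}")
        return "\n".join(lines)


if __name__ == "__main__":
    semantic = SemanticMemory([
        "The refund window is 30 days.",
        "Support hours are 9am to 5pm.",
        "Refunds go to the original payment method.",
    ])
    procedural = ProceduralMemory(
        system_prompt="You are a customer support agent.",
        tools=["lookup_order", "issue_refund"],
        guardrails=["never reveal full card numbers"],
    )
    question = "How many days is the refund window?"
    print(procedural.build_prompt(question, semantic.retrieve(question)))
```

The procedural part stays the same across questions, while the semantic part changes with every query. That mirrors the "how" versus "what" distinction above.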

Why Leadership Teams Need Training In Crisis Management

You don’t have time to mull over different iterations or think through different possibilities and outcomes. You and your team need to make a decision quickly. Depending on the crisis at hand, you’ll need to assess the information available, evaluate potential risks, and make a timely decision. Waiting can be detrimental to your business: failing to inform customers that their information was compromised during a cybersecurity attack could lead them to take their business elsewhere. ... Crisis or not, communication is how teams share information and build trust. During a crisis, it’s up to the leader to communicate efficiently and effectively with internal teams. It’s natural for panic to ensue during a time of unpredictability and stress. ... It’s not only internal communications that you’re responsible for. You also need to consider what you’re communicating to your customers, vendors, and shareholders. This is where crisis management can come in handy. While you should know how best to speak to your team, communicating externally can be more challenging. ... One crisis can be the end of your business if it isn’t handled properly and thoughtfully. This is especially the case for businesses that undergo internal crises, such as cybersecurity attacks, product recalls, or miscalculated marketing campaigns.

SaaS Is Broken: Why Bring Your Own Cloud (BYOC) Is the Future

BYOC allows customers to run SaaS applications using their own cloud infrastructure and resources rather than relying on a third-party vendor’s infrastructure. This hybrid approach preserves the convenience and velocity of SaaS while giving customers the cost control and ownership of self-hosted solutions. Building a BYOC stack that is easy to adopt, cost-effective, and performant is a significant engineering challenge. But as a software vendor, you’ll find that the benefits to your customers make it worth the effort. ... SaaS brought speed and simplicity to software consumption, while traditional on-premises deployments offered control and predictability. But a more balanced approach is emerging as companies face rising costs, compliance challenges, and the need for data ownership. BYOC is the consolidated evolution of both worlds, combining the convenience of SaaS with the control of on-premises deployment. Instead of sending massive amounts of data to third-party vendors, companies can run SaaS applications within their own cloud infrastructure. This means predictable costs, better compliance, and tailored performance. We’ve seen this hybrid model succeed in other areas: Meta’s Llama gained massive adoption because users could run it on their own infrastructure.
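The difference between the three deployment models comes down to two questions: whose cloud account the workload runs in, and who operates the software. The sketch below encodes that as a simplification. The `DeploymentModel` type and its field names are hypothetical. The model also ignores two common features of real BYOC offerings: a vendor-hosted control plane, and telemetry that may be sent back to the vendor.

```python
from dataclasses import dataclass
from enum import Enum


class Party(Enum):
    VENDOR = "vendor"
    CUSTOMER = "customer"


@dataclass(frozen=True)
class DeploymentModel:
    name: str
    runs_in: Party      # whose cloud account hosts the workload and its data
    operated_by: Party  # who deploys, upgrades, patches, and monitors the software

    @property
    def data_stored_with_vendor(self) -> bool:
        return self.runs_in is Party.VENDOR

    @property
    def customer_operates(self) -> bool:
        return self.operated_by is Party.CUSTOMER


MODELS = [
    DeploymentModel("SaaS", runs_in=Party.VENDOR, operated_by=Party.VENDOR),
    DeploymentModel("Self-hosted", runs_in=Party.CUSTOMER, operated_by=Party.CUSTOMER),
    DeploymentModel("BYOC", runs_in=Party.CUSTOMER, operated_by=Party.VENDOR),
]

if __name__ == "__main__":
    for m in MODELS:
        print(f"{m.name}: data stored with vendor={m.data_stored_with_vendor}, "
              f"customer operates={m.customer_operates}")
```

BYOC is the only row where the data stays in the customer's account while the vendor still runs the software. That is the combination of SaaS convenience and on-premises control described above.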

What Happens When AI Is Used as an Autonomous Weapon

The threat to enterprises is already substantial, according to Ben Colman, co-founder and CEO at deepfake and AI-generated media detection platform Reality Defender. “We’re seeing bad actors leverage AI to create highly convincing impersonations that bypass traditional security mechanisms at scale. AI voice cloning technology is enabling fraud at unprecedented levels, where attackers can convincingly impersonate executives in phone calls to authorize wire transfers or access sensitive information,” Colman says. Meanwhile, deepfake videos are compromising verification processes that previously relied on visual confirmation, he adds. “These threats are primarily coming from organized criminal networks and nation-state actors who recognize the asymmetric advantage AI offers. They’re targeting communication channels first because they’re the foundation of trust in business operations.” Attackers are using AI capabilities to automate, scale, and disguise traditional attack methods. According to Casey Corcoran, field CISO at SHI company Stratascale, examples range from creating more convincing phishing and social engineering attacks to automatically modifying malware so that it is unique to each attack, thereby defeating signature-based detection.
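The simplest kind of signature, a hash of the entire payload, shows most clearly why per-attack mutation defeats signature-based detection. In the sketch below, the payloads are harmless placeholder bytes and `SignatureScanner` is a hypothetical toy. Real scanners also match byte patterns and use fuzzier techniques, but the principle is the same: an exact signature only matches exact content.

```python
import hashlib


def signature(payload: bytes) -> str:
    """Exact-match signature: SHA-256 of the whole payload."""
    return hashlib.sha256(payload).hexdigest()


class SignatureScanner:
    """Toy scanner that flags payloads whose hash is on a known-bad list."""

    def __init__(self, known_bad: list[bytes]):
        self.known = {signature(p) for p in known_bad}

    def is_flagged(self, payload: bytes) -> bool:
        return signature(payload) in self.known


if __name__ == "__main__":
    sample = b"EXAMPLE-PAYLOAD-v1"   # harmless placeholder bytes, not malware
    variant = b"EXAMPLE-PAYLOAD-v2"  # one byte changed, as a per-attack mutation would do
    scanner = SignatureScanner([sample])
    print(scanner.is_flagged(sample))   # True
    print(scanner.is_flagged(variant))  # False
```

A one-byte change yields a completely different hash, so each uniquely modified copy needs its own signature before it can be caught this way.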

Worldwide spending on genAI to surge by hundreds of billions of dollars

“The market’s growth trajectory is heavily influenced by the increasing prevalence of AI-enabled devices, which are expected to comprise almost the entire consumer device market by 2028,” said Lovelock. “However, consumers are not chasing these features. As the manufacturers embed AI as a standard feature in consumer devices, consumers will be forced to purchase them.” For organizations, AI PCs could solve key issues they face when using cloud and data center AI instances, including cost, security, and privacy concerns, according to a study released this month by IDC Research. This year is expected to be the year of the AI PC, according to Forrester Research. It defines an AI PC as one that has an embedded AI processor and algorithms specifically designed to improve the experience of AI workloads across the central processing unit (CPU), graphics processing unit (GPU), and neural processing unit (NPU). ... “This reflects a broader trend toward democratizing AI capabilities, ensuring that teams across functions and levels can benefit from its transformative potential,” said Tom Mainelli, IDC’s group vice president for device and consumer research. “As AI tools become more accessible and tailored to specific job functions, they will further enhance productivity, collaboration, and innovation across industries.”
