Quote for the day:

“Develop success from failures. Discouragement and failure are two of the surest stepping stones to success.” -- Dale Carnegie

Is ‘Decentralized Data Contributor’ the Next Big Role in the AI Economy?

Training AI models requires real-world, high-quality, and diverse data. The problem is that the astronomical demand is slowly outpacing the available sources. Take public datasets as an example. Not only is this data overused, but it’s often restricted to avoid privacy or legal concerns. There’s also a huge issue with geographic or spatial data gaps, where the information about specific regions is incomplete, which can lead to inaccuracies or biases in AI models. Decentralized contributors can help address these challenges. ... Even though a large part of the world’s population has no problem with passively sharing data when browsing the web, due to the relative infancy of decentralized systems, active data contribution may seem to many like a bridge too far. Anonymized data isn’t 100% safe. Determined threat actors can sometimes re-identify individuals from anonymized datasets. The concern is valid, which is why decentralized projects working in the field must adopt privacy-by-design architectures where privacy is a core part of the system instead of being layered on top after the fact. Zero-knowledge proofs are another technique that can reduce privacy risks by allowing contributors to prove the validity of the data without exposing the underlying information. For example, a contributor can demonstrate that their identity meets set criteria without divulging anything identifiable.

The ROI of Governance: Nithesh Nekkanti on Taming Enterprise Technical Debt

A key symptom of technical debt is rampant code duplication, which inflates maintenance efforts and increases the risk of bugs. A multi-pronged strategy focused on standardization and modularity proved highly effective, leading to a 30% reduction in duplicated code. This initiative went beyond simple syntax rules to forge a common development language, defining exhaustive standards for Apex and Lightning Web Components. By measuring metrics like technical debt density, teams can effectively track the health of their codebase as it evolves. ... Developers may perceive stricter quality gates as a drag on velocity, and the task of addressing legacy code can seem daunting. Overcoming this resistance requires clear communication and a focus on the long-term benefits. "Driving widespread adoption of comprehensive automated testing and stringent code quality tools invariably presents cultural and operational challenges," Nekkanti acknowledges. The solution was to articulate a compelling vision. ... Not all technical debt is created equal, and a mature governance program requires a nuanced approach to prioritization. The PEC developed a technical debt triage framework to systematically categorize issues based on type, business impact, and severity. This structured process is vital for managing a complex ecosystem, where a formal Technical Governance Board (TGB) can use data to make informed decisions about where to invest resources.

Why Third-Party Risk Management (TPRM) Can’t Be Ignored in 2025

In today’s business world, no organization operates in a vacuum. We rely on vendors, suppliers, and contractors to keep things running smoothly. But every connection brings risk. Just recently, Fortinet made headlines as threat actors were found maintaining persistent access to FortiOS and FortiProxy devices using known vulnerabilities—while another actor allegedly offered a zero-day exploit for FortiGate firewalls on a dark web forum. These aren’t just IT problems—they’re real reminders of how vulnerabilities in third-party systems can open the door to serious cyber threats, regulatory headaches, and reputational harm. That’s why Third-Party Risk Management (TPRM) has become a must-have, not a nice-to-have. ... Think of TPRM as a structured way to stay on top of the risks your third parties, suppliers and vendors might expose you to. It’s more than just ticking boxes during onboarding—it’s an ongoing process that helps you monitor your partners’ security practices, compliance with laws, and overall reliability. From cloud service providers, logistics partners, and contract staff to software vendors, IT support providers, marketing agencies, payroll processors, data analytics firms, and even facility management teams—if they have access to your systems, data, or customers, they’re part of your risk surface.

Ushering in a new era of mainframe modernization

One of the key challenges in modern IT environments is integrating data across siloed systems. Mainframe data, despite being some of the most valuable in the enterprise, often remains underutilized due to accessibility barriers. With a z17 foundation, software data solutions can more easily bridge critical systems, offering unprecedented data accessibility and observability. For CIOs, this is an opportunity to break down historical silos and make real-time mainframe data available across cloud and distributed environments without compromising performance or governance. As data becomes more central to competitive advantage, the ability to bridge existing and modern platforms will be a defining capability for future-ready organizations. ... For many industries, mainframes continue to deliver unmatched performance, reliability, and security for mission-critical workloads—capabilities that modern enterprises rely on to drive digital transformation. Far from being outdated, mainframes are evolving through integration with emerging technologies like AI, automation, and hybrid cloud, enabling organizations to modernize without disruption. With decades of trusted data and business logic already embedded in these systems, mainframes provide a resilient foundation for innovation, ensuring that enterprises can meet today’s demands while preparing for tomorrow’s challenges.

Fighting Cyber Threat Actors with Information Sharing

Effective threat intelligence sharing creates exponential defensive improvements that extend far beyond individual organizational benefits. It not only raises the cost and complexity for attackers but also lowers their chances of success. Information Sharing and Analysis Centers (ISACs) demonstrate this multiplier effect in practice. ISACs are, essentially, non-profit organizations that provide companies with timely intelligence and real-world insights, helping them boost their security. The success of existing ISACs has also driven expansion efforts, with 26 U.S. states adopting the NAIC Model Law to encourage information sharing in the insurance sector. ... Although the benefits of information sharing are clear, putting it into practice is a different story. Common obstacles include legal issues regarding data disclosure, worries over revealing vulnerabilities to competitors, and the technical challenge itself: devising standardized threat intelligence formats is no walk in the park. And yet it can certainly be done. Case in point: the above-mentioned partnership between CrowdStrike and Microsoft. Its success hinges on its well-thought-out governance system, which allows these two business rivals to collaborate on threat attribution while protecting their proprietary techniques and competitive advantages.

The Ultimate Guide to Creating a Cybersecurity Incident Response Plan

Creating a fit-for-purpose cyber incident response plan isn’t easy. However, by adopting a structured approach, you can ensure that your plan is tailored to your organisational risk context and will actually help your team manage the chaos that follows a cyber attack. In our experience, following a step-by-step process for building a robust IR plan always works. Instead of jumping straight into creating a plan, it’s best to lay a strong foundation with training and risk assessment and then work your way up. ... Conducting a cyber risk assessment before creating a Cybersecurity Incident Response Plan is critical. Every business has different assets, systems, vulnerabilities, and exposure to risk. A thorough risk assessment identifies which assets need the most protection. These assets could be customer data, intellectual property, or critical infrastructure. You’ll also be able to identify the most likely entry points for attackers. This insight ensures that the incident response plan is tailored and focused on the most pressing risks instead of being a generic checklist. A risk assessment will also help you define the potential impact of various cyber incidents on your business. You can prioritise response strategies based on which incidents would be most damaging. Without this step, response efforts may be misaligned or inadequate in the face of a real threat.
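As a rough illustration of how a risk assessment can feed prioritisation, the sketch below ranks a few incident scenarios by a simple likelihood times impact score. The 1 to 5 scales, the scenario names, and the `IncidentScenario` and `prioritise` helpers are all assumptions made for this example; the excerpt above does not prescribe a scoring method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IncidentScenario:
    """One hypothetical incident type identified during a risk assessment."""
    name: str
    asset: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def risk_score(self) -> int:
        # Simple likelihood x impact product; real programmes may weight differently.
        return self.likelihood * self.impact


def prioritise(scenarios: list[IncidentScenario]) -> list[IncidentScenario]:
    """Return scenarios ordered from highest to lowest risk score."""
    # Ties on score are broken by impact, so the more damaging incident comes first.
    return sorted(scenarios, key=lambda s: (s.risk_score, s.impact), reverse=True)


# Illustrative data only; a real assessment would supply its own scenarios.
scenarios = [
    IncidentScenario("Source code leak", "intellectual property", 2, 4),
    IncidentScenario("Ransomware on file servers", "critical infrastructure", 3, 5),
    IncidentScenario("Phishing-led mailbox compromise", "customer data", 4, 3),
]

for s in prioritise(scenarios):
    print(f"{s.risk_score:>2}  {s.name} ({s.asset})")
```

The ranked list is only a starting point: the scenarios at the top are the ones whose response playbooks would be written and rehearsed first.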

How to Become the Leader Everyone Trusts and Follows With One Skill

Leaders grounded in reason have a unique ability; they can take complex situations and make sense of them. They look beyond the surface to find meaning and use logic as their compass. They're able to spot patterns others might miss and make clear distinctions between what's important and what's not. Instead of being guided by emotion, they base their decisions on credibility, relevance and long-term value. ... The ego doesn't like reason. It prefers control, manipulation and being right. At its worst, it twists logic to justify itself or dominate others. Some leaders use data selectively or speak in clever soundbites, not to find truth but to protect their image or gain power. But when a leader chooses reason, something shifts. They let go of defensiveness and embrace objectivity. They're able to mediate fairly, resolve conflicts wisely and make decisions that benefit the whole team, not just their own ego. This mindset also breaks down the old power structures. Instead of leading through authority or charisma, leaders at this level influence through clarity, collaboration and solid ideas. ... Leaders who operate from reason naturally elevate their organizations. They create environments where logic, learning and truth are not just considered as values, they're part of the culture. This paves the way for innovation, trust and progress.

Why enterprises can’t afford to ignore cloud optimization in 2025

Cloud computing has long been the backbone of modern digital infrastructure, primarily built around general-purpose computing. However, the era of one-size-fits-all cloud solutions is rapidly fading in a business environment increasingly dominated by AI and high-performance computing (HPC) workloads. Legacy cloud solutions struggle to meet the computational intensity of deep learning models, preventing organizations from fully realizing the benefits of their investments. At the same time, cloud-native architectures have become the standard, as businesses face mounting pressure to innovate, reduce time-to-market, and optimize costs. Without a cloud-optimized IT infrastructure, organizations risk losing key operational advantages—such as maximizing performance efficiency and minimizing security risks in a multi-cloud environment—ultimately negating the benefits of cloud-native adoption. Moreover, running AI workloads at scale without an optimized cloud infrastructure leads to unnecessary energy consumption, increasing both operational costs and environmental impact. This inefficiency strains financial resources and undermines corporate sustainability goals, which are now under greater scrutiny from stakeholders who prioritize green initiatives.

Data Protection for Whom?

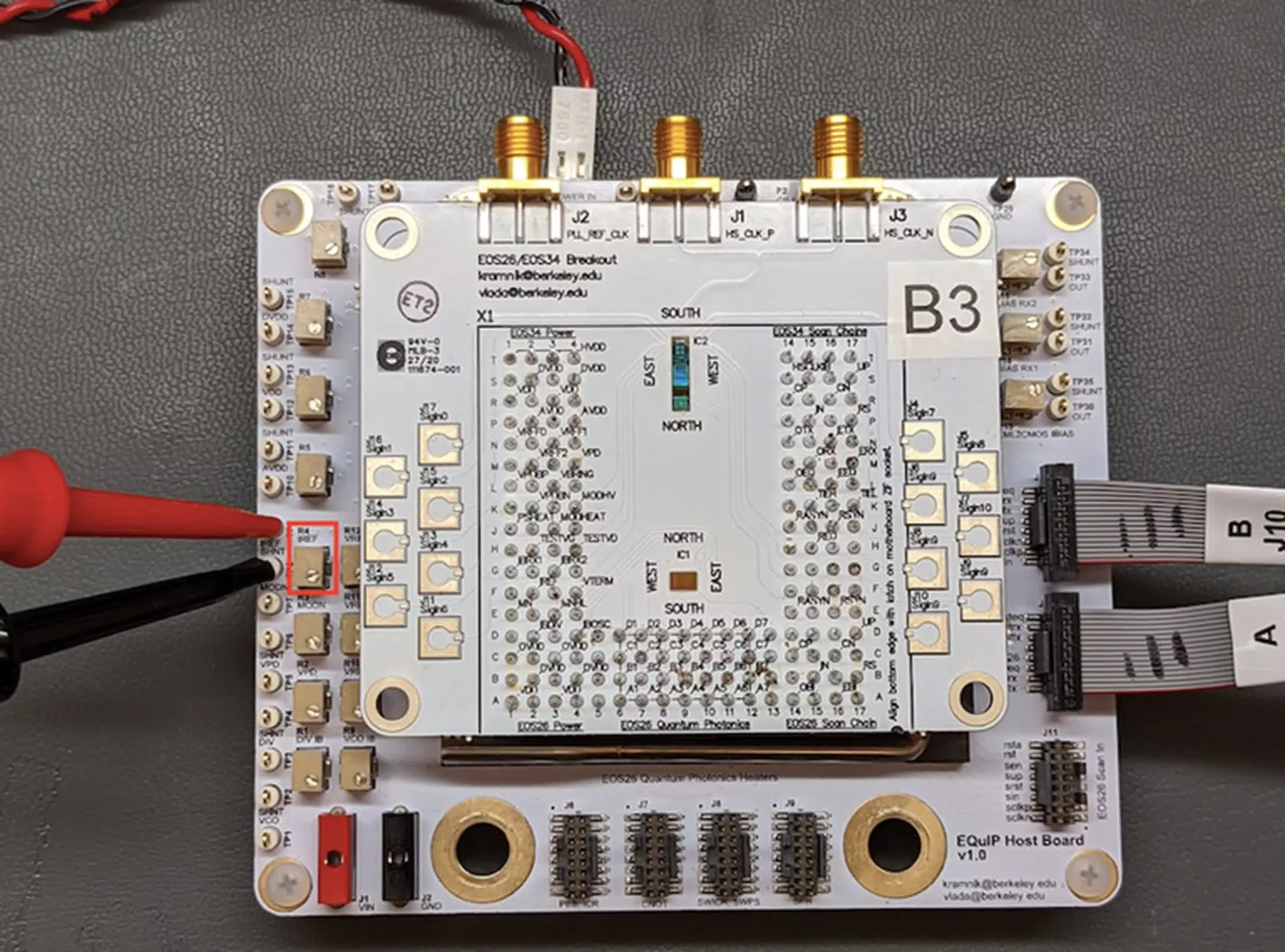

Stargate’s slow start reveals the real bottlenecks in scaling AI infrastructure

“Scaling AI infrastructure depends less on the technical readiness of servers or GPUs and more on the orchestration of distributed stakeholders — utilities, regulators, construction partners, hardware suppliers, and service providers — each with their own cadence and constraints,” Gogia said. ... Mazumder warned that “even phased AI infrastructure plans can stall without early coordination” and advised that “enterprises should expect multi-year rollout horizons and must front-load cross-functional alignment, treating AI infra as a capital project, not a conventional IT upgrade.” ... Given the lessons from Stargate’s delays, analysts recommend a pragmatic approach to AI infrastructure planning. Rather than waiting for mega-projects to mature, Mazumder emphasized that “enterprise AI adoption will be gradual, not instant and CIOs must pivot to modular, hybrid strategies with phased infrastructure buildouts.” ... The solution is planning for modular scaling by deploying workloads in hybrid and multi-cloud environments so progress can continue even when key sites or services lag. ... For CIOs, the key lesson is to integrate external readiness into planning assumptions, create coordination checkpoints with all providers, and avoid committing to go-live dates that assume perfect alignment.
