Quote for the day:

“There is no failure except in no longer trying.” -- Chris Bradford

AWS Outage Is Just the Latest Internet Glitch Banks Must Insulate Against

If clouds fail or succumb to cyberattacks, the damage can be enormous, bounded only by the maliciousness and creativity of the hacker and by the redundancy and resilience of the defenses that users have in place. ... As I describe in The Unhackable Internet, we are already far down the rabbit hole of cyber insecurity. Securing the current internet would take a massive, coordinated global effort, and that is unlikely to happen. Therefore, the most realistic business strategy is to assume the inevitable: a glitch, human error, a successful breach, or a cloud failure will occur. That means systems must be in place to distribute patches, resume operations, reconstruct networks, and recover lost data. Redundancy is a necessary component of getting back online, but how much redundancy is feasible or economically sustainable? And will those backstops actually work? ... Given these ever-increasing challenges and cyber incursions in the financial services business, I have argued for a fundamental change in regulation, one that will keep regulators on the cutting edge of digital and cybersecurity developments. To accomplish that, regulation should be a more collaborative experience that invests the financial industry in its own oversight and systemic security. This effort should include industry executives and their staffs, whose expertise in the oversight process would enrich the quality of regulation, particularly when it comes to strengthening the industry's cyber defenses.
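
The question of how much redundancy is enough can be made concrete with the standard parallel-availability formula: if each of n redundant copies is independently available with probability a, the group is down only when all n copies fail at once, so availability is 1 - (1 - a)^n. The sketch below is a minimal illustration under that independence assumption; the per-copy figures are hypothetical. It also shows why the assumption matters: a shared dependency, such as a single cloud provider or region, sits in series with every copy and caps availability no matter how many copies sit behind it.

```python
def parallel_availability(a: float, n: int) -> float:
    """Availability of n redundant copies, each up with probability a.

    Assumes failures are independent: the group is down only when
    every copy is down at the same time, so A = 1 - (1 - a) ** n.
    """
    if not 0.0 <= a <= 1.0 or n < 1:
        raise ValueError("a must be in [0, 1] and n must be >= 1")
    return 1.0 - (1.0 - a) ** n


def behind_shared_dependency(a_copy: float, n: int, a_shared: float) -> float:
    """Redundant copies that all rely on one shared dependency (e.g. a single
    provider or region). The dependency is in series with the redundant group,
    so its availability multiplies the group's and caps the result."""
    return a_shared * parallel_availability(a_copy, n)


if __name__ == "__main__":
    # Hypothetical per-copy availability of 99%, for illustration only.
    for n in (1, 2, 3):
        print(f"{n} copies: {parallel_availability(0.99, n):.6f}")
    # The same three copies behind a shared dependency that is 99.9% available.
    print(f"3 copies behind shared dependency: {behind_shared_dependency(0.99, 3, 0.999):.6f}")
```

With these made-up figures, going from one to three independent copies lifts availability from 99% to about 99.9999%, but placing all three behind a 99.9% shared dependency pulls the result just below 99.9%. Correlated failures, like a provider-wide outage, break the independence assumption, which is one reason backstops may not work as well on paper as in practice.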

The 10 biggest issues CISOs and cyber teams face today

“It’s not finger-pointing; we’re all learning,” Lee says. “Business is now expected to embrace and move quickly with AI. Boards and C-level executives are saying, ‘We have to lean into this more’ and then they turn to security teams to support AI. But security doesn’t fully understand the risk. No one has this down because it’s moving so fast.” As a result, many organizations skip security hardening in their rush to embrace AI. But CISOs are catching up. ... Moreover, Todd Moore, global vice president of data security at Thales, says CISOs are facing a torrent of AI-generated data, generally unstructured data such as chat logs, that needs to be secured. “In some aspects, AI is becoming the new insider threat in organizations,” he says. “The reason why I say it’s a new insider threat is because there’s a lot of information that’s being put in places you never expected. CISOs need to identify and find that data and be able to see if that data is critical and then be able to protect it.” ... “We’re now getting to the stage where no one is off-limits,” says Simon Backwell, head of information security at tech company Benifex and a member of ISACA’s Emerging Trends Working Group. “Attack groups are getting bolder, and they don’t care about the consequences. They want to cause mass destruction.”
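
Moore's point that CISOs must first find AI-generated data and then judge whether it is critical can be sketched as a simple discovery pass over unstructured text. The example below is a minimal illustration, not Thales tooling or any product's method: it scans hypothetical chat-log lines for two assumed sensitive patterns, email addresses and payment card numbers (checked with the Luhn checksum to cut false positives), and reports which lines need protection. Real data-discovery tools rely on far richer classifiers.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13-19 digits, optionally separated by single spaces or hyphens
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    # Double every second digit counting from the right
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def classify_line(line: str) -> set[str]:
    """Return the sensitive-data labels found in one line of text."""
    labels = set()
    if EMAIL_RE.search(line):
        labels.add("email")
    for match in CARD_RE.finditer(line):
        if luhn_valid(match.group()):
            labels.add("card_number")
    return labels


def scan_log(lines: list[str]) -> dict[int, set[str]]:
    """Map line index -> labels, for lines that contain sensitive data."""
    findings = {}
    for i, line in enumerate(lines):
        labels = classify_line(line)
        if labels:
            findings[i] = labels
    return findings


if __name__ == "__main__":
    chat_log = [  # hypothetical AI chat transcript
        "user: summarize the Q3 roadmap",
        "user: my card is 4111 1111 1111 1111, can you check the charge?",
        "assistant: please contact billing@example.com",
    ]
    for idx, labels in scan_log(chat_log).items():
        print(idx, sorted(labels))
```

Finding the data is only the first of the three steps Moore lists; deciding criticality and applying protection (masking, encryption, access controls) would build on findings like these.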

The AI Inflection Point Isn’t in the Cloud, It’s at the Edge

Beyond the screen, there is a need for agentic applications that specifically reduce latency and improve throughput. “You need an agentic architecture with several things going on,” Shelby said about using models to analyze the packaging of pharmaceuticals, for instance. “You might need to analyze the defects. Then you might need an LLM with a RAG behind it to do manual lookup. That’s very complex. It might need a lot of data behind it. It might need to be very large. You might need 100 billion parameters.” The analysis, he noted, may require integration with a backend system to perform another task, necessitating collaboration among several agents. AI appliances are then needed to manage multiagent workflows and larger models. ... The nature of LLMs, Shelby said, means a person has to tell you whether the LLM’s output is correct, which in turn affects how to judge the relevance of LLMs in edge environments. You cannot simply take an LLM's answer to a prompt on trust. Consider a camera in the Texas landscape pointed at an oil pump, Shelby said. “The LLM is like, ‘Oh, there are some campers cooking some food,’ when really there’s a fire” at the oil pump. So how do you make the process testable in the way engineers expect, Shelby asked. It requires end-to-end guardrails, and that is why off-the-shelf, cloud-based LLMs are not yet suited to industrial environments.
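
One way to read Shelby's call for end-to-end guardrails is that an LLM's description of a scene should never be the only signal acted on. The sketch below is a hypothetical illustration, not Shelby's or any vendor's design: a deterministic check (here an assumed thermal-sensor threshold) runs alongside the LLM caption, and every combination of the two signals maps to a fixed, testable outcome. `Observation`, `FIRE_TEMP_C`, and the keyword list are assumptions made for the example.

```python
from dataclasses import dataclass

FIRE_TEMP_C = 150.0  # hypothetical alarm threshold for the thermal sensor
FIRE_WORDS = ("fire", "flame", "smoke", "burning")  # hypothetical hazard keywords


@dataclass
class Observation:
    llm_caption: str   # free-text scene description produced by the LLM
    max_temp_c: float  # reading from an independent thermal sensor


def llm_says_fire(caption: str) -> bool:
    """Crude keyword check on the LLM's caption."""
    text = caption.lower()
    return any(word in text for word in FIRE_WORDS)


def decide(obs: Observation) -> str:
    """Combine the LLM caption with a deterministic sensor check."""
    sensor_fire = obs.max_temp_c >= FIRE_TEMP_C
    llm_fire = llm_says_fire(obs.llm_caption)
    if sensor_fire:
        # Safety-critical path: the sensor wins; flag an LLM miss for review
        return "alarm" if llm_fire else "alarm_and_review_llm"
    if llm_fire:
        # The LLM reports a hazard the sensor cannot confirm: ask a person
        return "escalate_to_human"
    return "normal"


if __name__ == "__main__":
    # The article's scenario: the LLM sees campers cooking, but the pump is on fire
    obs = Observation("Some campers cooking food near the pump", max_temp_c=420.0)
    print(decide(obs))
```

Treating the sensor as authoritative on the safety path is a design choice for this sketch. The broader point is testability: each branch can be exercised with fixed inputs, which is harder to guarantee when an LLM's free-text answer is the whole pipeline.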

Scaling Identity Security in Cloud Environments

One significant challenge organizations face is the disconnect between security and research and development (R&D) teams. This gap can lead to vulnerabilities being overlooked during development, creating security risks once new systems go live in cloud environments. Bridging it requires a collaborative approach involving both teams, because creating a secure cloud environment depends on understanding the specific needs and challenges each department faces. ... Achieving scalable identity security in cloud environments is an ongoing journey that requires constant vigilance. By integrating non-human identity (NHI) management into their cybersecurity strategies, organizations can reduce risk, increase efficiency, and stay compliant with regulatory requirements. As security threats continue to evolve, staying informed and adaptable remains key. For further insight, you might read some cybersecurity predictions for 2025 and consider how they may influence your approach to NHI management. Integrating effective NHI and secrets management into cloud security controls is not just recommended but necessary for safeguarding data. It is an invaluable part of a broader cybersecurity strategy aimed at minimizing risk and ensuring seamless, secure operations across all sectors.

Owning the Fallout: Inside Blameless Culture

For an organization to truly own the fallout after an incident, there must be a cultural shift from blame to inquiry. A ‘blameless culture’ doesn’t mean a free-for-all with no accountability. Instead, it’s an environment where the first question after an incident isn’t “Who screwed up?” but “What failed, and why?” As Gustavo Razzetti puts it, “blame is a sign of an unhealthy culture,” and the goal is to replace it with curiosity. In a blameless postmortem, you break down what happened, map the contributing systemic factors, and focus on where processes, tooling, or assumptions broke down. This mindset aligns with the concept of just culture, which balances accountability and systems thinking: after an incident, the focus is on how things went wrong, not whom to punish, unless egregious misconduct is involved. ... The most powerful learning happens when incident patterns redirect strategic priorities. For example, during postmortems, a team could discover that under-monitored dependencies cause high-severity incidents. With a resilience mindset, that insight can become an objective: “Build automated dependency-health dashboards by Q2.” When feedback and insights flow into OKRs, teams internalize resilience as part of delivery, not an afterthought. Resilient teams move beyond damage control to institutional learning.
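
Turning individual postmortems into a strategic objective is, at its core, an aggregation over incident records. The sketch below assumes a hypothetical record format in which each postmortem is tagged with a severity and a list of contributing factors, then counts which factors recur in high-severity incidents. That is the kind of pattern that could surface "under-monitored dependency" as a candidate OKR. The field names, severity scale, and factor labels are illustrative only.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Postmortem:
    incident_id: str
    severity: int  # 1 = most severe (hypothetical scale)
    factors: list[str] = field(default_factory=list)  # contributing systemic factors


def top_factors(postmortems: list[Postmortem], max_severity: int = 2, k: int = 3):
    """Most common contributing factors among incidents at or above a severity level.

    Each factor is counted at most once per incident, so one long
    postmortem cannot dominate the ranking.
    """
    counts = Counter()
    for pm in postmortems:
        if pm.severity <= max_severity:
            counts.update(set(pm.factors))
    return counts.most_common(k)


if __name__ == "__main__":
    history = [
        Postmortem("INC-1", 1, ["under-monitored dependency", "missing runbook"]),
        Postmortem("INC-2", 2, ["under-monitored dependency"]),
        Postmortem("INC-3", 4, ["flaky test"]),
        Postmortem("INC-4", 1, ["under-monitored dependency", "config drift"]),
    ]
    for factor, n in top_factors(history):
        print(f"{factor}: {n} high-severity incidents")
```

The counting is the easy part; the value comes from the blameless framing, which encourages people to record contributing factors honestly in the first place.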

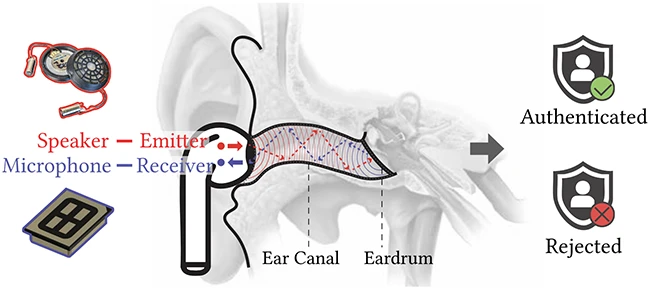

Can your earbuds recognize you? Researchers are working on it

Each person’s ear canal produces a distinct acoustic signature, so the researchers behind EarID designed a method that lets earbuds identify their wearer using sound. The earbuds emit acoustic signals into the user’s ear canal, and the reflections reveal patterns shaped by the ear’s structure. What makes this study stand out is that the authentication process happens entirely on the earbuds themselves. The device extracts a unique binary key based on the shape of the user’s ear canal and then verifies that key on the paired mobile device. Because it works with binary keys instead of raw biometric data, the system avoids sending sensitive information over Bluetooth, which helps prevent interception or replay attacks that could expose biometric data. ... A key part of the research is showing that earbuds can handle biometric processing without large hardware or cloud support. EarID runs on a small microcontroller comparable to those found in commercial earbuds. The researchers measured performance on an Arduino platform with an 80 MHz chip and found that it could perform key extraction in under a third of a second. For comparison, traditional machine learning classifiers took three to ninety times longer to train and process data. This difference could have a real impact if ear canal authentication ever reaches consumer devices, since users expect quick, seamless authentication.
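
The idea of deriving a binary key from an acoustic signature and comparing keys instead of raw biometrics can be illustrated with a generic technique: threshold each feature against a reference value to get one bit per feature, then accept a new reading if its Hamming distance to the enrolled key is small enough to absorb measurement noise. This is a minimal sketch of that general approach, not EarID's published algorithm; the feature vectors, the population-median thresholds, and the `MAX_BIT_ERRORS` tolerance are all assumptions. Practical biometric key schemes usually add error-correcting codes (fuzzy extractors) so that stored helper data does not reveal the key.

```python
from statistics import median

MAX_BIT_ERRORS = 3  # hypothetical tolerance for measurement noise


def binarize(features: list[float], thresholds: list[float]) -> int:
    """Bit i of the key is 1 if feature i exceeds threshold i."""
    key = 0
    for i, (f, t) in enumerate(zip(features, thresholds)):
        if f > t:
            key |= 1 << i
    return key


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two keys."""
    return bin(a ^ b).count("1")


def enroll(user_samples: list[list[float]], population: list[list[float]]):
    """Derive per-feature thresholds from a reference population, then the
    user's key from the mean of their enrollment samples."""
    n = len(population[0])
    thresholds = [median(p[i] for p in population) for i in range(n)]
    mean = [sum(s[i] for s in user_samples) / len(user_samples) for i in range(n)]
    return thresholds, binarize(mean, thresholds)


def verify(probe: list[float], thresholds: list[float], enrolled_key: int) -> bool:
    """Accept the probe if its key is within MAX_BIT_ERRORS bits of the enrolled key."""
    return hamming(binarize(probe, thresholds), enrolled_key) <= MAX_BIT_ERRORS


if __name__ == "__main__":
    # Hypothetical 8-feature acoustic signatures
    population = [[0.0] * 8, [1.0] * 8]
    user = [[0.9, 0.1, 0.8, 0.2, 0.7, 0.3, 0.9, 0.1],
            [0.8, 0.2, 0.9, 0.1, 0.8, 0.2, 0.7, 0.3]]
    thresholds, key = enroll(user, population)
    print(verify([0.85, 0.15, 0.75, 0.25, 0.8, 0.2, 0.9, 0.1], thresholds, key))
    print(verify([0.1, 0.9, 0.2, 0.8, 0.3, 0.7, 0.1, 0.9], thresholds, key))
```

Only the integer key and the comparison result would need to leave the earbud in a scheme like this, which mirrors the article's point about not sending raw biometric data over Bluetooth.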

What It 'Techs' to Run Real-Time Payments at Scale

Beyond hosting applications, the architecture is designed for scale, reuse and rapid provisioning. APIs and services support multiple verticals, including lending, insurance, investments and even quick commerce, through a shared infrastructure-as-a-service model. "Every vertical uses the same underlying infra, and we constantly evaluate whether something can be commoditized for the group and then scaled centrally. It's easier to build and scale one accounting stack than reinvent it every time," Nigam said. Early investments in real-time compute systems and edge analytics enable rapid anomaly detection and insights, cutting operational downtime by 30% and bringing response times under 50 milliseconds. A recent McKinsey report on financial infrastructure in emerging economies underscores the importance of edge computation and near-real-time monitoring for high-volume payments networks, a model increasingly adopted by global fintech leaders to ensure both speed and reliability. ... Handling spikes and unexpected surges is another critical consideration. India's payments ecosystem experiences predictable peaks, such as festival seasons or IPL weekends, as well as unpredictable surges triggered by government announcements or regulatory deadlines. When a payments platform is built for population scale, no single merchant or use case can, on its own, create a surge of that magnitude.
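
Real-time anomaly detection can take many forms; a common starting point is a rolling z-score over a metric such as transactions per second. The sketch below is a generic illustration under assumed parameters (a 60-sample window, a z-score threshold of 4, and a 10-sample warm-up), not the platform's actual system. It also hints at the distinction the article draws: a naive rolling detector will flag a predictable festival-season peak just as readily as a genuine fault, so a production system would likely need seasonal baselines on top of something like this.

```python
from collections import deque
from math import sqrt


class RollingZScore:
    """Flag a metric value that lies far from the mean of a recent window.

    Recomputes mean and standard deviation over the window on each update
    (O(window) work), which keeps the sketch simple.
    """

    def __init__(self, window: int = 60, threshold: float = 4.0, min_samples: int = 10):
        self.values = deque(maxlen=window)  # oldest values fall off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def update(self, value: float) -> bool:
        """Return True if value is anomalous versus the current window, then record it."""
        anomalous = False
        if len(self.values) >= self.min_samples:
            n = len(self.values)
            mean = sum(self.values) / n
            std = sqrt(sum((x - mean) ** 2 for x in self.values) / n)
            # A perfectly flat window (std == 0) is never flagged in this sketch.
            if std > 0 and abs(value - mean) / std > self.threshold:
                anomalous = True
        # Anomalies also enter the window here; a production detector might exclude them.
        self.values.append(value)
        return anomalous


if __name__ == "__main__":
    detector = RollingZScore()
    # Hypothetical transactions-per-second readings hovering around 1,000 ...
    readings = [1000.0 + (i % 5) * 10 for i in range(60)]
    # ... followed by a sudden surge.
    readings.append(5000.0)
    for t, tps in enumerate(readings):
        if detector.update(tps):
            print(f"t={t}: anomalous reading {tps}")
```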

Who’s right — the AI zoomers or doomers?

Earlier this week, the Emory Wheel editorial board published an opinion column arguing that, without regulation, AI will soon outpace humanity’s ability to control it. The column said AI’s uncontrolled evolution threatens human autonomy, free expression, and democracy, stressing that the technology is developing faster than lawmakers can respond. ... Both zoomers and doomers agree that humanity’s fate will be decided when the industry releases AGI or superintelligent AI, but they disagree sharply on when that will happen. OpenAI’s Sam Altman, Elon Musk, Eric Schmidt, Demis Hassabis, Dario Amodei, Masayoshi Son, Jensen Huang, Ray Kurzweil, Louis Rosenberg, Geoffrey Hinton, Mark Zuckerberg, Ajeya Cotra, and Jürgen Schmidhuber all predict AGI sometime between later this year and the end of this decade. ... Some say we need strict global rules, perhaps like those for nuclear weapons. Others say strong laws would slow progress, stifle new ideas, and hand the benefits of AI to China. ... AI is already causing harm. It contributes to privacy invasion, disinformation and deepfakes, surveillance overreach, job displacement, cybersecurity threats, harms to children, psychological harm, environmental damage, erosion of human creativity and autonomy, economic and political instability, manipulation and loss of trust in media, unjust criminal justice outcomes, and other problems.

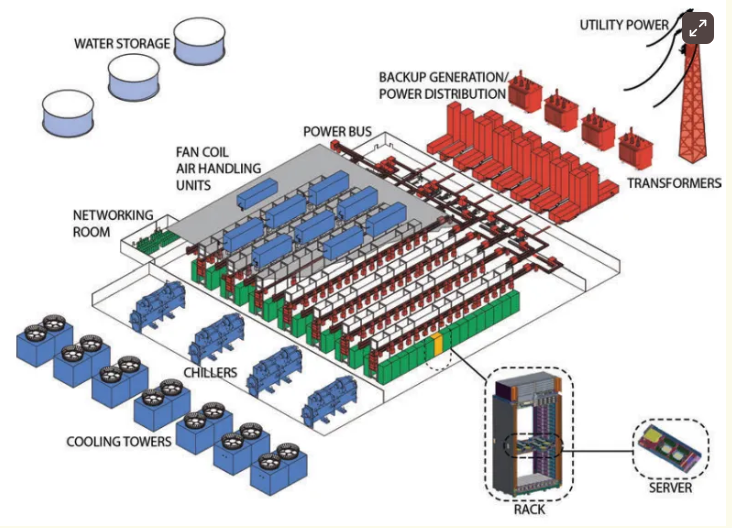

Powering Data in the Age of AI: Part 3 – Inside the AI Data Center Rebuild

You can’t design around AI the way data centers used to handle general compute. The loads are heavier, the heat is higher, and the pace is relentless. You start with racks that pull more power than entire server rooms did a decade ago, and everything around them has to adapt. New builds now work from the inside out: engineers start with workload profiles, then shape airflow, cooling paths, cable runs, and even structural supports around what those clusters will actually demand. In some cases, different types of jobs get their own electrical zones, which means separate cooling loops, shorter-throw cabling, and dedicated switchgear, with multiple systems all working under the same roof. Power delivery is changing, too. In a conversation with BigDATAwire, David Beach, Market Segment Manager at Anderson Power, explained, “Equipment is taking advantage of much higher voltages and simultaneously increasing current to achieve the rack densities that are necessary. This is also necessitating the development of components and infrastructure to properly carry that power.” ... We know that hardware alone doesn’t move the needle anymore. The real advantage comes from getting it online quickly, without getting bogged down by power, permits, and other obstacles. That’s where the cracks are beginning to open.
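
Beach's point about higher voltages follows from basic electrical arithmetic: for a given power P, the current is I = P / V, and the resistive loss in a conductor of resistance R is I²R, so halving the current cuts conduction loss by a factor of four. The sketch below works through a hypothetical 100 kW rack at a few illustrative DC distribution voltages with an assumed path resistance; none of these figures come from Anderson Power or the article.

```python
RACK_POWER_W = 100_000        # hypothetical rack load
PATH_RESISTANCE_OHM = 0.0001  # hypothetical end-to-end conductor resistance (0.1 milliohm)


def current_amps(power_w: float, voltage_v: float) -> float:
    """DC current needed to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def conduction_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in the distribution path."""
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    for volts in (12, 48, 400):
        amps = current_amps(RACK_POWER_W, volts)
        loss = conduction_loss_w(amps, PATH_RESISTANCE_OHM)
        print(f"{volts:>4} V: {amps:8.1f} A, {loss:8.1f} W lost ({loss / RACK_POWER_W:.2%})")
```

Raising the voltage fourfold, from 12 V to 48 V, cuts current by four and conduction loss by sixteen. Even so, a dense rack at 48 V still draws currents in the thousands of amps in this example, which is why connectors, busbars, and other components have to be purpose-built to carry that power.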

Strategic Domain-Driven Design: The Forgotten Foundation of Great Software

The strategic aspect of domain-driven design (DDD) is often overlooked because many people do not recognize its importance, and that is a significant mistake when applying DDD. Strategic design provides context for the model, establishes clear boundaries, and fosters a shared understanding between business and technology. Without this foundation, developers may focus on modeling data rather than behavior, create isolated microservices that do not represent the domain accurately, or implement design patterns without a clear purpose. ... The first step in strategic modeling is to define your domain: the scope of knowledge and activities that your software intends to address. Next, we apply the age-old strategy of "divide and conquer," a principle used by the Romans that remains relevant in modern software development, and break the larger domain down into smaller, focused areas known as subdomains. ... Once the language is aligned, the next step is to define bounded contexts. These are explicit boundaries that indicate where a particular model and language apply. Each bounded context encapsulates a subset of the ubiquitous language and establishes clear borders around meaning and responsibilities. Although the term is often used in discussions about microservices, it predates that movement.
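
A bounded context is easiest to see when the same word means different things in different parts of a business. The sketch below is a hypothetical example, not taken from the article: "Customer" in a Sales context carries a credit limit and a lead source, while "Customer" in a Support context carries an entitlement tier and open tickets. Each context owns its own model, and a single explicit translation function at the boundary, a simple stand-in for the translation layers DDD describes (such as an anti-corruption layer), is the only place the two meet. All class names, fields, and the priority rule are assumptions.

```python
from dataclasses import dataclass, field


# --- Sales bounded context: "Customer" means a buyer with commercial terms ---
@dataclass(frozen=True)
class SalesCustomer:
    customer_id: str
    name: str
    credit_limit: int  # in minor currency units (hypothetical)
    lead_source: str


# --- Support bounded context: "Customer" means someone entitled to help ---
@dataclass
class SupportCustomer:
    customer_id: str
    display_name: str
    tier: str  # "standard" or "priority"
    open_tickets: list[str] = field(default_factory=list)


PRIORITY_CREDIT_LIMIT = 1_000_000  # hypothetical business rule


def to_support(customer: SalesCustomer) -> SupportCustomer:
    """Translate at the boundary: Support never sees credit limits or lead
    sources, only the tier derived from them."""
    tier = "priority" if customer.credit_limit >= PRIORITY_CREDIT_LIMIT else "standard"
    return SupportCustomer(customer.customer_id, customer.name, tier)


if __name__ == "__main__":
    buyer = SalesCustomer("C-42", "Acme Ltd", credit_limit=2_500_000, lead_source="trade show")
    print(to_support(buyer))
```

Keeping the two models separate means Sales can change how it tracks leads without touching Support code, as long as the translation at the boundary still holds.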
